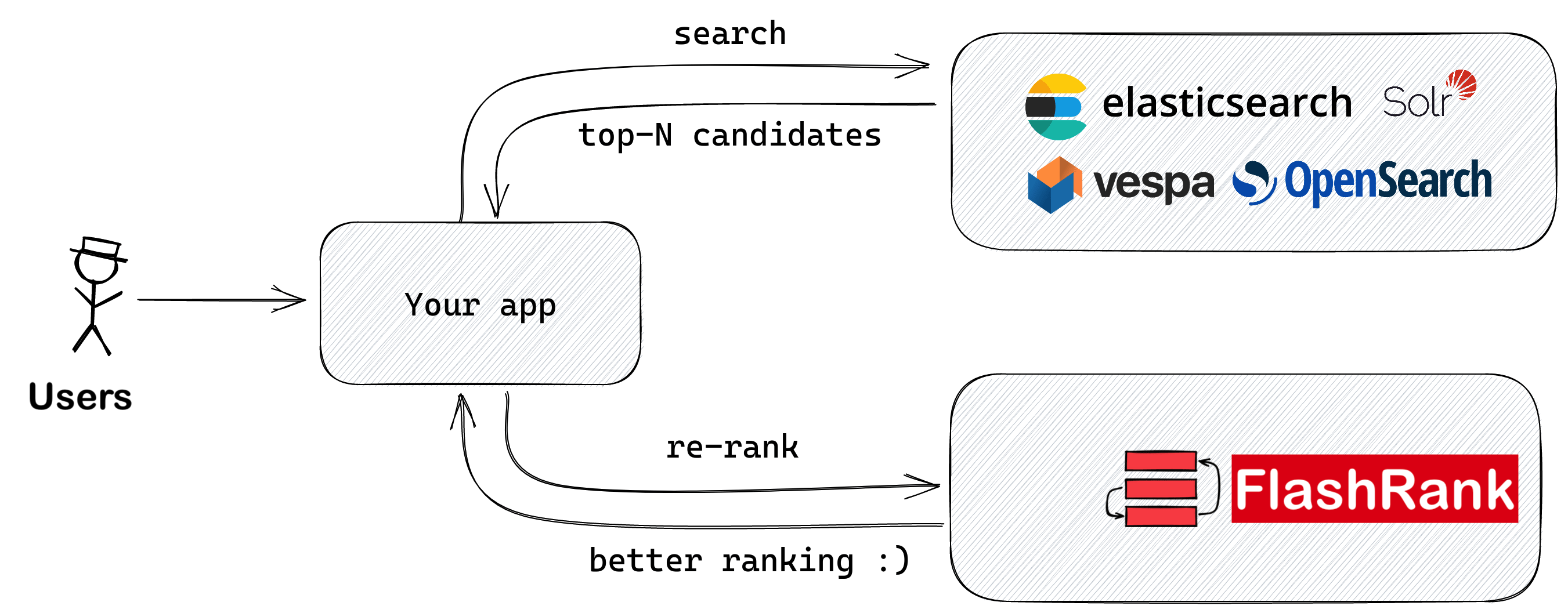

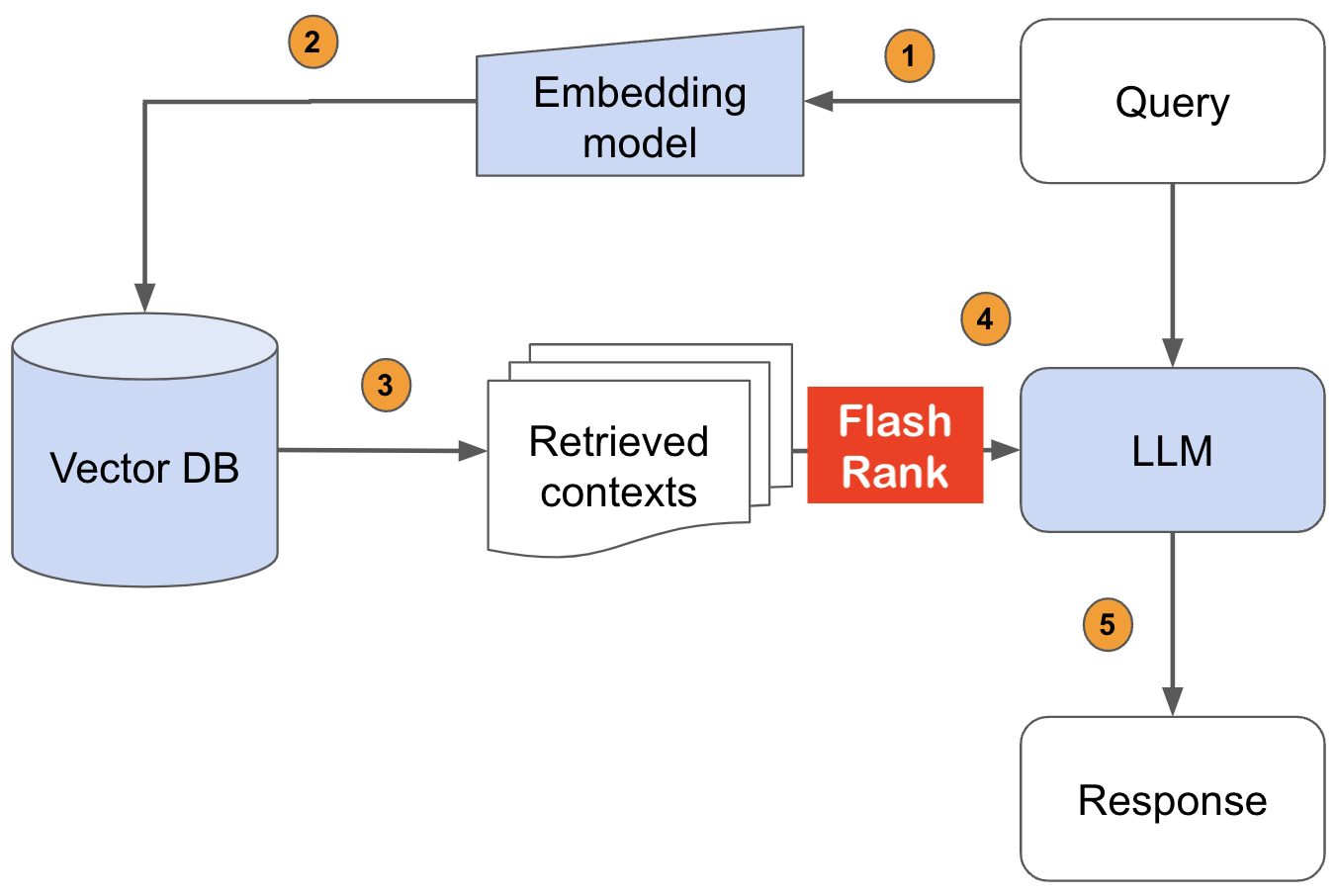

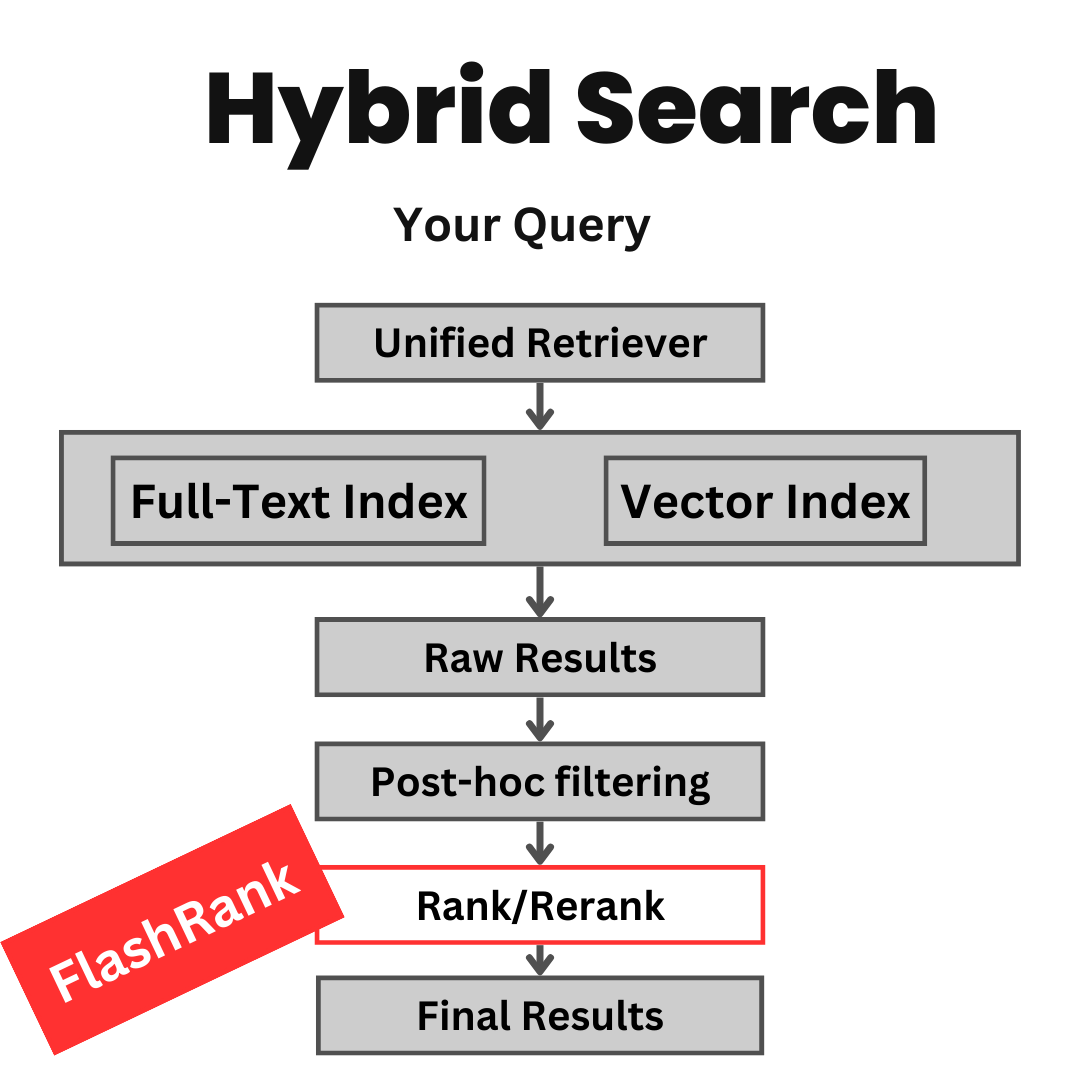

Re-rank your search results with SoTA pairwise or listwise rerankers before feeding them into your LLMs.

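Ultra-lite & super-fast Python library for adding re-ranking to your existing search and retrieval pipelines. It is based on SoTA LLMs and cross-encoders, with thanks to all the model owners.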

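Supports:

- Pairwise / pointwise rerankers (max tokens = 512)
- Listwise LLM-based rerankers (max tokens = 8192)

It is designed to be ⚡ ultra-lite, ⏱️ super-fast and $-conscious.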
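Pairwise/pointwise reranking scores each (query, passage) pair independently, then sorts the passages by that score. The sketch below shows only that control flow. `toy_score` is a hypothetical term-overlap scorer standing in for a real cross-encoder, and none of these function names are part of FlashRank's API.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    # Lowercased alphanumeric terms; a crude stand-in for a real tokenizer.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def toy_score(query: str, passage: str) -> float:
    # Hypothetical scorer: fraction of query terms that also appear in the passage.
    q = tokenize(query)
    return len(q & tokenize(passage)) / len(q) if q else 0.0


def pointwise_rerank(query: str, passages: list[dict],
                     score: Callable[[str, str], float] = toy_score) -> list[dict]:
    # Score every (query, passage) pair on its own, then sort highest score first.
    # New dicts are built so the caller's passages are not mutated.
    scored = [{**p, "score": score(query, p["text"])} for p in passages]
    return sorted(scored, key=lambda p: p["score"], reverse=True)


passages = [
    {"id": 1, "text": "Bananas are rich in potassium."},
    {"id": 2, "text": "Speculative decoding can speed up LLM inference."},
]
print([p["id"] for p in pointwise_rerank("speed up LLM inference", passages)])  # → [2, 1]
```

A real cross-encoder replaces `toy_score` by jointly encoding the query and passage. The per-pair independence is the reason these models have a small token budget.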

Based on SoTA cross-encoders and other models:

| Model name | Description | Size | Notes |
|---|---|---|---|
| `ms-marco-TinyBERT-L-2-v2` | Default model | ~4MB | Model card |
| `ms-marco-MiniLM-L-12-v2` | Best cross-encoder reranker | ~34MB | Model card |
| `rank-T5-flan` | Best non-cross-encoder reranker | ~110MB | Model card |
| `ms-marco-MultiBERT-L-12` | Multilingual, supports 100+ languages | ~150MB | Supported languages |
| `ce-esci-MiniLM-L12-v2` | Fine-tuned on the Amazon ESCI dataset | - | Model card |
| `rank_zephyr_7b_v1_full` | 4-bit quantized GGUF | ~4GB | Model card |
| `miniReranker_arabic_v1` | Only dedicated Arabic reranker | - | Model card |
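Listwise rerankers such as `rank_zephyr_7b_v1_full` see all candidate passages in a single prompt and return an ordering, instead of scoring each pair separately. The following is a minimal sketch of that idea. It assumes RankGPT-style prompts that number passages `[1]..[n]` and expect an answer like `[2] > [1] > [3]`. The exact prompt FlashRank sends is not shown in this README, so `build_listwise_prompt` and `parse_permutation` are hypothetical helpers.

```python
import re


def build_listwise_prompt(query: str, texts: list[str]) -> str:
    # Number each passage so the model can refer to it by identifier.
    lines = [f"[{i}] {t}" for i, t in enumerate(texts, start=1)]
    return (f"Rank the following passages by relevance to the query: {query}\n"
            + "\n".join(lines)
            + "\nAnswer with identifiers only, e.g. [2] > [1].")


def parse_permutation(answer: str, n: int) -> list[int]:
    # Read identifiers in order, skipping duplicates and out-of-range ids,
    # then append any passages the model omitted in their original order.
    order: list[int] = []
    for match in re.findall(r"\[(\d+)\]", answer):
        idx = int(match) - 1
        if 0 <= idx < n and idx not in order:
            order.append(idx)
    order.extend(i for i in range(n) if i not in order)
    return order


texts = ["vLLM serving", "Banana bread recipe", "Medusa speculative decoding"]
prompt = build_listwise_prompt("How to speedup LLMs?", texts)
print(parse_permutation("[3] > [1] > [3] > [7]", len(texts)))  # → [2, 0, 1]
```

The defensive parsing matters because LLM output is free text. A reranker should always return a full permutation, even when the model repeats or invents identifiers.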

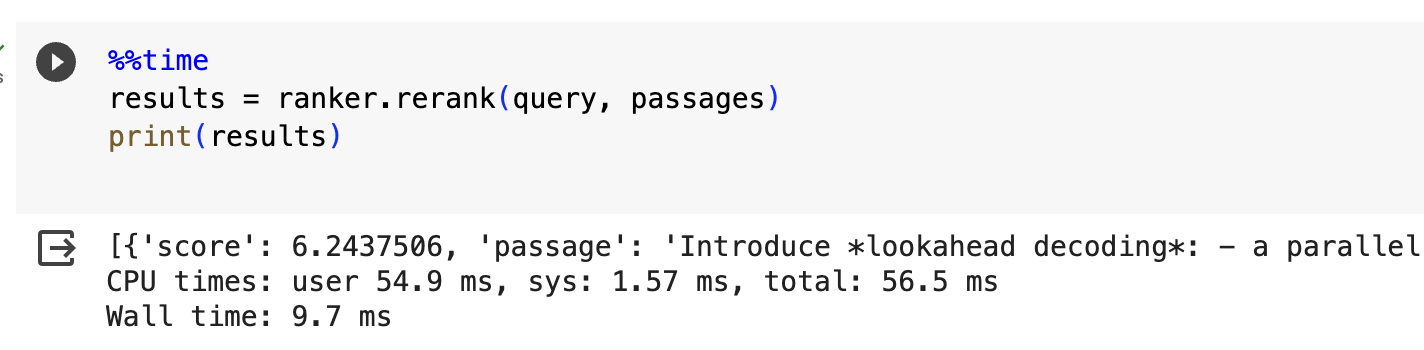

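pip install flashrank

For listwise rerankers:

pip install flashrank[listwise]

The max_length value should be large enough to fit your longest passage. For example, suppose your longest passage (100 tokens) plus the query (16 tokens) comes to an estimated 116 tokens. Then setting max_length = 128 is good enough, leaving room for reserved tokens such as [CLS] and [SEP]. If performance per token is critical for you, use a library such as OpenAI's tiktoken to estimate token density. For smaller passages, a longer max_length (e.g. 512) will hurt response time.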
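The sizing rule above can be sketched as a small helper. It assumes you already have token-count estimates for the query and passages, for example from tiktoken as suggested. It also assumes three special tokens for a BERT-style pair encoding (`[CLS] query [SEP] passage [SEP]`). Rounding up to a power of two is a convenience choice, not a FlashRank requirement, and `suggest_max_length` is a hypothetical name.

```python
def suggest_max_length(query_tokens: int, passage_token_counts: list[int],
                       special_tokens: int = 3, model_limit: int = 512) -> int:
    """Hypothetical helper: smallest power of two fitting query + longest passage.

    special_tokens=3 assumes a BERT-style pair encoding: [CLS] query [SEP] passage [SEP].
    """
    if not passage_token_counts:
        raise ValueError("passage_token_counts must not be empty")
    needed = query_tokens + max(passage_token_counts) + special_tokens
    length = 1
    while length < needed:
        length *= 2  # round up to the next power of two
    # Never exceed what the model accepts (512 tokens for the pairwise rerankers).
    return min(length, model_limit)


print(suggest_max_length(16, [40, 100, 75]))  # → 128
```

For a listwise model, pass a larger `model_limit` (the feature list gives 8192 tokens). A passage longer than the returned value would not fit in full.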

from flashrank import Ranker, RerankRequest

# Nano (~4MB), blazing fast model & competitive performance (ranking precision).

ranker = Ranker(max_length=128)

or

# Small (~34MB), slightly slower & best performance (ranking precision).

ranker = Ranker(model_name="ms-marco-MiniLM-L-12-v2", cache_dir="/opt")

or

# Medium (~110MB), slower model with the best zero-shot performance (ranking precision) on out-of-domain data.

ranker = Ranker(model_name="rank-T5-flan", cache_dir="/opt")

or

# Medium (~150MB), slower model with competitive performance (ranking precision) for 100+ languages (don't use it for English).

ranker = Ranker(model_name="ms-marco-MultiBERT-L-12", cache_dir="/opt")

or

ranker = Ranker(model_name="rank_zephyr_7b_v1_full", max_length=1024)  # adjust max_length based on your passage length

# Metadata is optional; id can be your DB ids from your retrieval stage or simple numeric indices.

query = "How to speedup LLMs?"
passages = [
    {
        "id": 1,
        "text": "Introduce *lookahead decoding*: - a parallel decoding algo to accelerate LLM inference - w/o the need for a draft model or a data store - linearly decreases # decoding steps relative to log(FLOPs) used per decoding step.",
        "meta": {"additional": "info1"}
    },
    {
        "id": 2,
        "text": "LLM inference efficiency will be one of the most crucial topics for both industry and academia, simply because the more efficient you are, the more $$$ you will save. vllm project is a must-read for this direction, and now they have just released the paper",
        "meta": {"additional": "info2"}
    },
    {
        "id": 3,
        "text": "There are many ways to increase LLM inference throughput (tokens/second) and decrease memory footprint, sometimes at the same time. Here are a few methods I’ve found effective when working with Llama 2. These methods are all well-integrated with Hugging Face. This list is far from exhaustive; some of these techniques can be used in combination with each other and there are plenty of others to try. - Bettertransformer (Optimum Library): Simply call `model.to_bettertransformer()` on your Hugging Face model for a modest improvement in tokens per second. - Fp4 Mixed-Precision (Bitsandbytes): Requires minimal configuration and dramatically reduces the model's memory footprint. - AutoGPTQ: Time-consuming but leads to a much smaller model and faster inference. The quantization is a one-time cost that pays off in the long run.",
        "meta": {"additional": "info3"}
    },
    {
        "id": 4,
        "text": "Ever want to make your LLM inference go brrrrr but got stuck at implementing speculative decoding and finding the suitable draft model? No more pain! Thrilled to unveil Medusa, a simple framework that removes the annoying draft model while getting 2x speedup.",
        "meta": {"additional": "info4"}
    },
    {
        "id": 5,
        "text": "vLLM is a fast and easy-to-use library for LLM inference and serving. vLLM is fast with: State-of-the-art serving throughput Efficient management of attention key and value memory with PagedAttention Continuous batching of incoming requests Optimized CUDA kernels",
        "meta": {"additional": "info5"}
    }
]

rerankrequest = RerankRequest(query=query, passages=passages)
results = ranker.rerank(rerankrequest)
print(results)  # Reranked output from default reranker

[
    {
        "id": 4,
        "text": "Ever want to make your LLM inference go brrrrr but got stuck at implementing speculative decoding and finding the suitable draft model? No more pain! Thrilled to unveil Medusa, a simple framework that removes the annoying draft model while getting 2x speedup.",
        "meta": {"additional": "info4"},
        "score": 0.016847236
    },
    {
        "id": 5,
        "text": "vLLM is a fast and easy-to-use library for LLM inference and serving. vLLM is fast with: State-of-the-art serving throughput Efficient management of attention key and value memory with PagedAttention Continuous batching of incoming requests Optimized CUDA kernels",
        "meta": {"additional": "info5"},
        "score": 0.011563735
    },
    {
        "id": 3,
        "text": "There are many ways to increase LLM inference throughput (tokens/second) and decrease memory footprint, sometimes at the same time. Here are a few methods I’ve found effective when working with Llama 2. These methods are all well-integrated with Hugging Face. This list is far from exhaustive; some of these techniques can be used in combination with each other and there are plenty of others to try. - Bettertransformer (Optimum Library): Simply call `model.to_bettertransformer()` on your Hugging Face model for a modest improvement in tokens per second. - Fp4 Mixed-Precision (Bitsandbytes): Requires minimal configuration and dramatically reduces the model's memory footprint. - AutoGPTQ: Time-consuming but leads to a much smaller model and faster inference. The quantization is a one-time cost that pays off in the long run.",
        "meta": {"additional": "info3"},
        "score": 0.00081340264
    },
    {
        "id": 1,
        "text": "Introduce *lookahead decoding*: - a parallel decoding algo to accelerate LLM inference - w/o the need for a draft model or a data store - linearly decreases # decoding steps relative to log(FLOPs) used per decoding step.",
        "meta": {"additional": "info1"},
        "score": 0.00063596206
    },
    {
        "id": 2,
        "text": "LLM inference efficiency will be one of the most crucial topics for both industry and academia, simply because the more efficient you are, the more $$$ you will save. vllm project is a must-read for this direction, and now they have just released the paper",
        "meta": {"additional": "info2"},
        "score": 0.00024851
    }
]
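The point of reranking is to pass only the most relevant passages on to the LLM. The following minimal sketch assumes the result shape printed above: a list of dicts with `"text"` and `"score"` keys. `build_llm_context`, `top_k` and `min_score` are hypothetical names. Any `min_score` threshold has to be tuned per model, because raw scores are not comparable across rerankers.

```python
def build_llm_context(results: list[dict], top_k: int = 3, min_score: float = 0.0) -> str:
    # Sort defensively by score (highest first), drop low scorers, keep at most top_k.
    ranked = sorted(results, key=lambda r: r["score"], reverse=True)
    kept = [r for r in ranked if r["score"] >= min_score][:top_k]
    # Number the passages so the LLM's answer can cite them.
    return "\n\n".join(f"[{i}] {r['text']}" for i, r in enumerate(kept, start=1))


# Shortened stand-ins for the reranked output above.
results = [
    {"id": 4, "text": "Medusa removes the draft model for a 2x speedup.", "score": 0.016847236},
    {"id": 5, "text": "vLLM uses PagedAttention and continuous batching.", "score": 0.011563735},
    {"id": 3, "text": "Llama 2 tips: BetterTransformer, FP4, AutoGPTQ.", "score": 0.00081340264},
]
context = build_llm_context(results, top_k=2)
prompt = f"Answer using only these passages:\n\n{context}\n\nQuestion: How to speedup LLMs?"
print(prompt)
```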

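In AWS or other serverless environments where the entire VM is read-only, you may have to create your own custom directory. You can do this in your Dockerfile and use it for loading the models (and eventually as a cache between warm calls). Pass it at init time with the cache_dir parameter: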

ranker = Ranker(model_name="ms-marco-MiniLM-L-12-v2", cache_dir="/opt")
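Suppose the model is not baked into the image at build time. The download then has to land somewhere writable at cold start. The following minimal sketch assumes the system temp directory is writable, as `/tmp` is on AWS Lambda. `pick_cache_dir` is a hypothetical helper, not part of FlashRank.

```python
import os
import tempfile
from pathlib import Path


def pick_cache_dir(preferred: str = "/opt", subdir: str = "flashrank_cache") -> str:
    # Use the preferred directory when it exists and this process can write to it.
    if os.path.isdir(preferred) and os.access(preferred, os.W_OK):
        return preferred
    # Otherwise fall back to a folder under the system temp dir (e.g. /tmp on AWS Lambda).
    fallback = Path(tempfile.gettempdir()) / subdir
    fallback.mkdir(parents=True, exist_ok=True)
    return str(fallback)


print(pick_cache_dir())
```

Usage: `ranker = Ranker(model_name="ms-marco-MiniLM-L-12-v2", cache_dir=pick_cache_dir())`. Create the ranker at module level so warm invocations of the same execution environment reuse both the loaded model and the cached files.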

To cite this repository in your work, please click the "Cite this repository" link on the right side (below the repo description and tags).

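COS-Mix: Cosine Similarity and Distance Fusion for Improved Information Retrieval

Bryndza at ClimateActivism 2024: Stance, Target and Hate Event Detection via Retrieval-Augmented GPT-4 and LLaMA

Stance and Hate Event Detection in Tweets Related to Climate Activism - Shared Task at CASE 2024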

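A clone library called SwiftRank is pointing to our model repository; we are using an interim solution to prevent this theft. Thank you for your patience and understanding.

This issue has been resolved and the models are now on HF. Please upgrade to continue: pip install -U flashrank. Thank you for your patience and understanding.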