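SummerTime is a library that helps users choose the appropriate summarization tool for their specific task or needs. It includes models, evaluation metrics, and datasets.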

The library architecture is as follows:

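Note: SummerTime is under active development, and any helpful comments are highly encouraged. Please open an issue or reach out to any of the team members.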

# install extra dependencies first

pip install pyrouge@git+https://github.com/bheinzerling/pyrouge.git

pip install en_core_web_sm@https://github.com/explosion/spacy-models/releases/download/en_core_web_sm-3.0.0/en_core_web_sm-3.0.0-py3-none-any.whl

# install summertime from PyPI

pip install summertime

Alternatively, to get the latest features, you can install from source:

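git clone git@github.com:Yale-LILY/SummerTime

cd SummerTime

pip install -e .

ROUGE (when using evaluation; adjust the path to match your Python installation):

export ROUGE_HOME=/usr/local/lib/python3.7/dist-packages/summ_eval/ROUGE-1.5.5/

Import `model`, initialize the default model, and summarize a sample document: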

from summertime import model

sample_model = model.summarizer()

documents = [
    """ PG&E stated it scheduled the blackouts in response to forecasts for high winds amid dry conditions.
    The aim is to reduce the risk of wildfires. Nearly 800 thousand customers were scheduled to be affected
    by the shutoffs which were expected to last through at least midday tomorrow."""
]

sample_model.summarize(documents)
# ["California's largest electricity provider has turned off power to hundreds of thousands of customers."]

Also, please run our Colab notebook for a more hands-on demo and more examples.

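SummerTime supports different models (e.g., TextRank, BART, Longformer) as well as model wrappers for more complex summarization tasks (e.g., JointModel for multi-document summarization, BM25 retrieval for query-based summarization). Several multilingual models (mT5 and mBART) are also supported.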
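TextRank (and LexRank, listed in the table below) are graph-based extractive methods: each sentence is a node, edges are weighted by sentence similarity, and a PageRank-style score decides which sentences to keep. The sketch below illustrates that idea without dependencies, using bag-of-words cosine similarity and power iteration. It is not SummerTime's `TextRankModel` or `LexRankModel` (the README's own example builds TextRank on a spaCy pipeline), and `graph_rank_summarize` and its parameters are hypothetical names chosen for this illustration.

```python
import math
import re
from collections import Counter


def _bag_of_words(sentence: str) -> Counter:
    # Lowercased word counts for one sentence.
    return Counter(re.findall(r"[a-z0-9']+", sentence.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors (0.0 if either is empty).
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def graph_rank_summarize(document: str, num_sentences: int = 2,
                         damping: float = 0.85, iterations: int = 50) -> str:
    """Hypothetical TextRank/LexRank-style extractive summarizer (illustrative sketch)."""
    # Naive sentence splitting on ".", "!" or "?" followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document.strip()) if s.strip()]
    if len(sentences) <= num_sentences:
        return " ".join(sentences)
    bags = [_bag_of_words(s) for s in sentences]
    n = len(sentences)
    # Similarity graph: edge weight = cosine similarity, no self-loops.
    weights = [[_cosine(bags[i], bags[j]) if i != j else 0.0 for j in range(n)] for i in range(n)]
    out_weight = [sum(row) for row in weights]
    scores = [1.0 / n] * n
    # Weighted PageRank by power iteration; a sentence with no similar
    # neighbours only receives the (1 - damping) / n share.
    for _ in range(iterations):
        scores = [
            (1 - damping) / n
            + damping * sum(weights[j][i] / out_weight[j] * scores[j]
                            for j in range(n) if out_weight[j] > 0)
            for i in range(n)
        ]
    # Take the highest-scoring sentences, then restore document order.
    best = sorted(sorted(range(n), key=lambda i: scores[i], reverse=True)[:num_sentences])
    return " ".join(sentences[i] for i in best)


doc = ("PG&E stated it scheduled the blackouts in response to forecasts for high winds amid dry conditions. "
       "The aim is to reduce the risk of wildfires. "
       "Nearly 800 thousand customers were scheduled to be affected by the shutoffs.")
print(graph_rank_summarize(doc, num_sentences=1))
```

Selected sentences are returned in their original order rather than by score, which usually reads more naturally for an extractive summary.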

| Model | Single-doc | Multi-doc | Dialogue-based | Query-based | Multilingual |
|---|---|---|---|---|---|
| BartModel | ✔️ | | | | |
| BM25SummModel | | | | ✔️ | |
| HMNetModel | | | ✔️ | | |
| LexRankModel | ✔️ | | | | |
| LongformerModel | ✔️ | | | | |
| MBartModel | ✔️ | | | | 50 languages |
| MT5Model | ✔️ | | | | 101 languages |
| TranslationPipelineModel | ✔️ | | | | ~70 languages |
| MultiDocJointModel | | ✔️ | | | |
| MultiDocSeparateModel | | ✔️ | | | |
| PegasusModel | ✔️ | | | | |
| TextRankModel | ✔️ | | | | |
| TFIDFSummModel | | | | ✔️ | |
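For query-based summarization, the table lists retrieval-based wrappers such as `BM25SummModel` and `TFIDFSummModel`. The general idea behind such a wrapper is presumably to score parts of a long source against the query and pass only the most relevant ones to a summarizer. The sketch below shows just that retrieval step using the standard Okapi BM25 formula (with the common defaults k1 = 1.5 and b = 0.75, assumed here, and the non-negative IDF variant used by Lucene). `bm25_top_k` is a hypothetical helper, not SummerTime's API.

```python
import math
import re
from collections import Counter
from typing import List


def _tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def bm25_top_k(query: str, passages: List[str], k: int = 2,
               k1: float = 1.5, b: float = 0.75) -> List[str]:
    """Hypothetical helper: return the k passages with the highest Okapi BM25 score."""
    docs = [_tokenize(p) for p in passages]
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n if n else 0.0
    # Document frequency of each term across the passages.
    df = Counter(term for d in docs for term in set(d))
    query_terms = set(_tokenize(query))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # Non-negative IDF variant (as used by Lucene).
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            denom = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    # Highest score first; ties keep the original passage order (sorting is stable).
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    return [passages[i] for i in ranked[:k]]


passages = ["The meeting discussed the budget for next year.",
            "Lunch options were debated briefly.",
            "The budget cut affects the marketing team budget."]
print(bm25_top_k("budget", passages, k=2))
```

Retrieval only decides which parts of a long source reach the summarizer; in a complete wrapper, the selected passages would then be joined and summarized.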

To see all supported models, run:

from summertime.model import SUPPORTED_SUMM_MODELS

print(SUPPORTED_SUMM_MODELS)

To import and initialize a model:

from summertime import model

# To use a default model
default_model = model.summarizer()

# Or a specific model
bart_model = model.BartModel()
pegasus_model = model.PegasusModel()
lexrank_model = model.LexRankModel()
textrank_model = model.TextRankModel()

Users can easily access documentation to assist with model selection:

default_model.show_capability()
pegasus_model.show_capability()
textrank_model.show_capability()

To summarize with a model, simply run:

documents = [
    """ PG&E stated it scheduled the blackouts in response to forecasts for high winds amid dry conditions.
    The aim is to reduce the risk of wildfires. Nearly 800 thousand customers were scheduled to be affected
    by the shutoffs which were expected to last through at least midday tomorrow."""
]

default_model.summarize(documents)
# or
pegasus_model.summarize(documents)

All models can be initialized with the following optional arguments:

def __init__(self,
             trained_domain: str = None,
             max_input_length: int = None,
             max_output_length: int = None,
             ):

All models implement the following methods:

def summarize(self,
              corpus: Union[List[str], List[List[str]]],
              queries: List[str] = None) -> List[str]:

def show_capability(cls) -> None:

SummerTime supports different summarization datasets across different domains (e.g., CNNDM (news articles), SAMSum (dialogue corpus), QMSum (query-based dialogue corpus), Multi-News (multi-document corpus), MLSum (multilingual corpus), PubMedQA (medical domain), and arXiv (scientific papers), among others).

| Dataset | Domain | # Examples | Src. length | Tgt. length | Query | Multi-doc | Dialogue | Multilingual |
|---|---|---|---|---|---|---|---|---|
| arXiv | Scientific articles | 215k | 4.9k | 220 | | | | |
| CNN/DM (3.0.0) | News | 300k | 781 | 56 | | | | |
| MlsumDataset | Multilingual news | 1.5M+ | 632 | 34 | | | | ✔️ German, Spanish, French, Russian, Turkish |
| Multi-News | News | 56k | 2.1k | 263.8 | | ✔️ | | |
| SAMSum | Open-domain | 16k | 94 | 20 | | | ✔️ | |
| PubMedQA | Medical | 272k | 244 | 32 | ✔️ | | | |
| QMSum | Meetings | 1k | 9.0k | 69.6 | ✔️ | | ✔️ | |
| ScisummNet | Scientific articles | 1k | 4.7k | 150 | | | | |
| SummScreen | TV shows | 26.9k | 6.6k | 337.4 | | | ✔️ | |
| XSum | News | 226k | 431 | 23.3 | | | | |
| XLSum | News | 1.35M | ??? | ??? | | | | 45 languages (see documentation) |
| MassiveSumm | News | 12M+ | ??? | ??? | | | | 78 languages (see the multilingual summarization section of the README for details) |
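The source and target length columns give a quick sense of how strongly each dataset compresses its input, which is one rough signal when choosing a model (for example, whether inputs are likely to exceed a model's `max_input_length`). The sketch below divides the two averages for a few rows of the table. The table does not state the unit of the length columns (tokens are assumed), and "4.9k" is read as 4900; the helper name `compression_ratio` is hypothetical.

```python
# Average source and target lengths copied from the dataset table above.
LENGTHS = {
    "arXiv": (4900, 220),
    "CNN/DM": (781, 56),
    "Multi-News": (2100, 263.8),
    "SAMSum": (94, 20),
    "QMSum": (9000, 69.6),
    "XSum": (431, 23.3),
}


def compression_ratio(src_len: float, tgt_len: float) -> float:
    """Average source length divided by average target length."""
    return src_len / tgt_len


ratios = {name: round(compression_ratio(*lengths), 1) for name, lengths in LENGTHS.items()}

# Print from least to most compressed.
for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{name:>10}: {ratio:>6.1f}x")
```

This is a ratio of averages rather than an average of per-example ratios, so it is only a coarse comparison: SAMSum summaries come out under 5 times shorter than their dialogues, while QMSum summaries are roughly 130 times shorter than their meeting transcripts.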

To see all supported datasets, run:

from summertime import dataset

print(dataset.list_all_dataset())

To import and initialize a dataset:

from summertime import dataset

cnn_dataset = dataset.CnndmDataset()
# or
xsum_dataset = dataset.XsumDataset()
# ...etc.

All datasets are implementations of the `SummDataset` class. Their data splits can be accessed as follows:

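# Use a new variable name so the `dataset` module is not shadowed
cnn_dataset = dataset.CnndmDataset()
train_data = cnn_dataset.train_set
dev_data = cnn_dataset.dev_set
test_data = cnn_dataset.test_set

To see the details of a dataset, run:

cnn_dataset = dataset.CnndmDataset()
cnn_dataset.show_description()

Data in all datasets is contained in `SummInstance` objects, which have the following attributes: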

data_instance.source = source    # either `List[str]` or `str`, depending on the dataset; string joining may be needed to fit specific models
data_instance.summary = summary  # a string summary that serves as the ground truth
data_instance.query = query      # optional; applies when a string query is present

print(data_instance)  # print the data instance in its entirety

Data is loaded using generators to save space and time:

data_instance = next(cnn_dataset.train_set)
print(data_instance)

import itertools

# Get a slice from the train set generator - first 5 instances
train_set = itertools.islice(cnn_dataset.train_set, 5)

corpus = [instance.source for instance in train_set]
print(corpus)

You can use your own data with the `CustomDataset` class, which loads the data into a SummerTime dataset class.
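The records passed to `CustomDataset` are plain dictionaries: a `source` that is a string or a list of strings, a string `summary`, and an optional string `query`, as the docstring in the example below describes. A small check such as the following can catch malformed records before they are loaded. `validate_records` is a hypothetical helper written for this illustration, not part of SummerTime, and it checks only the shapes listed in that docstring.

```python
from typing import Any, Dict, List


def validate_records(records: List[Dict[str, Any]]) -> None:
    """Hypothetical helper: raise ValueError if a record does not match the CustomDataset format."""
    for i, record in enumerate(records):
        source = record.get("source")
        # source: either a single string or a list of strings (e.g. multi-document input).
        is_str_list = isinstance(source, list) and all(isinstance(s, str) for s in source)
        if not (isinstance(source, str) or is_str_list):
            raise ValueError(f"record {i}: 'source' must be str or List[str]")
        if not isinstance(record.get("summary"), str):
            raise ValueError(f"record {i}: 'summary' must be str")
        # query: optional, but must be a string when the key is present.
        if "query" in record and not isinstance(record["query"], str):
            raise ValueError(f"record {i}: 'query' must be str")


train_set = [{"source": ["doc one", "doc two"], "summary": "summary1", "query": "query1"}]
validate_records(train_set)  # passes silently
```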

from summertime.dataset import CustomDataset

''' The train_set, test_set and validation_set have the following format:
List[Dict], a list of dictionaries that each contain a data instance.
The dictionary is in the form:
{"source": "source_data", "summary": "summary_data", "query": "query_data"}
* source_data is of type List[str] or str
* summary_data is of type str
* query_data is of type str
The list of dictionaries looks as follows:
[dictionary_instance_1, dictionary_instance_2, ...]
'''

# Create sample data
train_set = [
    {
        "source": "source1",
        "summary": "summary1",
        "query": "query1",  # only include if a query is present
    }
]

validation_set = [
    {
        "source": "source2",
        "summary": "summary2",
        "query": "query2",
    }
]

test_set = [
    {
        "source": "source3",
        "summary": "summary3",
        "query": "query3",
    }
]

# Depending on the dataset properties, you can specify the type of dataset,
# i.e., multi_doc, query_based, dialogue_based. If not specified, they default to False.
custom_dataset = CustomDataset(
    train_set=train_set,
    validation_set=validation_set,
    test_set=test_set,
    query_based=True,
    multi_doc=True,
    dialogue_based=False,
)

Using the datasets with the models:

import itertools

from summertime import dataset, model

cnn_dataset = dataset.CnndmDataset()

# Get a slice of the train set - first 5 instances
train_set = itertools.islice(cnn_dataset.train_set, 5)
corpus = [instance.source for instance in train_set]

# Example 1 - traditional non-neural model
# LexRank model
lexrank = model.LexRankModel(corpus)
lexrank.show_capability()  # prints the capabilities itself and returns None
lexrank_summary = lexrank.summarize(corpus)
print(lexrank_summary)

# Example 2 - a spaCy pipeline for TextRank (another non-neural extractive summarization model)
# TextRank model
textrank = model.TextRankModel()
textrank.show_capability()
textrank_summary = textrank.summarize(corpus)
print(textrank_summary)

# Example 3 - a neural model to handle large texts
# Longformer model
longformer = model.LongformerModel()
longformer.show_capability()
longformer_summary = longformer.summarize(corpus)
print(longformer_summary)

The `summarize()` method of multilingual models automatically checks the language of the input documents.

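A single large multilingual model can be initialized and used in the same way as a monolingual model. It returns an error if the input is in a language the model does not support.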
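The README does not say how the language check is implemented. As a rough, dependency-free illustration of the behavior described above (guess the input language, then fail on unsupported ones), the sketch below picks the language whose stopword list overlaps most with the text and raises an error when that guess is not in a supported set. The stopword lists, the `SUPPORTED` set, and the function names are all hypothetical; a real system would use a trained language identifier.

```python
import re
from typing import Iterable, Set

# Tiny, illustrative stopword lists; real language identification needs far more signal.
STOPWORDS = {
    "en": {"the", "and", "is", "of", "to", "in", "that", "it"},
    "es": {"el", "la", "de", "que", "y", "en", "los", "las", "es"},
    "de": {"der", "die", "das", "und", "ist", "nicht", "zu", "ein"},
}

# Hypothetical set of languages that one particular model supports.
SUPPORTED = {"en", "es"}


def guess_language(text: str) -> str:
    """Return the language whose stopword list overlaps most with the text."""
    words = re.findall(r"\w+", text.lower())
    counts = {lang: sum(w in stops for w in words) for lang, stops in STOPWORDS.items()}
    return max(counts, key=counts.get)


def check_supported(documents: Iterable[str], supported: Set[str] = SUPPORTED) -> str:
    """Guess the language of the joined documents; raise ValueError if it is unsupported."""
    lang = guess_language(" ".join(documents))
    if lang not in supported:
        raise ValueError(f"language '{lang}' is not supported by this model")
    return lang


print(check_supported(["El gato y el perro de la casa"]))
```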

import itertools

import summertime.dataset as st_data
import summertime.model as st_model

mbart_model = st_model.MBartModel()
mt5_model = st_model.MT5Model()

# load the Spanish portion of the MLSum dataset
mlsum = st_data.MlsumDataset(["es"])
train_set = itertools.islice(mlsum.train_set, 5)
corpus = [instance.source for instance in train_set]

# the mT5 model will automatically detect Spanish as the language and indicate that it is supported
mt5_model.summarize(corpus)

The following languages are currently supported in our implementation of the MassiveSumm dataset: Amharic, Arabic, Assamese, Aymara, Azerbaijani, Bambara, Bengali, Bosnian, Bulgarian, Catalan, Czech, …, Gujarati, Haitian, Hausa, Hebrew, Hindi, Croatian, Hungarian, Armenian, Igbo, Indonesian, Icelandic, Italian, Japanese, Kannada, Georgian, Khmer, Kinyarwanda, Kyrgyz, Korean, Kurdish, Lao, Latvian, Lingala, Lithuanian, Malayalam, Marathi, Macedonian, Malagasy, Mongolian, Burmese, South Ndebele, Nepali, Dutch, Oriya, Oromo, Punjabi, Polish, Portuguese, Dari, …, Tajik, Thai, Tigrinya, Turkish, Ukrainian, Urdu, Uzbek, Vietnamese, Xhosa, Yoruba, Yue Chinese, Chinese, Bislama, and Gaelic.

SummerTime supports different evaluation metrics, including BERTScore, BLEU, METEOR, ROUGE, and RougeWE.
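As a reference point for what the ROUGE scores measure, ROUGE-N compares n-gram overlap between a candidate summary and a reference: overlapping n-grams are counted with clipping (each n-gram counts at most as often as it appears in both texts), then divided by the candidate's n-gram count for precision and the reference's for recall. The sketch below is a simplified illustration with lowercase whitespace tokenization. It is not the ROUGE-1.5.5 package that `ROUGE_HOME` points to in the installation instructions, which applies its own tokenization and options such as stemming, so its numbers will not match SummerTime's `Rouge` metric exactly.

```python
from collections import Counter
from typing import Dict, List


def _ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> Dict[str, float]:
    """Simplified ROUGE-N: clipped n-gram overlap on lowercase whitespace tokens."""
    cand = _ngrams(candidate.lower().split(), n)
    ref = _ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # Counter "&" keeps the minimum count per n-gram
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


print(rouge_n("the cat sat on the mat", "the cat lay on the mat", n=1))  # all three ≈ 0.833 (5/6)
print(rouge_n("the cat sat on the mat", "the cat lay on the mat", n=2))  # all three ≈ 0.6 (3/5)
```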

To print all supported metrics:

from summertime.evaluation import SUPPORTED_EVALUATION_METRICS

print(SUPPORTED_EVALUATION_METRICS)

To initialize the metrics:

import summertime.evaluation as st_eval

bert_eval = st_eval.BertScore()
bleu_eval = st_eval.Bleu()
meteor_eval = st_eval.Meteor()
rouge_eval = st_eval.Rouge()
rougewe_eval = st_eval.RougeWe()

All evaluation metrics can be initialized with the following optional arguments:

def __init__(self, metric_name):

All evaluation metric objects implement the following methods:

def evaluate(self, model, data):

def get_dict(self, keys):

Get sample summary data:

from summertime.evaluation.base_metric import SummMetric
from summertime.evaluation import Rouge, RougeWe, BertScore

import itertools

# Evaluates a model on a subset of cnn_dailymail
# (uses `cnn_dataset` and `sample_model` from the examples above)
# Get a slice of the train set - first 5 instances
train_set = itertools.islice(cnn_dataset.train_set, 5)

corpus = [instance for instance in train_set]
print(corpus)

articles = [instance.source for instance in corpus]
summaries = sample_model.summarize(articles)
targets = [instance.summary for instance in corpus]

Evaluate the data on different metrics:

from summertime.evaluation import BertScore, Rouge, RougeWe

# Calculate BertScore
bert_metric = BertScore()
bert_score = bert_metric.evaluate(summaries, targets)
print(bert_score)

# Calculate Rouge
rouge_metric = Rouge()
rouge_score = rouge_metric.evaluate(summaries, targets)
print(rouge_score)

# Calculate RougeWe
rougewe_metric = RougeWe()
rougewe_score = rougewe_metric.evaluate(summaries, targets)
print(rougewe_score)

Given a SummerTime dataset, you can use the `assemble_model_pipeline` function from `summertime.pipeline` to retrieve a list of initialized SummerTime models that are compatible with the provided dataset.

from summertime.pipeline import assemble_model_pipeline
from summertime.dataset import CnndmDataset, QMsumDataset

single_doc_models = assemble_model_pipeline(CnndmDataset)
# [
#  (<model.single_doc.bart_model.BartModel object at 0x7fcd43aa12e0>, 'BART'),
#  (<model.single_doc.lexrank_model.LexRankModel object at 0x7fcd43aa1460>, 'LexRank'),
#  (<model.single_doc.longformer_model.LongformerModel object at 0x7fcd43b17670>, 'Longformer'),
#  (<model.single_doc.pegasus_model.PegasusModel object at 0x7fccb84f2910>, 'Pegasus'),
#  (<model.single_doc.textrank_model.TextRankModel object at 0x7fccb84f2880>, 'TextRank')
# ]

query_based_multi_doc_models = assemble_model_pipeline(QMsumDataset)
# [
#  (<model.query_based.tf_idf_model.TFIDFSummModel object at 0x7fc9c9c81e20>, 'TF-IDF (HMNET)'),
#  (<model.query_based.bm25_model.BM25SummModel object at 0x7fc8b4fa8c10>, 'BM25 (HMNET)')
# ]
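Conceptually, `assemble_model_pipeline` has to match a dataset's properties against each model's capabilities, which is presumably why the query-based QMSum dataset above only yields the retrieval-based wrappers. The sketch below illustrates that kind of matching with hypothetical capability flags named after the `CustomDataset` options (`multi_doc`, `query_based`, `dialogue_based`). It is an assumption about the general approach, not SummerTime's implementation, and the flag values assigned to each model are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Capabilities:
    # Hypothetical flags, named after the CustomDataset options.
    multi_doc: bool = False
    query_based: bool = False
    dialogue_based: bool = False


def compatible_models(dataset: Capabilities, models: Dict[str, Capabilities]) -> List[str]:
    """Return the names of models whose flags exactly match the dataset's flags."""
    return [name for name, caps in models.items() if caps == dataset]


# Illustrative flags only; they are not read from SummerTime.
MODELS = {
    "BART": Capabilities(),
    "LexRank": Capabilities(),
    "MultiDocJoint": Capabilities(multi_doc=True),
    "TF-IDF (HMNET)": Capabilities(query_based=True, dialogue_based=True),
    "BM25 (HMNET)": Capabilities(query_based=True, dialogue_based=True),
}

print(compatible_models(Capabilities(), MODELS))  # → ['BART', 'LexRank']
print(compatible_models(Capabilities(query_based=True, dialogue_based=True), MODELS))
# → ['TF-IDF (HMNET)', 'BM25 (HMNET)']
```

Exact matching is the simplest rule. A more permissive selector might accept any model whose capabilities cover everything the dataset requires, at the cost of also proposing models built for a different setting.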

To compare several models on the same data, build a data generator, a list of models, and a list of metrics, and pass them to the `ModelSelector` class:

# Get test data
import itertools

from summertime.dataset import XsumDataset
from summertime.evaluation import SUPPORTED_EVALUATION_METRICS
from summertime.model import BartModel, PegasusModel

# Get a slice of the train set - first 100 instances.
# Materialize it as a list so that each selector below gets its own fresh iterator
# (calling iter() twice on the same islice would return the same, shared iterator).
sample_dataset = XsumDataset()
sample_data = list(itertools.islice(sample_dataset.train_set, 100))
generator1 = iter(sample_data)
generator2 = iter(sample_data)

bart_model = BartModel()
pegasus_model = PegasusModel()
models = [bart_model, pegasus_model]
metrics = [metric() for metric in SUPPORTED_EVALUATION_METRICS]

from summertime.evaluation.model_selector import ModelSelector

selector = ModelSelector ( models , generator1 , metrics )

table = selector . run ()

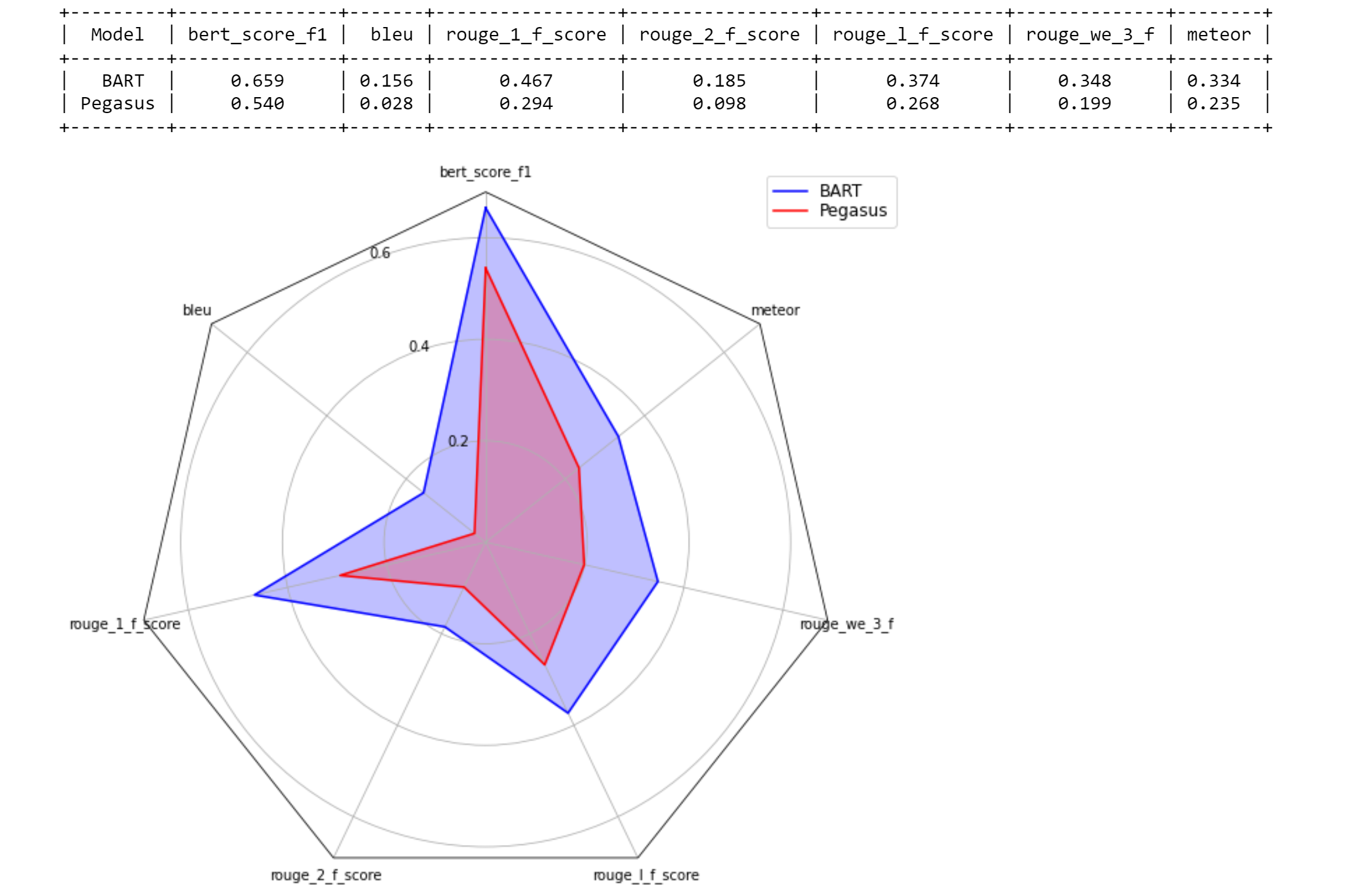

print ( table )

visualization = selector . visualize ( table )

from summertime . evaluation . model_selector import ModelSelector

new_selector = ModelSelector ( models , generator2 , metrics )

smart_table = new_selector . run_halving ( min_instances = 2 , factor = 2 )

print ( smart_table )

visualization_smart = selector . visualize ( smart_table ) from summertime . evaluation . model_selector import ModelSelector

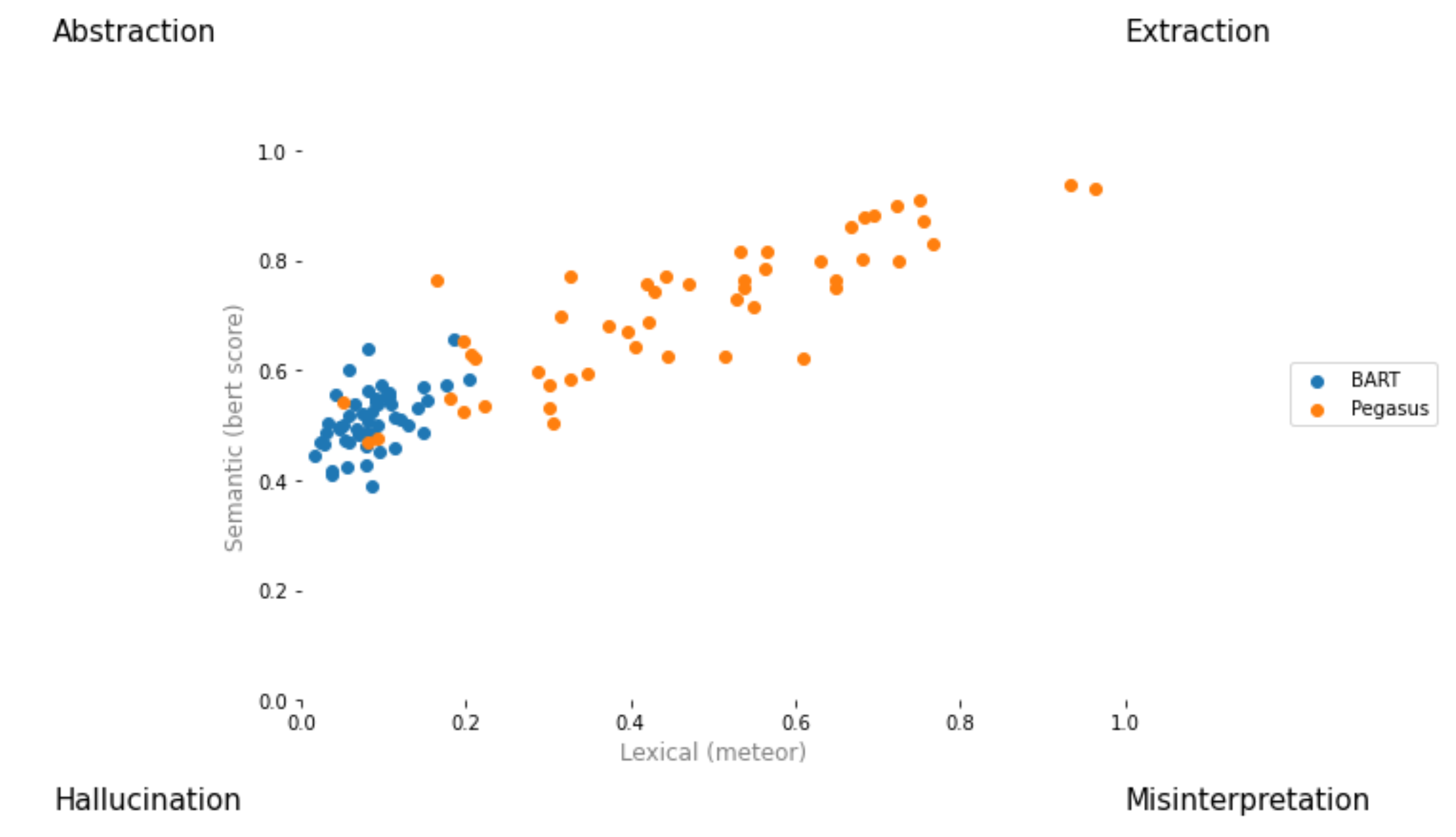

from summertime.evaluation.error_viz import scatter

keys = ("bert_score_f1", "bleu", "rouge_1_f_score", "rouge_2_f_score", "rouge_l_f_score", "rouge_we_3_f", "meteor")

scatter(models, sample_data, metrics[1:3], keys=keys[1:3], max_instances=5)
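The `run_halving(min_instances=2, factor=2)` call above suggests a successive-halving strategy: evaluate every model on a few instances, keep the better fraction, and give the survivors more instances. The README does not describe the exact procedure, so the sketch below is an assumption about the general technique, not `ModelSelector`'s implementation. `score_fn`, which returns one model's average score on a given number of instances, is a hypothetical stand-in for running a model and a metric.

```python
import math
from typing import Callable, Dict, List


def successive_halving(models: List[str], score_fn: Callable[[str, int], float],
                       min_instances: int = 2, factor: int = 2) -> Dict[str, float]:
    """Sketch of successive halving: drop the weakest models while growing the evaluation budget.

    Returns the scores from the final round, in which only one model remains.
    """
    if factor < 2:
        raise ValueError("factor must be at least 2 so the candidate pool shrinks")
    survivors = list(models)
    budget = min_instances
    while survivors:
        # Evaluate every remaining model on `budget` instances (higher score is better).
        scores = {m: score_fn(m, budget) for m in survivors}
        if len(survivors) == 1:
            return scores
        # Keep the best 1/factor of the models, rounding up so at least one survives.
        keep = math.ceil(len(survivors) / factor)
        survivors = sorted(survivors, key=scores.get, reverse=True)[:keep]
        budget *= factor
    return {}  # no models were given


# Hypothetical stand-in for "run this model on n instances and average a metric".
QUALITY = {"BART": 0.42, "Pegasus": 0.45, "LexRank": 0.30, "TextRank": 0.28}


def fake_score(model: str, n_instances: int) -> float:
    return QUALITY[model]


print(successive_halving(list(QUALITY), fake_score))  # → {'Pegasus': 0.45}
```

In this run the four models are scored on 4×2 + 2×4 + 1×8 = 24 instances in total, compared with 4×8 = 32 if every model were evaluated on 8 instances; the savings grow with the number of candidate models.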

Create a pull request and name it [`your_gh_username`]/[`your_branch_name`]. If needed, resolve merge conflicts between your own branch and `main`. Do not push directly to `main`.

If you haven't already, install `black` and `flake8`:

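pip install black

pip install flake8

Before pushing commits or merging branches, run the following commands from the project root. Note that `black` will write to files, so you should add and commit the changes made by `black` before pushing: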

black .

flake8 .

Or, if you would like to lint specific files:

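black path/to/specific/file.py

flake8 path/to/specific/file.py

Make sure that `black` does not reformat any files and that `flake8` does not print any errors. If you would like to override or ignore any of the preferences or practices enforced by `black` or `flake8`, please leave a comment in your PR on every line of code that generates warning or error logs. Do not directly edit config files such as `setup.cfg`.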

See the `black` and `flake8` documentation for installation, ignoring files/lines, and advanced usage. In addition, the following may be useful:

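- `black [file.py] --diff` to preview changes as a diff instead of directly making changes
- `black [file.py] --check` to preview changes with status codes instead of directly making changes
- `git diff -u | flake8 --diff` to only run flake8 on the working branch (note that the `--diff` option was removed in flake8 6.0)

Note that our CI test suite will include invoking `black --check .` and `flake8 --count .` on all non-unittest and non-setup Python files, and zero error-level output is required for all tests to pass.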

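Our continuous integration system is provided through GitHub Actions. When any pull request is created or updated, or whenever `main` is updated, the repository's unit tests will be run as a build job on tangra for that pull request. Build jobs will either pass or fail within a few minutes, and build statuses and logs are visible under Actions. Please ensure that all checks pass (i.e., all steps in all jobs run to completion) before merging, or request a review. To skip a build on any particular commit, append `[skip ci]` to the commit message. Note that PRs with the substring `/no-ci/` anywhere in the branch name will not be included in CI.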

This repository is built by the LILY Lab at Yale University, led by Prof. Dragomir Radev. The main contributors are Ansong Ni, Zhangir Azerbayev, Troy Feng, Murori Mutuma, Hailey Schoelkopf, and Yusen Zhang (Penn State).

If you use SummerTime in your work, please consider citing:

@article{ni2021summertime,

title={SummerTime: Text Summarization Toolkit for Non-experts},

author={Ansong Ni and Zhangir Azerbayev and Mutethia Mutuma and Troy Feng and Yusen Zhang and Tao Yu and Ahmed Hassan Awadallah and Dragomir Radev},

journal={arXiv preprint arXiv:2108.12738},

year={2021}

}

For comments and questions, please open an issue.