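Functionary is a language model that can interpret and execute functions/plugins.

The model determines when to execute functions, whether in parallel or serially, and can understand their outputs. It only triggers functions as needed. Function definitions are given as JSON Schema objects, similar to OpenAI GPT function calls.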
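As a minimal sketch of what such a definition looks like, the snippet below builds one tool entry as a Python dictionary and serializes it to JSON. The `get_current_weather` function and its `location` parameter are only illustrative; any function described by a JSON Schema object follows the same shape.

```python
import json

# Illustrative tool definition: the "parameters" field is a JSON Schema object.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. San Francisco, CA",
                }
            },
            "required": ["location"],
        },
    },
}

# The definition must be JSON-serializable so it can travel in a request body.
tools_json = json.dumps([weather_tool], indent=2)
print(tools_json)
```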

Documentation and more examples: functionary.meetkai.com

To use the code interpreter, pass {type: "code_interpreter"} in tools!

Functionary can be deployed using either our vLLM or SGLang server. Choose either one depending on your preference.

Install the dependencies for the server you chose.

vLLM

    pip install -e .[vllm]

SGLang

    pip install -e .[sglang] --find-links https://flashinfer.ai/whl/cu121/torch2.4/flashinfer/

Then start the server. For the small model:

vLLM

    python3 server_vllm.py --model "meetkai/functionary-small-v3.2" --host 0.0.0.0 --port 8000 --max-model-len 8192

SGLang

    python3 server_sglang.py --model-path "meetkai/functionary-small-v3.2" --host 0.0.0.0 --port 8000 --context-length 8192

Our medium models require 4xA6000 or 2xA100 80GB to run. Use --tensor-parallel-size (vLLM) or --tp (SGLang):

vLLM

    # vLLM requires this to be set first: https://github.com/vllm-project/vllm/issues/6152
    export VLLM_WORKER_MULTIPROC_METHOD=spawn

    python server_vllm.py --model "meetkai/functionary-medium-v3.1" --host 0.0.0.0 --port 8000 --max-model-len 8192 --tensor-parallel-size 2

SGLang

    python server_sglang.py --model-path "meetkai/functionary-medium-v3.1" --host 0.0.0.0 --port 8000 --context-length 8192 --tp 2

Similar to LoRA in vLLM, our server supports serving LoRA adapters both at startup and dynamically.

To serve LoRA adapters at startup, run the server with the --lora-modules argument:

    python server_vllm.py --model {BASE_MODEL} --enable-lora --lora-modules {name}={path} {name}={path} --host 0.0.0.0 --port 8000

To serve a LoRA adapter dynamically, use the /v1/load_lora_adapter endpoint:

    python server_vllm.py --model {BASE_MODEL} --enable-lora --host 0.0.0.0 --port 8000

    # Load a LoRA adapter dynamically
    curl -X POST http://localhost:8000/v1/load_lora_adapter \
      -H "Content-Type: application/json" \
      -d '{
        "lora_name": "my_lora",
        "lora_path": "/path/to/my_lora_adapter"
      }'

    # Example chat request to the LoRA adapter
    curl -X POST http://localhost:8000/v1/chat/completions \
      -H "Content-Type: application/json" \
      -d '{
        "model": "my_lora",
        "messages": [...],
        "tools": [...],
        "tool_choice": "auto"
      }'

    # Unload a LoRA adapter dynamically
    curl -X POST http://localhost:8000/v1/unload_lora_adapter \
      -H "Content-Type: application/json" \
      -d '{
        "lora_name": "my_lora"
      }'

We also offer our own function-calling grammar sampling feature, which constrains the LLM's generation to always follow the prompt template and ensures 100% accuracy for function names. The parameters are generated using the efficient lm-format-enforcer, which ensures that they follow the schema of the tool being called. To enable grammar sampling, run the vLLM server with the command-line argument --enable-grammar-sampling:

    python3 server_vllm.py --model "meetkai/functionary-medium-v3.1" --max-model-len 8192 --tensor-parallel-size 2 --enable-grammar-sampling

Note: Grammar sampling is supported only for the V2, V3.0 and V3.2 models. There is no such support for the V1 and V3.1 models.
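To make "parameters follow the schema of the tool" concrete, here is a minimal sketch that checks a JSON arguments string against a flat JSON Schema after generation. It is not how lm-format-enforcer works (that library constrains tokens during generation); the helper name `arguments_match_schema` and the small type map are assumptions made for illustration.

```python
import json

# Map JSON Schema primitive type names to Python types (a small subset).
_JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def arguments_match_schema(arguments_json: str, schema: dict) -> bool:
    """Return True if JSON-encoded arguments satisfy a flat object schema.

    Only "required" and top-level property types are checked. Unknown
    properties are rejected, which is stricter than JSON Schema's default.
    """
    try:
        args = json.loads(arguments_json)
    except json.JSONDecodeError:
        return False
    if not isinstance(args, dict):
        return False
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            return False
    for name, value in args.items():
        if name not in properties:
            return False
        expected = _JSON_TYPES.get(properties[name].get("type"))
        if expected is not None:
            # bool is a subclass of int in Python, so reject it for other types.
            if isinstance(value, bool) and expected is not bool:
                return False
            if not isinstance(value, expected):
                return False
    return True


schema = {
    "type": "object",
    "properties": {"location": {"type": "string"}},
    "required": ["location"],
}
print(arguments_match_schema('{"location": "Istanbul"}', schema))  # True
print(arguments_match_schema('{"location": 42}', schema))          # False
```

A check like this can also run on models without grammar sampling support, as a guard before the arguments reach your function.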

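We also provide a service for running inference on Functionary models using Text-Generation-Inference (TGI). Follow these steps to get started:

Install Docker by following its installation instructions.

Install the Docker SDK for Python:

    pip install docker

At startup, the Functionary TGI server tries to connect to an existing TGI endpoint. In this case, you can run the following:

    python3 server_tgi.py --model <REMOTE_MODEL_ID_OR_LOCAL_MODEL_PATH> --endpoint <TGI_SERVICE_ENDPOINT>

If the TGI endpoint does not exist, the Functionary TGI server uses the installed Docker Python SDK to start a new TGI endpoint container at the address given in the endpoint CLI argument. Run the following commands for remote and local models respectively:

    python3 server_tgi.py --model <REMOTE_MODEL_ID> --remote_model_save_folder <PATH_TO_SAVE_AND_CACHE_REMOTE_MODEL> --endpoint <TGI_SERVICE_ENDPOINT>

    python3 server_tgi.py --model <LOCAL_MODEL_PATH> --endpoint <TGI_SERVICE_ENDPOINT>

Docker

If you are having trouble with dependencies and you have nvidia-container-toolkit, you can start your environment like this: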

    sudo docker run --gpus all -it --ipc=host --name functionary -v ${PWD}/functionary_workspace:/workspace -p 8000:8000 nvcr.io/nvidia/pytorch:23.10-py3

OpenAI-compatible usage:

    from openai import OpenAI

    client = OpenAI(base_url="http://localhost:8000/v1", api_key="functionary")

    client.chat.completions.create(
        model="meetkai/functionary-small-v3.2",
        messages=[
            {"role": "user", "content": "What is the weather for Istanbul?"}
        ],
        tools=[{
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "description": "Get the current weather",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "The city and state, e.g. San Francisco, CA"
                        }
                    },
                    "required": ["location"]
                }
            }
        }],
        tool_choice="auto"
    )

Raw usage with requests:

    import requests

    data = {
        'model': 'meetkai/functionary-small-v3.2',  # model name here is the value of argument "--model" in deploying: server_vllm.py or server.py
        'messages': [
            {
                "role": "user",
                "content": "What is the weather for Istanbul?"
            }
        ],
        'tools': [  # For functionary-7b-v2 we use "tools"; for functionary-7b-v1.4 we use "functions" = [{"name": "get_current_weather", "description":..., "parameters": ....}]
            {
                "type": "function",
                "function": {
                    "name": "get_current_weather",
                    "description": "Get the current weather",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "location": {
                                "type": "string",
                                "description": "The city and state, e.g. San Francisco, CA"
                            }
                        },
                        "required": ["location"]
                    }
                }
            }
        ]
    }

    response = requests.post("http://127.0.0.1:8000/v1/chat/completions", json=data, headers={
        "Content-Type": "application/json",
        "Authorization": "Bearer xxxx"
    })

    # Print the response text

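    print(response.text)

| Model | Description | VRAM FP16 |
|---|---|---|
| functionary-medium-v3.2 | 128k context, code interpreter, using our own prompt template | 160GB |
| functionary-small-v3.2 / GGUF | 128k context, code interpreter, using our own prompt template | 24GB |
| functionary-medium-v3.1 / GGUF | 128k context, code interpreter, using original Meta's prompt template | 160GB |
| functionary-small-v3.1 / GGUF | 128k context, code interpreter, using original Meta's prompt template | 24GB |
| functionary-medium-v3.0 / GGUF | 8k context, based on meta-llama/Meta-Llama-3-70B-Instruct | 160GB |
| functionary-small-v2.5 / GGUF | 8k context, code interpreter | 24GB |
| functionary-small-v2.4 / GGUF | 8k context, code interpreter | 24GB |
| functionary-medium-v2.4 / GGUF | 8k context, code interpreter, better accuracy | 90GB |
| functionary-small-v2.2 / GGUF | 8k context | 24GB |
| functionary-medium-v2.2 / GGUF | 8k context | 90GB |
| functionary-7b-v2.1 / GGUF | 8k context | 24GB |
| functionary-7b-v2 / GGUF | Parallel function call support. | 24GB |
| functionary-7b-v1.4 / GGUF | 4k context, better accuracy (deprecated) | 24GB |
| functionary-7b-v1.1 | 4k context (deprecated) | 24GB |
| functionary-7b-v0.1 | 2k context (not recommended); please use 2.1 | 24GB |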

For the differences between openai-python v0 and v1, you can refer to the official documentation.
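The request examples note that functionary-7b-v2 takes "tools" while functionary-7b-v1.4 takes "functions". A minimal sketch of converting between the two shapes is shown below; the helper names `functions_to_tools` and `tools_to_functions` are hypothetical and not part of Functionary or the OpenAI client.

```python
def functions_to_tools(functions: list) -> list:
    """Wrap bare function definitions in the "tools" envelope."""
    return [{"type": "function", "function": dict(f)} for f in functions]


def tools_to_functions(tools: list) -> list:
    """Unwrap "tools" entries back into bare function definitions."""
    return [dict(t["function"]) for t in tools if t.get("type") == "function"]


legacy_functions = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
    }
]

tools = functions_to_tools(legacy_functions)
print(tools[0]["type"], tools[0]["function"]["name"])
```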

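| Feature/Project | Functionary | NexusRaven | Gorilla | Glaive | GPT-4-1106-preview |
|---|---|---|---|---|---|
| Single Function Call | ✅ | ✅ | ✅ | ✅ | ✅ |
| Parallel Function Calls | ✅ | ✅ | ✅ | ❌ | ✅ |
| Following Up on Missing Function Arguments | ✅ | ❌ | ❌ | ❌ | ✅ |
| Multi-turn | ✅ | ❌ | ❌ | ✅ | ✅ |
| Generate Model Responses Grounded in Tools Execution Results | ✅ | ❌ | ❌ | ❌ | ✅ |
| Chit-Chat | ✅ | ❌ | ✅ | ✅ | ✅ |
| Code Interpreter | ✅ | ❌ | ❌ | ❌ | ✅ |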

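You can find more details of the features here.

An example of inference using llama-cpp-python can be found in llama_cpp_inference.py.

Functionary is also integrated into llama-cpp-python, but that integration may lag behind, so if the results look wrong or odd, please use llama_cpp_inference.py instead. Currently, v2.5 has not been integrated, so if you are using functionary-small-v2.5-GGUF, please use llama_cpp_inference.py.

Make sure that the latest version of llama-cpp-python is successfully installed on your system. Functionary v2 is fully integrated into llama-cpp-python. You can run inference either via normal chat completion or through llama-cpp-python's OpenAI-compatible server, which behaves similarly to ours.

The following is sample code using normal chat completion: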

    from llama_cpp import Llama
    from llama_cpp.llama_tokenizer import LlamaHFTokenizer

    # We should use HF AutoTokenizer instead of llama.cpp's tokenizer because we found that Llama.cpp's tokenizer doesn't give the same result as that from Huggingface. The reason might be in the training, we added new tokens to the tokenizer and Llama.cpp doesn't handle this successfully
    llm = Llama.from_pretrained(
        repo_id="meetkai/functionary-small-v2.4-GGUF",
        filename="functionary-small-v2.4.Q4_0.gguf",
        chat_format="functionary-v2",
        tokenizer=LlamaHFTokenizer.from_pretrained("meetkai/functionary-small-v2.4-GGUF"),
        n_gpu_layers=-1
    )

    messages = [
        {"role": "user", "content": "what's the weather like in Hanoi?"}
    ]
    tools = [  # For functionary-7b-v2 we use "tools"; for functionary-7b-v1.4 we use "functions" = [{"name": "get_current_weather", "description":..., "parameters": ....}]
        {
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "description": "Get the current weather",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "The city and state, e.g., San Francisco, CA"
                        }
                    },
                    "required": ["location"]
                }
            }
        }
    ]

    result = llm.create_chat_completion(
        messages=messages,
        tools=tools,
        tool_choice="auto",
    )

    print(result["choices"][0]["message"])

The output will be:

    {'role': 'assistant', 'content': None, 'tool_calls': [{'type': 'function', 'function': {'name': 'get_current_weather', 'arguments': '{\n  "location": "Hanoi"\n}'}}]}

For more details, please refer to the Function Calling section in llama-cpp-python. To use our Functionary GGUF models with llama-cpp-python's OpenAI-compatible server, please refer here for more details and documentation.

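Note:

For Functionary in llama-cpp-python, the default system messages are added automatically during the API call, so there is no need to provide them in messages.

To call a real Python function, get its result, and have the model use that result in its response, you can use ChatLab. The following example uses chatlab==0.16.0:

Please note that ChatLab currently does not support parallel function calls. This sample code is compatible only with Functionary version 1.4 and may not work correctly with Functionary version 2.0.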

    from chatlab import Conversation
    import openai
    import os

    openai.api_key = "functionary"  # We just need to set this something other than None
    os.environ['OPENAI_API_KEY'] = "functionary"  # chatlab requires us to set this too
    openai.api_base = "http://localhost:8000/v1"

    # now provide the function with description
    def get_car_price(car_name: str):
        """this function is used to get the price of the car given the name
        :param car_name: name of the car to get the price
        """
        car_price = {
            "tang": {"price": "$20000"},
            "song": {"price": "$25000"}
        }
        for key in car_price:
            if key in car_name.lower():
                return {"price": car_price[key]}
        return {"price": "unknown"}

    chat = Conversation(model="meetkai/functionary-7b-v2")
    chat.register(get_car_price)  # register this function
    chat.submit("what is the price of the car named Tang?")  # submit user prompt

    # print the flow
    for message in chat.messages:
        role = message["role"].upper()
        if "function_call" in message:
            func_name = message["function_call"]["name"]
            func_param = message["function_call"]["arguments"]
            print(f"{role}: call function: {func_name}, arguments:{func_param}")
        else:
            content = message["content"]
            print(f"{role}: {content}")

The output will look like this:

    USER: what is the price of the car named Tang?
    ASSISTANT: call function: get_car_price, arguments:{
      "car_name": "Tang"
    }
    FUNCTION: {'price': {'price': '$20000'}}
    ASSISTANT: The price of the car named Tang is $20,000.

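Serverless deployment of Functionary models is supported via the modal_server_vllm.py script. After signing up and installing Modal, follow these steps to deploy our vLLM server on Modal:

Create a dev environment:

    modal environment create dev

If you have already created a dev environment, there is no need to create another one. Just configure Modal to use it in the next step.

Configure Modal to use the dev environment:

    modal config set-environment dev

Serve the Functionary model:

    modal serve modal_server_vllm

Deploy it:

    modal deploy modal_server_vllm

Here are a few examples of how you can use this function-calling system:

The function plan_trip(destination: string, duration: int, interests: list) can take user input such as "I want to plan a 7-day trip to Paris with a focus on art and culture" and generate an itinerary accordingly.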

    client.chat.completions.create(
        model="meetkai/functionary-7b-v2",
        messages=[
            {"role": "user", "content": 'I want to plan a 7-day trip to Paris with a focus on art and culture'},
        ],
        tools=[
            {
                "type": "function",
                "function": {
                    "name": "plan_trip",
                    "description": "Plan a trip based on user's interests",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "destination": {
                                "type": "string",
                                "description": "The destination of the trip",
                            },
                            "duration": {
                                "type": "integer",
                                "description": "The duration of the trip in days",
                            },
                            "interests": {
                                "type": "array",
                                "items": {"type": "string"},
                                "description": "The interests based on which the trip will be planned",
                            },
                        },
                        "required": ["destination", "duration", "interests"],
                    }
                }
            }
        ]
    )

The response will have:

    {"role": "assistant", "content": null, "tool_calls": [{"type": "function", "function": {"name": "plan_trip", "arguments": '{\n  "destination": "Paris",\n  "duration": 7,\n  "interests": ["art", "culture"]\n}'}}]}

Then you need to call the plan_trip function with the provided arguments. If you would like commentary from the model, call the model again with the function's response, and the model will write the necessary commentary.
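The step of calling the function with the provided arguments can be sketched as follows. This is a minimal, offline illustration: the `plan_trip` body and the `AVAILABLE_FUNCTIONS` registry are hypothetical, and the shape of the follow-up "tool" message is an assumption based on the OpenAI-style format, so the exact fields your server expects (for example a tool call id) may differ.

```python
import json


def plan_trip(destination: str, duration: int, interests: list) -> dict:
    """Hypothetical local implementation; a real one would build an itinerary."""
    return {"destination": destination, "days": duration, "themes": interests}


# Registry mapping tool names to local callables.
AVAILABLE_FUNCTIONS = {"plan_trip": plan_trip}

# Assistant message shaped like the response shown above.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "type": "function",
            "function": {
                "name": "plan_trip",
                "arguments": '{\n  "destination": "Paris",\n  "duration": 7,\n  "interests": ["art", "culture"]\n}',
            },
        }
    ],
}

followup_messages = [assistant_message]
for call in assistant_message["tool_calls"]:
    name = call["function"]["name"]
    # "arguments" is a JSON string, so decode it before calling the function.
    kwargs = json.loads(call["function"]["arguments"])
    result = AVAILABLE_FUNCTIONS[name](**kwargs)
    # Assumed message shape for handing the result back to the model.
    followup_messages.append(
        {"role": "tool", "name": name, "content": json.dumps(result)}
    )

print(followup_messages[-1]["content"])
```

Appending `followup_messages` to the original conversation and calling the model again is what lets it write commentary grounded in the function result.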

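A function like estimate_property_value(property_details: dict) could allow users to enter details about a property (such as location, size, number of rooms, etc.) and receive an estimated market value.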

    client.chat.completions.create(
        model="meetkai/functionary-7b-v2",
        messages=[
            {
                "role": "user",
                "content": 'What is the estimated value of a 3-bedroom house in San Francisco with 2000 sq ft area?'
            },
            {
                "role": "assistant",
                "content": None,
                "tool_calls": [
                    {
                        "type": "function",
                        "function": {
                            "name": "estimate_property_value",
                            "arguments": '{\n  "property_details": {"location": "San Francisco", "size": 2000, "rooms": 3}\n}'
                        }
                    }
                ]
            }
        ],
        tools=[
            {
                "type": "function",
                "function": {
                    "name": "estimate_property_value",
                    "description": "Estimate the market value of a property",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "property_details": {
                                "type": "object",
                                "properties": {
                                    "location": {
                                        "type": "string",
                                        "description": "The location of the property"
                                    },
                                    "size": {
                                        "type": "integer",
                                        "description": "The size of the property in square feet"
                                    },
                                    "rooms": {
                                        "type": "integer",
                                        "description": "The number of rooms in the property"
                                    }
                                },
                                "required": ["location", "size", "rooms"]
                            }
                        },
                        "required": ["property_details"]
                    }
                }
            }
        ],
        tool_choice="auto"
    )

The response will have:

    {"role": "assistant", "content": null, "tool_calls": [{"type": "function", "function": {"name": "estimate_property_value", "arguments": '{\n  "property_details": {"location": "San Francisco", "size": 2000, "rooms": 3}\n}'}}]}

Then you need to call the estimate_property_value function with the provided arguments. If you would like commentary from the model, call the model again with the function's response, and the model will write the necessary commentary.

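The function parse_customer_complaint(complaint: {issue: string, frequency: string, duration: string}) can help extract structured information from a complex, narrative customer complaint, identifying the core issue and potential solutions. The complaint object could include properties such as issue (the main problem), frequency (how often the issue occurs), and duration (how long the issue has been occurring).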

    client.chat.completions.create(
        model="meetkai/functionary-7b-v2",
        messages=[
            {"role": "user", "content": 'My internet has been disconnecting frequently for the past week'},
        ],
        tools=[
            {
                "type": "function",
                "function": {
                    "name": "parse_customer_complaint",
                    "description": "Parse a customer complaint and identify the core issue",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "complaint": {
                                "type": "object",
                                "properties": {
                                    "issue": {
                                        "type": "string",
                                        "description": "The main problem",
                                    },
                                    "frequency": {
                                        "type": "string",
                                        "description": "How often the issue occurs",
                                    },
                                    "duration": {
                                        "type": "string",
                                        "description": "How long the issue has been occurring",
                                    },
                                },
                                "required": ["issue", "frequency", "duration"],
                            },
                        },
                        "required": ["complaint"],
                    }
                }
            }
        ],
        tool_choice="auto"
    )

The response will have:

    {"role": "assistant", "content": null, "tool_calls": [{"type": "function", "function": {"name": "parse_customer_complaint", "arguments": '{\n  "complaint": {"issue": "internet disconnecting", "frequency": "frequently", "duration": "past week"}\n}'}}]}

Then you need to call the parse_customer_complaint function with the provided arguments. If you would like commentary from the model, call the model again with the function's response, and the model will write the necessary commentary.

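We convert function definitions into text that resembles TypeScript definitions. We then inject these definitions as system prompts. After that, we inject the default system prompt. Then we start the conversation messages.

The prompt examples can be found here: V1 (v1.4), V2 (v2, v2.1, v2.2, v2.4) and V2.llama3 (v2.5)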
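To give a feel for the conversion described above, here is a rough sketch that renders a JSON Schema function definition as TypeScript-like text. It is an illustration under stated assumptions, not Functionary's actual converter: the helper names, the type mapping, and the output layout are all hypothetical, and the exact text the model sees is defined by the prompt templates referenced above.

```python
# Assumed mapping from JSON Schema types to TypeScript-like type names.
_TS_TYPES = {"string": "string", "integer": "number", "number": "number", "boolean": "boolean"}


def to_ts_type(schema: dict) -> str:
    """Render a simplified JSON Schema fragment as a TypeScript-like type."""
    kind = schema.get("type")
    if kind == "array":
        return to_ts_type(schema.get("items", {})) + "[]"
    if kind == "object":
        required = set(schema.get("required", []))
        fields = []
        for name, prop in schema.get("properties", {}).items():
            optional = "" if name in required else "?"
            fields.append(f"{name}{optional}: {to_ts_type(prop)}")
        return "{ " + ", ".join(fields) + " }" if fields else "{}"
    return _TS_TYPES.get(kind, "any")


def function_to_text(function: dict) -> str:
    """Render one function definition as a commented TypeScript-like signature."""
    params = to_ts_type(function.get("parameters", {"type": "object"}))
    return f"// {function['description']}\ntype {function['name']} = (_: {params}) => any;"


plan_trip_def = {
    "name": "plan_trip",
    "description": "Plan a trip based on user's interests",
    "parameters": {
        "type": "object",
        "properties": {
            "destination": {"type": "string"},
            "duration": {"type": "integer"},
            "interests": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["destination", "duration", "interests"],
    },
}
print(function_to_text(plan_trip_def))
```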

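We do not change the logit probabilities to conform to a certain schema; the model itself knows how to conform. This lets us use existing tools and caching systems with ease.

We are ranked 2nd on the Berkeley Function-Calling Leaderboard (last updated: 2024-08-11).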

| Model Name | Function Calling Accuracy (Name & Arguments) |
|---|---|
| meetkai/functionary-medium-v3.1 | 88.88% |
| GPT-4-1106-Preview (Prompt) | 88.53% |
| meetkai/functionary-small-v3.2 | 82.82% |
| meetkai/functionary-small-v3.1 | 82.53% |
| FireFunction-v2 (FC) | 78.82.47% |

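We also evaluate our models on ToolSandbox, a benchmark that is much more difficult than the Berkeley Function-Calling Leaderboard. This benchmark includes stateful tool execution, implicit state dependencies between tools, a built-in user simulator supporting on-policy conversational evaluation, and a dynamic evaluation strategy for intermediate and final milestones over an arbitrary trajectory. The authors of this benchmark showed that there is a huge performance gap between open-source models and proprietary models.

In our evaluation results, our models are comparable to the best proprietary models and much better than other open-source models.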

| Model Name | Average Similarity Score |
|---|---|
| GPT-4o-2024-05-13 | 73 |
| Claude-3-Opus-20240229 | 69.2 |
| functionary-medium-v3.1 | 68.87 |
| GPT-3.5-Turbo-0125 | 65.6 |
| GPT-4-0125-Preview | 64.3 |
| Claude-3-Sonnet-20240229 | 63.8 |
| functionary-small-v3.1 | 63.13 |
| Gemini-1.5-Pro-001 | 60.4 |
| functionary-small-v3.2 | 58.56 |
| Claude-3-Haiku-20240307 | 54.9 |
| Gemini-1.0-Pro | 38.1 |
| Hermes-2-Pro-Mistral-7B | 31.4 |
| Mistral-7B-Instruct-v0.3 | 29.8 |
| C4AI-Command-R-v01 | 26.2 |
| Gorilla-Openfunctions-v2 | 25.6 |
| C4AI-Command R+ | 24.7 |

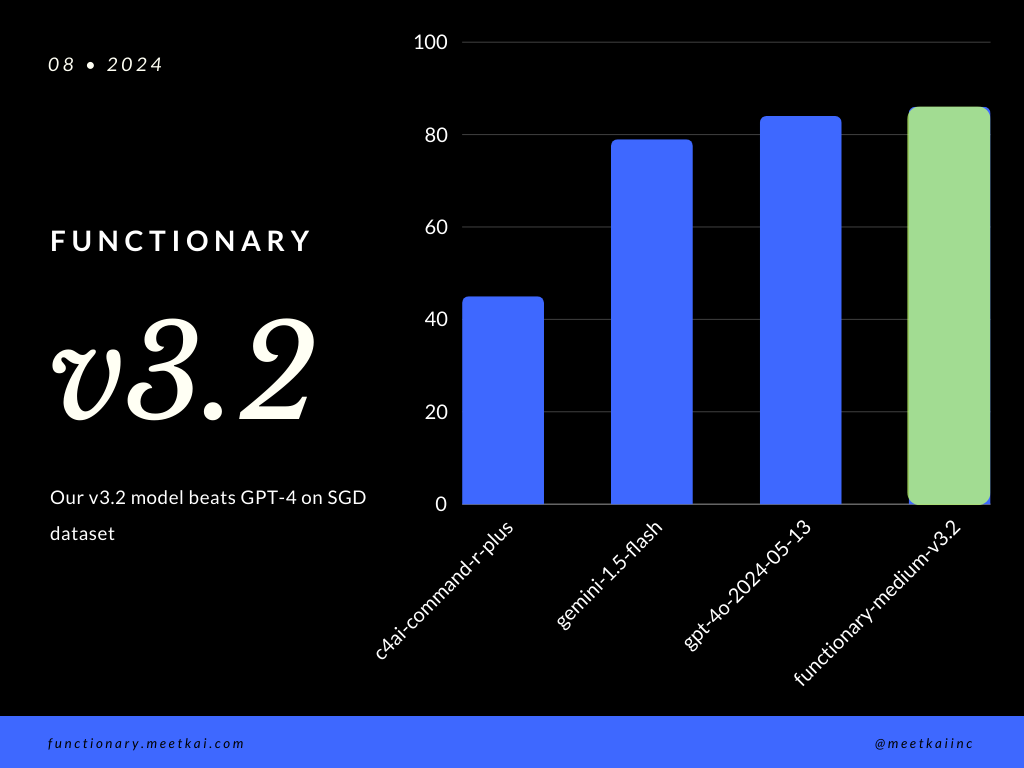

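Evaluation of function call prediction on the SGD dataset. The accuracy metric measures the overall correctness of predicted function calls, including function name prediction and argument extraction.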

| Dataset | Model Name | Function Calling Accuracy (Name & Arguments) |
|---|---|---|
| SGD | meetkai/functionary-medium-v3.1 | 88.11% |
| SGD | GPT-4o-2024-05-13 | 82.75% |
| SGD | Gemini-1.5-Flash | 79.64% |
| SGD | C4AI-Command-R-Plus | 45.66% |
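One way to read a "name and arguments" accuracy metric is as an exact-match rate: a predicted call counts as correct only when both the function name and the decoded arguments equal the reference. The sketch below implements that reading. The exact-match rule is an assumption, since the evaluation procedure is not spelled out here, and the sample calls are toy data rather than items from the SGD dataset.

```python
import json


def call_matches(predicted: dict, expected: dict) -> bool:
    """A call is correct only if the name and the decoded arguments both match."""
    if predicted["name"] != expected["name"]:
        return False
    try:
        return json.loads(predicted["arguments"]) == json.loads(expected["arguments"])
    except json.JSONDecodeError:
        return False


def function_call_accuracy(predictions: list, references: list) -> float:
    """Fraction of examples whose predicted call exactly matches the reference."""
    if not references:
        return 0.0
    correct = sum(call_matches(p, r) for p, r in zip(predictions, references))
    return correct / len(references)


# Toy data for illustration only.
references = [
    {"name": "get_current_weather", "arguments": '{"location": "Hanoi"}'},
    {"name": "plan_trip", "arguments": '{"destination": "Paris", "duration": 7}'},
]
predictions = [
    {"name": "get_current_weather", "arguments": '{ "location": "Hanoi" }'},
    {"name": "plan_trip", "arguments": '{"destination": "Paris", "duration": 5}'},
]
accuracy = function_call_accuracy(predictions, references)
print(accuracy)  # 0.5
```

Comparing decoded JSON rather than raw strings keeps whitespace and key order from affecting the score.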

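See the training README.

Although it is not strictly enforced, grammar sampling can be enabled to enforce type checking and make function execution safer. The main safety checks need to be done in the functions/actions themselves, such as validating the given input or the output that will be returned to the model.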
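As a minimal sketch of doing those checks inside the function itself, the example below validates its input before doing any work and turns failures into a structured error for the model. The `get_current_weather` body, the length limit, and the `safe_tool_result` wrapper are hypothetical choices made for illustration.

```python
import json


def get_current_weather(location: str) -> dict:
    """Hypothetical tool that validates its input before doing any work."""
    if not isinstance(location, str):
        raise TypeError("location must be a string")
    cleaned = location.strip()
    if not cleaned or len(cleaned) > 100:
        raise ValueError("location must be between 1 and 100 characters")
    # Placeholder result; a real implementation would query a weather service.
    return {"location": cleaned, "forecast": "unknown"}


def safe_tool_result(arguments_json: str) -> str:
    """Run the tool and return a JSON string suitable for handing back to the model."""
    try:
        kwargs = json.loads(arguments_json)
        result = get_current_weather(**kwargs)
    except (json.JSONDecodeError, TypeError, ValueError) as exc:
        # Report the failure as data instead of letting bad input crash the caller.
        result = {"error": str(exc)}
    return json.dumps(result)


print(safe_tool_result('{"location": " Istanbul "}'))
print(safe_tool_result('{"location": 5}'))
```

Unexpected argument names also end up in the error branch, because calling the function with an unknown keyword raises `TypeError`.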