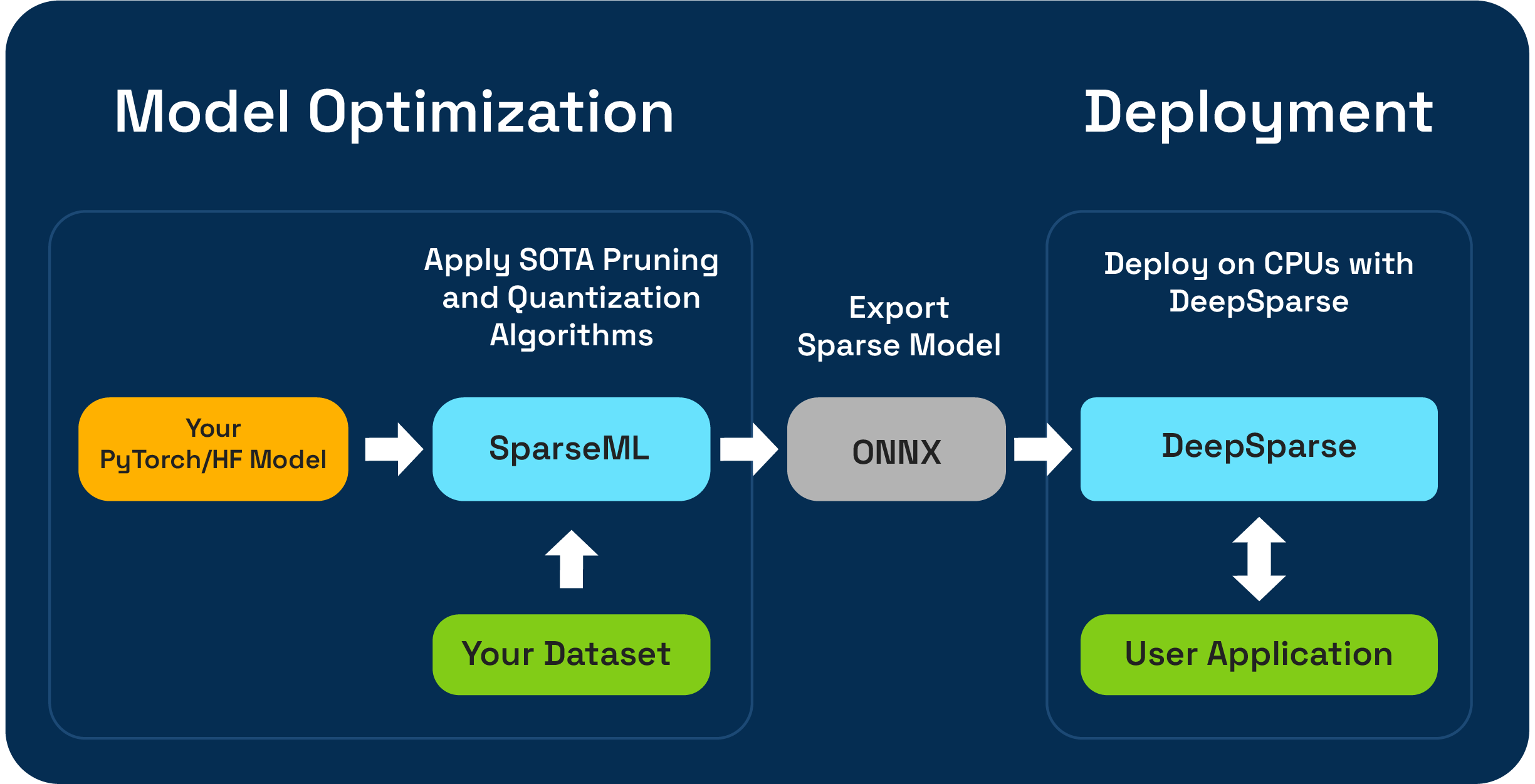

SPARSEML是一种开源模型优化工具包,使您可以使用修剪,量化和蒸馏算法创建推理优化的稀疏模型。然后可以将Sparseml优化的模型导出到ONNX,并使用DeepSparse部署,以在CPU硬件上进行GPU级性能。

神经魔术很高兴能使用新的SparseGPTModfier预览单发LLM压缩工作流程!

要修剪和量化Tinyllama聊天模型,这只是安装依赖项,下载食谱并将其应用于模型的几个步骤:

git clone https://github.com/neuralmagic/sparseml

pip install -e "sparseml[transformers]"

wget https://huggingface.co/neuralmagic/TinyLlama-1.1B-Chat-v0.4-pruned50-quant-ds/raw/main/recipe.yaml

sparseml.transformers.text_generation.oneshot --model_name TinyLlama/TinyLlama-1.1B-Chat-v1.0 --dataset_name open_platypus --recipe recipe.yaml --output_dir ./obcq_deployment --precision float16

src/sparseml/transformers/sparsification/obcq的README具有详细的演练。

Sparseml使您能够以两种方式在数据集上训练的稀疏模型:

稀疏的传输学习使您可以从Sparsezoo(稀疏模型的开源存储库(例如Bert,Yolov5和Resnet-50)中的开源存储库微调预先放置的模型,同时保持稀疏性。这种途径就像您在训练简历和NLP型号中习惯的典型微调一样工作,并且如果您的模型体系结构在Sparsezoo中可用,则非常喜欢。

从头开始稀疏,您可以将最先进的修剪(例如逐步修剪或修剪)和量化(例如量化意识培训)算法应用于任意的pytorch和拥抱的面部模型。该途径需要更多的实验,但允许您创建任何模型的稀疏版本。

该存储库在Python 3.8-3.11和Linux/Debian系统上进行了测试。

建议在虚拟环境中安装以保持系统的顺序。当前支持的ML框架如下: torch>=1.1.0,<=2.0 , tensorflow>=1.8.0,<2.0.0 , tensorflow.keras >= 2.2.0 。

使用PIP安装:

pip install sparseml有关安装的更多信息,例如可选依赖项和要求,请参见此处。

为了启用灵活性,易用性和可重复性,Sparseml使用称为recipes声明界面来指定应由Sparseml应用的与稀疏性相关算法和超参数。

Recipes是YAML文件格式为modifiers列表,该列表编码Sparseml的指令。示例modifiers可以是从设置学习率到编码逐渐修剪算法的超参数的任何东西。 Sparseml系统将recipes解析为每个框架的天然格式,并将修改应用于模型和训练管道。

由于采用了陈述性,基于配方的方法,您可以在现有的Pytorch培训管道中添加Sparseml。 ScheduleModifierManager类负责解析YAML recipes和覆盖标准Pytorch模型和优化对象,并从配方中编码稀疏算法的逻辑。调用manager.modify后,您可以像往常一样使用模型和优化器,因为Sparseml抽象了稀疏算法的复杂性。

工作流如下所示:

model = Model () # model definition

optimizer = Optimizer () # optimizer definition

train_data = TrainData () # train data definition

batch_size = BATCH_SIZE # training batch size

steps_per_epoch = len ( train_data ) // batch_size

from sparseml . pytorch . optim import ScheduledModifierManager

manager = ScheduledModifierManager . from_yaml ( PATH_TO_RECIPE )

optimizer = manager . modify ( model , optimizer , steps_per_epoch )

# typical PyTorch training loop, using your model/optimizer as usual

manager . finalize ( model )Trainer一起使用Sparseml的详细信息。除了代码级API外,Sparseml还通过CLI接口为常见的NLP和CV任务提供了预制的培训管道。 CLI使您可以通过各种公用事业(例如数据集加载和预处理,保存检查点保存,度量报告以及为您处理的日志记录)进行启动培训。这使得在通用训练途径中启动和运行变得容易。

例如,我们可以使用以下启动Yolov5稀疏传输学习运行到VOC数据集(使用Sparsezoo Stubs拉下稀疏模型检查点并转移学习食谱):

sparseml.yolov5.train

--weights zoo:cv/detection/yolov5-s/pytorch/ultralytics/coco/pruned75_quant-none ? recipe_type=transfer_learn

--recipe zoo:cv/detection/yolov5-s/pytorch/ultralytics/coco/pruned75_quant-none ? recipe_type=transfer_learn

--data VOC.yaml

--hyp hyps/hyp.finetune.yaml --cfg yolov5s.yaml --patience 0有关代码库和包含过程的更多信息,请参见Sparseml文档:

官方版本在PYPI上托管

此外,可以通过GitHub释放找到更多信息。

该项目是根据Apache许可证2.0版获得许可的。

我们感谢对代码,示例,集成和文档以及错误报告和功能请求的贡献!了解这里的方式。

对于用户帮助或有关Sparseml的问题,请注册或登录我们的神经魔术社区。我们正在成员成长,很高兴见到您。错误,功能请求或其他问题也可以发布到我们的GitHub问题队列中。

您可以通过订阅神经魔术社区来获得最新的新闻,网络研讨会和活动邀请,研究论文以及其他ML性能。

有关神经魔术的更多一般性问题,请填写此表格。

发现此项目在您的研究或其他沟通中有用吗?请考虑引用:

@InProceedings {

pmlr-v119-kurtz20a,

title = { Inducing and Exploiting Activation Sparsity for Fast Inference on Deep Neural Networks } ,

author = { Kurtz, Mark and Kopinsky, Justin and Gelashvili, Rati and Matveev, Alexander and Carr, John and Goin, Michael and Leiserson, William and Moore, Sage and Nell, Bill and Shavit, Nir and Alistarh, Dan } ,

booktitle = { Proceedings of the 37th International Conference on Machine Learning } ,

pages = { 5533--5543 } ,

year = { 2020 } ,

editor = { Hal Daumé III and Aarti Singh } ,

volume = { 119 } ,

series = { Proceedings of Machine Learning Research } ,

address = { Virtual } ,

month = { 13--18 Jul } ,

publisher = { PMLR } ,

pdf = { http://proceedings.mlr.press/v119/kurtz20a/kurtz20a.pdf } ,

url = { http://proceedings.mlr.press/v119/kurtz20a.html } ,

abstract = {Optimizing convolutional neural networks for fast inference has recently become an extremely active area of research. One of the go-to solutions in this context is weight pruning, which aims to reduce computational and memory footprint by removing large subsets of the connections in a neural network. Surprisingly, much less attention has been given to exploiting sparsity in the activation maps, which tend to be naturally sparse in many settings thanks to the structure of rectified linear (ReLU) activation functions. In this paper, we present an in-depth analysis of methods for maximizing the sparsity of the activations in a trained neural network, and show that, when coupled with an efficient sparse-input convolution algorithm, we can leverage this sparsity for significant performance gains. To induce highly sparse activation maps without accuracy loss, we introduce a new regularization technique, coupled with a new threshold-based sparsification method based on a parameterized activation function called Forced-Activation-Threshold Rectified Linear Unit (FATReLU). We examine the impact of our methods on popular image classification models, showing that most architectures can adapt to significantly sparser activation maps without any accuracy loss. Our second contribution is showing that these these compression gains can be translated into inference speedups: we provide a new algorithm to enable fast convolution operations over networks with sparse activations, and show that it can enable significant speedups for end-to-end inference on a range of popular models on the large-scale ImageNet image classification task on modern Intel CPUs, with little or no retraining cost.}

} @misc {

singh2020woodfisher,

title = { WoodFisher: Efficient Second-Order Approximation for Neural Network Compression } ,

author = { Sidak Pal Singh and Dan Alistarh } ,

year = { 2020 } ,

eprint = { 2004.14340 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.LG }

}