Alibaba's DAMO Academy recently released Babel, a large multilingual language model. It aims to break down global language barriers and enable AI to understand and communicate in the languages spoken by more than 90% of the world's population. The release marks an important step for multilingual language processing.

Most current large language models focus on resource-rich languages such as English, French, and German, while languages with large user bases, such as Hindi, Bengali, and Urdu, are often overlooked. This mirrors the way speakers of less widely used languages are often marginalized at international conferences.

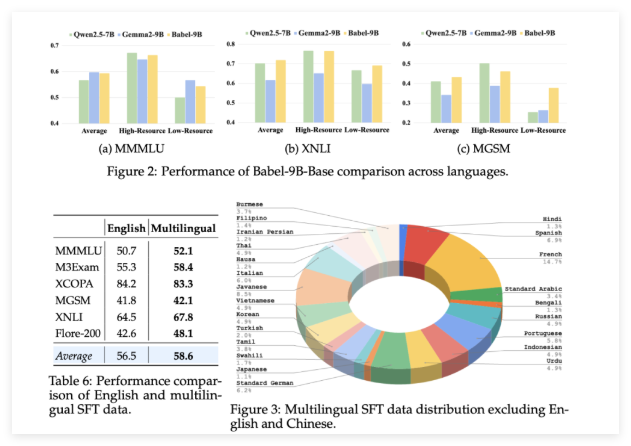

Babel aims to change this. The model supports the world's 25 most widely spoken languages, which together cover more than 90% of the global population. Babel also gives particular attention to languages such as Swahili, Javanese, and Burmese, which are rarely covered by open-source LLMs. This should bring more accessible, higher-quality AI language services to the billions of people who speak these languages.

Unlike conventional continual pre-training, Babel uses a layer expansion technique to increase the model's capabilities. This approach can be understood as adding "knowledge capacity" on top of the original model in a more structured way, improving performance while keeping computation efficient. The research team released two models: Babel-9B, optimized for efficient inference and fine-tuning on a single GPU, and Babel-83B, an 83-billion-parameter flagship model intended to set a new benchmark for open-source multilingual LLMs.
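The article does not describe how Babel's layer expansion is implemented. As a rough, hypothetical illustration of the general idea of depth expansion, the sketch below inserts copies of selected existing layers into the layer stack, so each new layer starts from weights the model already has. The helper `expand_layers` and the dict-based "layers" are assumptions made for illustration; this is not Babel's actual method or code.

```python
import copy
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def expand_layers(layers: Sequence[T], insert_after: Sequence[int]) -> List[T]:
    """Hypothetical helper: return a deeper layer stack in which an independent
    copy of layer i is inserted directly after layer i, for each i in insert_after.

    This only illustrates generic depth expansion; it is not Babel's implementation.
    """
    targets = set(insert_after)
    for i in targets:
        if not 0 <= i < len(layers):
            raise IndexError(f"layer index {i} out of range")

    expanded: List[T] = []
    for i, layer in enumerate(layers):
        # Keep every original layer in its original order.
        expanded.append(layer)
        if i in targets:
            # The new layer is initialized from the original layer's weights
            # (a deep copy, so later training can update it independently).
            expanded.append(copy.deepcopy(layer))
    return expanded


if __name__ == "__main__":
    # Toy "layers": dicts standing in for transformer blocks and their weights.
    layers = [{"name": f"block{i}", "w": [float(i)]} for i in range(4)]
    deeper = expand_layers(layers, insert_after=[1, 3])
    print([layer["name"] for layer in deeper])
```

In this sketch the original layers are left untouched, so the expanded model starts from what the base model already knows; in practice the deeper model would then be trained further. How Babel chooses where to insert layers and how it initializes them is not covered in this article.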

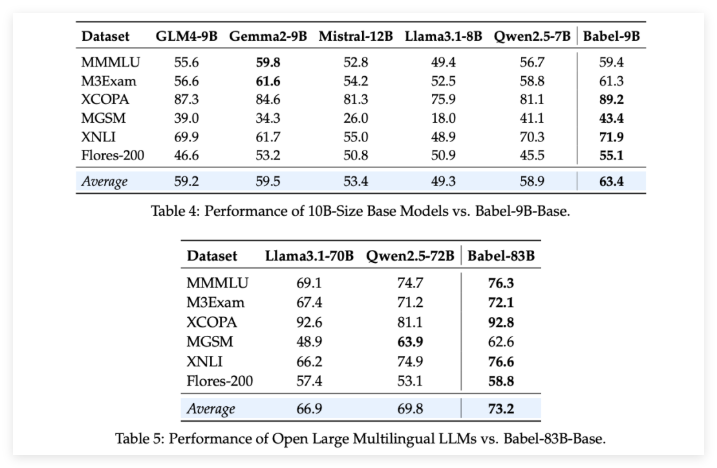

To evaluate Babel, the research team ran rigorous tests on multiple multilingual tasks. The results are promising: both the 9-billion-parameter Babel-9B and the 83-billion-parameter Babel-83B outperform other open-source models of similar size on multiple benchmarks. Babel performed well on tasks covering world knowledge (MMMLU, M3Exam), reasoning (MGSM, XCOPA), understanding (XNLI), and translation (Flores-200).

Notably, on low-resource languages, Babel's accuracy is 5% to 10% higher than that of previous multilingual LLMs. This suggests that Babel's broader language coverage does not come at the expense of performance in individual languages.

After supervised fine-tuning (SFT) on more than one million conversation samples, the chat versions, Babel-9B-Chat and Babel-83B-Chat, show strong conversational abilities, with performance comparable to some leading commercial AI models. For example, Babel-83B-Chat is competitive with GPT-4o on certain tasks. This brings new momentum to the open-source community and shows that open-source models can also lead in multilingual capability.

Project: https://babel-llm.github.io/babel-llm/

GitHub: https://github.com/babel-llm/babel-llm