Toucan is an open-source dialogue language model with 7 billion parameters, primarily supporting Chinese, built on Meta's LLaMA (Large Language Model Meta AI) architecture. By combining model quantization and sparsification techniques, it is intended to support on-device (edge) inference in the future. The logo was designed with the free logo design website https://app.logo.com/

This project provides fine-tuning training code, Gradio-based inference code, 4-bit quantization code, model merging code, and more. The model weights can be downloaded from the provided links and then merged for use. In our evaluation, Toucan-7B performs slightly better than ChatGLM-6B, and the 4-bit quantized model is comparable to ChatGLM-6B.

This model was developed using open-source code and open-source datasets. This project assumes no risk or responsibility for data security issues or public-opinion risks arising from the open-source model and code, nor for any risk or responsibility arising from the misleading use, abuse, dissemination, or improper use of any model.

The objective evaluation scores are mainly computed with the open-source evaluation code at https://github.com/LianjiaTech/BELLE/tree/main/eval

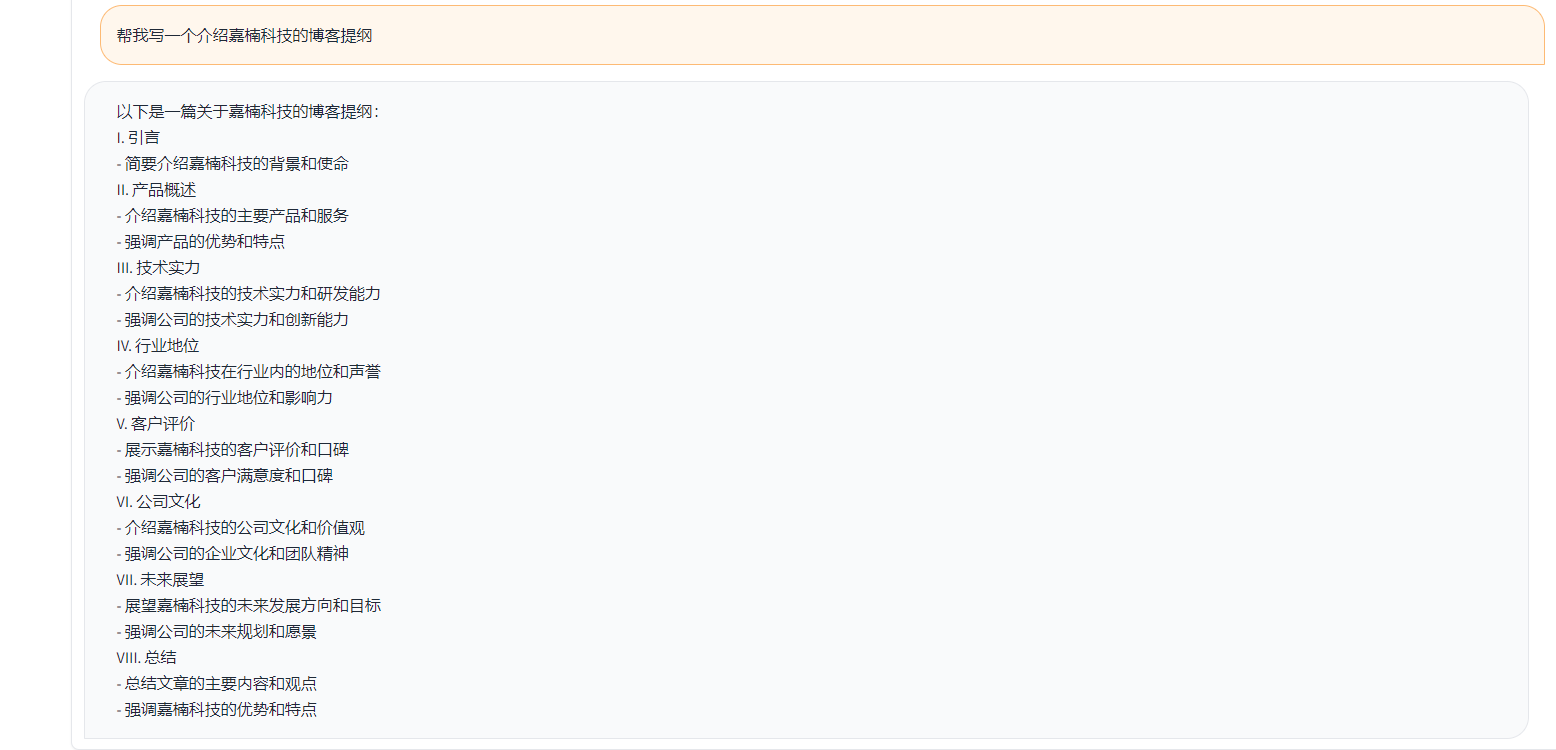

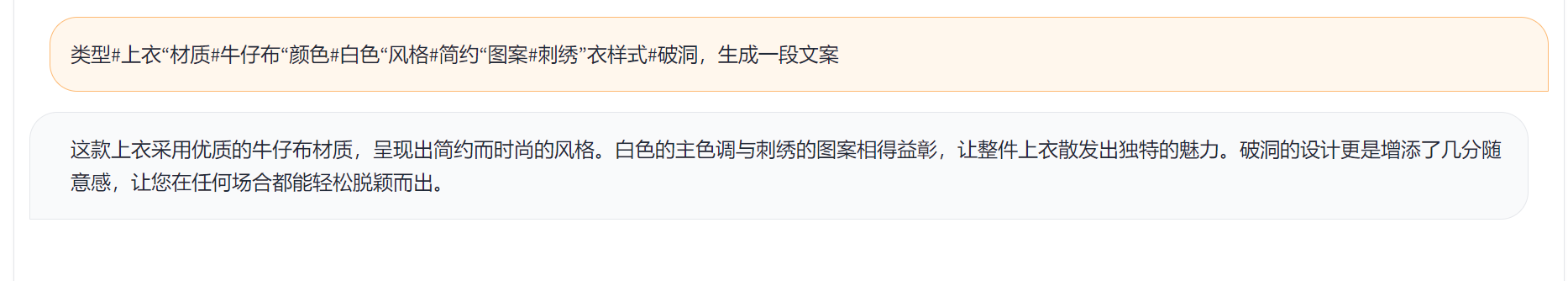

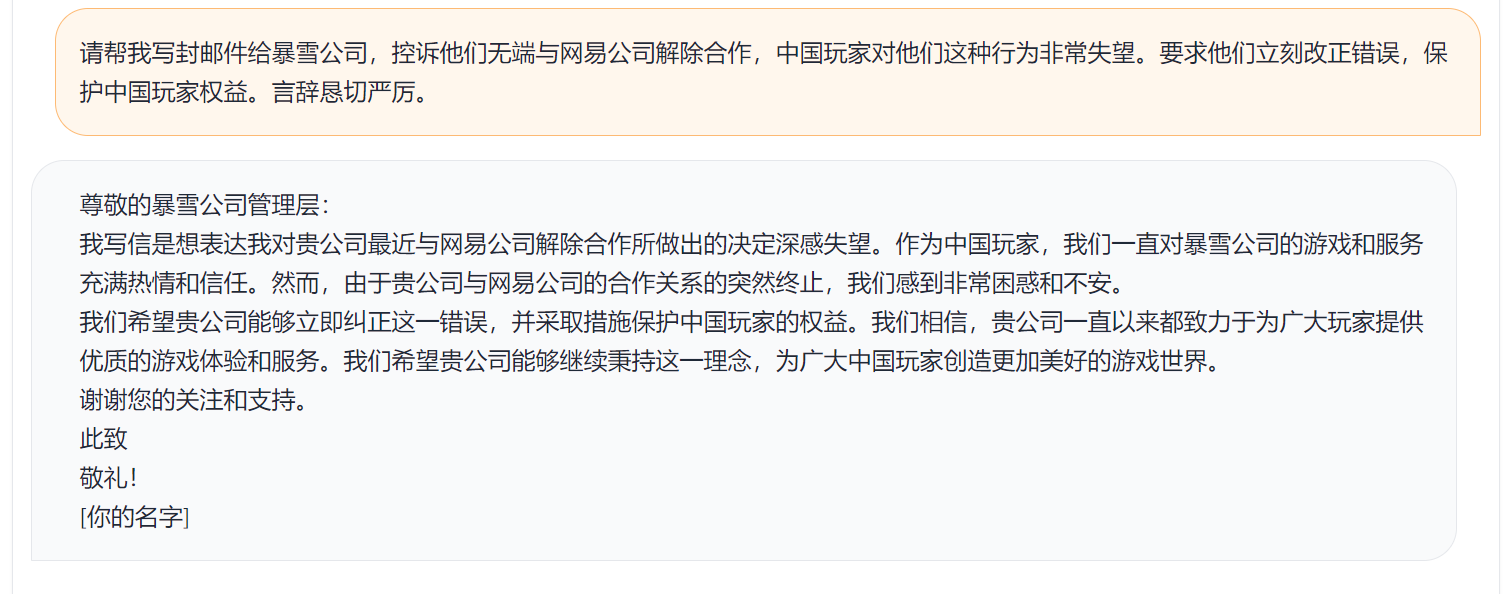

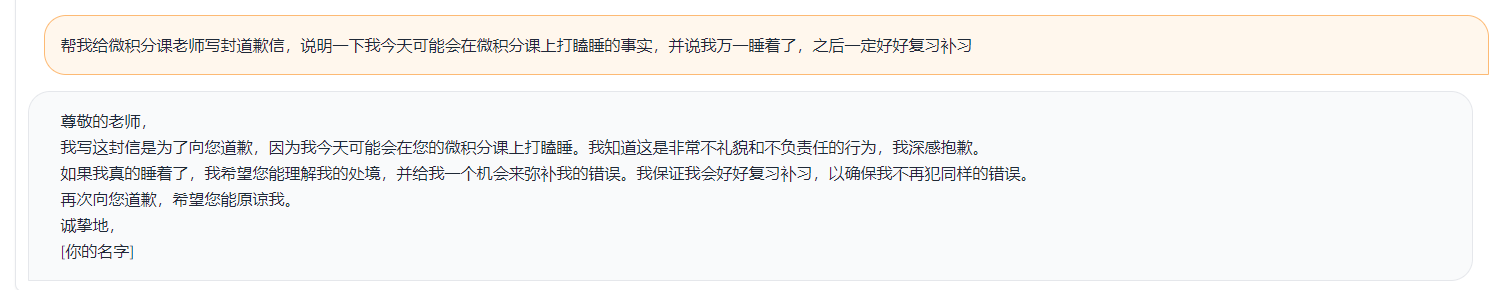

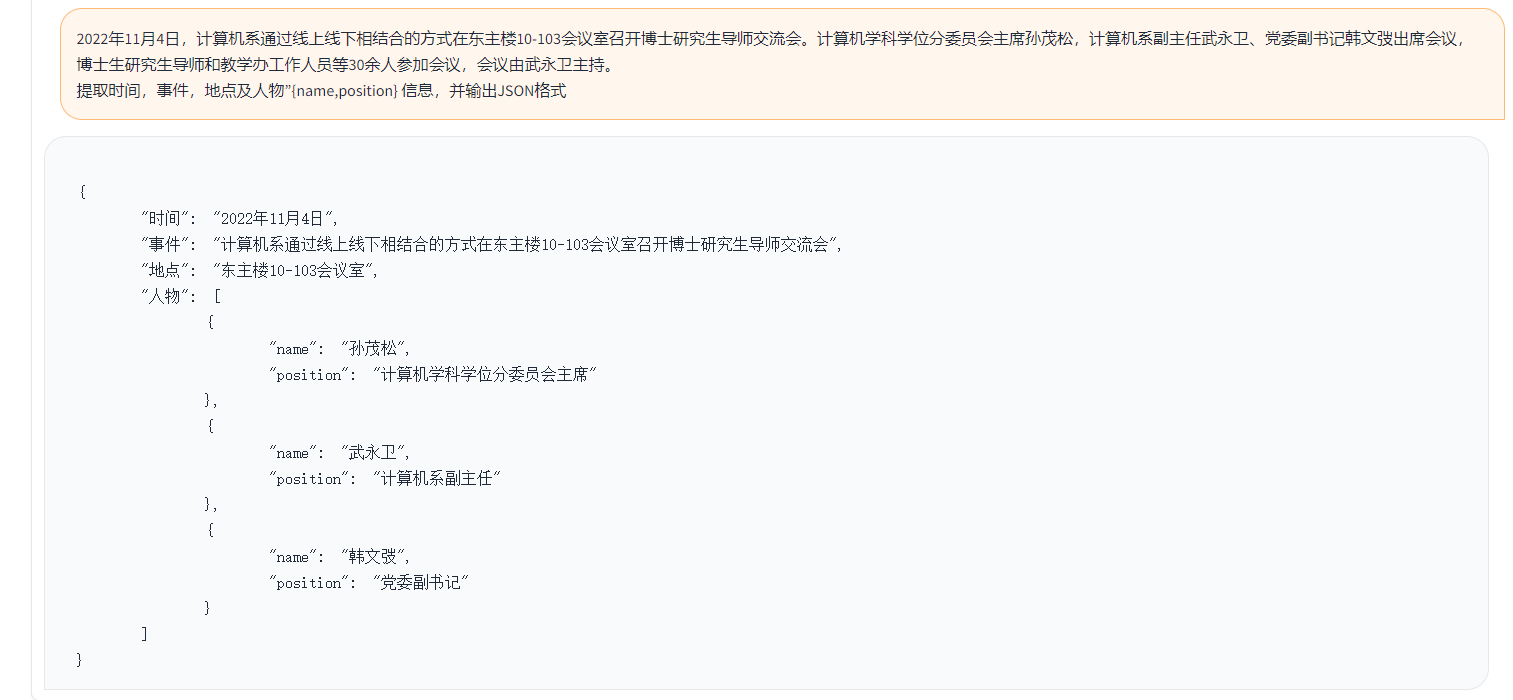

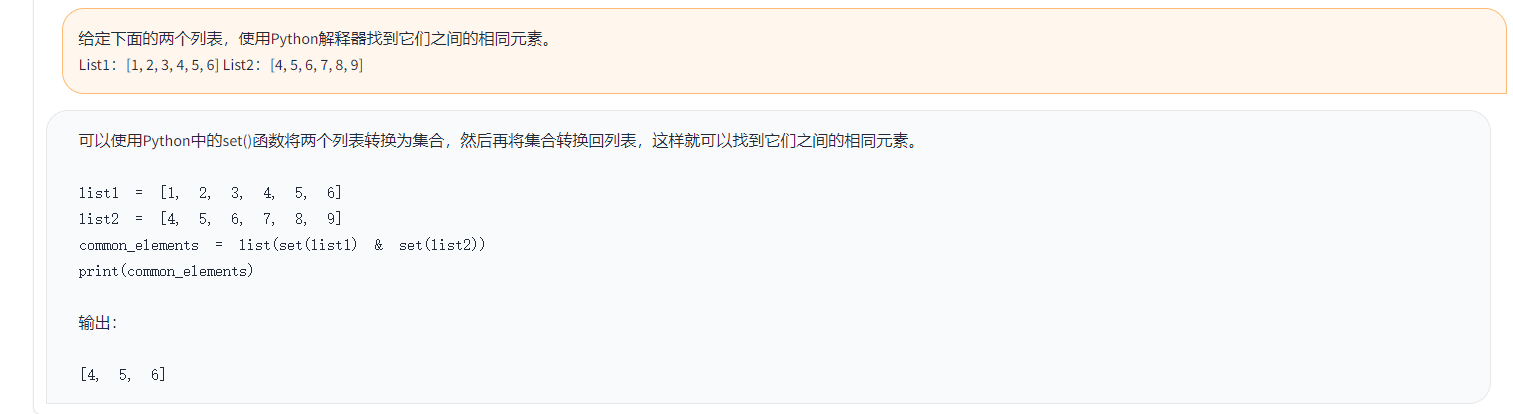

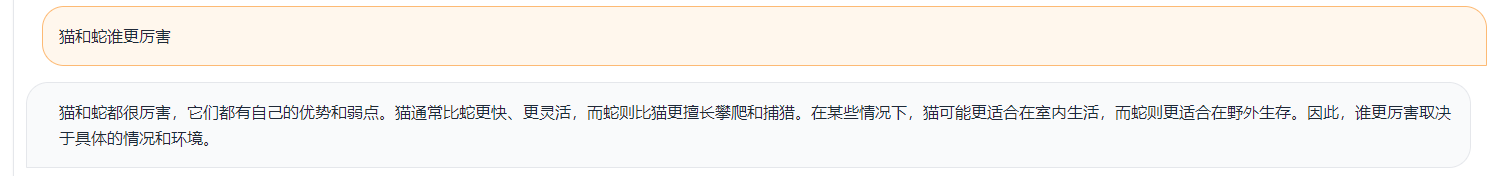

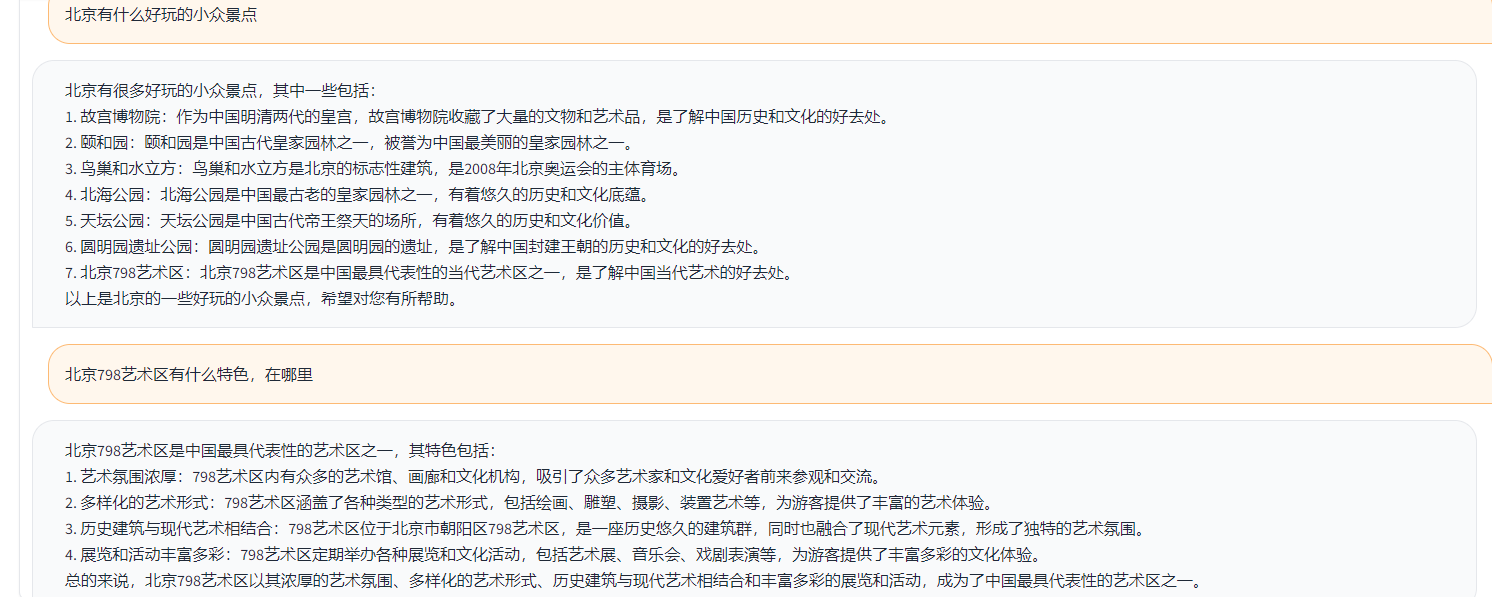

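An example is as follows:

```json
{
  "ChatGPT prompt": "You are a computer science teacher. Given a programming problem, judge whether the model's answer solves it, with a score between 0 and 1. The standard answer is for reference only. The code steps in the model's answer may differ from the standard answer, but they must be correct. Please output the score in the form 'Score:'.",
  "Problem prompt": "The specific problem, e.g. Please write a Python program that generates five different random numbers.",
  "class": "One of 10 classes, e.g. code",
  "std_answer": "The standard answer provided by the dataset",
  "model_answer": "The answer generated by the model under test (e.g. ChatGLM)",
  "ChatGPT response": "Score: 0.5, reason..."
}
```

In the above example, the standard answer provided in the dataset is as follows.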

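Standard answer: To generate five different random numbers, you can use the randint() function from Python's random module to generate random integers, then check for duplicates until you have five different numbers.

Here is a Python program that generates five different random numbers:

```python
import random

# Define an empty list to hold the random numbers
nums = []

# Keep generating random numbers until there are five different ones
while len(nums) < 5:
    a = random.randint(1, 10)
    if a not in nums:
        nums.append(a)

# Print the generated random numbers
print(nums)
```

The program generates random numbers between 1 and 10, with output similar to:

```
[3, 2, 8, 10, 9]
```

The program first imports the random module and then defines an empty list nums. It uses a while loop that generates a new random number a on each iteration and checks whether it already exists in nums. If it does not, a is appended to nums.

When the loop ends, nums contains five different random numbers, and the program prints them to the screen with print().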

For the same problem, Toucan generated the following answer:

```python
import random

random_numbers = []

for i in range(5):
    random_numbers.append(random.randint(1, 100))

print(random_numbers)
```

ChatGPT scored this answer as follows:

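Score: 0.5

Reason:

The code in the model's answer can generate five different random numbers, but it does not check for duplicates, so it may produce repeated numbers. The code in the standard answer uses a while loop and an if statement to check for duplicates, which guarantees that the generated random numbers are not repeated. Therefore, the model's answer only receives 0.5.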
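The grading prompt asks ChatGPT to reply in the form "Score: x" ("得分:" in the original Chinese prompt), so the numeric score has to be extracted from free text before scores can be averaged. The following is a minimal sketch of that step; the regular expression, the accepted labels, and clamping to [0, 1] are assumptions for illustration, not necessarily what the BELLE evaluation code does.

```python
import re
from typing import Optional

# Accepts "得分: 0.5", "得分：0.5" (full-width colon) or "Score: 0.5".
# The set of accepted labels is an assumption for illustration.
_SCORE_RE = re.compile(r"(?:得分|Score)\s*[:：]\s*([0-9]*\.?[0-9]+)", re.IGNORECASE)


def parse_score(response: str) -> Optional[float]:
    """Return the first score found in a grader response, clamped to [0, 1].

    Returns None when no score is found, so the caller can decide whether
    to re-query the grader or drop the sample.
    """
    match = _SCORE_RE.search(response)
    if match is None:
        return None
    score = float(match.group(1))
    return min(max(score, 0.0), 1.0)
```

For the response above, parse_score("得分: 0.5, 理由...") returns 0.5. Returning None instead of a default score keeps unparseable responses from silently lowering a model's average.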

Following this evaluation procedure, we tested nearly 1,000 cases, summarized by category below, and compared how different models perform in each category. Toucan-7B performs slightly better than ChatGLM-6B but is still weaker than ChatGPT.

| Model | Average | math | code | classification | extract | open qa | closed qa | generation | brainstorming | rewrite | summarization | Average (excluding math and code) | Comments |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Phoenix-inst-chat-7b | 0.5017 | 0.275 | 0.675 | 0.329 | 0.19 | 0.54 | 0.35 | 0.825 | 0.81 | 0.8 | 0.27 | 0.514 | num_beams=4, do_sample=False, min_new_tokens=1, max_new_tokens=512 |
| alpaca-7b | 0.4155 | 0.0455 | 0.535 | 0.52 | 0.2915 | 0.1962 | 0.5146 | 0.475 | 0.3584 | 0.8163 | 0.4026 | 0.4468 | |
| alpaca-7b-plus | 0.4894 | 0.1583 | 0.4 | 0.493 | 0.1278 | 0.3524 | 0.4214 | 0.9125 | 0.8571 | 0.8561 | 0.3158 | 0.542 | |
| ChatGLM | 0.62 | 0.27 | 0.536 | 0.57 | 0.48 | 0.37 | 0.6 | 0.93 | 0.9 | 0.87 | 0.64 | 0.67 | |
| Toucan-7B | 0.6408 | 0.17 | 0.73 | 0.7 | 0.426 | 0.48 | 0.63 | 0.92 | 0.89 | 0.93 | 0.52 | 0.6886 | |
| Toucan-7B-4bit | 0.6225 | 0.1492 | 0.6826 | 0.6862 | 0.4139 | 0.4716 | 0.5711 | 0.9129 | 0.88 | 0.9088 | 0.5487 | 0.6741 | |
| ChatGPT | 0.824 | 0.875 | 0.875 | 0.813 | 0.767 | 0.69 | 0.751 | 0.971 | 0.944 | 0.861 | 0.795 | 0.824 | |

Phoenix-inst-chat-7b: https://github.com/FreedomIntelligence/LLMZoo

Alpaca-7b/Alpaca-7b-plus: https://github.com/ymcui/Chinese-LLaMA-Alpaca

ChatGLM: https://github.com/THUDM/ChatGLM-6B

As the table above shows, Toucan-7B achieves slightly better results than ChatGLM-6B, and the 4-bit quantized model is comparable to ChatGLM-6B.
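To make the two summary columns concrete, the sketch below computes plain unweighted means over the ten category scores of Toucan-7B, copied from the table. It yields 0.6396 and 0.6870, while the table reports 0.6408 and 0.6886, so the reported averages were presumably computed somewhat differently (for example, per test case rather than per category). Treat this only as an illustration of how the columns relate, not as the project's aggregation code.

```python
from statistics import mean

# Per-category scores for Toucan-7B, copied from the table above.
CATEGORY_SCORES = {
    "math": 0.17,
    "code": 0.73,
    "classification": 0.7,
    "extract": 0.426,
    "open qa": 0.48,
    "closed qa": 0.63,
    "generation": 0.92,
    "brainstorming": 0.89,
    "rewrite": 0.93,
    "summarization": 0.52,
}


def unweighted_averages(scores, excluded=("math", "code")):
    """Return (mean over all categories, mean over the non-excluded ones)."""
    overall = mean(scores.values())
    rest = mean(value for name, value in scores.items() if name not in excluded)
    return overall, rest


overall, without_math_code = unweighted_averages(CATEGORY_SCORES)
print(f"{overall:.4f} {without_math_code:.4f}")  # 0.6396 0.6870
```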

You can create an environment with conda and then install the required packages with pip. The required packages are listed in train/requirements.txt; the Python version is 3.10.

```bash
conda create -n Toucan python=3.10
conda activate Toucan
```

Then run the following command to install the dependencies (installing PyTorch first is recommended):

```bash
pip install -r train/requirements.txt
```
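After installation, a quick sanity check can confirm that the interpreter is Python 3.10 and that the main packages import. The package list below is a hypothetical subset (PyTorch, Transformers for the Hugging Face format, DeepSpeed, and Gradio, all mentioned in this README); train/requirements.txt remains the authoritative list.

```python
import importlib.util
import sys

# Python version used by the project.
EXPECTED_PYTHON = (3, 10)

# Hypothetical subset of train/requirements.txt; consult the file for the real list.
KEY_PACKAGES = ("torch", "transformers", "deepspeed", "gradio")


def check_environment(packages=KEY_PACKAGES):
    """Return (python_ok, missing_packages) for the current interpreter."""
    python_ok = sys.version_info[:2] == EXPECTED_PYTHON
    # find_spec returns None for top-level modules that are not installed.
    missing = [name for name in packages if importlib.util.find_spec(name) is None]
    return python_ok, missing


if __name__ == "__main__":
    ok, missing = check_environment()
    print("Python 3.10:", "yes" if ok else f"no ({sys.version.split()[0]})")
    print("Missing packages:", ", ".join(missing) or "none")
```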

Training mainly uses the following open-source data:

- alpaca_gpt4_data.json
- alpaca_gpt4_data_zh.json
- BELLE data: belle_cn

Of the BELLE data, a subset of less than half can be selected as appropriate.
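The data mixture described above can be sketched as follows: keep all Alpaca-GPT4 records and add a random subset of fewer than half of the BELLE records. This assumes every file is a JSON list of Alpaca-style records (instruction/input/output), with the BELLE data already converted to that format; the 0.4 fraction and the fixed seed are hypothetical defaults, not values from the project.

```python
import json
import random
from pathlib import Path


def load_records(path):
    """Load a JSON list of Alpaca-style instruction records."""
    with Path(path).open(encoding="utf-8") as f:
        return json.load(f)


def mix_training_data(alpaca, alpaca_zh, belle, belle_fraction=0.4, seed=42):
    """Combine all Alpaca-GPT4 records with a random subset of BELLE records.

    belle_fraction is a hypothetical default; the text only states that
    less than half of the BELLE data is selected.
    """
    if not 0.0 <= belle_fraction < 0.5:
        raise ValueError("belle_fraction must be in [0, 0.5)")
    rng = random.Random(seed)
    belle_subset = rng.sample(belle, k=int(len(belle) * belle_fraction))
    mixed = list(alpaca) + list(alpaca_zh) + belle_subset
    rng.shuffle(mixed)  # avoid training on one source at a time
    return mixed
```

For example, load the three files with load_records, pass them to mix_training_data, and save the result with json.dump(..., ensure_ascii=False) so the Chinese text stays readable in the output file that --data_path points to.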

The original LLaMA model has a 32K vocabulary and was trained mainly on English, so its ability to understand and generate Chinese is limited. Chinese-LLaMA-Alpaca expanded the original LLaMA vocabulary with Chinese tokens and performed further pre-training on a Chinese corpus. Because our own pre-training was limited by available resources, we built on the Chinese-LLaMA-Alpaca pre-trained model.

The model is trained with full-parameter fine-tuning plus DeepSpeed. The training launch script is train/run.sh; adjust its parameters as needed.

```bash
bash train/run.sh
```

The core training command is:

```bash
torchrun --nproc_per_node=4 --master_port=8080 train.py \
    --model_name_or_path llama_to_hf_path \
    --data_path data_path \
    --bf16 True \
    --output_dir model_save_path \
    --num_train_epochs 2 \
    --per_device_train_batch_size 2 \
    --per_device_eval_batch_size 2 \
    --gradient_accumulation_steps 4 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 2000 \
    --save_total_limit 2 \
    --learning_rate 8e-6 \
    --weight_decay 0. \
    --warmup_ratio 0.03 \
    --deepspeed "./configs/deepspeed_stage3_param.json" \
    --tf32 True
```

- `--model_name_or_path`: the pre-trained model, a LLaMA model in Hugging Face format
- `--data_path`: the training data
- `--output_dir`: the path where the training logs and the model are saved

1. For single-GPU training, set nproc_per_node to 1.

2. If your environment does not support DeepSpeed, remove the --deepspeed argument.
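The arguments above determine the effective (global) batch size: with nproc_per_node=4, per_device_train_batch_size=2 and gradient_accumulation_steps=4, each optimizer step sees 2 × 4 × 4 = 32 samples. Switching to a single card drops this to 8 unless gradient_accumulation_steps is raised to 16. The sketch below works through that arithmetic and approximates the warmup length implied by warmup_ratio=0.03; the step counting mirrors the Hugging Face Trainer only approximately, and the 100,000-sample dataset size is hypothetical.

```python
import math


def global_batch_size(per_device_batch, grad_accum_steps, num_gpus):
    """Samples consumed per optimizer step across all GPUs."""
    return per_device_batch * grad_accum_steps * num_gpus


def approx_schedule(num_samples, per_device_batch, grad_accum_steps, num_gpus,
                    epochs, warmup_ratio):
    """Approximate (total optimizer steps, warmup steps) for a Trainer-style loop.

    Assumes the dataloader does not drop the last partial batch; exact
    counting may differ slightly between transformers versions.
    """
    batches_per_device = math.ceil(num_samples / (per_device_batch * num_gpus))
    steps_per_epoch = max(batches_per_device // grad_accum_steps, 1)
    total_steps = steps_per_epoch * epochs
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    return total_steps, warmup_steps


# Values from the command above: 4 GPUs, batch 2 per device, 4 accumulation steps.
print(global_batch_size(2, 4, 4))  # 32
# Single GPU (nproc_per_node=1) with accumulation raised to 16 keeps 32.
print(global_batch_size(2, 16, 1))  # 32
# Hypothetical 100,000-sample dataset, 2 epochs, warmup_ratio=0.03.
print(approx_schedule(100_000, 2, 4, 4, epochs=2, warmup_ratio=0.03))  # (6250, 188)
```

Keeping the global batch size constant when changing the number of GPUs means the learning rate of 8e-6 and the warmup ratio keep roughly the same meaning across setups.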

These experiments were run on NVIDIA GeForce RTX 3090 GPUs; using the DeepSpeed configuration effectively avoids out-of-memory (OOM) errors.
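The contents of configs/deepspeed_stage3_param.json are not reproduced here. Its name suggests ZeRO stage 3 with parameter offloading, so the following is an illustrative assumption of what such a configuration can look like when used with the Hugging Face Trainer, not the project's actual file. The "auto" values are filled in by the Trainer from the command-line arguments.

```python
import json

# Illustrative ZeRO stage-3 configuration; NOT the repository's
# configs/deepspeed_stage3_param.json. "auto" values are resolved by the
# Hugging Face Trainer from its command-line arguments.
ZERO3_CONFIG = {
    "bf16": {"enabled": "auto"},
    "zero_optimization": {
        "stage": 3,
        # Offloading parameters and optimizer states to CPU RAM trades
        # speed for GPU memory, which helps on 24 GB cards like the RTX 3090.
        "offload_param": {"device": "cpu", "pin_memory": True},
        "offload_optimizer": {"device": "cpu", "pin_memory": True},
        "overlap_comm": True,
        "contiguous_gradients": True,
        # Gather the sharded weights into one 16-bit checkpoint when saving.
        "stage3_gather_16bit_weights_on_model_save": True,
    },
    "gradient_accumulation_steps": "auto",
    "gradient_clipping": "auto",
    "train_batch_size": "auto",
    "train_micro_batch_size_per_gpu": "auto",
}


def write_config(path="ds_zero3_example.json"):
    """Write the example configuration to a JSON file and return its path."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(ZERO3_CONFIG, f, indent=2)
    return path
```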

To start the demo, run:

```bash
python scripts/demo.py
```

In consideration of the LLaMA model license, we open-source the trained delta weights. You can use the following command to recover the full model weights from the original LLaMA weights:

```bash
python scripts/apply_delta.py --base /path_to_llama/llama-7b-hf --target ./save_path/toucan-7b --delta /path_to_delta/toucan-7b-delta/
```

The diff checkpoint (diff-ckpt) can be downloaded here on OneDrive, or here on Baidu Netdisk.
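Conceptually, recovering weights from a delta means adding the released difference back onto the base weights, parameter by parameter. The sketch below assumes an element-wise delta with identical parameter names and shapes, as in similar LLaMA delta releases; plain lists of floats stand in for PyTorch state dicts. How scripts/apply_delta.py actually handles tensors whose shapes change after vocabulary expansion (the embedding and output layers) is not covered here.

```python
def apply_delta(base, delta):
    """Recover target weights as base + delta, parameter by parameter.

    `base` and `delta` map parameter names to flat lists of floats, standing
    in for PyTorch state dicts. Assumes an element-wise delta with matching
    names and shapes; this is a sketch, not scripts/apply_delta.py.
    """
    if set(base) != set(delta):
        raise KeyError("base and delta must contain the same parameter names")
    target = {}
    for name, delta_values in delta.items():
        base_values = base[name]
        if len(base_values) != len(delta_values):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        target[name] = [b + d for b, d in zip(base_values, delta_values)]
    return target
```

With PyTorch state dicts, the inner loop body would reduce to a tensor addition; failing loudly on mismatched names or shapes is preferable to silently producing a corrupted checkpoint.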

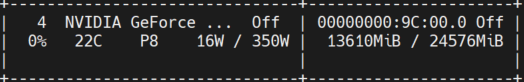

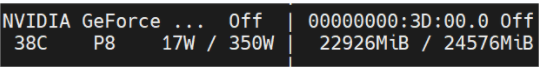

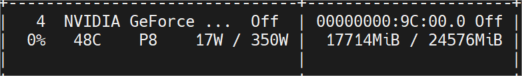

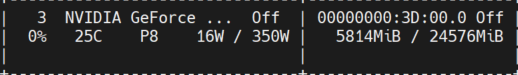

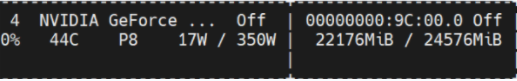

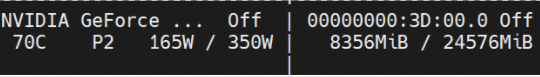

The figures below show the GPU memory usage measured after multiple rounds of conversation; all inference tests were run on an NVIDIA GeForce RTX 3090. The 4-bit model substantially reduces memory usage.

toucan-16bit: initial usage; token length 1024 with num_beams=4 (token length 2048 with num_beams=4 runs out of memory); token length 2048 with num_beams=1.

toucan-4bit: initial usage; token length 2048 with num_beams=4; token length 2048 with num_beams=1.
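A back-of-the-envelope sketch shows why quantization helps: the weights alone take roughly parameters × bits / 8 bytes, and beam search multiplies the key/value cache by the number of beams. This assumes about 7 billion parameters, the LLaMA-7B shape (32 layers, hidden size 4096), and a KV cache kept in 16-bit precision for both models; it ignores activations, quantization metadata, and framework overhead, so measured usage will be higher.

```python
GIB = 1024 ** 3


def weight_bytes(num_params, bits_per_weight):
    """Memory for the model weights alone (no quantization metadata)."""
    return num_params * bits_per_weight / 8


def kv_cache_bytes(num_layers, hidden_size, num_tokens, num_beams, bytes_per_value=2):
    """Memory for cached keys and values: one K and one V vector per layer per token."""
    return 2 * num_layers * hidden_size * num_tokens * num_beams * bytes_per_value


# Assumed LLaMA-7B shape: 32 layers, hidden size 4096, ~7e9 parameters.
for label, bits in (("16-bit", 16), ("4-bit", 4)):
    weights = weight_bytes(7e9, bits) / GIB
    cache = kv_cache_bytes(32, 4096, num_tokens=2048, num_beams=4) / GIB
    print(f"{label}: weights ~{weights:.1f} GiB + KV cache ~{cache:.1f} GiB")
```

Under these assumptions the weights drop from about 13 GiB to about 3.3 GiB, while the KV cache for 2048 tokens with 4 beams stays at about 4 GiB in both cases. In a multi-round conversation, num_tokens covers the whole accumulated history, which is why memory grows across rounds.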

A simple demo is shown in the figure below.

The demo is based on the implementation in ChatGLM.