在ACL 2019中發布的論文“與空白:關係學習的分佈相似性相匹配”的模型的Pytorch實施。

注意:這不是該論文的正式回購。

基於本文的方法論,此處實施的其他關係提取的其他模型:

有關實施的更多概念詳細信息,請參閱https://towardsdatascience.com/bert-s-for-relation-traction-nlp-2c7c3ab487c4

如果您喜歡我的工作,請考慮通過單擊頂部的讚助商按鈕來贊助。

要求:Python(3.8+)

python3 -m pip install -r requirements.txt

python3 -m spacy download en_core_web_lg預先訓練的BERT模型(Albert,Bert)由HuggingFace.co(https://huggingface.co)提供

https://github.com/dmis-lab/biobert提供的預培訓的生物模型

要使用biobert(biobert_v1.1_pubmed),請從此處下載並解開該模型。

在下面的參數中運行main_pretraining.py。預培訓數據可以是任何.txt連續文本文件。

我們使用Spacy NLP從文本中獲取成對實體(窗口大小在40個令牌長度內),以形成與預訓練的關係語句。實體識別基於對象/受試者的依賴樹解析。

我使用的從CNN數據集(CNN.TXT)獲取的預培訓數據可以在此處下載。

下載並另存為./data/cnn.txt

但是,請注意,本文使用Wiki轉儲數據進行MTB預訓練,該數據比CNN數據集大得多。

注意:根據可用的GPU,預培訓可能需要很長時間。遵循以下部分,可以直接對關係撤銷任務進行微調,並仍然獲得合理的結果。

main_pretraining.py [-h]

[--pretrain_data TRAIN_PATH]

[--batch_size BATCH_SIZE]

[--freeze FREEZE]

[--gradient_acc_steps GRADIENT_ACC_STEPS]

[--max_norm MAX_NORM]

[--fp16 FP_16]

[--num_epochs NUM_EPOCHS]

[--lr LR]

[--model_no MODEL_NO (0: BERT ; 1: ALBERT ; 2: BioBERT)]

[--model_size MODEL_SIZE (BERT: ' bert-base-uncased ' , ' bert-large-uncased ' ;

ALBERT: ' albert-base-v2 ' , ' albert-large-v2 ' ;

BioBERT: ' bert-base-uncased ' (biobert_v1.1_pubmed))]在下面的參數上運行main_task.py。需要SEMEVAL2010任務8數據集,可在此處提供。下載&UNZIP到./data/文件夾。

main_task.py [-h]

[--train_data TRAIN_DATA]

[--test_data TEST_DATA]

[--use_pretrained_blanks USE_PRETRAINED_BLANKS]

[--num_classes NUM_CLASSES]

[--batch_size BATCH_SIZE]

[--gradient_acc_steps GRADIENT_ACC_STEPS]

[--max_norm MAX_NORM]

[--fp16 FP_16]

[--num_epochs NUM_EPOCHS]

[--lr LR]

[--model_no MODEL_NO (0: BERT ; 1: ALBERT ; 2: BioBERT)]

[--model_size MODEL_SIZE (BERT: ' bert-base-uncased ' , ' bert-large-uncased ' ;

ALBERT: ' albert-base-v2 ' , ' albert-large-v2 ' ;

BioBERT: ' bert-base-uncased ' (biobert_v1.1_pubmed))]

[--train TRAIN]

[--infer INFER]要推斷句子,您可以在句子中註釋entity1&entity2 in and and in suntions and tity and int sunts and tity and in sunts and sunts的實體標籤[E1],[E2]。例子:

Type input sentence ( ' quit ' or ' exit ' to terminate):

The surprise [E1]visit[/E1] caused a [E2]frenzy[/E2] on the already chaotic trading floor.

Sentence: The surprise [E1]visit[/E1] caused a [E2]frenzy[/E2] on the already chaotic trading floor.

Predicted: Cause-Effect(e1,e2) from src . tasks . infer import infer_from_trained

inferer = infer_from_trained ( args , detect_entities = False )

test = "The surprise [E1]visit[/E1] caused a [E2]frenzy[/E2] on the already chaotic trading floor."

inferer . infer_sentence ( test , detect_entities = False )Sentence: The surprise [E1]visit[/E1] caused a [E2]frenzy[/E2] on the already chaotic trading floor.

Predicted: Cause-Effect(e1,e2) 該腳本還可以自動檢測輸入句子中的潛在實體,在這種情況下,都會推斷出所有可能的關係組合:

inferer = infer_from_trained ( args , detect_entities = True )

test2 = "After eating the chicken, he developed a sore throat the next morning."

inferer . infer_sentence ( test2 , detect_entities = True )Sentence: [E2]After eating the chicken[/E2] , [E1]he[/E1] developed a sore throat the next morning .

Predicted: Other

Sentence: After eating the chicken , [E1]he[/E1] developed [E2]a sore throat[/E2] the next morning .

Predicted: Other

Sentence: [E1]After eating the chicken[/E1] , [E2]he[/E2] developed a sore throat the next morning .

Predicted: Other

Sentence: [E1]After eating the chicken[/E1] , he developed [E2]a sore throat[/E2] the next morning .

Predicted: Other

Sentence: After eating the chicken , [E2]he[/E2] developed [E1]a sore throat[/E1] the next morning .

Predicted: Other

Sentence: [E2]After eating the chicken[/E2] , he developed [E1]a sore throat[/E1] the next morning .

Predicted: Cause-Effect(e2,e1) 在此處下載少數1.0數據集。並解開為./data/文件夾。

使用參數'task'設置為“少數”,運行main_task.py。

python main_task.py --task fewrel結果:

(5向1桿)

沒有MTB的BERT EM ,未接受任何少數數據的培訓

| 型號大小 | 準確性(41646個樣本) |

|---|---|

| Bert-base-uncund | 62.229% |

| Bert-large uncund | 72.766% |

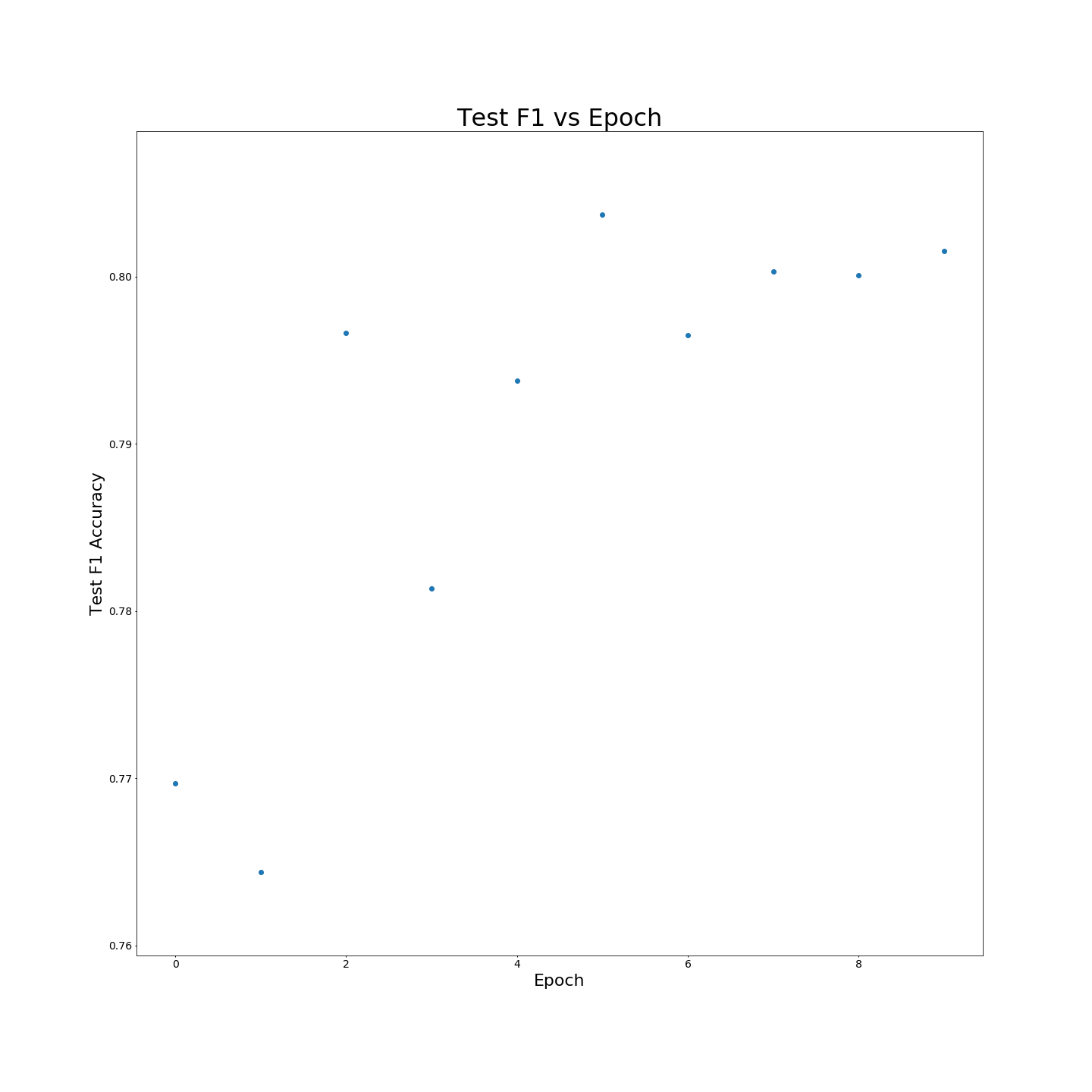

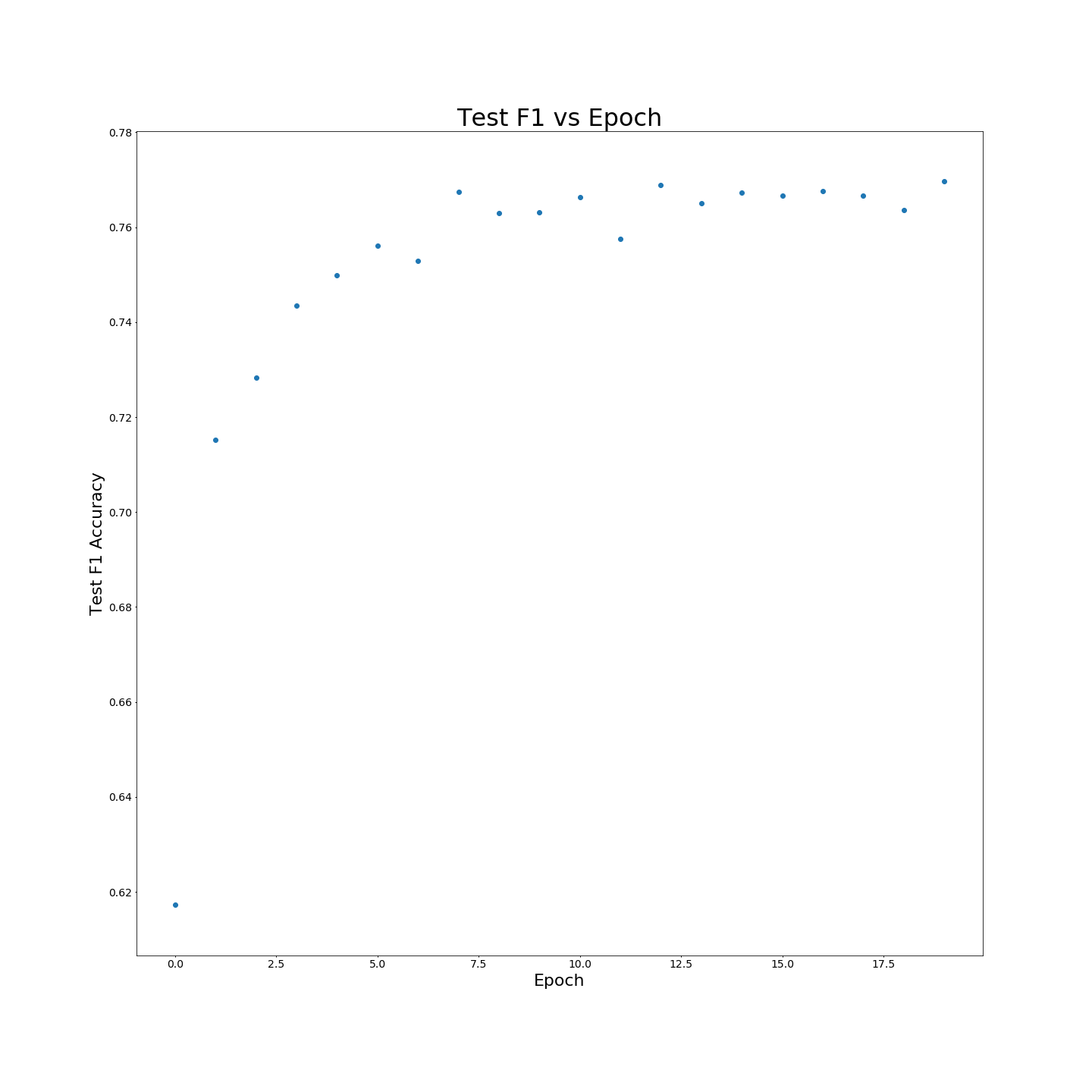

沒有MTB預培訓:對100%培訓數據進行培訓時F1結果:

沒有MTB預培訓:對100%培訓數據進行培訓時F1結果: