魔术没有什么魔术。魔术师只是理解一些简单的东西,对于未经训练的观众来说似乎并不简单或自然。一旦您学习了如何在使手看起来空的同时握住卡,那么您只需要练习才能“做魔术”。 - 杰弗里·弗里德尔(Jeffrey Friedl)在书中掌握正则表达式

注意:请提出任何建议,更正和反馈的问题。

大多数代码样本都是使用Jupyter笔记本(使用COLAB)完成的。因此每个代码可以独立运行。

已经探讨了以下主题:

注意:难度水平是根据我的理解分配的。

| 令牌化 | 单词嵌入 - word2vec | 单词嵌入 - 手套 | 单词嵌入 - elmo |

| RNN,LSTM,Gru | 包装衬垫序列 | 注意机制-Luong | 注意机制-Bahdanau |

| 指针网络 | 变压器 | GPT-2 | 伯特 |

| 主题建模 - LDA | 主成分分析(PCA) | 天真的贝叶斯 | 数据增强 |

| 句子嵌入 |

将文本数据转换为令牌的过程是NLP中最重要的一步之一。使用以下方法进行了代币化:

单词嵌入是文本的学习表示,其中具有相同含义的单词具有相似的表示形式。正是这种代表单词和文档的方法可能被认为是挑战自然语言处理问题的深度学习的关键突破之一。

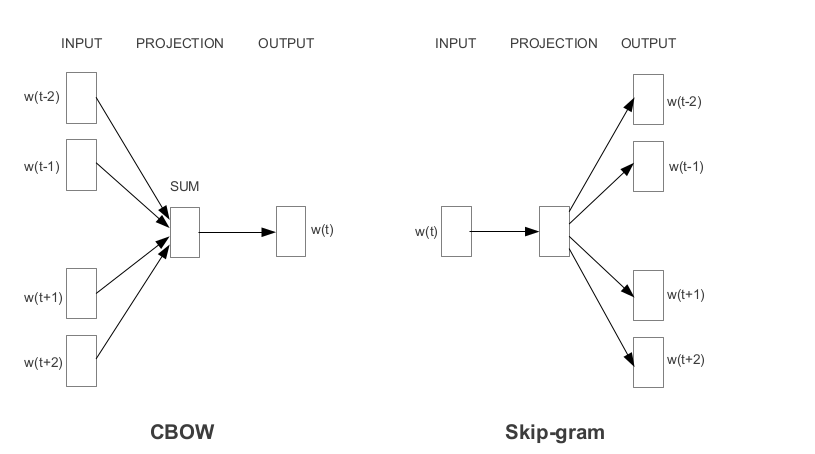

Word2Vec是Google开发的最受欢迎的预贴单词嵌入之一。根据学习嵌入的方式,Word2Vec分为两种方法:

手套是获得预训练的嵌入的另一种常用方法。手套旨在实现两个目标:

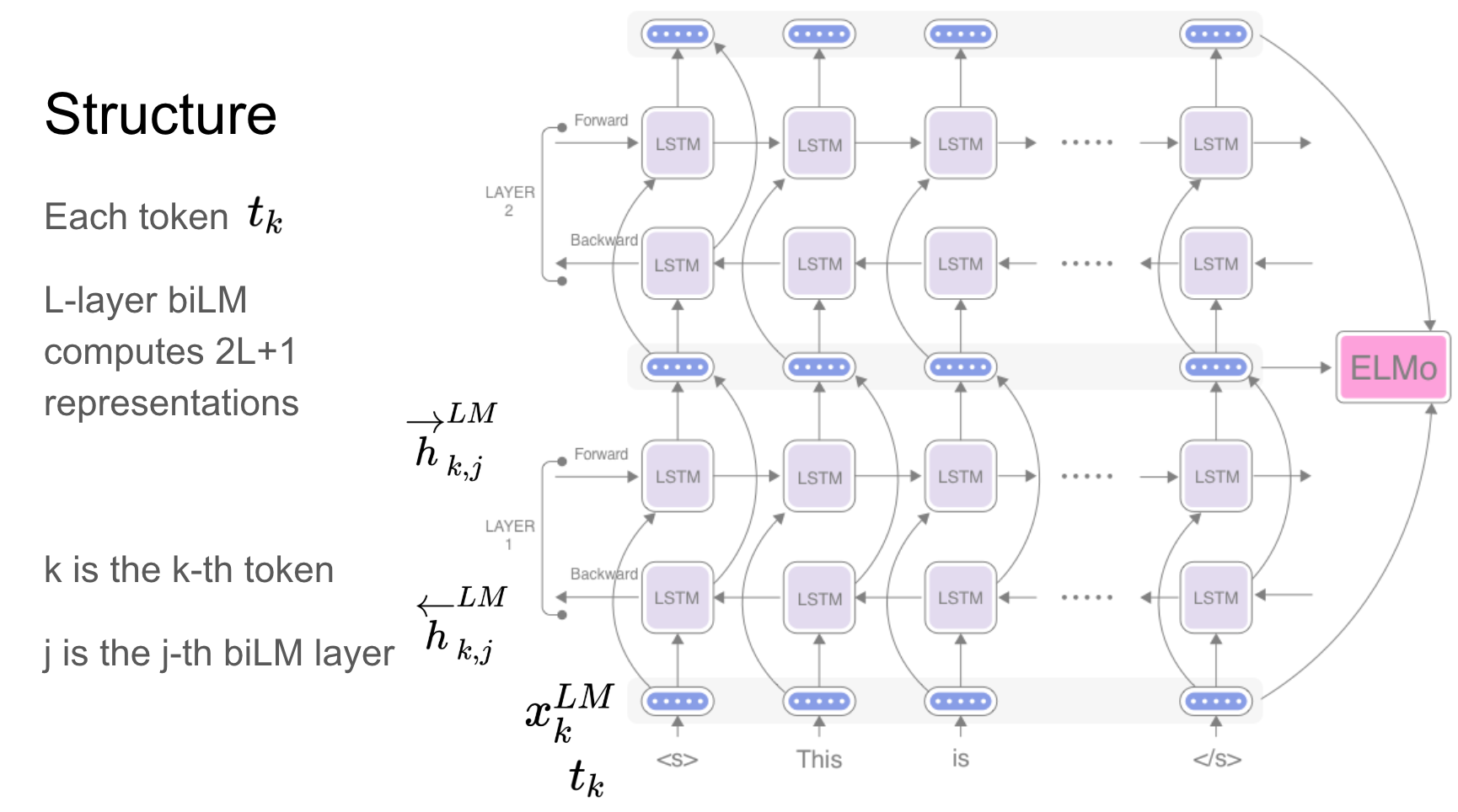

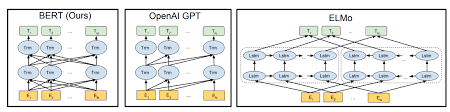

Elmo是一个建模的深层上下文化的单词表示形式:

这些单词向量是深层双向语言模型(BILM)的内部状态的函数,该功能已在大型文本语料库中进行了预培训。

经常性网络-RNN,LSTM,GRU已被证明是NLP应用程序中最重要的单元之一,因为它们的架构。在许多问题上,需要像预测场景中的情绪一样需要记住序列性质,需要记住以前的场景。

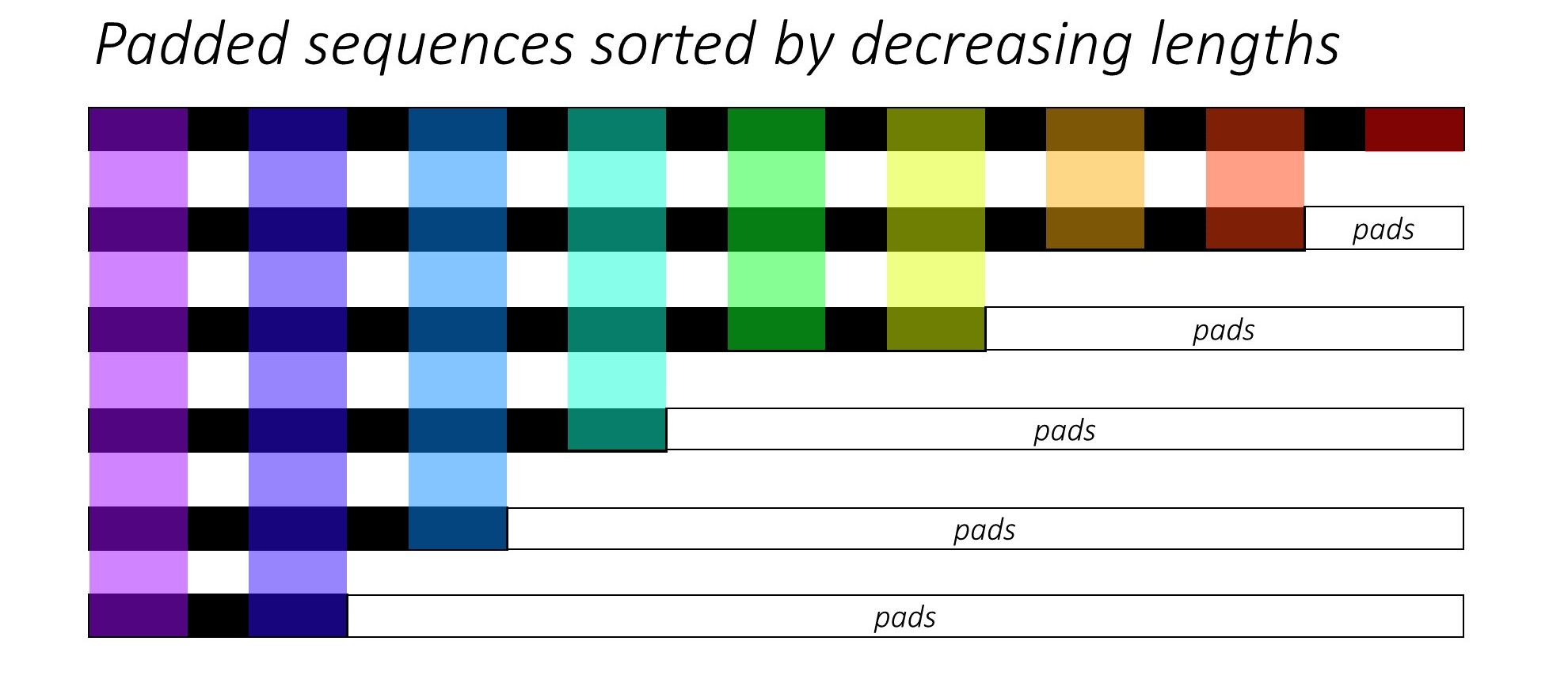

当训练RNN(LSTM,GRU或VANILLA-RNN)时,很难批量批次序列。理想情况下,我们将把所有序列都放在固定的长度上,并最终进行不必要的计算。我们如何克服这一点? Pytorch提供pack_padded_sequences功能。

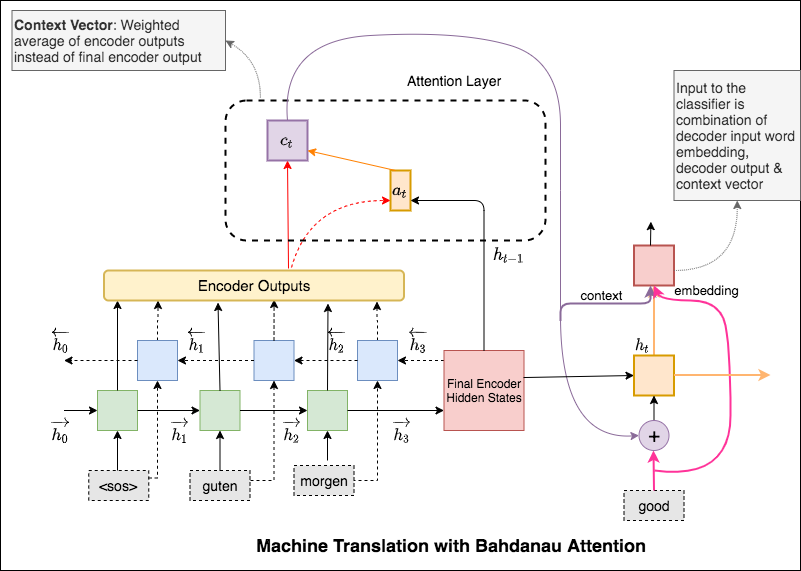

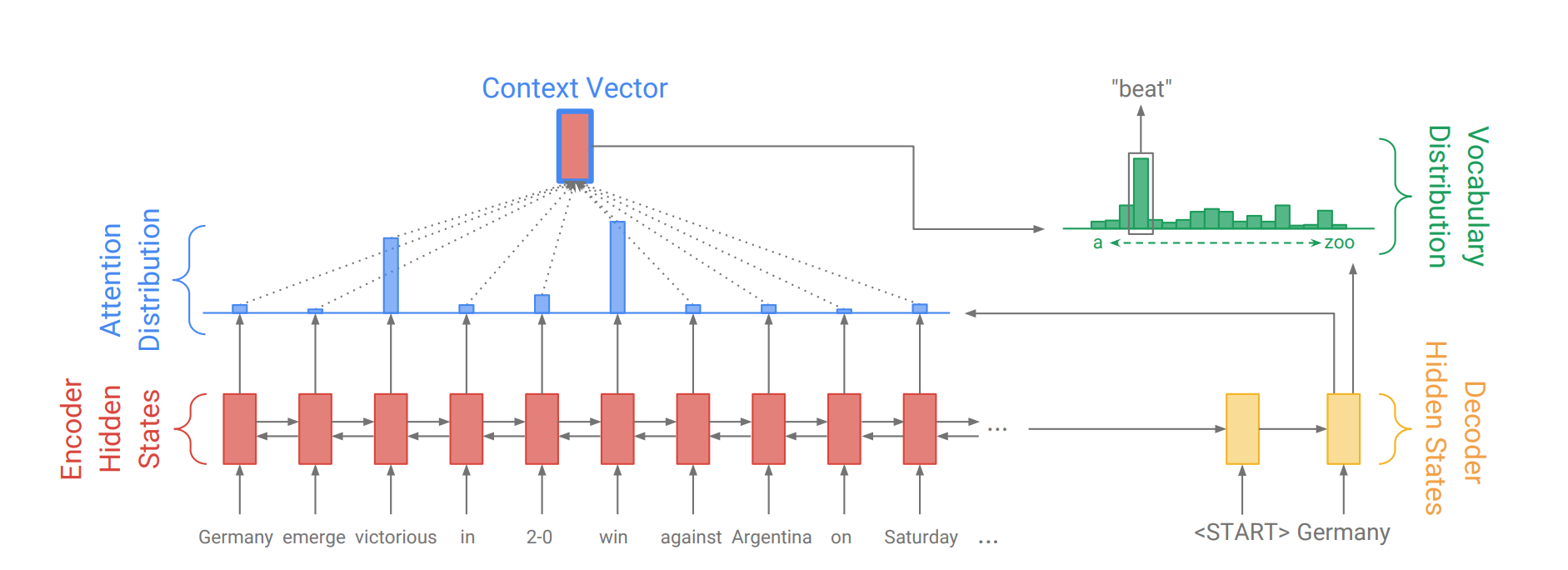

注意机制诞生是为了帮助记住神经机器翻译(NMT)中的长源句子。与其从编码器的最后一个隐藏状态中构建单个上下文向量,不如在解码句子时更多地专注于输入的相关部分。将通过获取编码器输出和解码器RNN的current output来创建上下文向量。

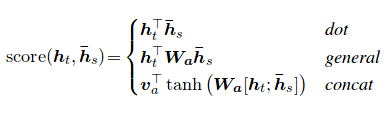

注意力评分可以通过三种方式计算。 dot , general和concat 。

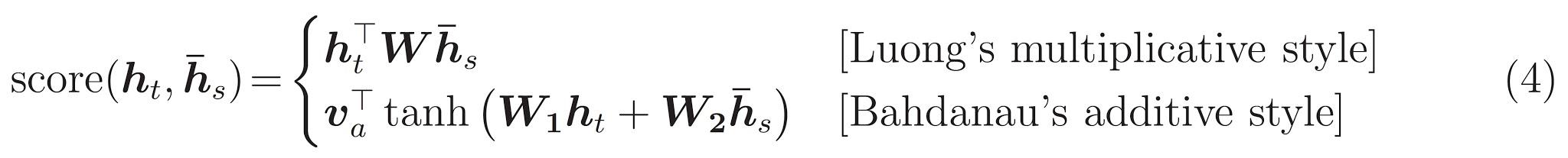

Bahdanau和Luong关注之间的主要区别在于上下文向量的创建方式。上下文向量将通过取编码器输出和解码器RNN的previous hidden state创建。在Luong注意的位置,将通过取编码器输出和解码器RNN的current hidden state来创建上下文向量。

一旦计算上下文,它将与解码器输入嵌入并作为解码器RNN输入。

Bahdanau的关注也被称为additive 。

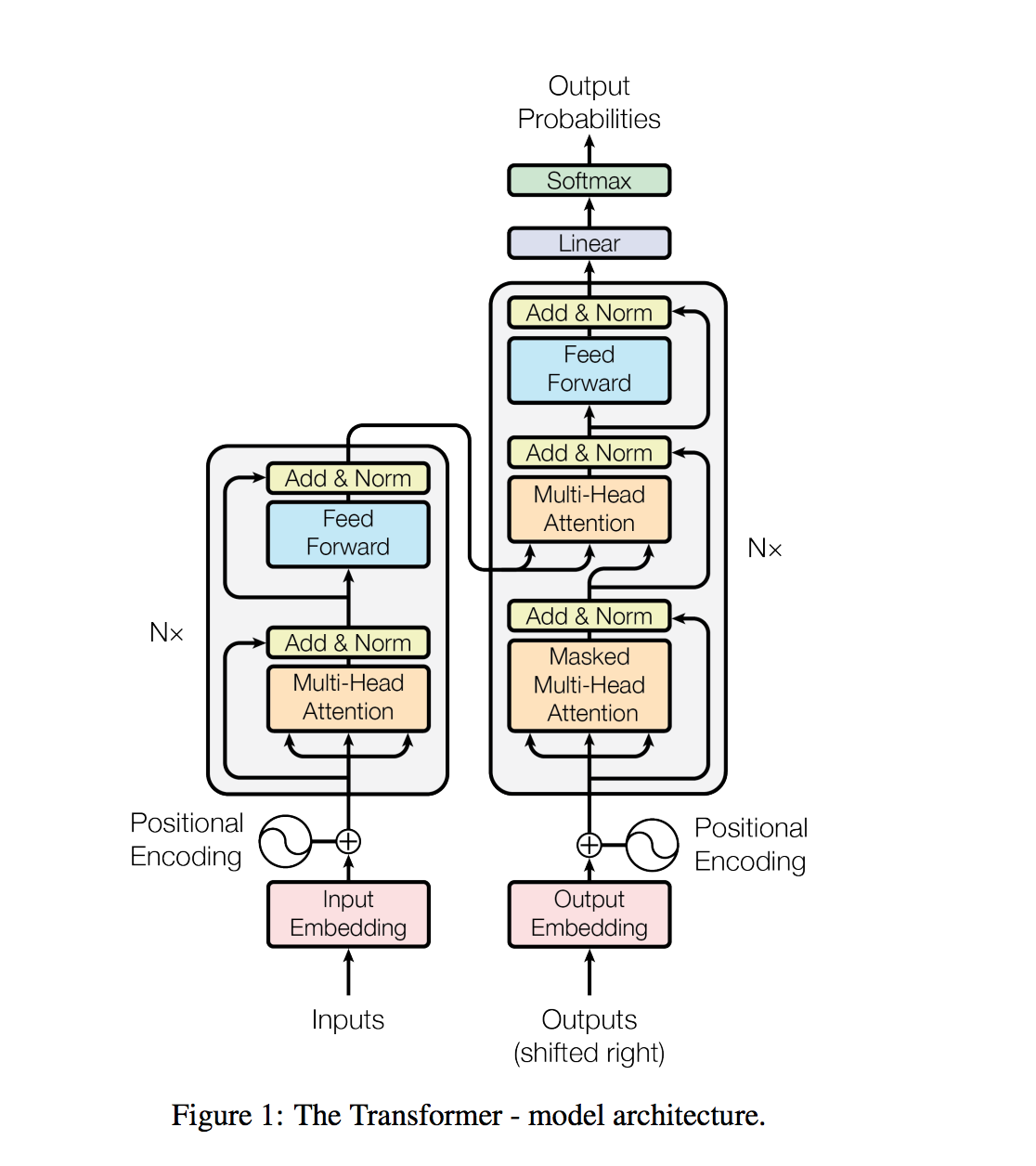

变压器是一种模型体系结构避免复发,而是完全依靠注意机制在输入和输出之间吸引全局依赖性。

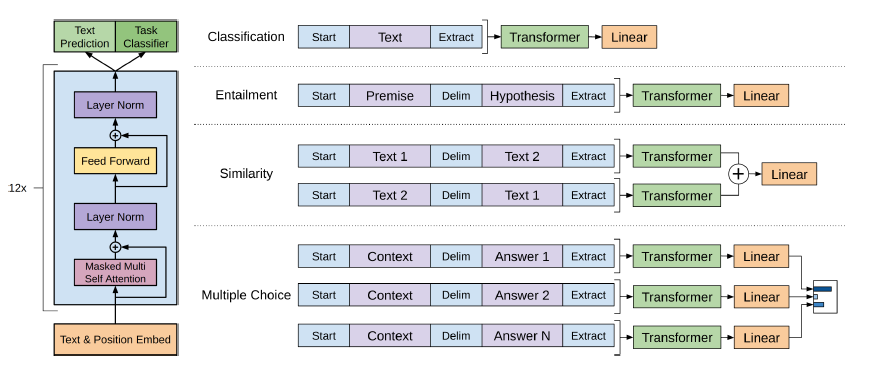

通常,在任务规定的数据集中进行监督学习,通常会接近自然语言处理任务,例如问答,机器翻译,阅读理解和摘要。我们证明,当在新的名为WebText的新网页上培训时,语言模型开始学习这些任务而没有任何明确的监督。我们最大的型号GPT-2是1.5B参数变压器,在8个测试的语言建模数据集中有7个以零拍设置的方式实现了最先进的结果,但仍未拟合WebText。该模型的样本反映了这些改进,并包含文本的连贯段落。这些发现提出了建立语言处理系统的有希望的途径,该途径学会从其自然发生的演示中执行任务。

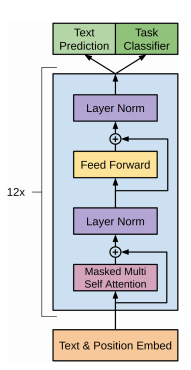

GPT-2利用了仅12层解码器的变压器体系结构。

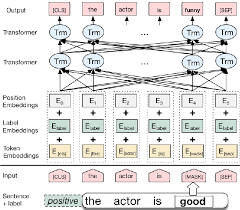

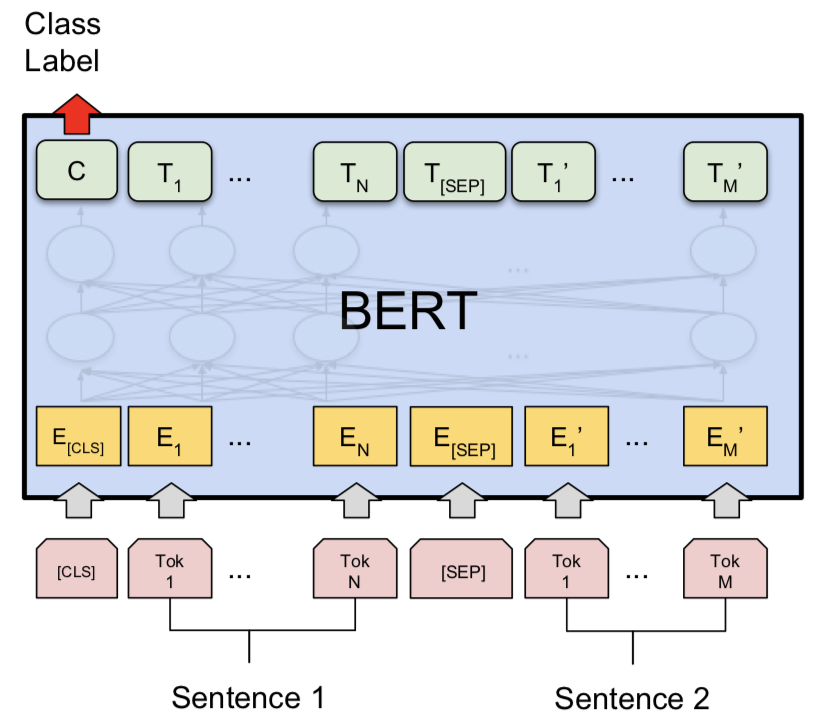

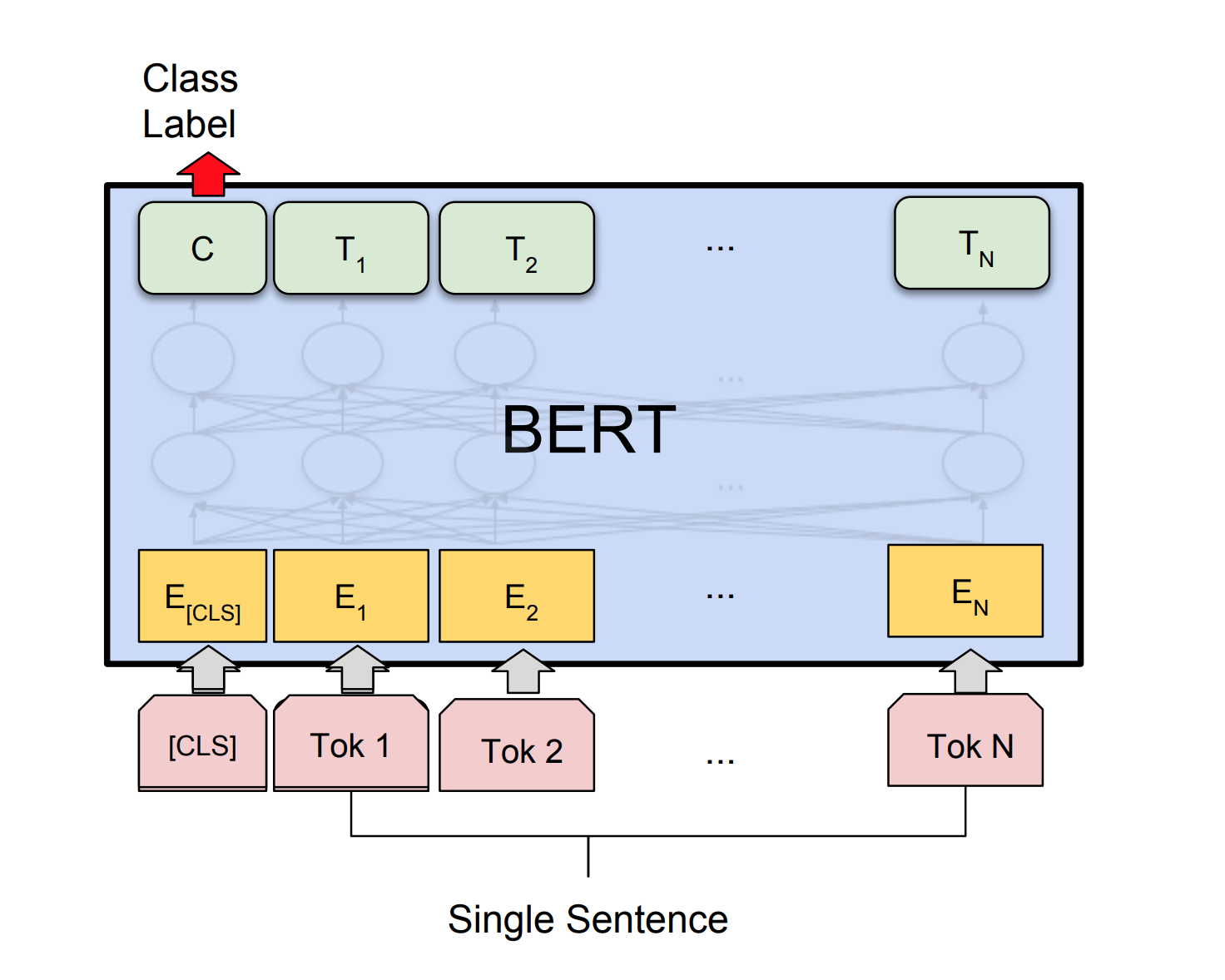

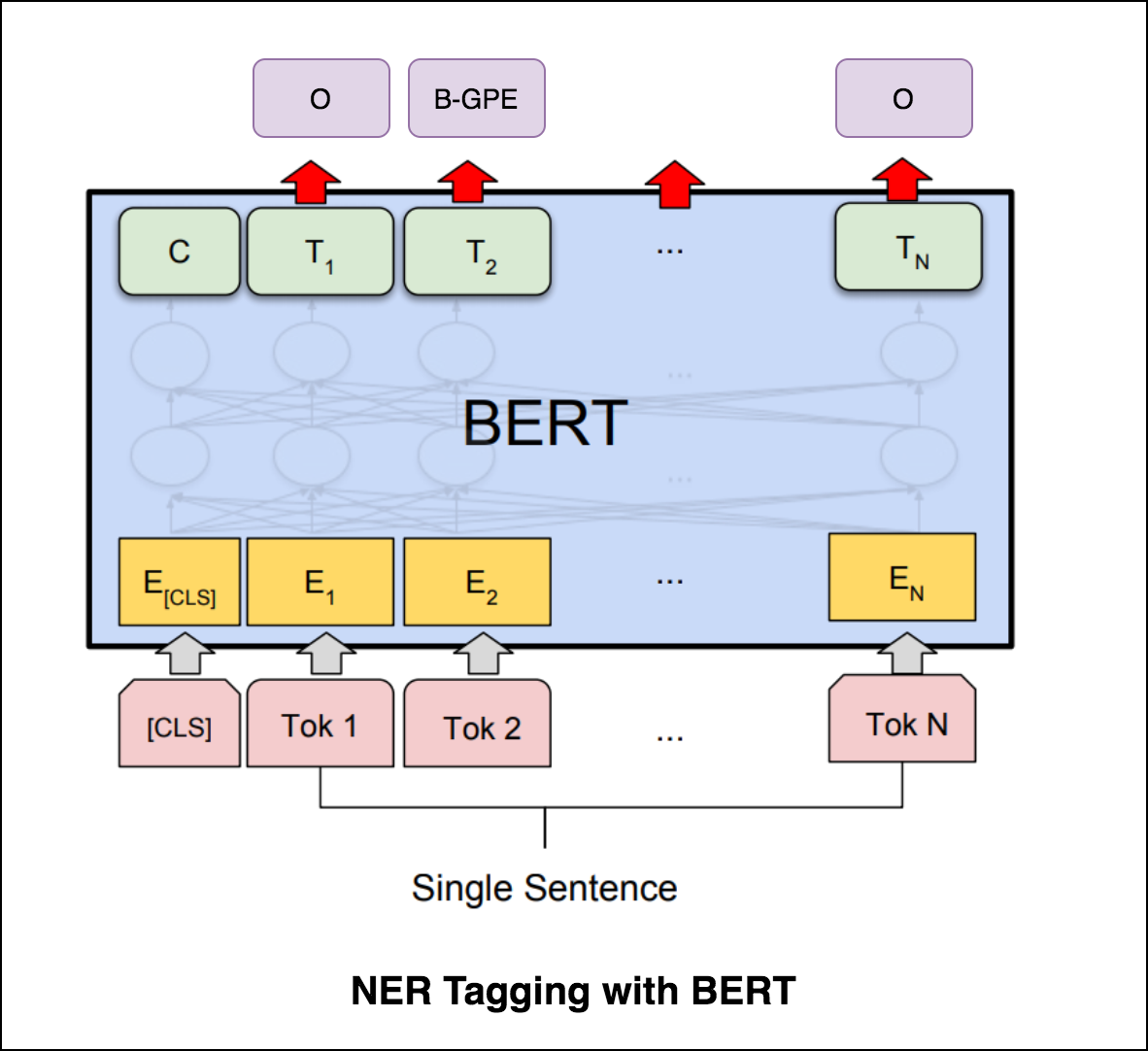

伯特使用变压器体系结构来编码句子。

指针网络的输出是离散的,对应于输入序列中的位置

输出每个步骤中目标类的数量取决于输入的长度,这是可变的。

它与以前的注意力尝试不同,而不是使用注意力将编码器的隐藏单元与每个解码器步骤的上下文向量进行混合,而是将注意力用作指针来选择输入序列的成员作为输出。

自然语言处理的主要应用之一是自动提取人们从大量文本中讨论的主题。一些大文本的示例可能是社交媒体,酒店,电影等客户评论,用户反馈,新闻报道,客户投诉的电子邮件等。

了解人们在说什么,并理解他们的问题和观点对企业,管理人员和政治运动非常有价值。而且很难手动阅读如此大的卷并编译主题。

因此,需要一种自动化算法,该算法可以通过文本文档读取并自动输出所讨论的主题。

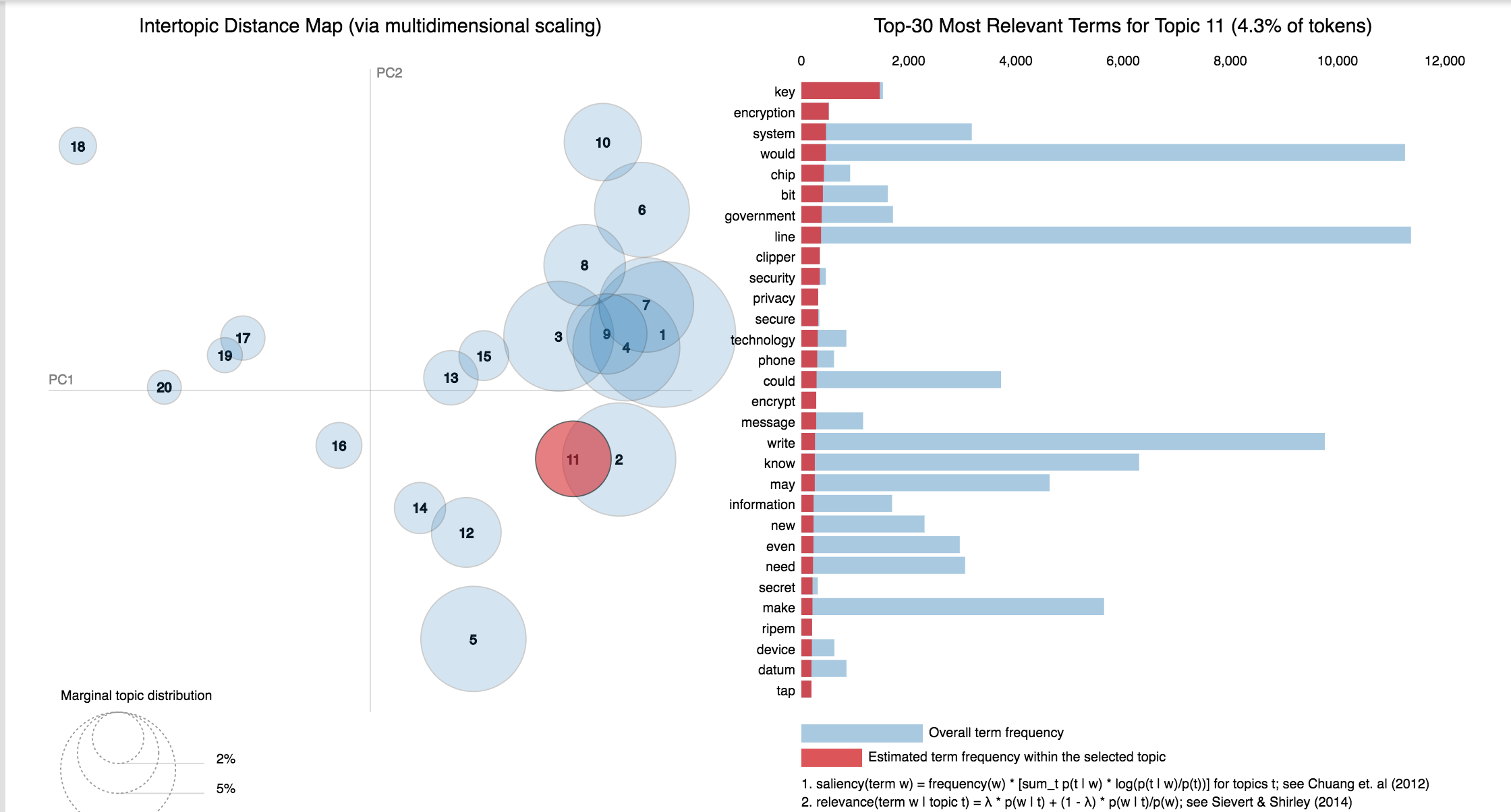

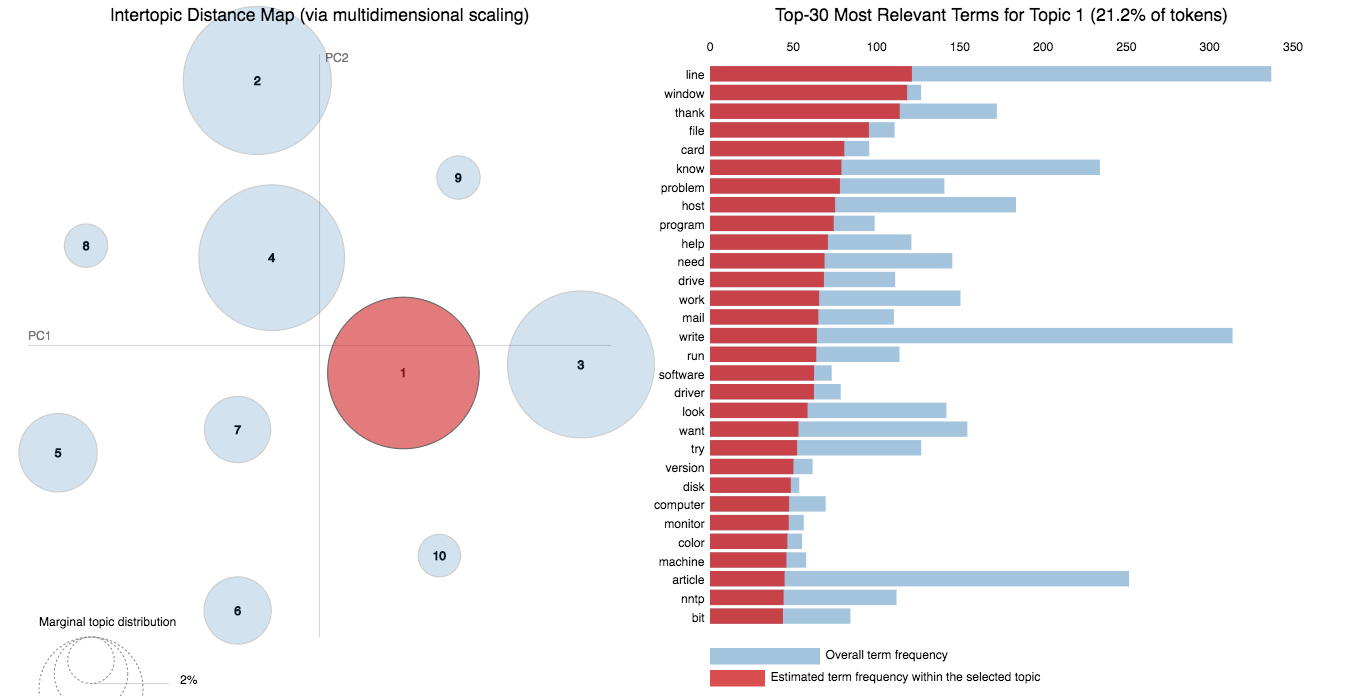

在此笔记本中,我们将以20 Newsgroups数据集的真实示例,并使用LDA提取自然讨论的主题。

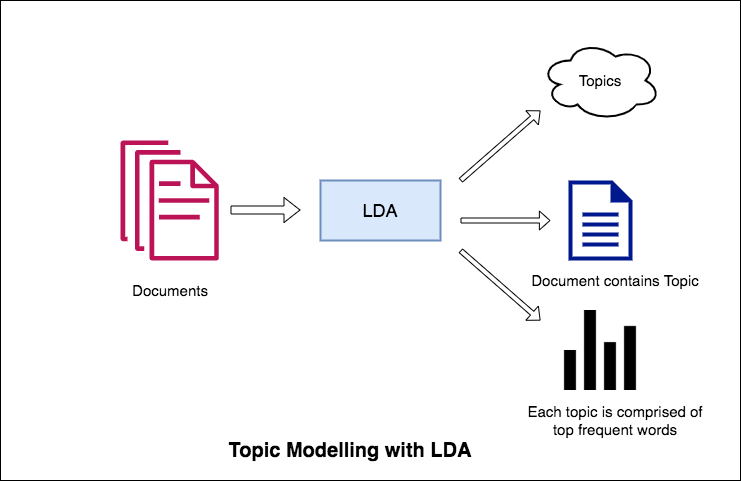

LDA的主题建模方法是将每个文档视为一定比例的主题集合。并且每个主题都是关键字集合,并以一定比例的比例。

一旦您提供了主题数量的算法,就可以将文档和关键字分布中的主题分布重新排列,以获得主题键单词分布的良好组成。

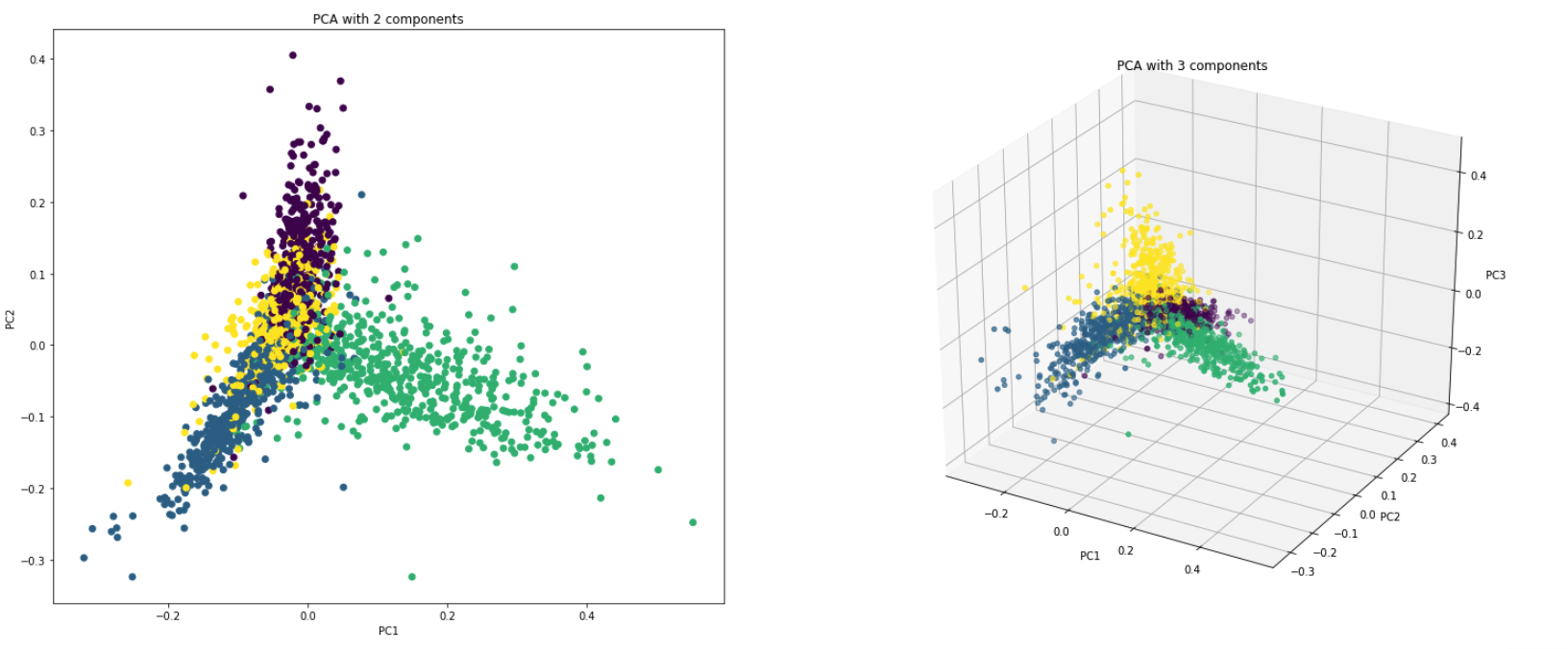

从根本上讲,PCA是一种减少维度的技术,可将数据集的列转换为新集合功能。它通过找到一组新的方向(例如X和Y轴)来解释数据中的最大可变性。这个新的系统坐标轴称为主组件(PC)。

实际上,PCA的使用有两个原因:

Dimensionality Reduction :分布在大量列中的信息被转换为主组件(PC),以便前几个PC可以解释总信息的相当大部分(方差)。这些PC可以用作机器学习模型中的解释变量。

Visualize Data :可视化类(或群集)的分离很难具有超过3个维度(功能)的数据。对于前两台PC本身,通常可以看到明确的分离。

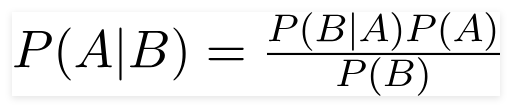

天真的贝叶斯分类器是用于分类任务的概率机器学习模型。分类器的关键基于贝叶斯定理。

考虑到B发生了B,使用贝叶斯定理,我们可以找到发生A的可能性。在这里,B是证据,A是假设。这里提出的假设是预测因素/特征是独立的。那就是一个特定特征的存在不会影响另一个特征。因此,它被称为天真。

天真贝叶斯分类器的类型:

Multinomial Naive Bayes :这主要是当变量离散(如单词)时使用的。分类器使用的功能/预测指标是文档中存在的单词的频率。

Gaussian Naive Bayes :当预测变量占据连续值并且不是离散的值时,我们假设这些值是从高斯分布中采样的。

Bernoulli Naive Bayes :这与多项式幼稚的贝叶斯相似,但预测因子是布尔变量。我们用来预测类变量的参数仅占用值是或否,例如,是否在文本中出现单词。

使用20NewSgroup数据集,探索了Naive Bayes算法进行分类。

探索了使用以下技术的数据增强:

探索了一个名为Sbert的新建筑。暹罗网络体系结构可以派生用于输入句子的固定尺寸向量。使用诸如余弦或曼哈顿 /欧几里得距离之类的相似性度量,可以找到语义上相似的句子。

| 情感分析-IMDB | 情感分类 - hinglish | 文档分类 |

| 重复的问题对分类 - Quora | POS标签 | 自然语言推论-SNLI |

| 有毒评论分类 | 语法正确的句子 - 可乐 | NER标记 |

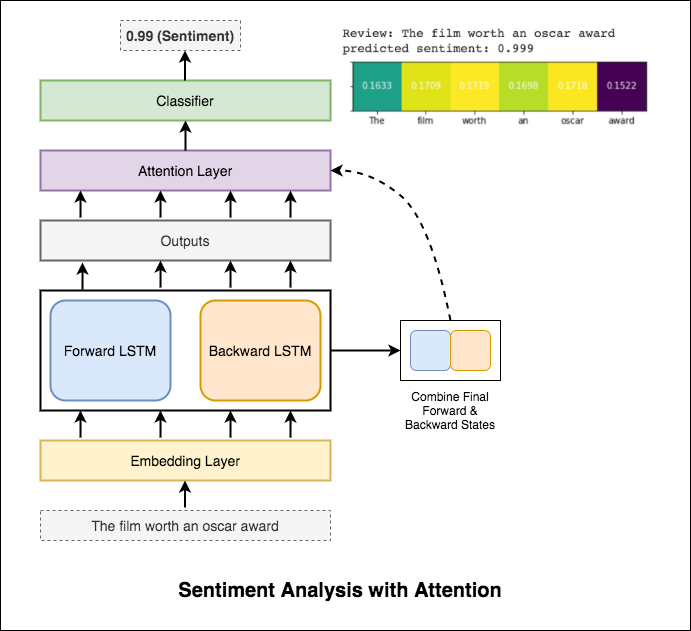

情感分析是指使用自然语言处理,文本分析,计算语言学和生物识别技术来系统地识别,提取,量化和研究情感状态和主观信息。

探索了以下变体:

RNN用于处理和识别情感。

在尝试了基本的RNN后,test_accuracy的基本RNN少于50%,已经实验了以下技术,并实现了超过88%的test_accuracy。

使用的技术:

在预测输入情绪时,注意力有助于关注相关输入。 Bahdanau的注意力被用来吸收LSTM的输出,并串联了最终的前进和向后隐藏状态。不使用预训练的单词嵌入,可以达到88%的测试准确性。

伯特(Bert)在11个自然语言处理任务上获得了新的最新结果。 NLP中的转移学习在发布BERT模型后触发。探索了使用BERT进行情感分析。

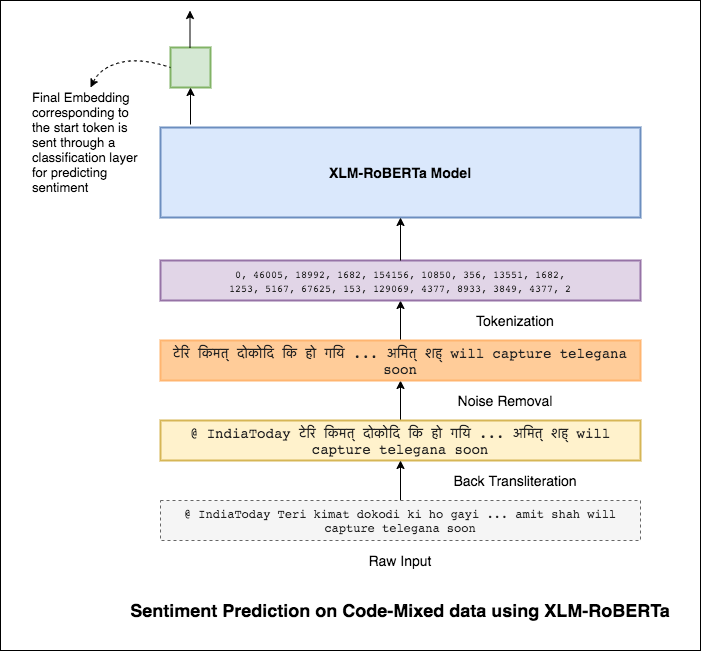

混合语言(也称为混合代码)是多语言社会的规范。多语言的人是非母语英语的人,他们倾向于使用基于英语的语音键入和插入英语的主语言来代码混音。

任务是预测给定代码混合推文的情感。情感标签是正面,负面或中立的,而混合的语言将是英语印度语。 (Sentimix)

探索了以下变体:

使用简单的MLP模型,在测试数据上达到了F1 score of 0.58

探索基本MLP模型后,将LSTM模型用于情感预测,并实现了F1分数为0.57 。

实际上,与基本MLP模型相比,结果较小。原因之一可能是LSTM由于代码混合数据的高度多样性而无法学习句子中单词之间的关系。

由于LSTM由于代码混合数据的高度多样性而无法使用代码混合句子中的单词之间的关系,并且不使用预训练的嵌入,因此F1分数较小。

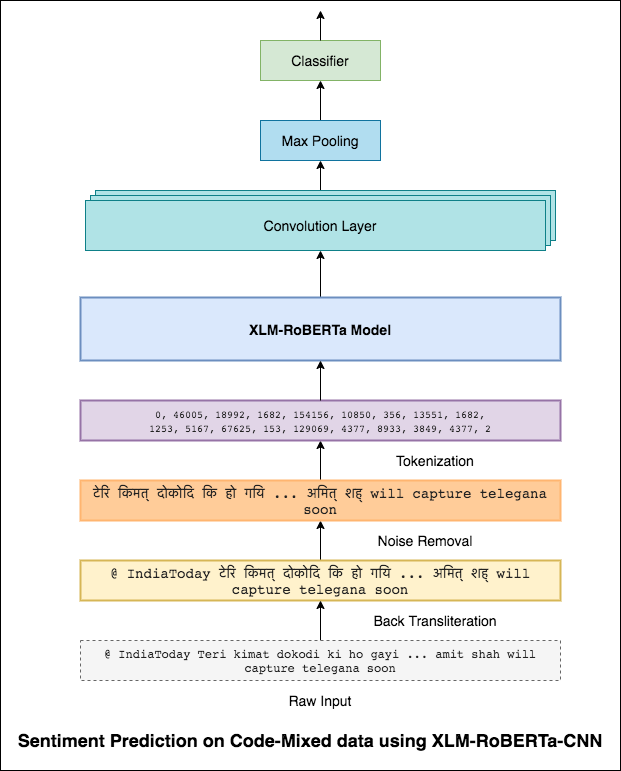

为了减轻此问题,XLM-Roberta模型(已在100种语言上进行了预培训)用于编码句子。为了使用XLM-Roberta模型,该句子需要使用适当的语言。因此,首先需要将Hinglish单词转换为印地语(Devanagari)形式。

达到0.59的F1分数。改进的方法将在稍后进行。

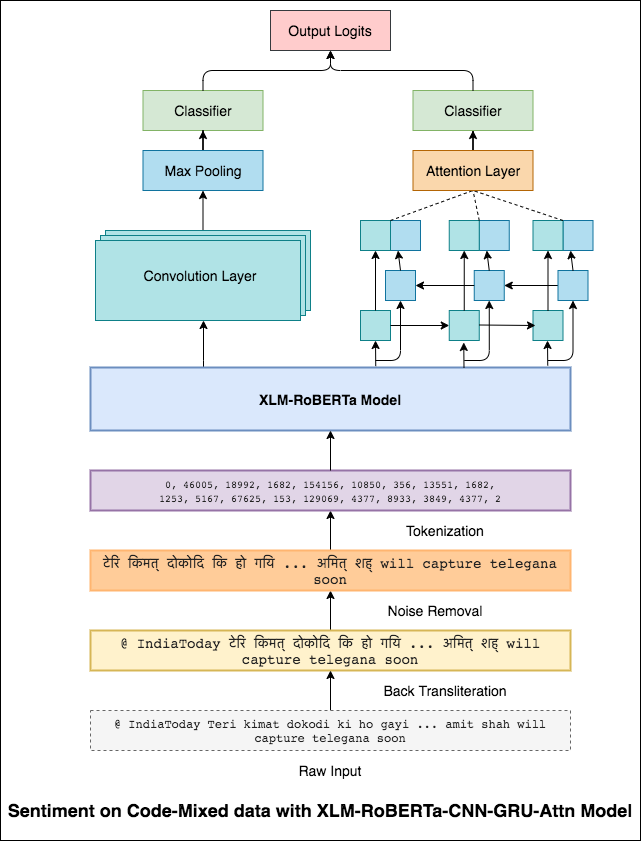

XLM-Roberta模型的最终输出用作双向LSTM模型的输入嵌入。从LSTM层中获取输出的注意力层产生输入的加权表示,然后通过分类器通过分类器来预测句子的情感。

达到0.64的F1分数。

就像3x3滤波器可以浏览图像的斑块一样,1x2滤波器可以在文本中浏览两个顺序单词,即Bi-gram。在此CNN模型中,我们将使用不同尺寸的多个过滤器,这些过滤器将查看BI-GRAM(1x2滤波器),Tri-gram(1x3滤波器)和/或N-Grams(1xn filtr)(1倍滤波器)。

此处的直觉是,审查中某些Bi-gram,Tri-grams和n-grams的外观将很好地表明最终情感。

达到0.69的F1分数。

CNN捕获了RNN捕获全球依赖性的本地依赖性。通过组合两者,我们可以更好地了解数据。 CNN模型和双向牵引模型的结合执行了其他模型。

达到0.71的F1分数。 (排名前5位)。

文档分类或文档分类是图书馆科学,信息科学和计算机科学中的一个问题。任务是将文档分配给一个或多个类或类别。

探索了以下变体:

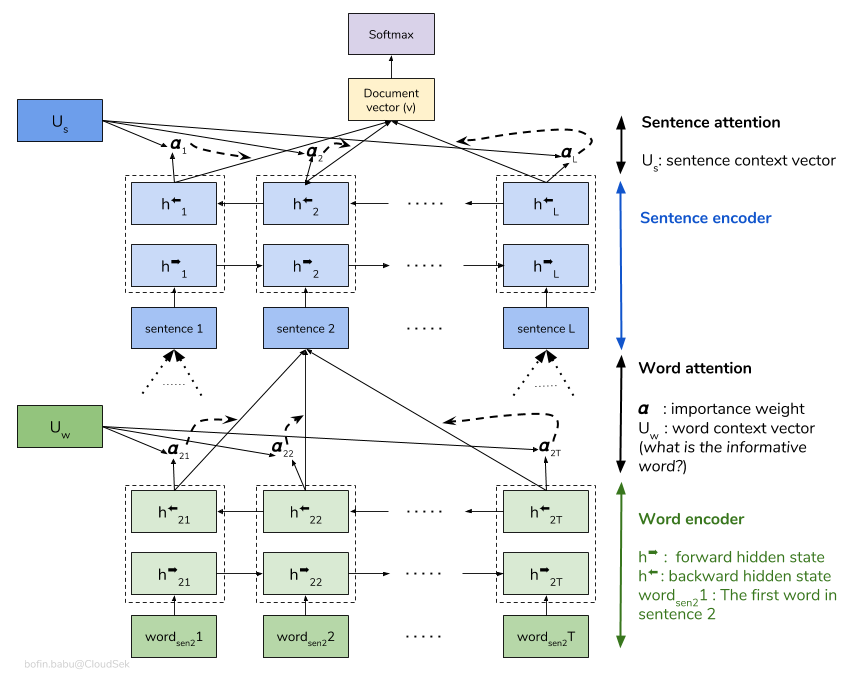

分层注意力网络(HAN)考虑文档的层次结构(文档 - 句子 - 单词),并包括一种注意机制,该机制能够在考虑上下文时在文档中找到最重要的单词和句子。

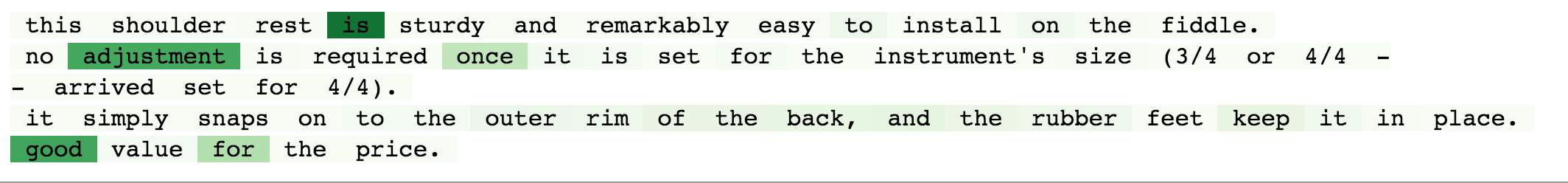

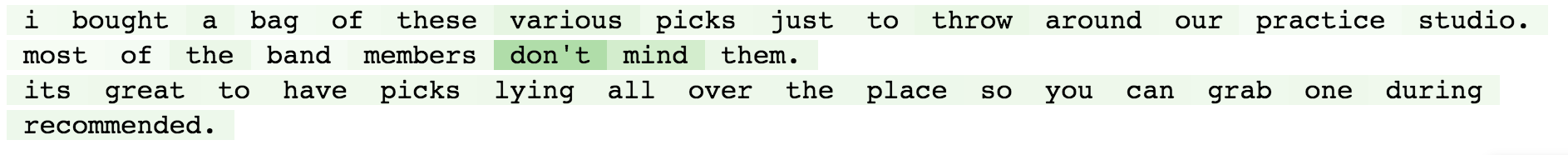

基本的HAN模型迅速拟合。为了克服这一问题,探索了Embedding Dropout的技术,探索了Locked Dropout 。还有另一种称为Weight Dropout的技术,该技术未实施(让我知道是否有任何实施此功能的资源)。还使用预训练的单词嵌入Glove代替随机初始化。由于可以在句子级别和单词级别上进行注意,因此我们可以看到哪些单词在句子中很重要,哪些句子在文档中很重要。

QQP代表Quora问题对。该任务的目的是给定的问题;我们需要找到这些问题在语义上是否彼此相似。

探索了以下变体:

该算法需要将这对问题作为输入,并应输出它们的相似性。使用暹罗网络。 Siamese neural network (有时称为双神经网络)是一个人工神经网络,在两个不同的输入向量的同时使用same weights来计算可比的输出向量。

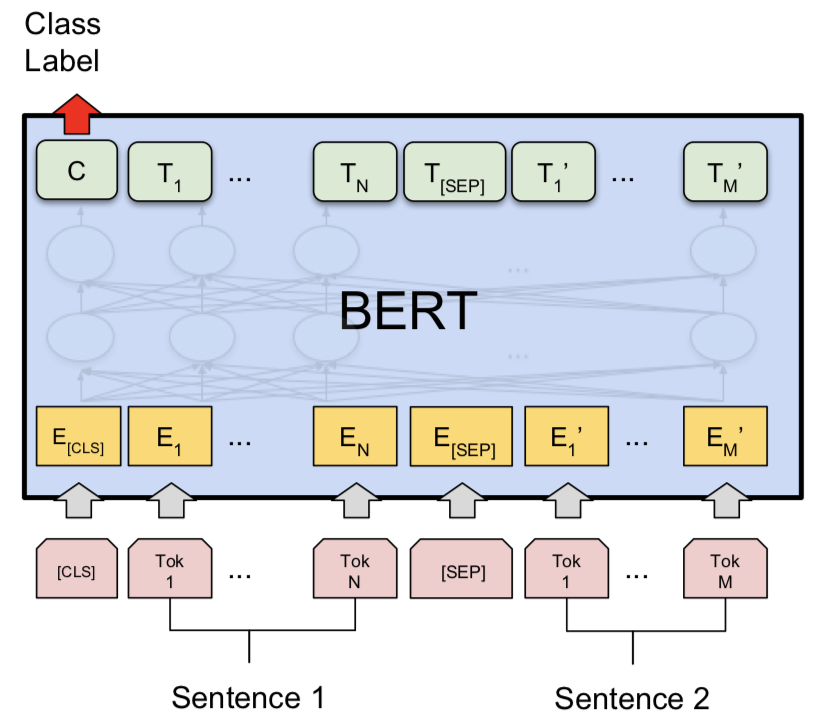

尝试暹罗模型后,探索了Bert进行Quora重复问题对检测。伯特(Bert)将问题1和问题2作为输入,由[SEP]令牌分开,分类是使用[CLS]令牌的最终表示。

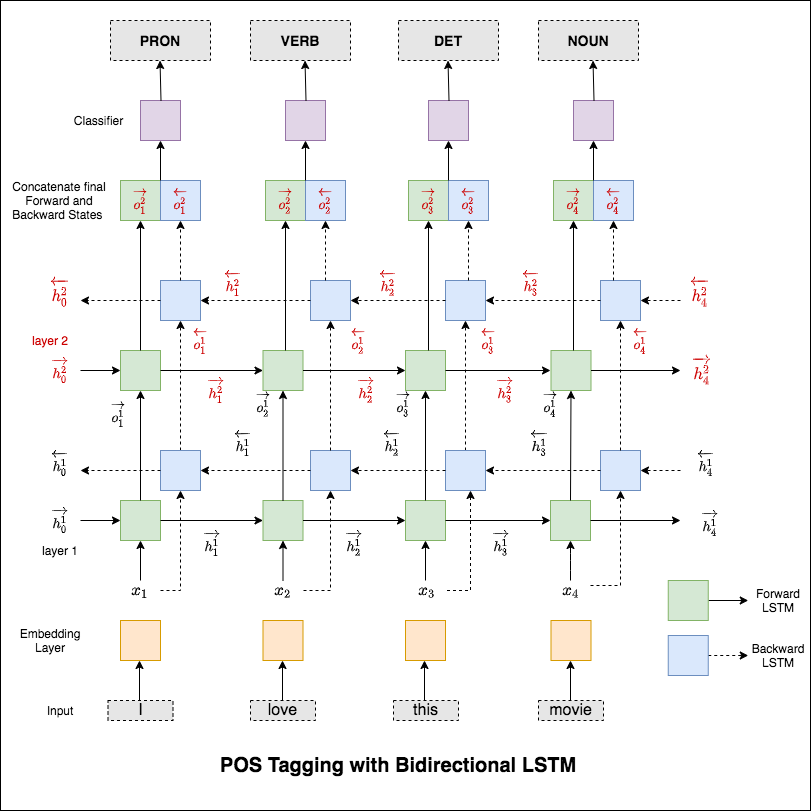

词性(POS)标记是一项任务,是在句子中标记每个单词,并具有适当的语音部分。

探索了以下变体:

此代码涵盖基本工作流程。我们将学习如何:加载数据,创建火车/测试/验证拆分,构建词汇,创建数据迭代器,定义模型并实现火车/评估/测试环和运行时间(推理)标记。

使用的模型是多层双向LSTM网络

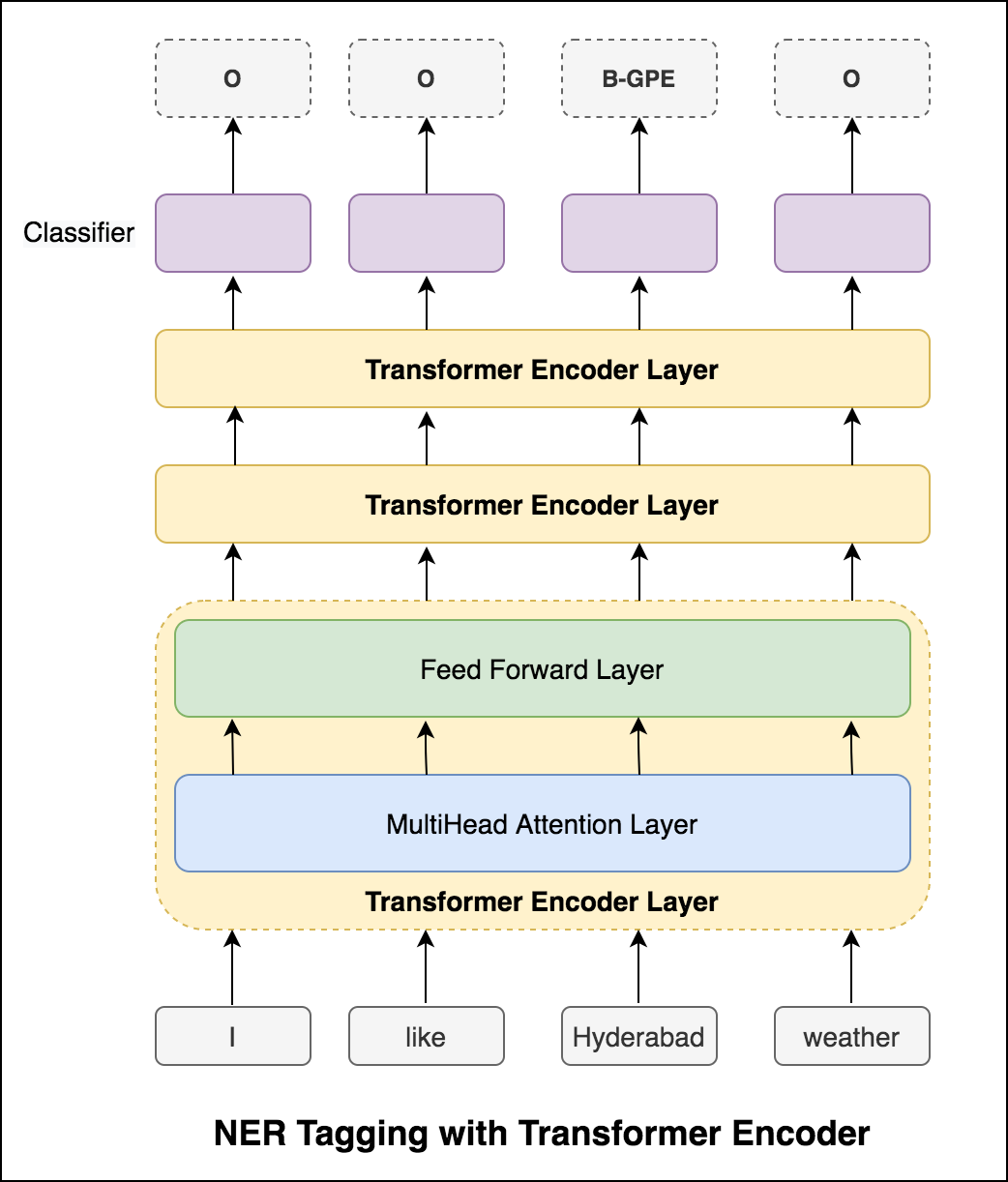

尝试RNN方法后,探索了具有基于变压器的体系结构的POS标记。由于变压器同时包含编码器和解码器,并且对于序列标记任务,仅Encoder就足够了。由于数据很小,因此具有6层编码器的数据将过度拟合数据。因此使用了三层变压器编码器模型。

尝试使用变压器编码器进行POS标记后,利用了预训练的BERT模型的POS标记。它达到了91%的测试准确性。

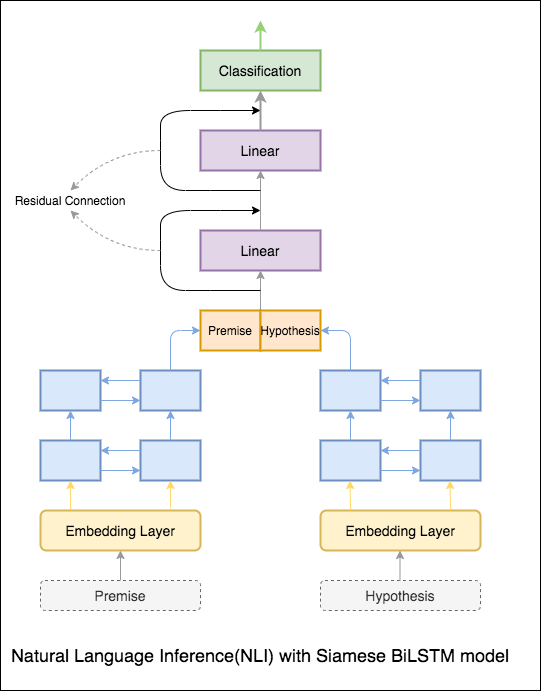

自然语言推理(NLI)的目标是一项广泛研究的自然语言处理任务,是确定一个给定的语句(前提)是否在语义上需要另一个给定的陈述(假设)。

探索了以下变体:

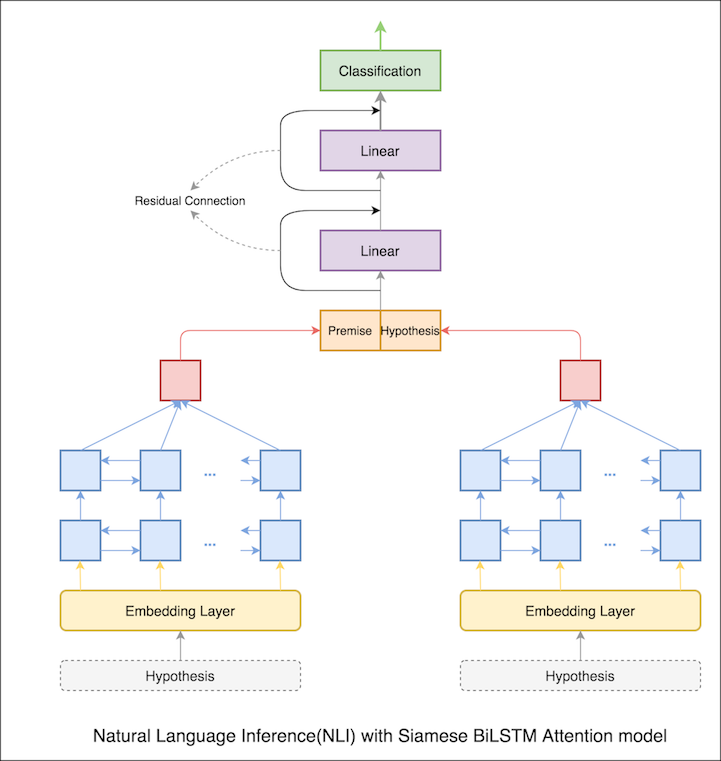

带有暹罗Bilstm网络的基本模型已插入

这可以视为基线设置。实现了76.84%的测试精度。

在上一个笔记本中,前提和假设的最终隐藏状态是LSTM的表示。现在,将在所有输入令牌上计算注意力,而不是采取最终的隐藏状态,并将最终加权向量作为前提和假设的表示。

测试准确性从76.84%提高到79.51% 。

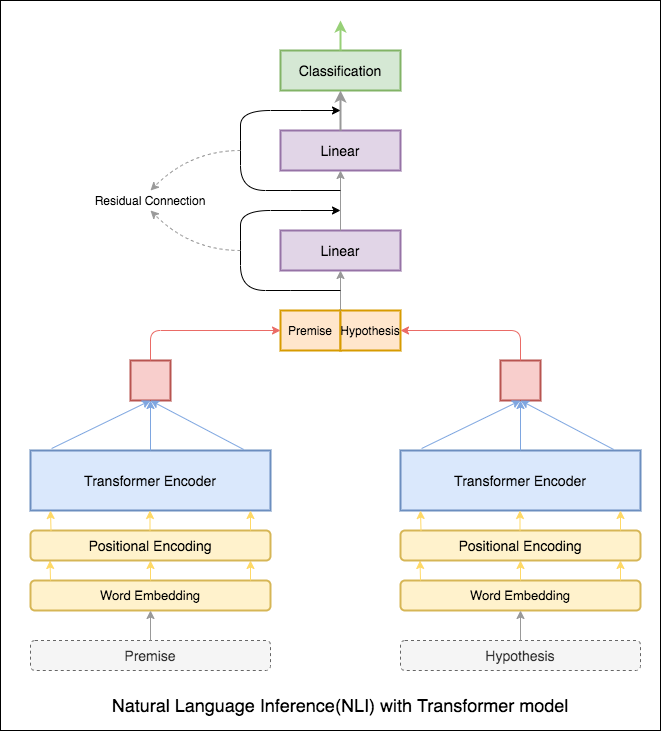

变压器编码器用于编码前提和假设。一旦句子通过编码器,所有令牌的求和被视为最终表示(可以探索其他变体)。与RNN变体相比,模型精度较低。

探索了带有BERT基本模型的NLI。伯特(Bert)将前提和假设作为[SEP]令牌分开的输入,并使用[CLS]令牌的最终表示进行了分类。

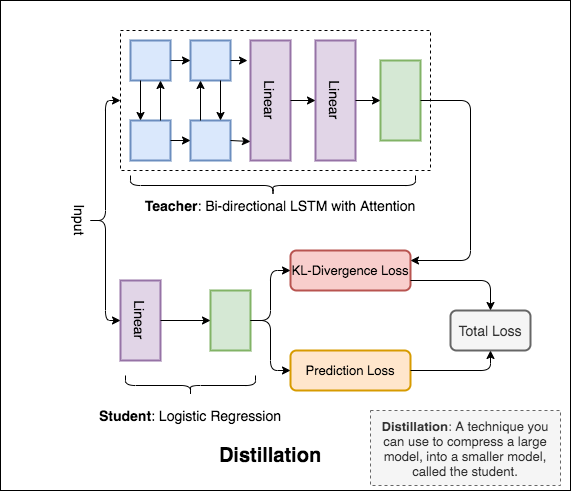

Distillation :您可以用来将一种称为teacher的大型模型压缩成一个较小的模型,称为student 。以下学生,使用教师模型来对NLI进行蒸馏。

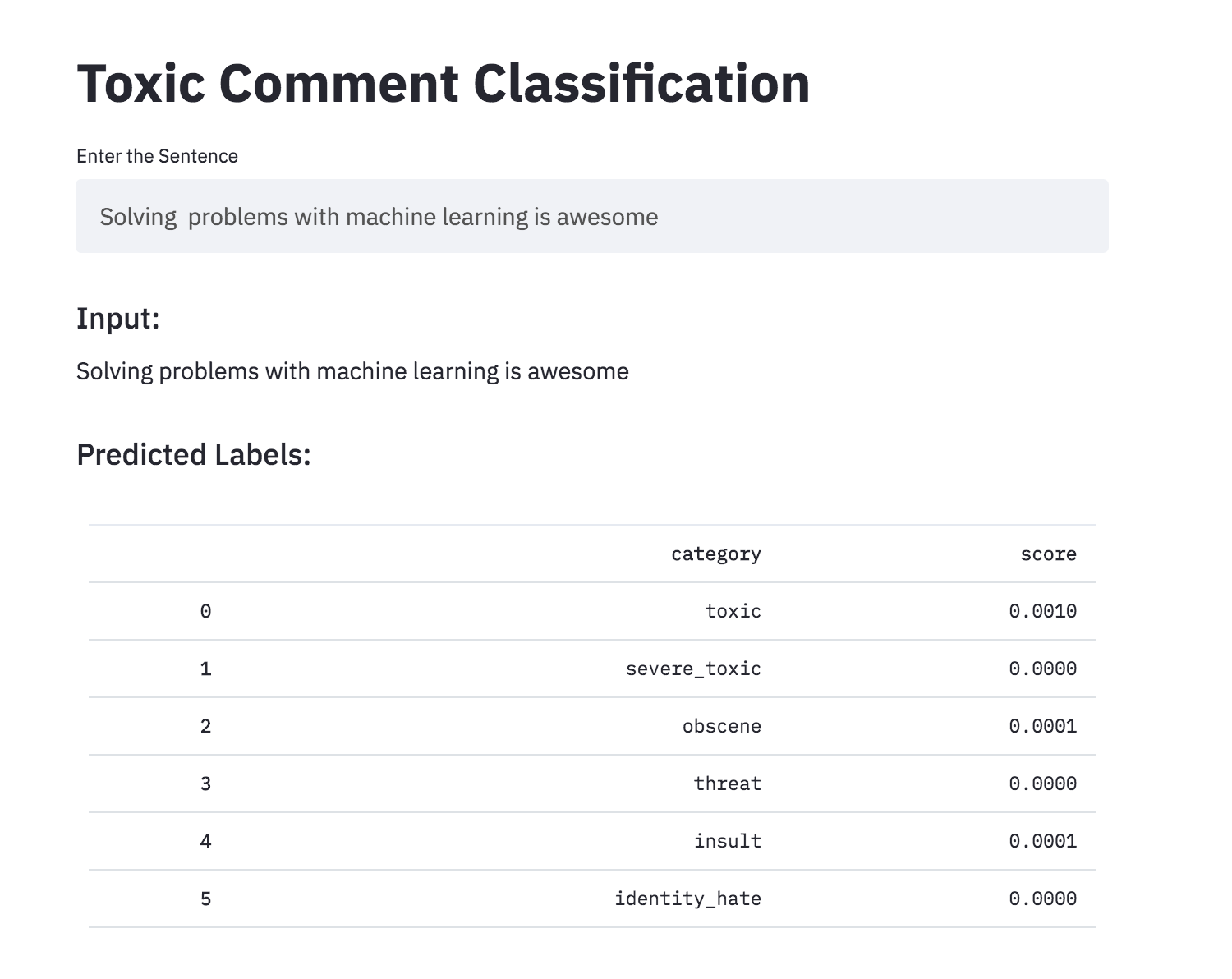

讨论您关心的事情可能很困难。在线虐待和骚扰的威胁意味着许多人停止表达自己并放弃寻求不同的意见。平台难以有效地促进对话,导致许多社区限制或完全关闭用户评论。

为您提供了大量的Wikipedia评论,这些评论已被人类对有毒行为的评估者标记。毒性的类型是:

探索了以下变体:

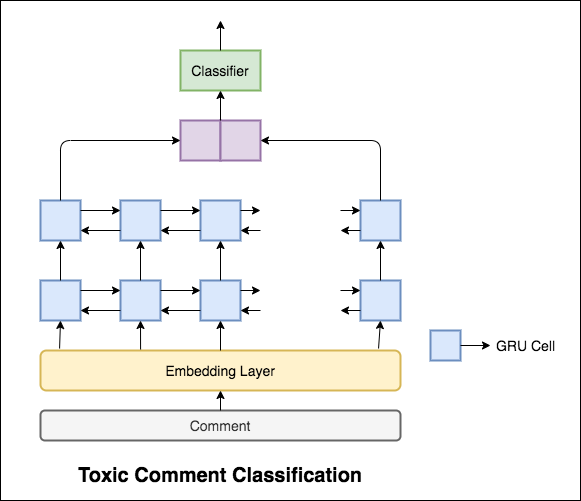

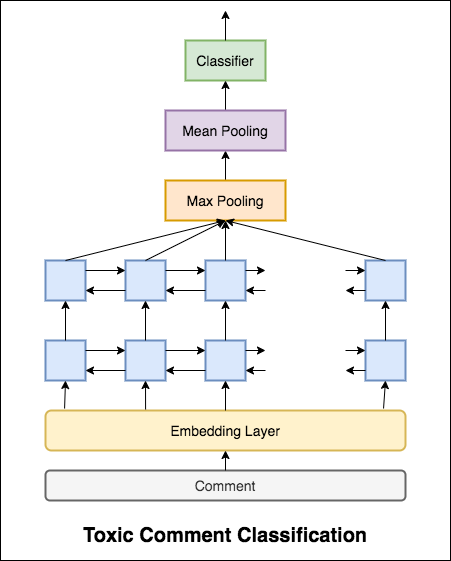

使用的模型是双向GRU网络。

实现了99.42%的测试精度。由于90%的数据未标记为任何毒性,因此仅将所有数据预测为无毒的数据提供了90%的准确模型。因此,准确性不是可靠的指标。实施了不同的ROC AUC。

由于Categorical Cross Entropy作为损失,ROC_AUC得分为0.5 。通过将损失更改为Binary Cross Entropy ,并通过添加池层(最大值,平均值)来修改模型,ROC_AUC得分提高到0.9873 。

使用简化将有毒评论分类转换为应用程序。预先训练的模型现已可用。

人工神经网络能够判断句子的语法可接受性吗?为了探索此任务,使用语言可接受性(COLA)数据集。可乐是一组标记为语法正确或不正确的句子。

探索了以下变体:

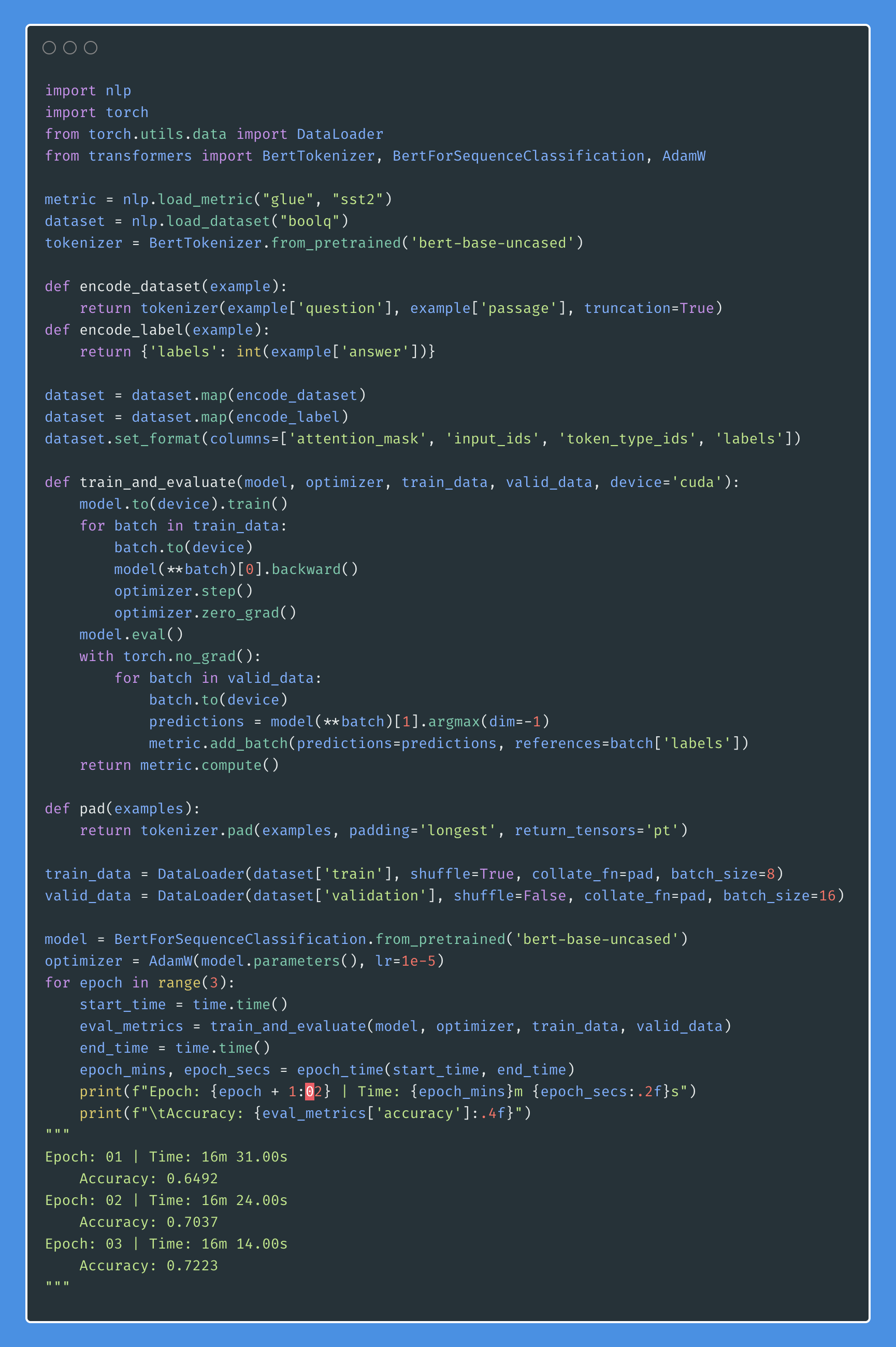

伯特(Bert)在11个自然语言处理任务上获得了新的最新结果。 NLP中的转移学习在发布BERT模型后触发。在本笔记本中,我们将探讨如何使用BERT对句子进行分类是否正确使用COLA数据集。

达到85%的准确性,Matthews相关系数(MCC)达到了64.1 。

Distillation :您可以用来将一种称为teacher的大型模型压缩成一个较小的模型,称为student 。以下学生,使用教师模型来对可乐进行蒸馏。

已尝试以下实验:

84.06 ,MCC: 61.582.54 ,MCC: 5782.92 ,MCC: 57.9 命名 - 实体识别(NER)标签是用适当的实体标记每个单词的任务。

探索了以下变体:

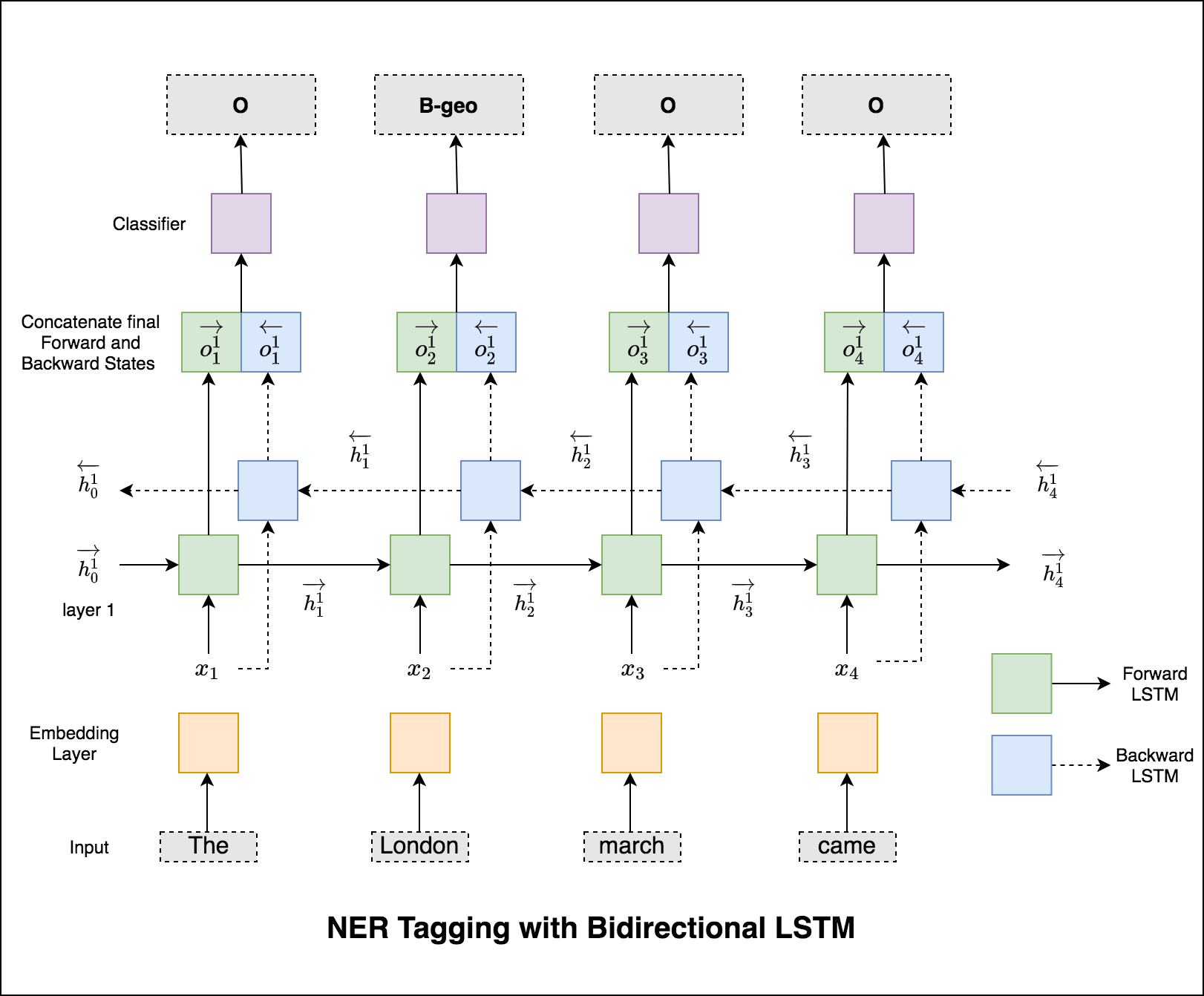

此代码涵盖基本工作流程。我们将查看如何:加载数据,创建火车/测试/验证拆分,构建词汇,创建数据迭代器,定义模型并实现火车/评估/测试环和火车,并测试模型。

使用的模型是双向LSTM网络

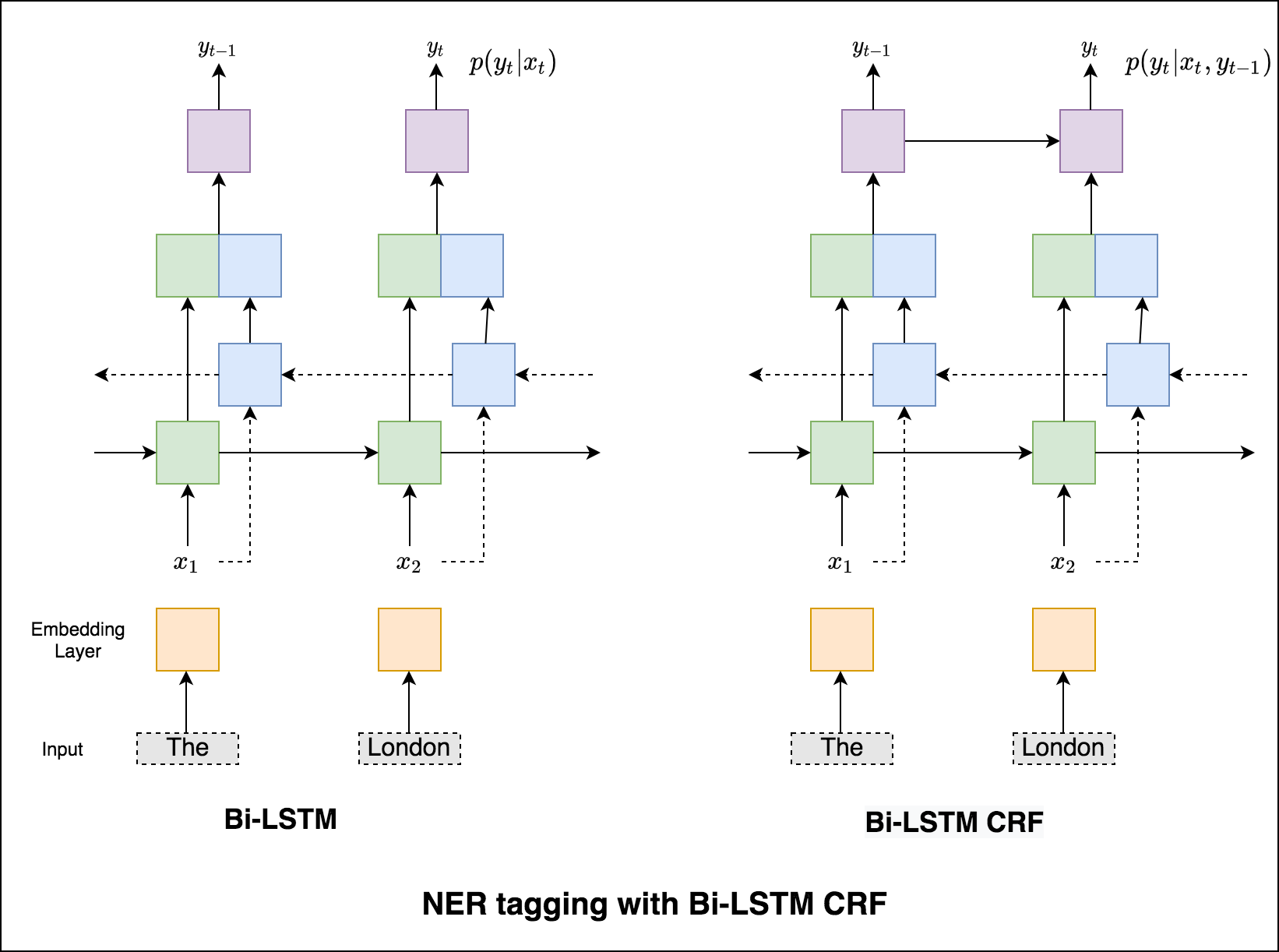

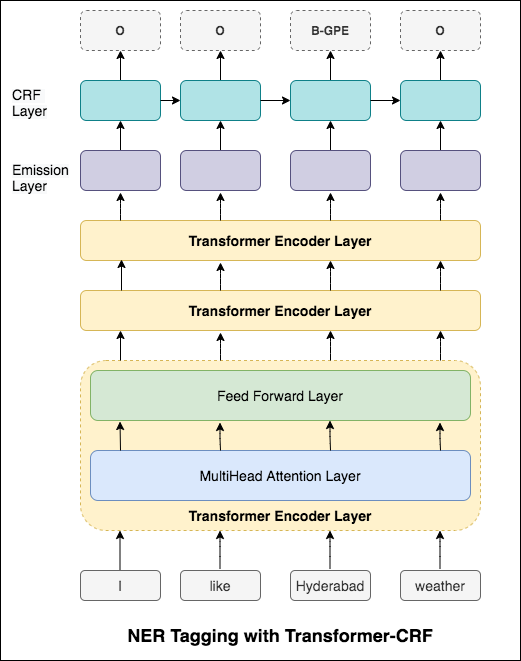

对于序列标记(NER),当前单词的标签可能取决于上一个单词的标签。 (例如:纽约)。

如果没有CRF,我们只会使用单个线性层将双向LSTM的输出转换为每个标签的分数。这些被称为emission scores ,这是单词为某个标签的可能性的表示。

CRF不仅计算排放得分,而且还计算transition scores ,这是一个单词是一个标签的可能性,因为上一个单词是某个标签。因此,过渡分数衡量从一个标签过渡到另一个标签的可能性。

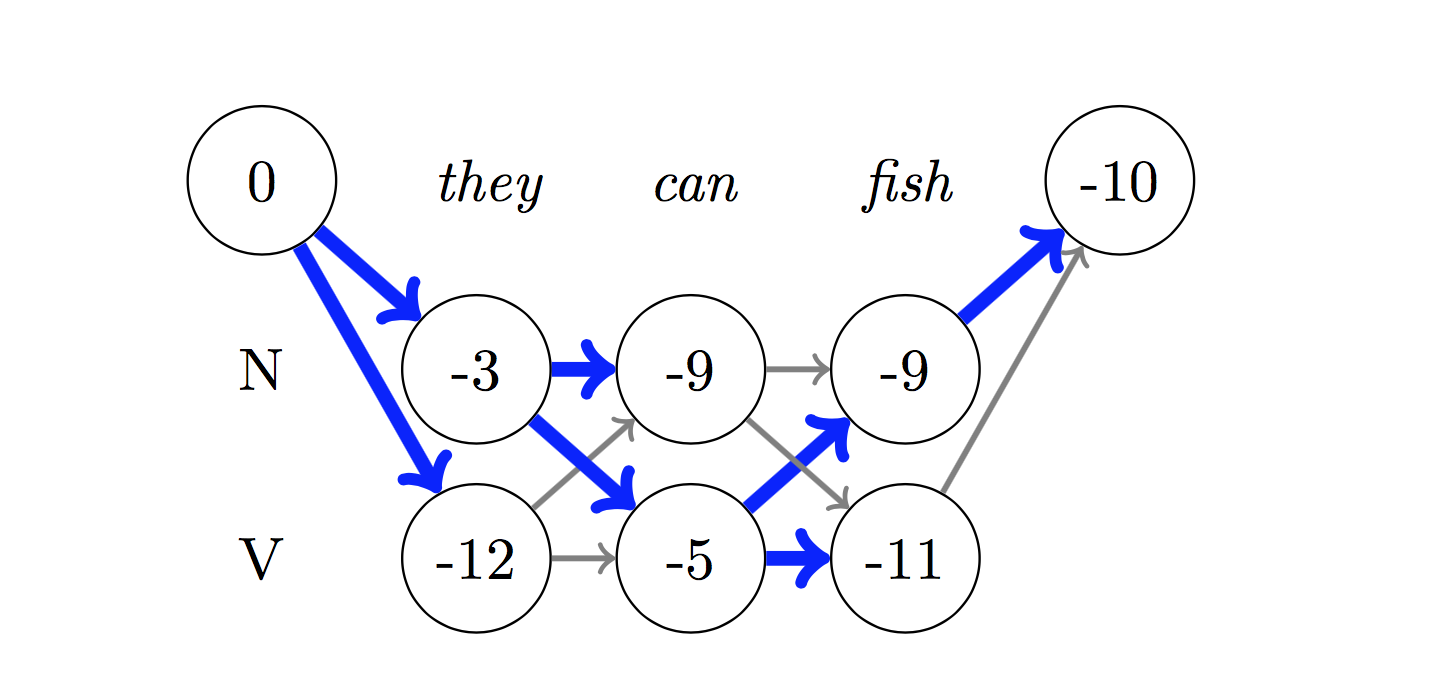

对于解码,使用Viterbi算法。

由于我们使用的是CRF,因此我们并没有在每个单词上预测正确的标签,而是我们预测单词序列的正确标签序列。 Viterbi解码是一种准确做到这一点的方法 - 从条件随机场计算出的分数中找到最佳的标签序列。

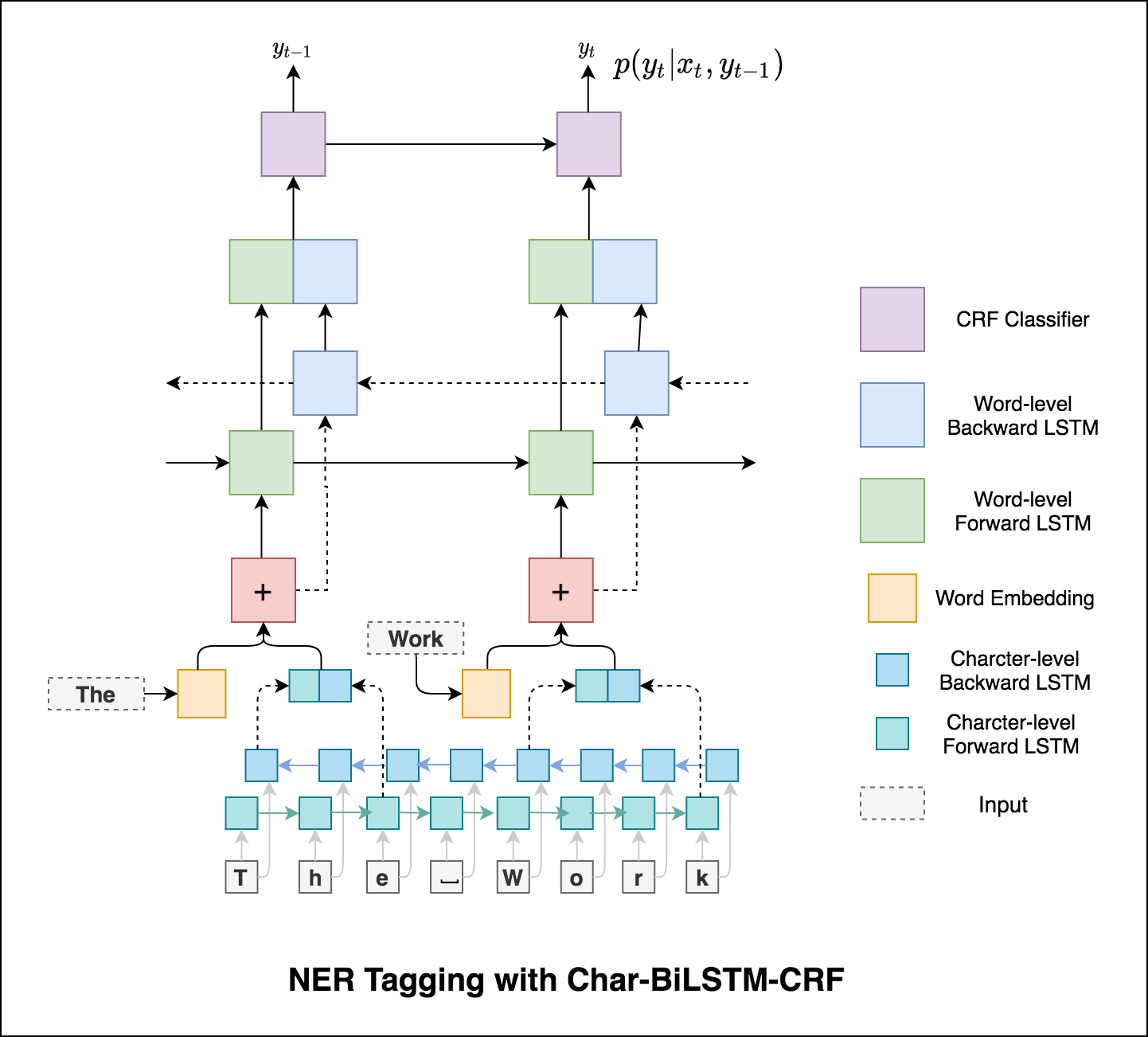

在我们的标记任务中使用子字信息,因为它可以是标签的有力指标,无论它们是语音的一部分还是实体的一部分。例如,它可能会学会形容词通常以“ -y”或“ -ul”结尾,或者通常以“ -land”或“ -burg”结尾。

因此,我们的序列标记模型都使用

word-level信息。character-level信息直至两个方向上都包含每个单词。

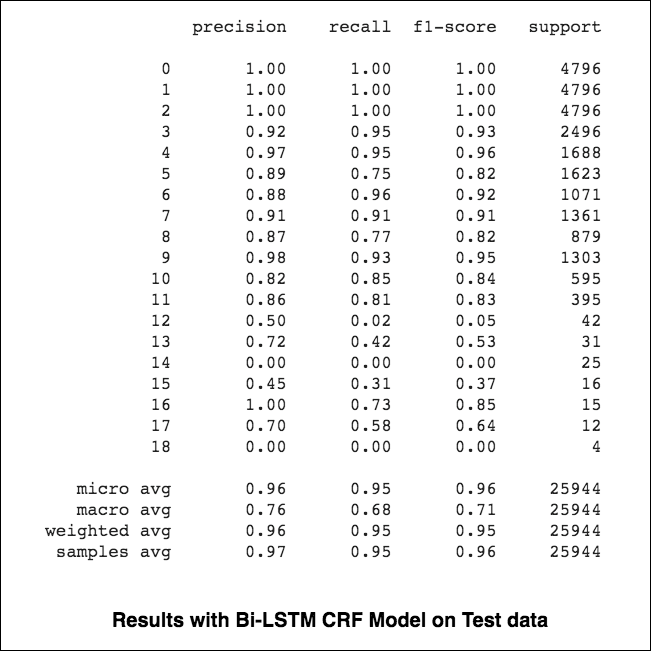

微观和宏观大小(对于任何指标)将计算略有不同的事物,因此它们的解释有所不同。宏观平均水平将独立计算每个类别的度量,然后取平均值(因此平均处理所有类),而微平均水平将汇总所有类以计算平均度量的贡献。在多级分类设置中,如果您怀疑可能存在类不平衡,那么微平均水平是可取的(即,与其他类别相比,您可能有更多的类示例)。

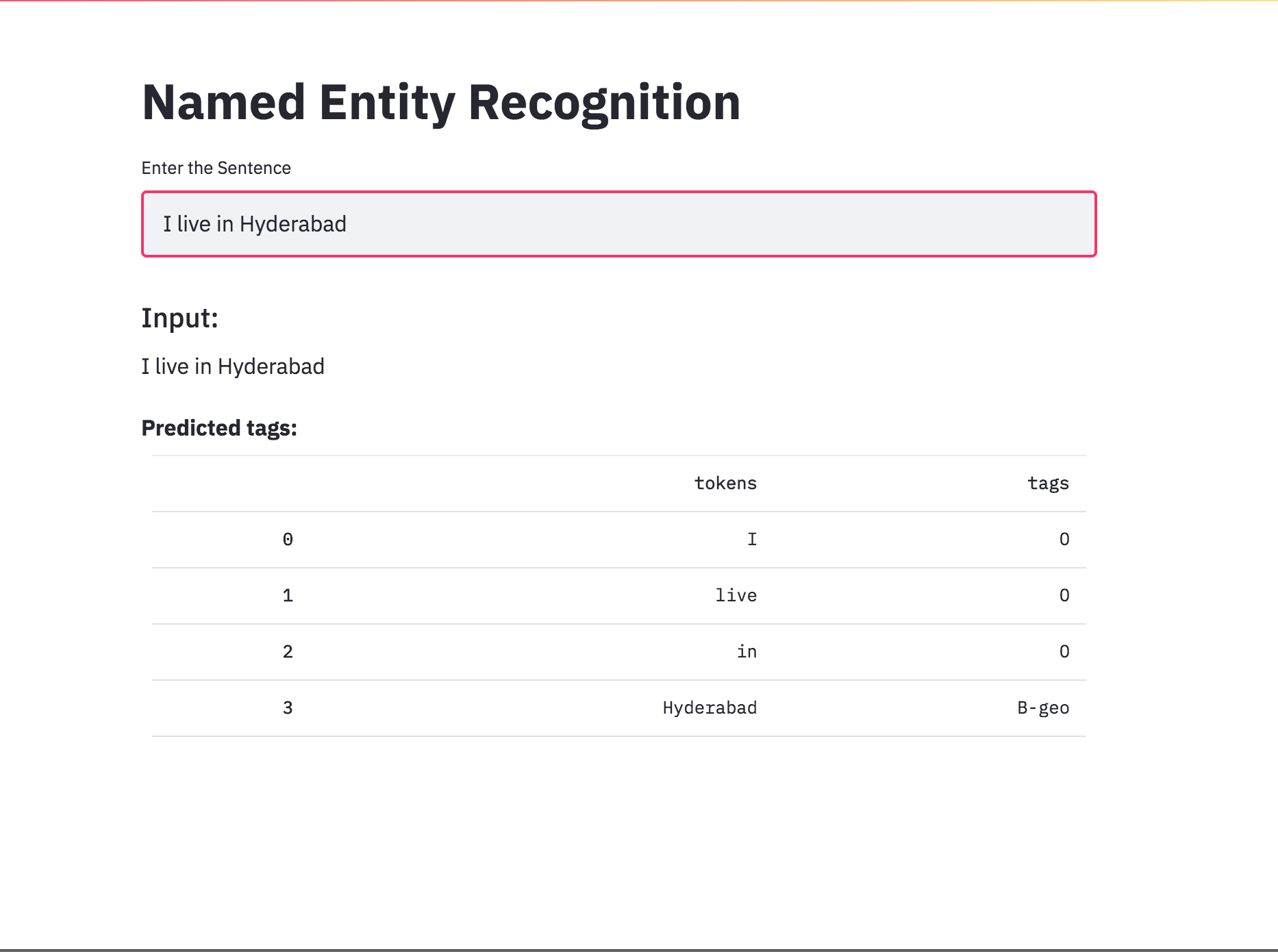

使用简化将NER标记转换为应用程序。预训练模型(CHAR-BILSTM-CRF)现已上市。

尝试RNN方法后,探索了使用基于变压器的体系结构的NER标记。由于变压器同时包含编码器和解码器,并且对于序列标记任务,仅Encoder就足够了。使用了三层变压器编码器模型。

尝试使用变压器编码器进行NER标记后,探索了使用预训练的bert-base-cased模型的NER标记。

与NER标记任务中的BilstM相比,仅具有变压器并不能给出良好的结果。与独立变压器相比,实现了变压器顶部上的CRF层,这正在改善结果。

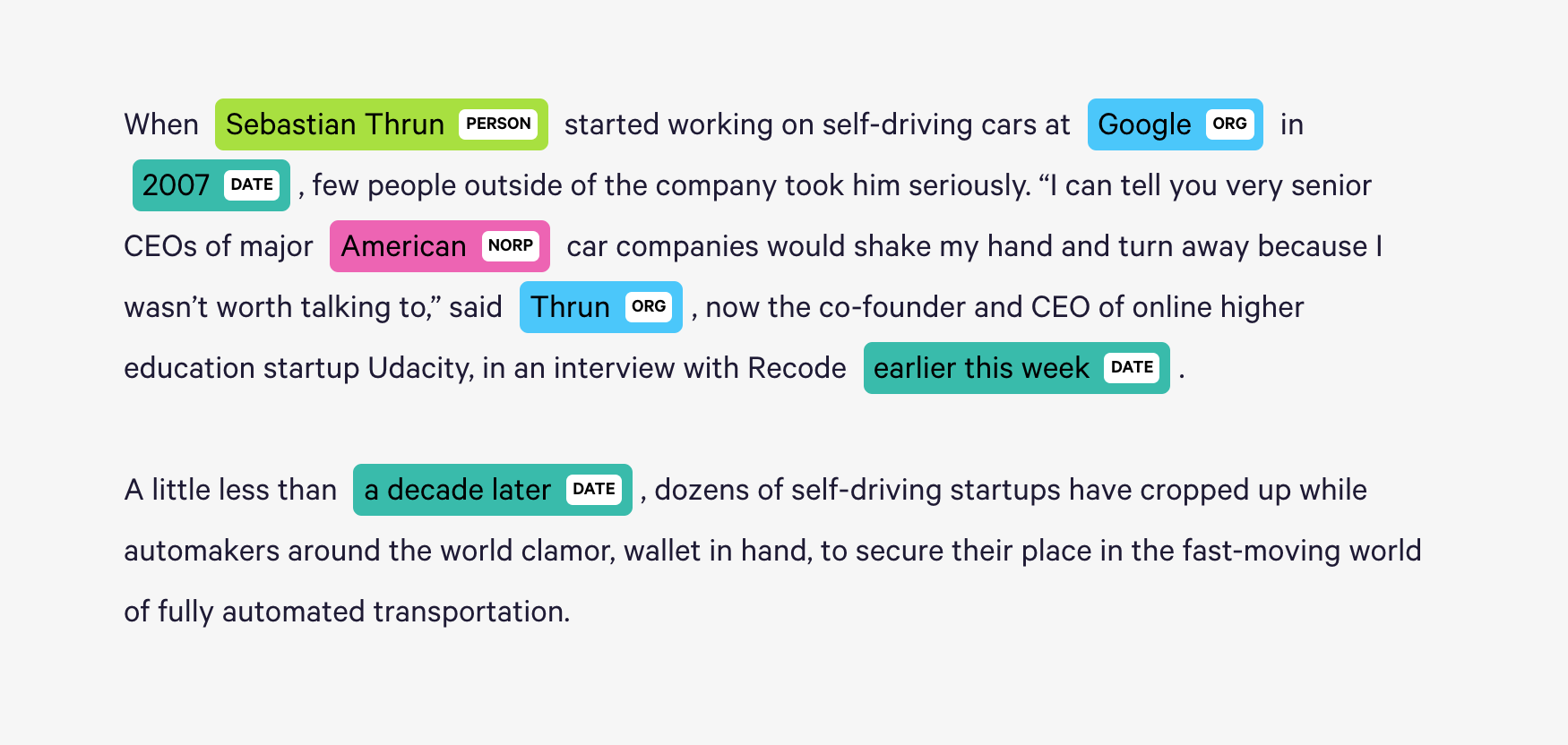

Spacy在Python中为NER提供了一个非常有效的统计系统,该系统可以将标签分配给令牌组。它提供了一个默认模型,该模型可以识别广泛的命名或数值实体,包括人,组织,语言,事件等。

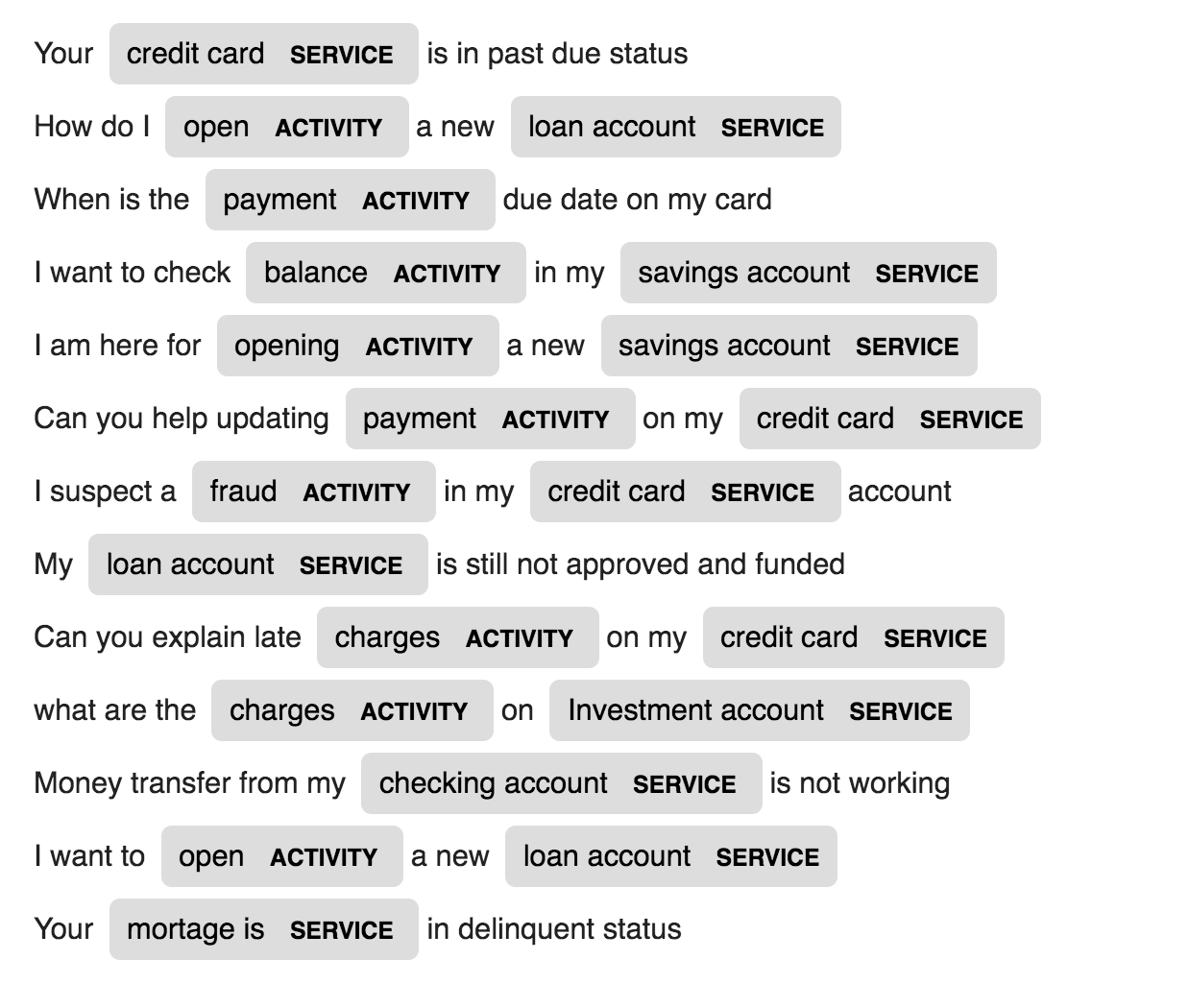

除了这些默认实体外,Spacy还通过训练模型以更新的训练有素的示例对其进行更新,还使我们可以自由地向NER模型添加任意类别。

在特定领域数据(银行)中使用的2个称为ACTIVITY和SERVICE的新实体是由很少的培训样本创建和培训的。

| 名称生成 | 机器翻译 | 话语产生 |

| 图像字幕 | 图像字幕 - 乳胶方程 | 新闻摘要 |

| 电子邮件主题生成 |

使用字符级LSTM语言模型。也就是说,我们将为LSTM提供大量名称,并要求它对下一个字符的下一个字符的概率分布进行建模给定以前的字符的顺序。然后,这将使我们一次生成一个新名称一个字符

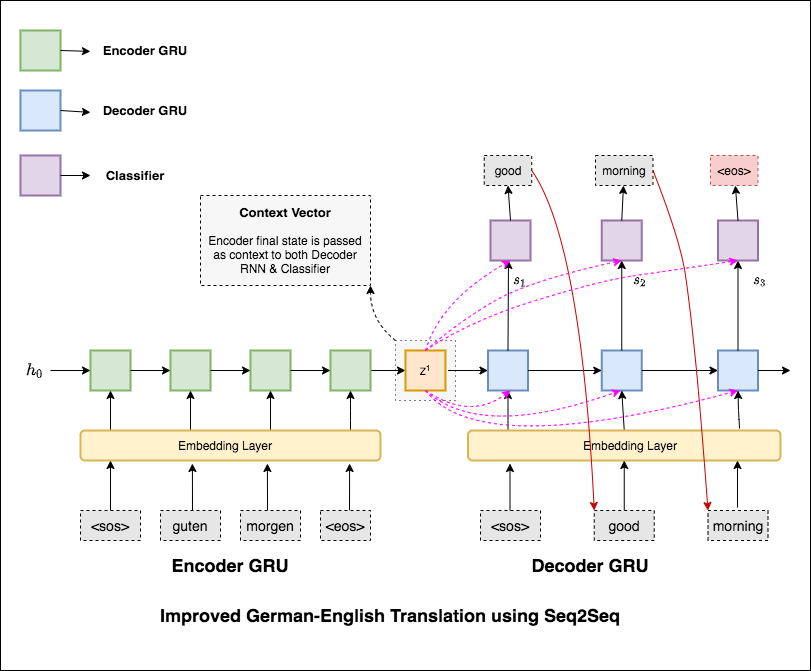

机器翻译(MT)是将一种自然语言自动转换为另一种自然语言,保留输入文本的含义并以输出语言产生流利的文本的任务。理想情况下,源语言序列被转化为目标语言序列。任务是将句子从German to English 。

探索了以下变体:

最常见的序列到序列(SEQ2SEQ)模型是编码器模型,通常使用经常性神经网络(RNN)将源(输入)句子编码为单个向量。在此笔记本中,我们将此单个向量称为上下文向量。我们可以将上下文向量视为整个输入句子的抽象表示。然后,该向量由第二个rnn解码,该载体通过一次生成一个单词来学习输出目标(输出)句子。

在尝试具有文本困惑36.68的基本机器翻译后,已经进行了以下技术并进行了测试困惑7.041 。

在applications/generation文件夹中查看代码

注意机制诞生是为了帮助记住神经机器翻译(NMT)中的长源句子。与其从编码器的最后一个隐藏状态中构建单个上下文向量,不如在解码句子时更多地专注于输入的相关部分。上下文向量将通过取编码器输出和解码器RNN的previous hidden state创建。

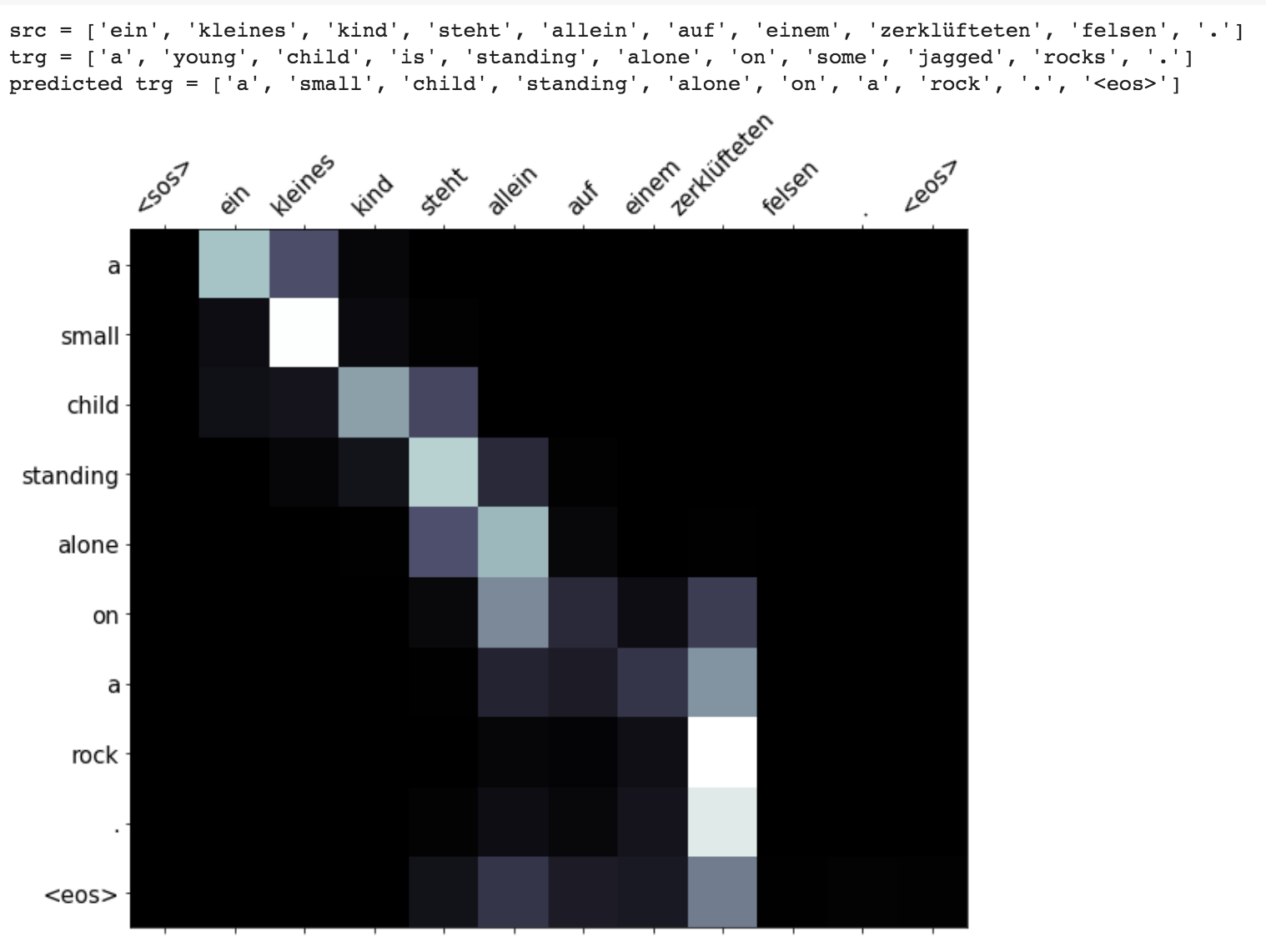

诸如掩盖(忽略填充输入的注意力),包装填充序列(以更好地计算),注意力可视化和测试数据上的BLEU度量等增强功能。

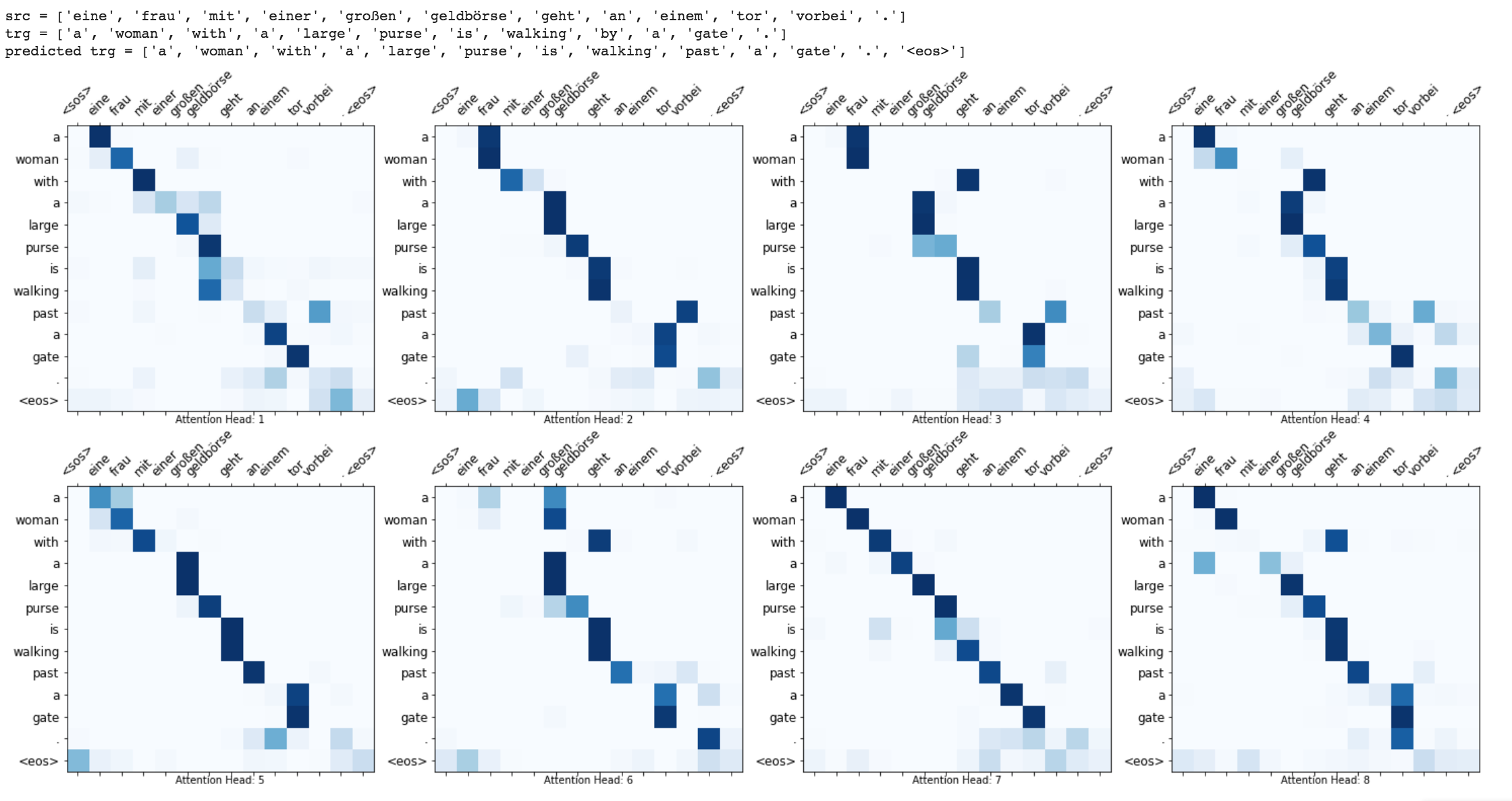

变压器,一种模型体系结构避免了复发,而完全依靠注意力机制来吸引输入和输出之间的全局依赖性,以从德语到英语进行机器翻译

为基于变压器的机器翻译模型添加了运行时间翻译(推理)和注意力可视化。

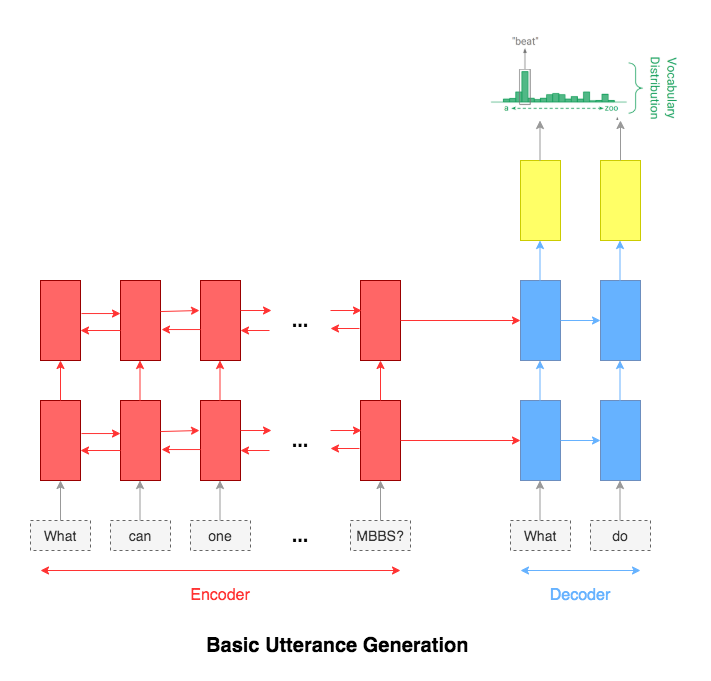

在NLP中,尤其是在问题回答,信息检索,信息提取,对话系统中,发言人的生成是一个重要的问题。它也可用于为许多NLP问题创建合成培训数据。

探索了以下变体:

The most common used model for this kind of application is sequence-to-sequence network. A basic 2 layer LSTM was used.

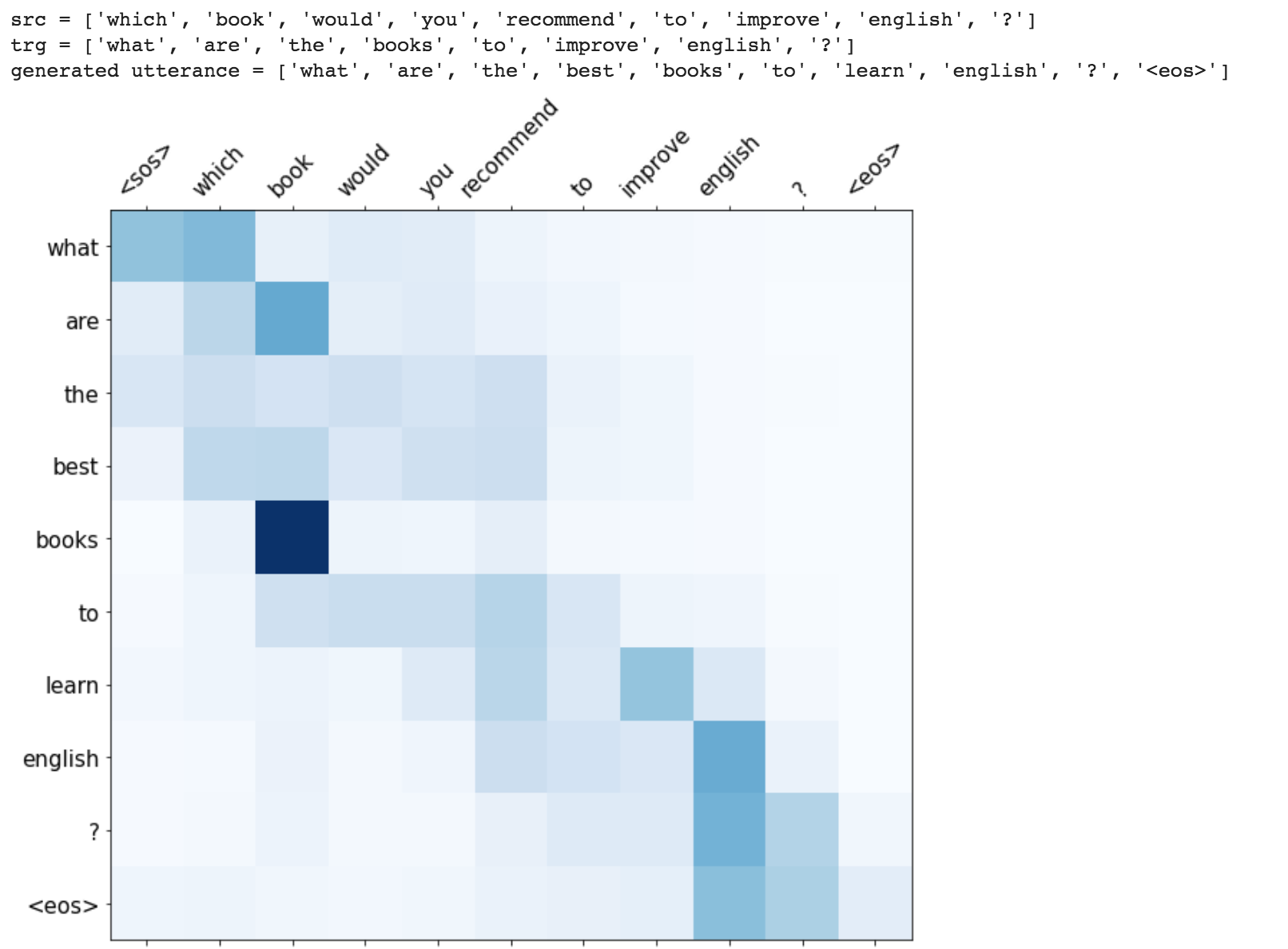

The attention mechanism will help in memorizing long sentences. Rather than building a single context vector out of the encoder's last hidden state, attention is used to focus more on the relevant parts of the input while decoding a sentence. The context vector will be created by taking encoder outputs and the hidden state of the decoder rnn.

After trying the basic LSTM apporach, Utterance generation with attention mechanism was implemented. Inference (run time generation) was also implemented.

While generating the a word in the utterance, decoder will attend over encoder inputs to find the most relevant word. This process can be visualized.

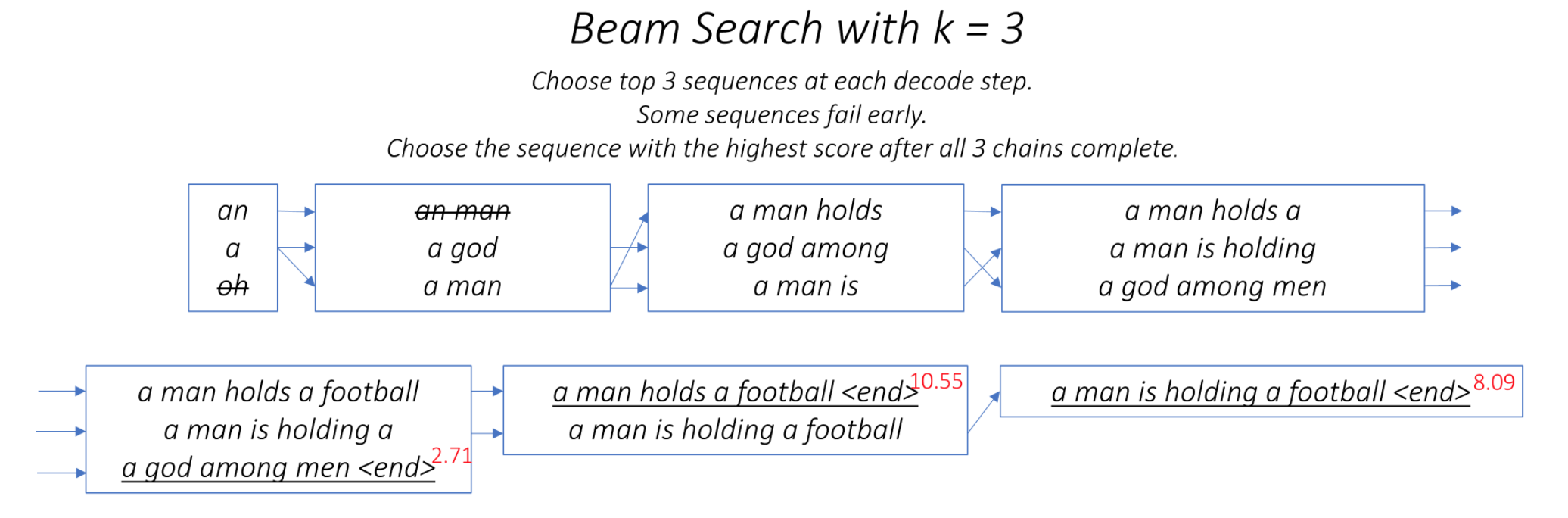

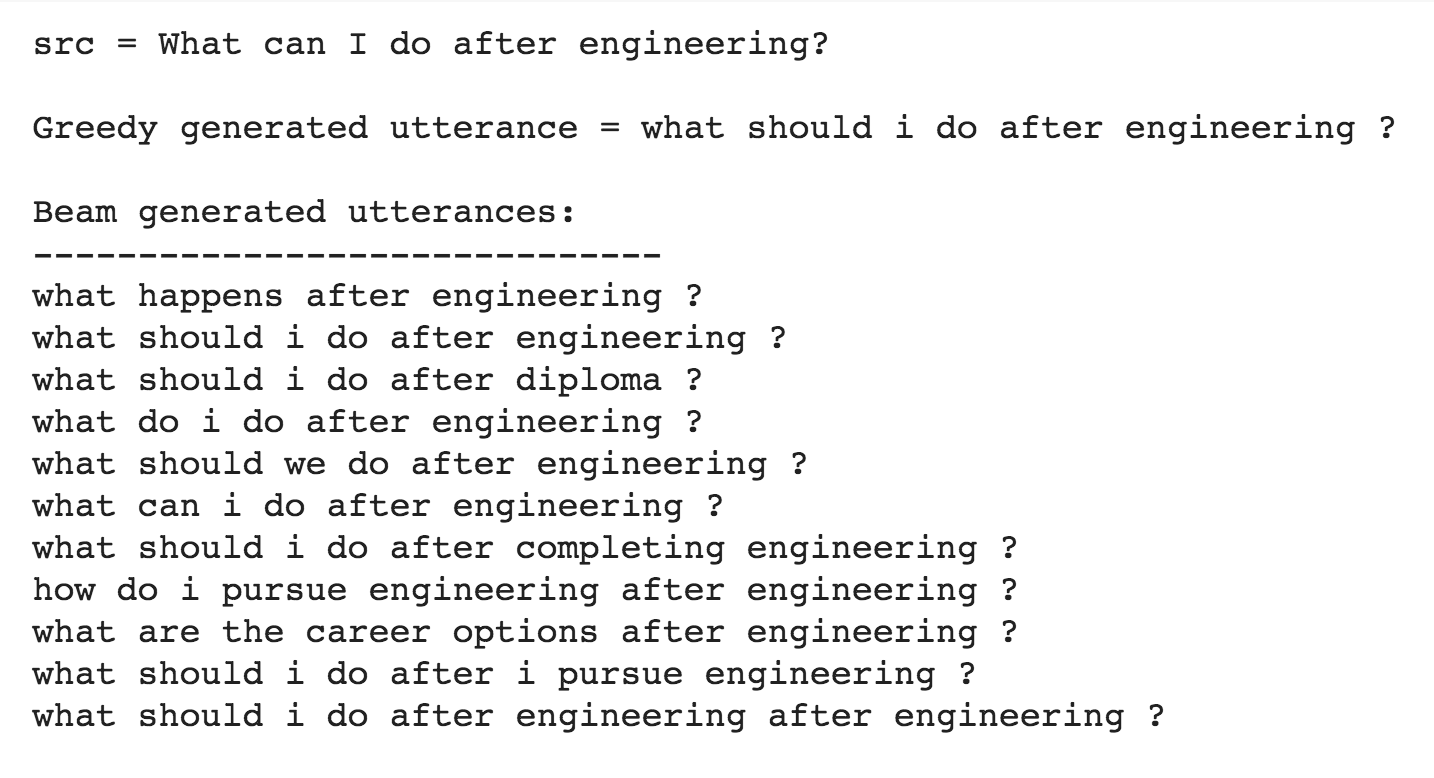

One of the ways to mitigate the repetition in the generation of utterances is to use Beam Search. By choosing the top-scored word at each step (greedy) may lead to a sub-optimal solution but by choosing a lower scored word that may reach an optimal solution.

Instead of greedily choosing the most likely next step as the sequence is constructed, the beam search expands all possible next steps and keeps the k most likely, where k is a user-specified parameter and controls the number of beams or parallel searches through the sequence of probabilities.

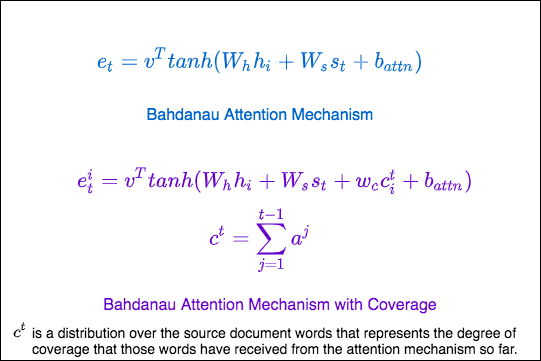

Repetition is a common problem for sequenceto-sequence models, and is especially pronounced when generating a multi-sentence text. In coverage model, we maintain a coverage vector c^t , which is the sum of attention distributions over all previous decoder timesteps

This ensures that the attention mechanism's current decision (choosing where to attend next) is informed by a reminder of its previous decisions (summarized in c^t). This should make it easier for the attention mechanism to avoid repeatedly attending to the same locations, and thus avoid generating repetitive text.

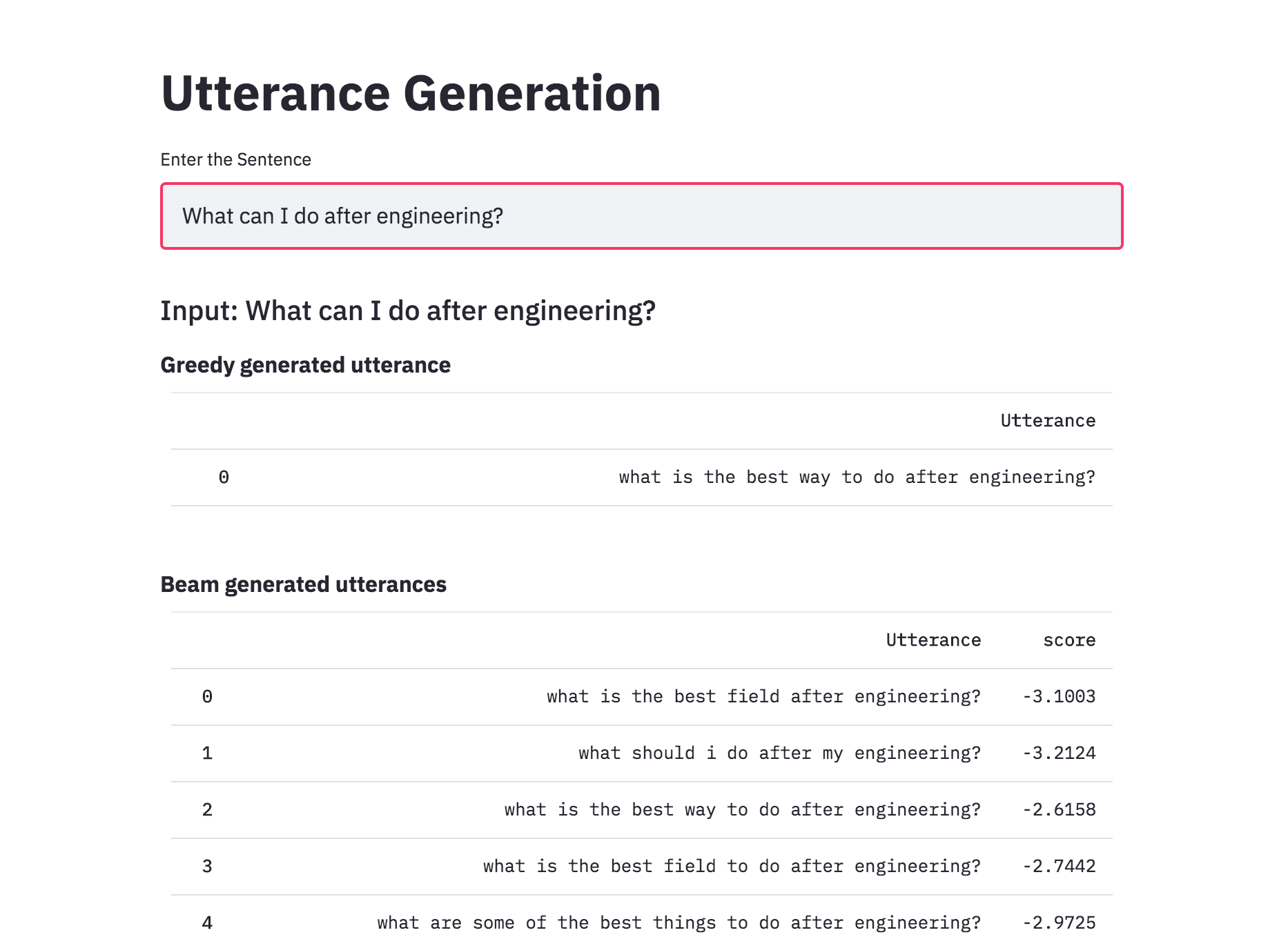

The Transformer, a model architecture eschewing recurrence and instead relying entirely on an attention mechanism to draw global dependencies between input and output is used to do generate utterance from a given sentence. The training time was also lot faster 4x times compared to RNN based architecture.

Added beam search to utterance generation with transformers. With beam search, the generated utterances are more diverse and can be more than 1 (which is the case of the greedy approach). This implemented was better than naive one implemented previously.

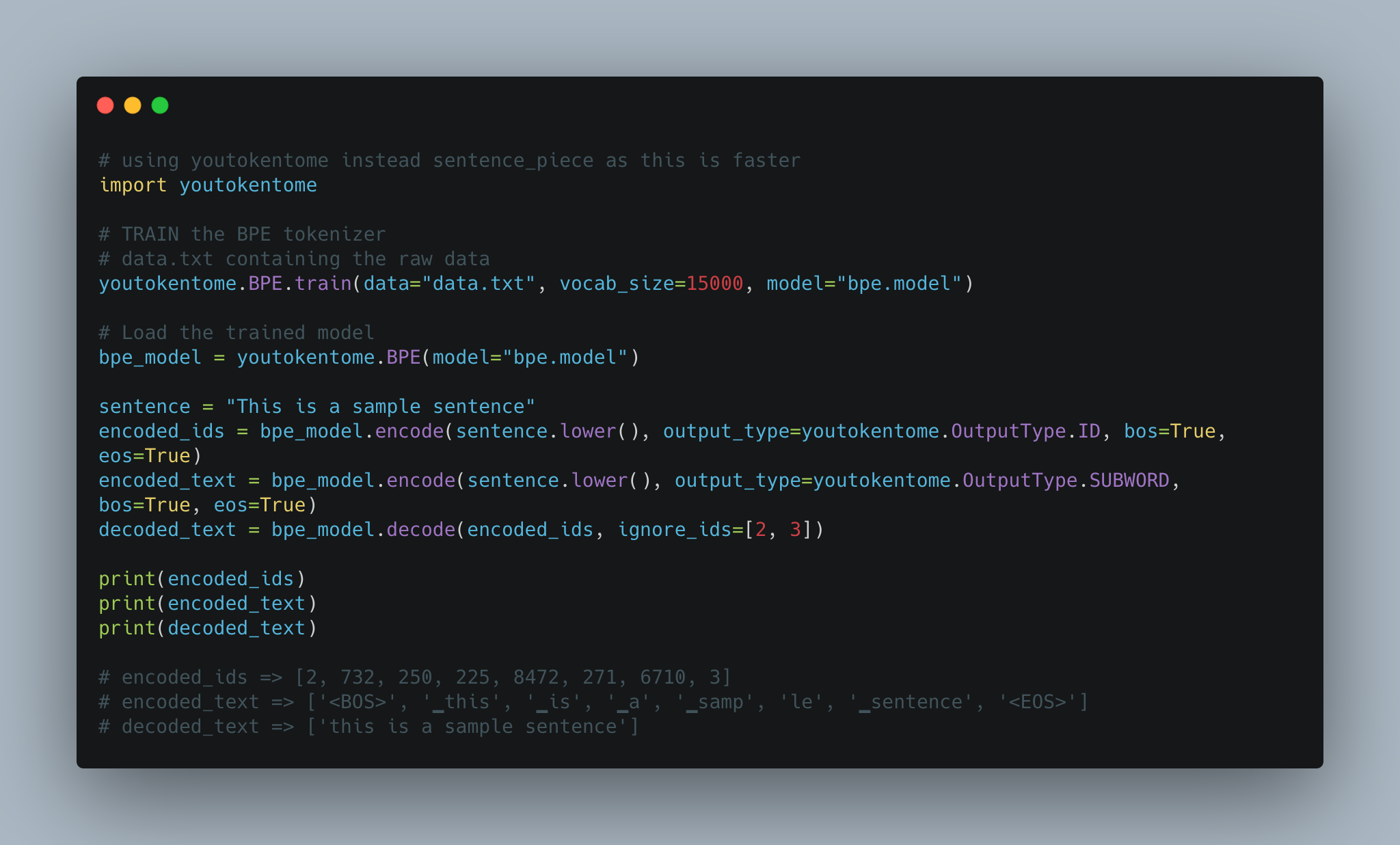

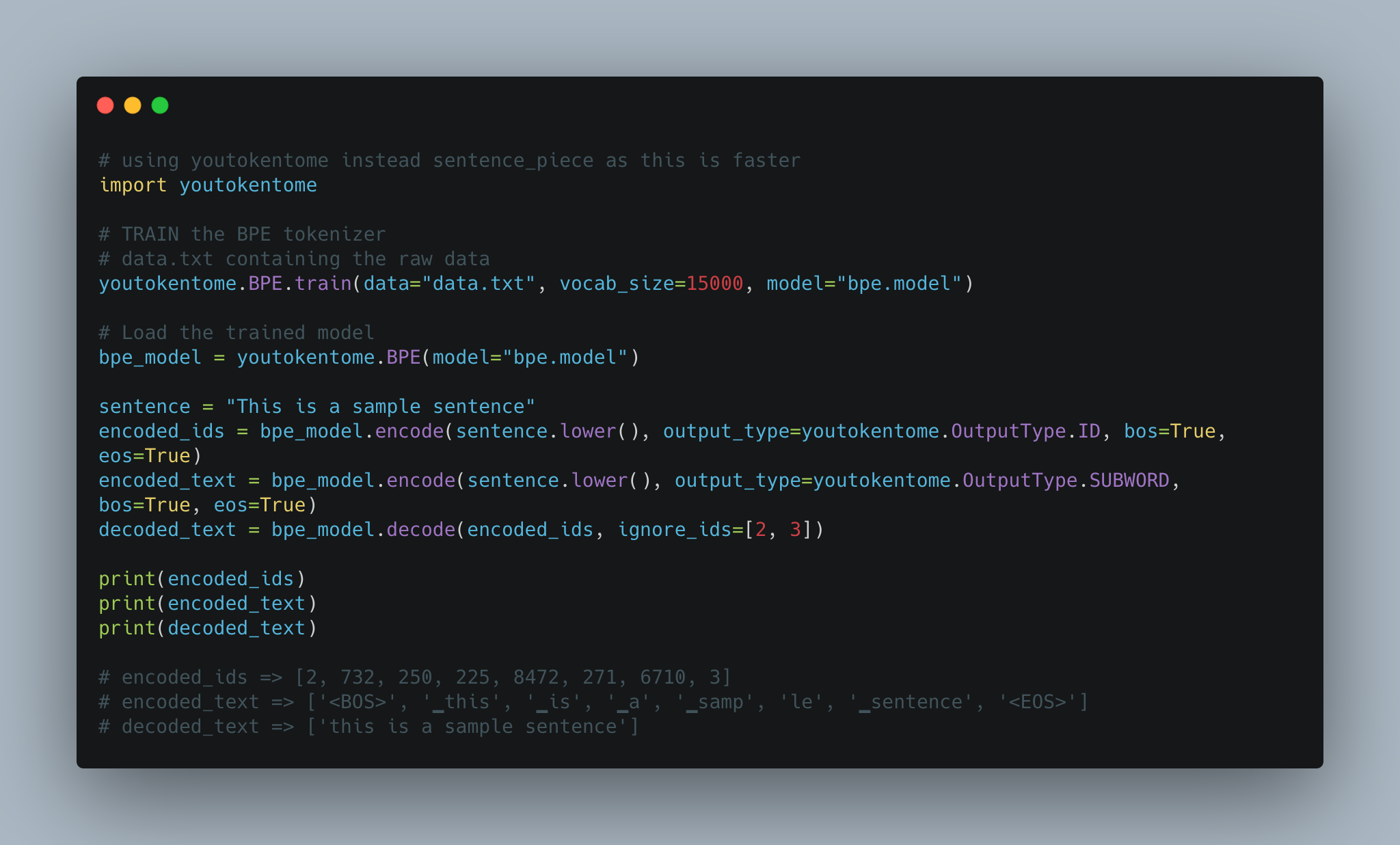

Utterance generation using BPE tokenization instead of Spacy is implemented.

Today, subword tokenization schemes inspired by BPE have become the norm in most advanced models including the very popular family of contextual language models like BERT, GPT-2,RoBERTa, etc.

BPE brings the perfect balance between character and word-level hybrid representations which makes it capable of managing large corpora. This behavior also enables the encoding of any rare words in the vocabulary with appropriate subword tokens without introducing any “unknown” tokens.

Converted the Utterance Generation into an app using streamlit. The pre-trained model trained on the Quora dataset is available now.

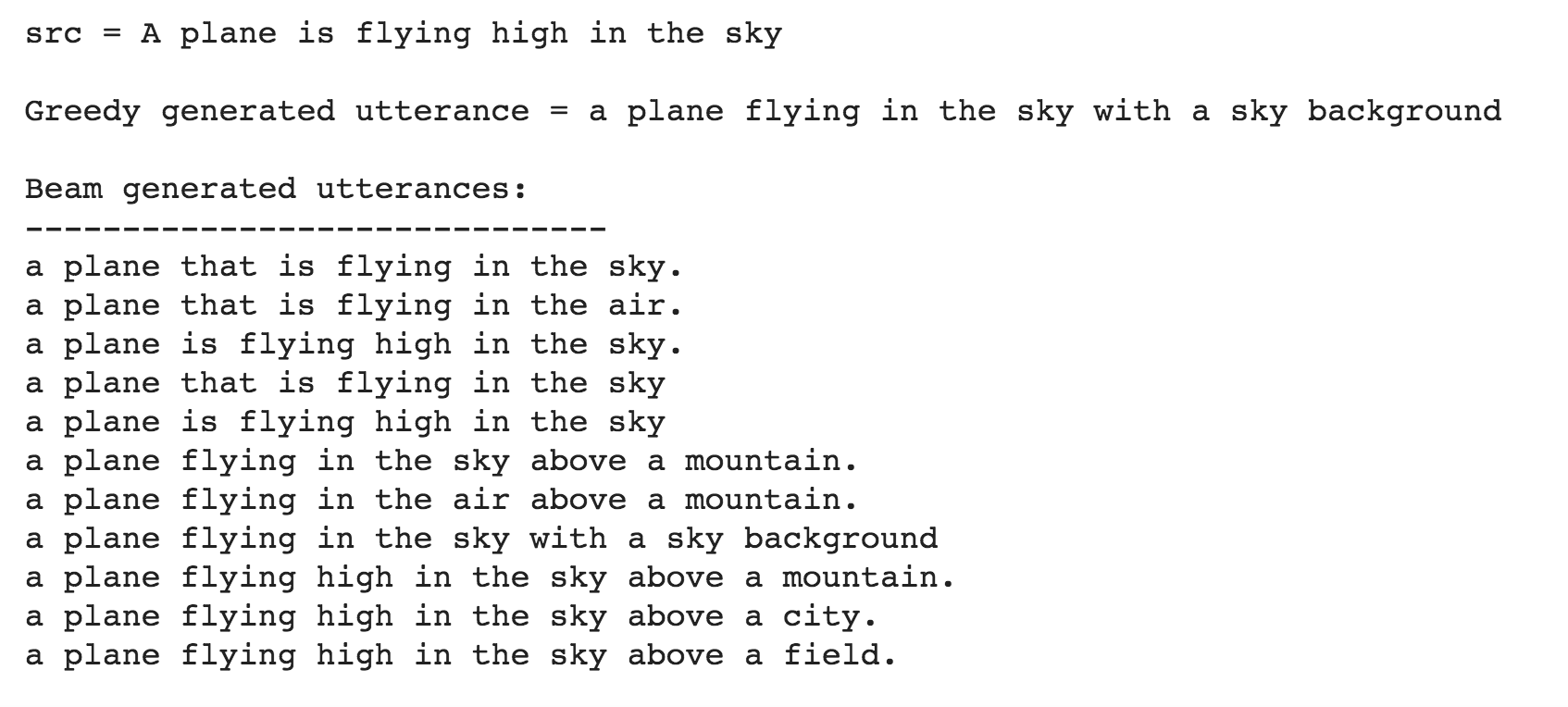

Till now the Utterance Generation is trained using the Quora Question Pairs dataset, which contains sentences in the form of questions. When given a normal sentence (which is not in a question format) the generated utterances are very poor. This is due the bias induced by the dataset. Since the model is only trained on question type sentences, it fails to generate utterances in case of normal sentences. In order to generate utterances for a normal sentence, COCO dataset is used to train the model.

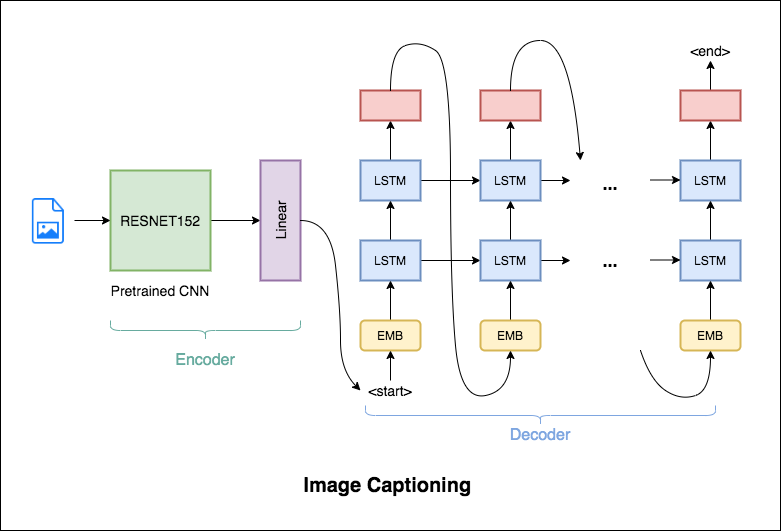

Image Captioning is the process of generating a textual description of an image. It uses both Natural Language Processing and Computer Vision techniques to generate the captions.

Flickr8K dataset is used. It contains 8092 images, each image having 5 captions.

Following varients have been explored:

The encoder-decoder framework is widely used for this task. The image encoder is a convolutional neural network (CNN). The decoder is a recurrent neural network(RNN) which takes in the encoded image and generates the caption.

In this notebook, the resnet-152 model pretrained on the ILSVRC-2012-CLS image classification dataset is used as the encoder. The decoder is a long short-term memory (LSTM) network.

In this notebook, the resnet-101 model pretrained on the ILSVRC-2012-CLS image classification dataset is used as the encoder. The decoder is a long short-term memory (LSTM) network. Attention is implemented. Instead of the simple average, we use the weighted average across all pixels, with the weights of the important pixels being greater. This weighted representation of the image can be concatenated with the previously generated word at each step to generate the next word of the caption.

Instead of greedily choosing the most likely next step as the caption is constructed, the beam search expands all possible next steps and keeps the k most likely, where k is a user-specified parameter and controls the number of beams or parallel searches through the sequence of probabilities.

Today, subword tokenization schemes inspired by BPE have become the norm in most advanced models including the very popular family of contextual language models like BERT, GPT-2,RoBERTa, etc.

BPE brings the perfect balance between character and word-level hybrid representations which makes it capable of managing large corpora. This behavior also enables the encoding of any rare words in the vocabulary with appropriate subword tokens without introducing any “unknown” tokens.

BPE was used in order to tokenize the captions instead of using nltk.

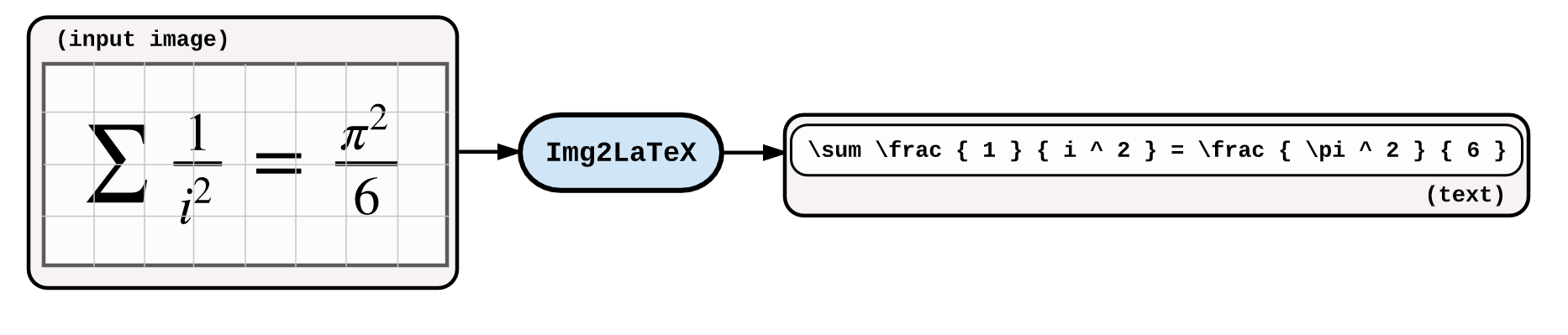

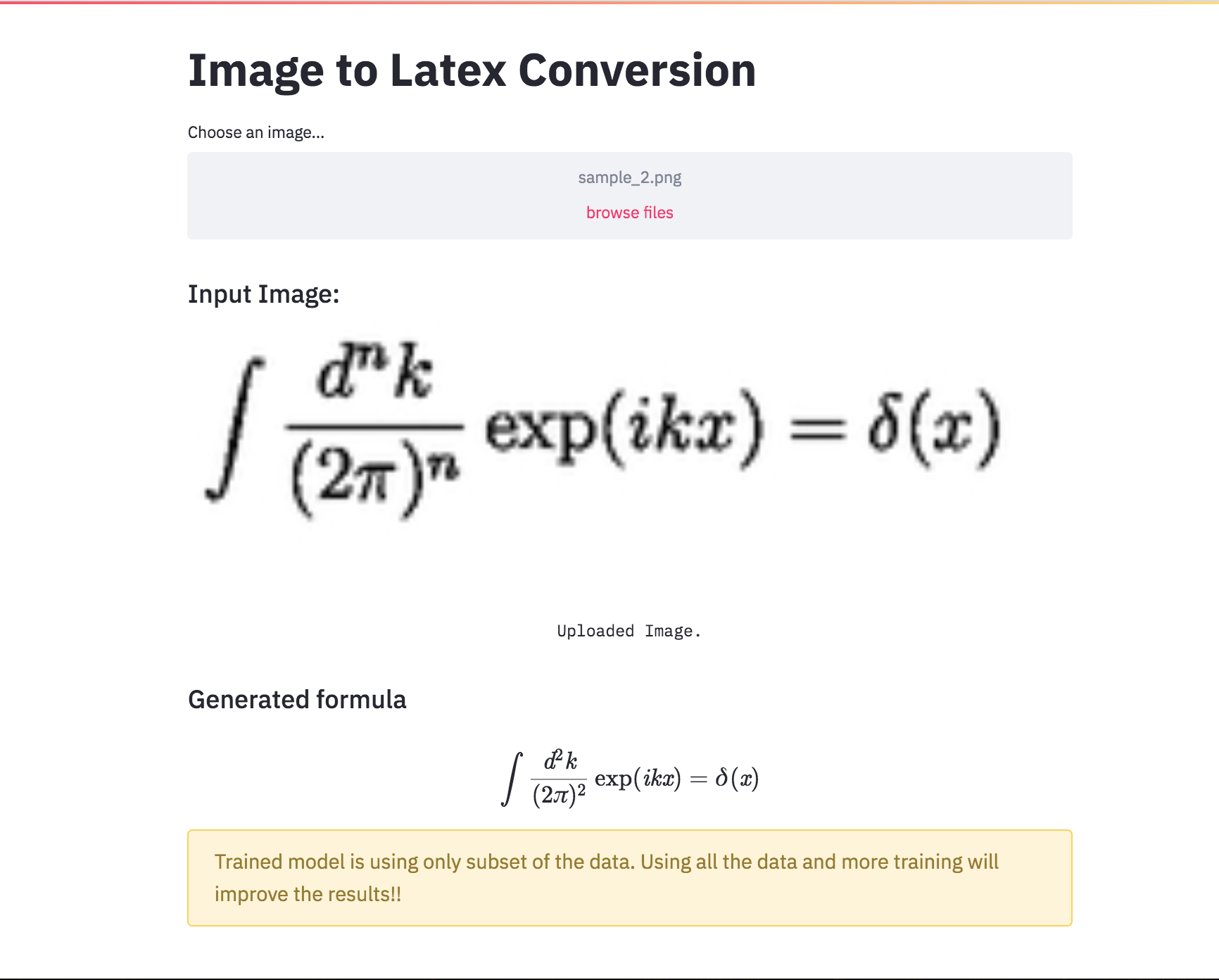

An application of image captioning is to convert the the equation present in the image to latex format.

Following varients have been explored:

An application of image captioning is to convert the the equation present in the image to latex format. Basic Sequence-to-Sequence models is used. CNN is used as encoder and RNN as decoder. Im2latex dataset is used. It contains 100K samples comprising of training, validation and test splits.

Generated formulas are not great. Following notebooks will explore techniques to improve it.

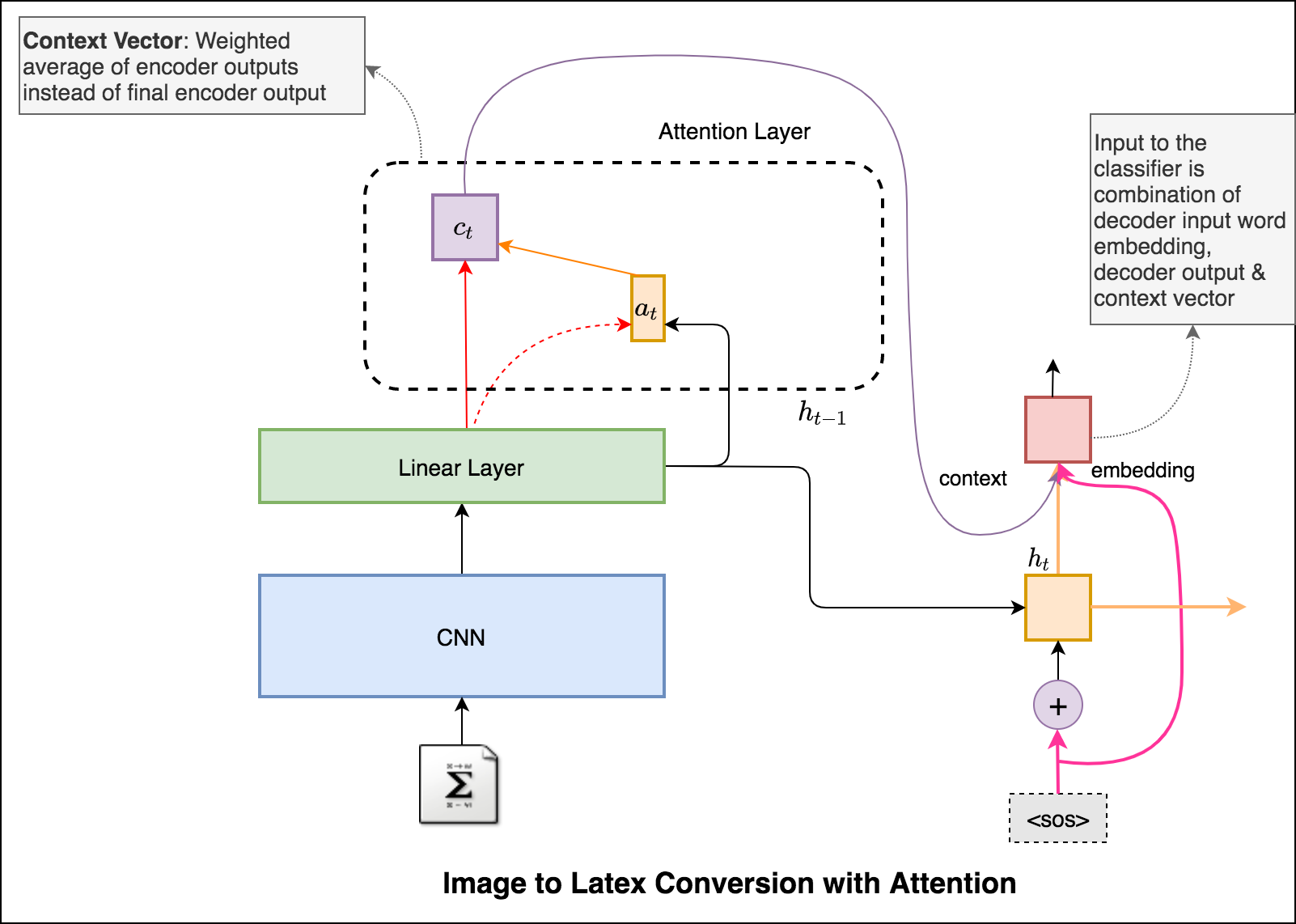

Latex code generation using the attention mechanism is implemented. Instead of the simple average, we use the weighted average across all pixels, with the weights of the important pixels being greater. This weighted representation of the image can be concatenated with the previously generated word at each step to generate the next word of the formula.

Added beam search in the decoding process. Also added Positional encoding to the input image and learning rate scheduler.

Converted the Latex formula generation into an app using streamlit.

Automatic text summarization is the task of producing a concise and fluent summary while preserving key information content and overall meaning. Have you come across the mobile app inshorts ? It's an innovative news app that converts news articles into a 60-word summary. And that is exactly what we are going to do in this notebook. The model used for this task is T5 .

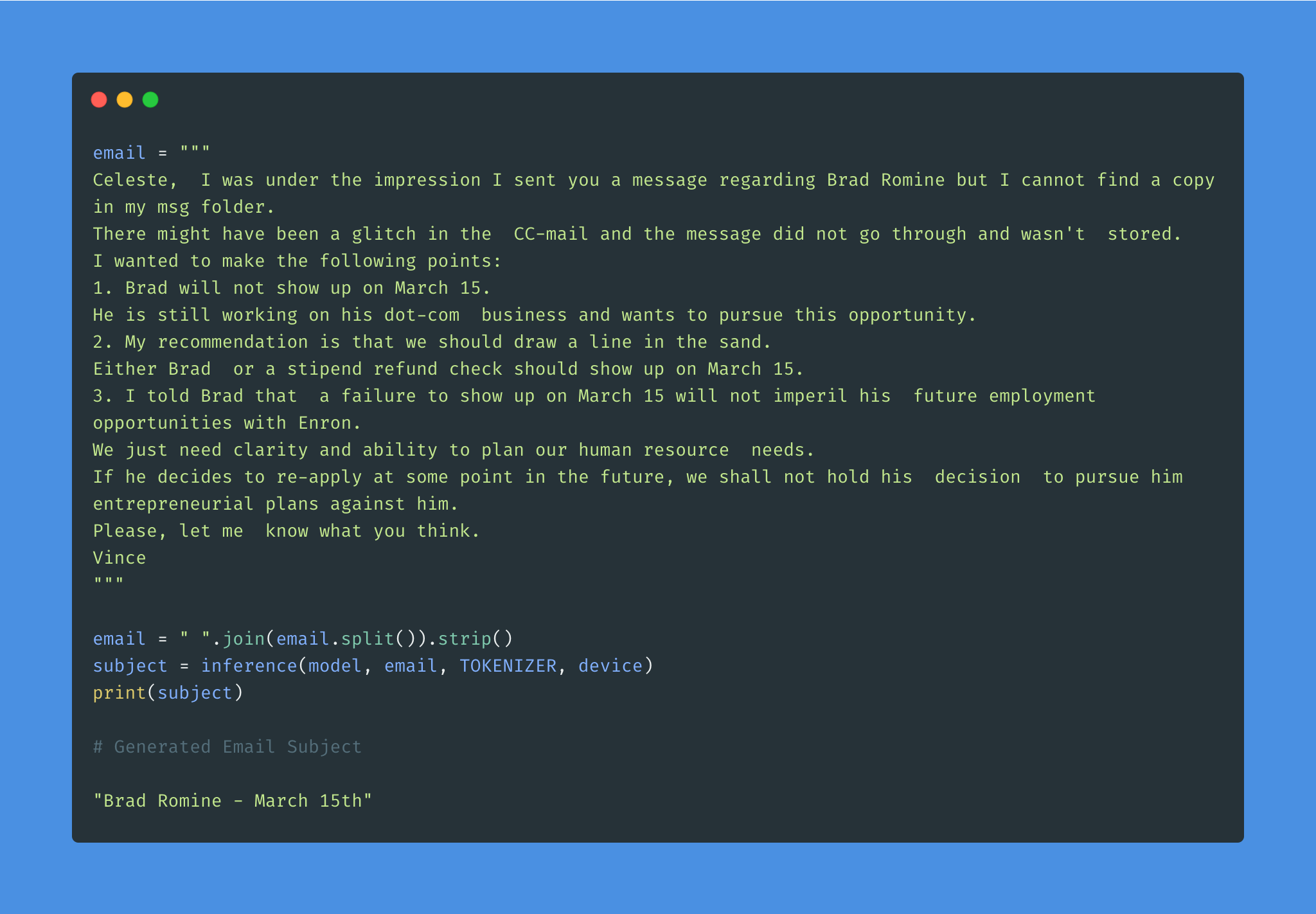

Given the overwhelming number of emails, an effective subject line becomes essential to better inform the recipient of the email's content.

Email subject generation using T5 model was explored. AESLC dataset was used for this purpose.

| Topic Identification in News | Covid Article finding |

Topic Identification is a Natural Language Processing (NLP) is the task to automatically extract meaning from texts by identifying recurrent themes or topics.

Following varients have been explored:

LDA's approach to topic modeling is it considers each document as a collection of topics in a certain proportion. And each topic as a collection of keywords, again, in a certain proportion.

Once you provide the algorithm with the number of topics, all it does it to rearrange the topics distribution within the documents and keywords distribution within the topics to obtain a good composition of topic-keywords distribution.

20 Newsgroup dataset was used and only the articles are provided to identify the topics. Topic Modelling algorithms will provide for each topic what are the important words. It is upto us to infer the topic name.

Choosing the number of topics is a difficult job in Topic Modelling. In order to choose the optimal number of topics, grid search is performed on various hypermeters. In order to choose the best model the model having the best perplexity score is choosed.

A good topic model will have non-overlapping, fairly big sized blobs for each topic.

We would clearly expect that the words that appear most frequently in one topic would appear less frequently in the other - otherwise that word wouldn't make a good choice to separate out the two topics. Therefore, we expect the topics to be orthogonal .

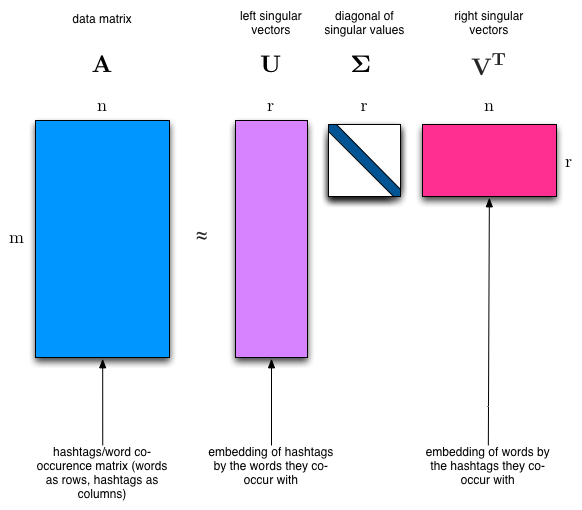

Latent Semantic Analysis (LSA) uses SVD. You will sometimes hear topic modelling referred to as LSA.

The SVD algorithm factorizes a matrix into one matrix with orthogonal columns and one with orthogonal rows (along with a diagonal matrix, which contains the relative importance of each factor).

笔记:

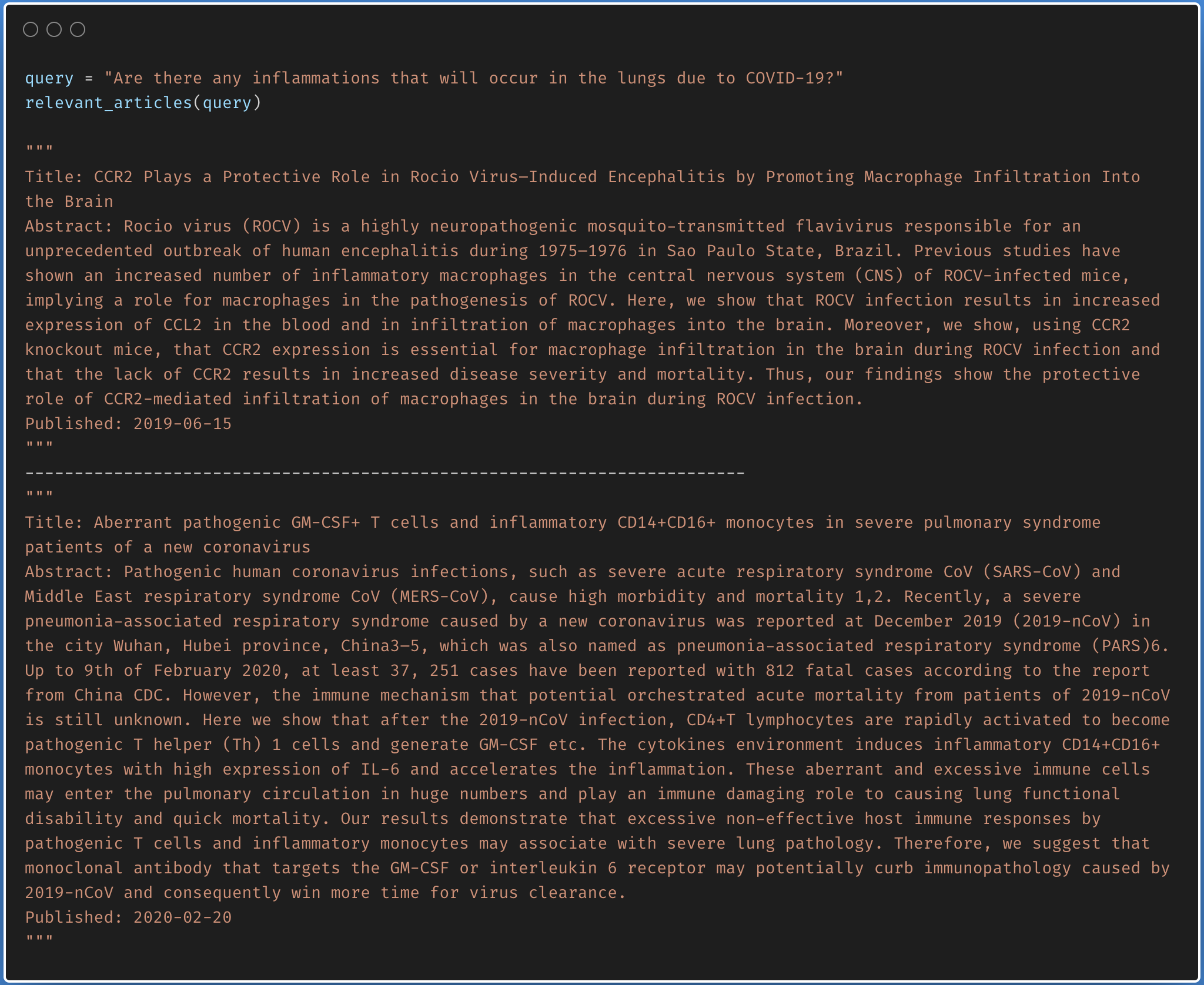

Finding the relevant article from a covid-19 research article corpus of 50K+ documents using LDA is explored.

The documents are first clustered into different topics using LDA. For a given query, dominant topic will be found using the trained LDA. Once the topic is found, most relevant articles will be fetched using the jensenshannon distance.

Only abstracts are used for the LDA model training. LDA model was trained using 35 topics.

| Factual Question Answering | Visual Question Answering | Boolean Question Answering |

| Closed Question Answering |

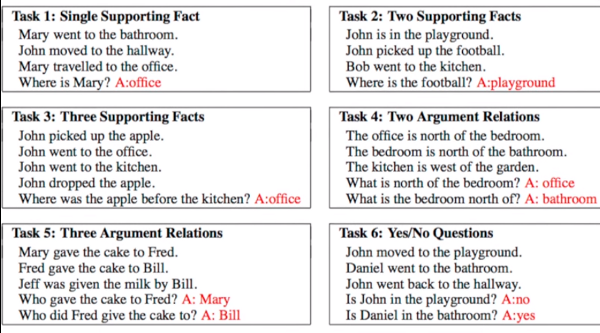

Given a set of facts, question concering them needs to be answered. Dataset used is bAbI which has 20 tasks with an amalgamation of inputs, queries and answers. See the following figure for sample.

Following varients have been explored:

Dynamic Memory Network (DMN) is a neural network architecture which processes input sequences and questions, forms episodic memories, and generates relevant answers.

The main difference between DMN+ and DMN is the improved InputModule for calculating the facts from input sentences keeping in mind the exchange of information between input sentences using a Bidirectional GRU and a improved version of MemoryModule using Attention based GRU model.

Visual Question Answering (VQA) is the task of given an image and a natural language question about the image, the task is to provide an accurate natural language answer.

Following varients have been explored:

The model uses a two layer LSTM to encode the questions and the last hidden layer of VGGNet to encode the images. The image features are then l_2 normalized. Both the question and image features are transformed to a common space and fused via element-wise multiplication, which is then passed through a fully connected layer followed by a softmax layer to obtain a distribution over answers.

To apply the DMN to visual question answering, input module is modified for images. The module splits an image into small local regions and considers each region equivalent to a sentence in the input module for text.

The input module for VQA is composed of three parts, illustrated in below fig:

Boolean question answering is to answer whether the question has answer present in the given context or not. The BoolQ dataset contains the queries for complex, non-factoid information, and require difficult entailment-like inference to solve.

Stanford Question Answering Dataset (SQuAD) is a reading comprehension dataset, consisting of questions posed by crowdworkers on a set of Wikipedia articles, where the answer to every question is a segment of text, or span, from the corresponding reading passage

Following varients have been explored:

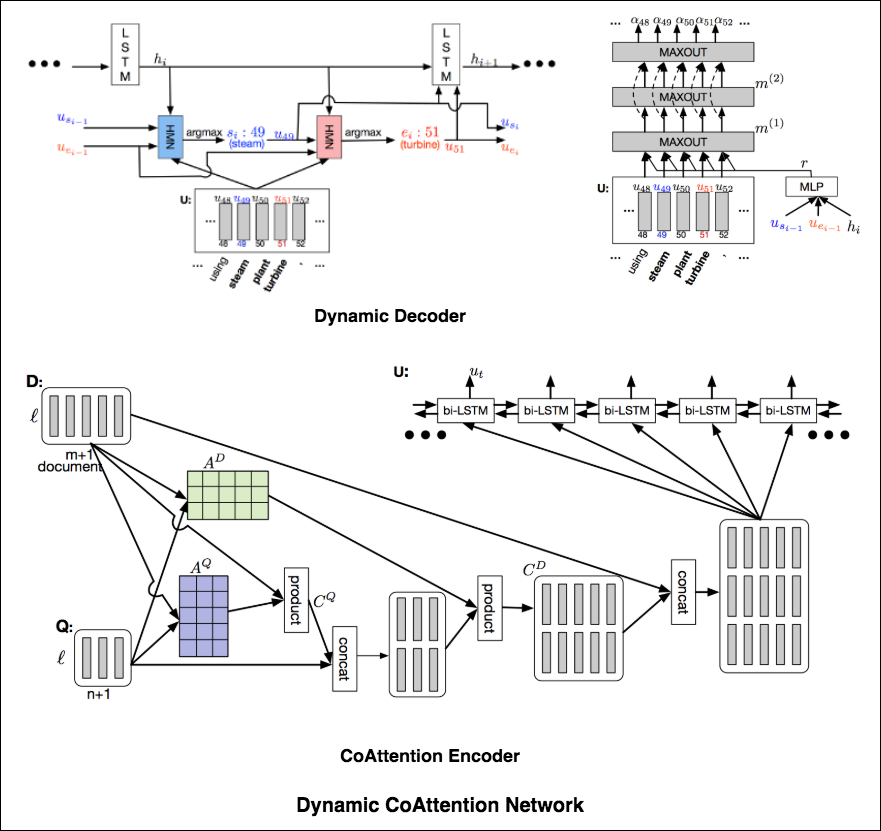

The DCN first fuses co-dependent representations of the question and the document in order to focus on relevant parts of both. Then a dynamic pointing decoder iterates over potential answer spans. This iterative procedure enables the model to recover from initial local maxima corresponding to incorrect answers.

The Dynamic Coattention Network has two major parts: a coattention encoder and a dynamic decoder.

CoAttention Encoder : The model first encodes the given document and question separately via the document and question encoder. The document and question encoders are essentially a one-directional LSTM network with one layer. Then it passes both the document and question encodings to another encoder which computes the coattention via matrix multiplications and outputs the coattention encoding from another bidirectional LSTM network.

Dynamic Decoder : Dynamic decoder is also a one-directional LSTM network with one layer. The model runs the LSTM network through several iterations . In each iteration, the LSTM takes in the final hidden state of the LSTM and the start and end word embeddings of the answer in the last iteration and outputs a new hidden state. Then, the model uses a Highway Maxout Network (HMN) to compute the new start and end word embeddings of the answer in each iteration.

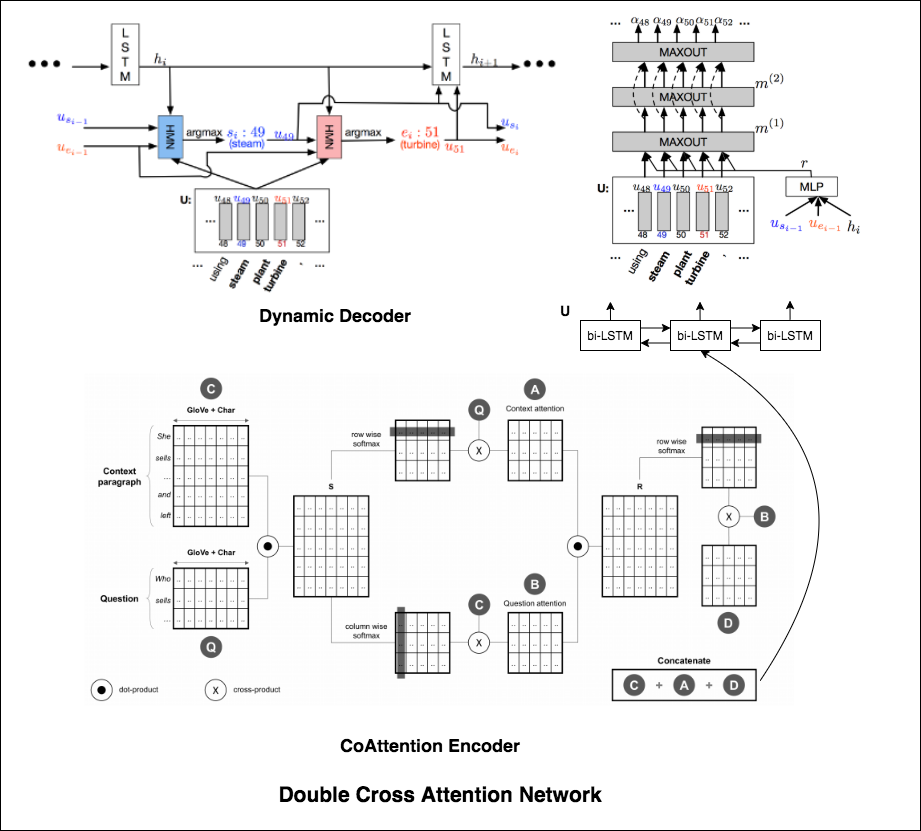

Double Cross Attention (DCA) seems to provide better results compared to both BiDAF and Dynamic Co-Attention Network (DCN). The motivation behind this approach is that first we pay attention to each context and question and then we attend those attentions with respect to each other in a slightly similar way as DCN. The intuition is that if iteratively read/attend both context and question, it should help us to search for answers easily.

I have augmented the Dynamic Decoder part from DCN model in-order to have iterative decoding process which helps finding better answer.

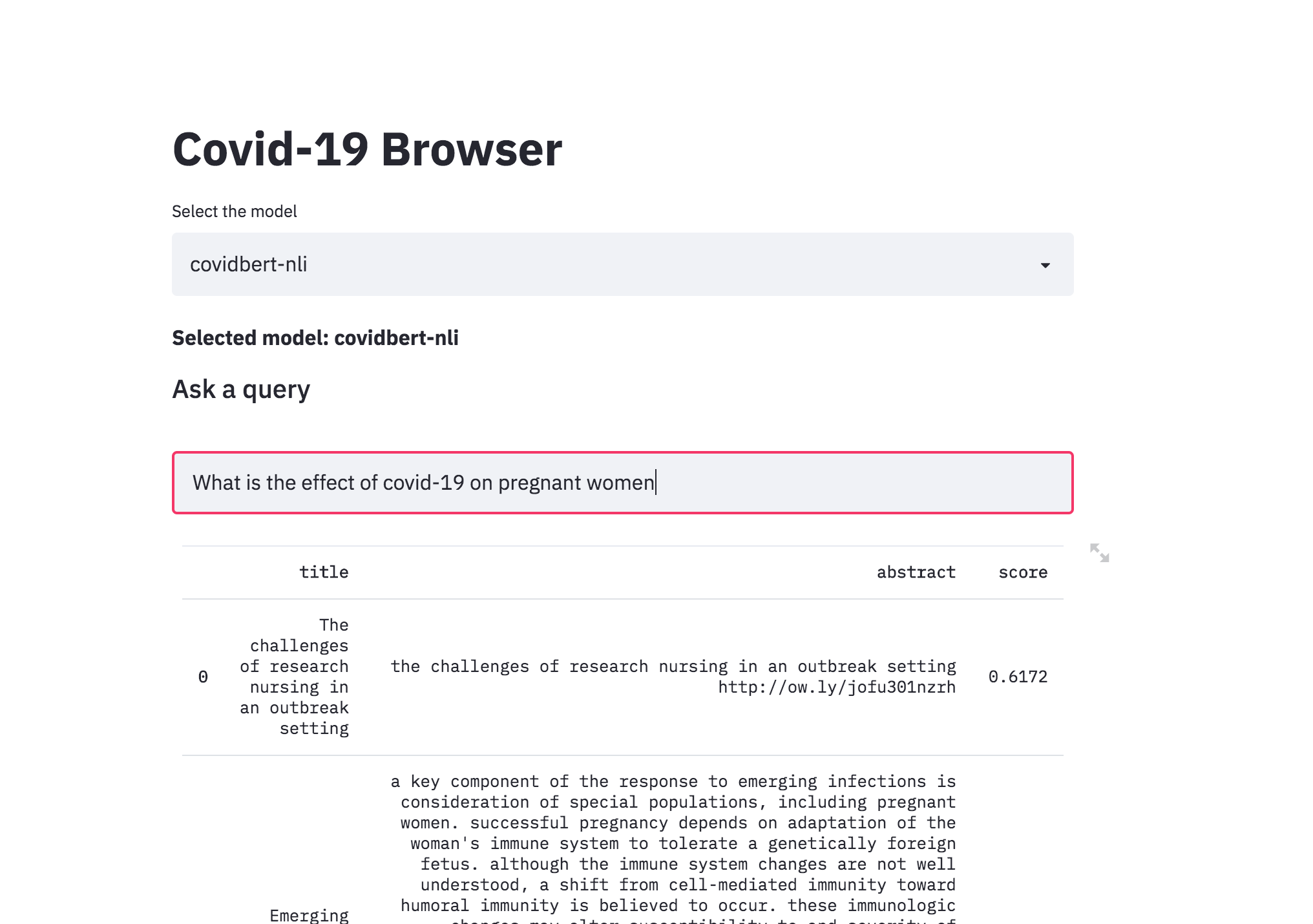

| Covid-19 Browser |

There was a kaggle problem on covid-19 research challenge which has over 1,00,000 + documents. This freely available dataset is provided to the global research community to apply recent advances in natural language processing and other AI techniques to generate new insights in support of the ongoing fight against this infectious disease. There is a growing urgency for these approaches because of the rapid acceleration in new coronavirus literature, making it difficult for the medical research community to keep up.

The procedure I have taken is to convert the abstracts into a embedding representation using sentence-transformers . When a query is asked, it will converted into an embedding and then ranked across the abstracts using cosine similarity.

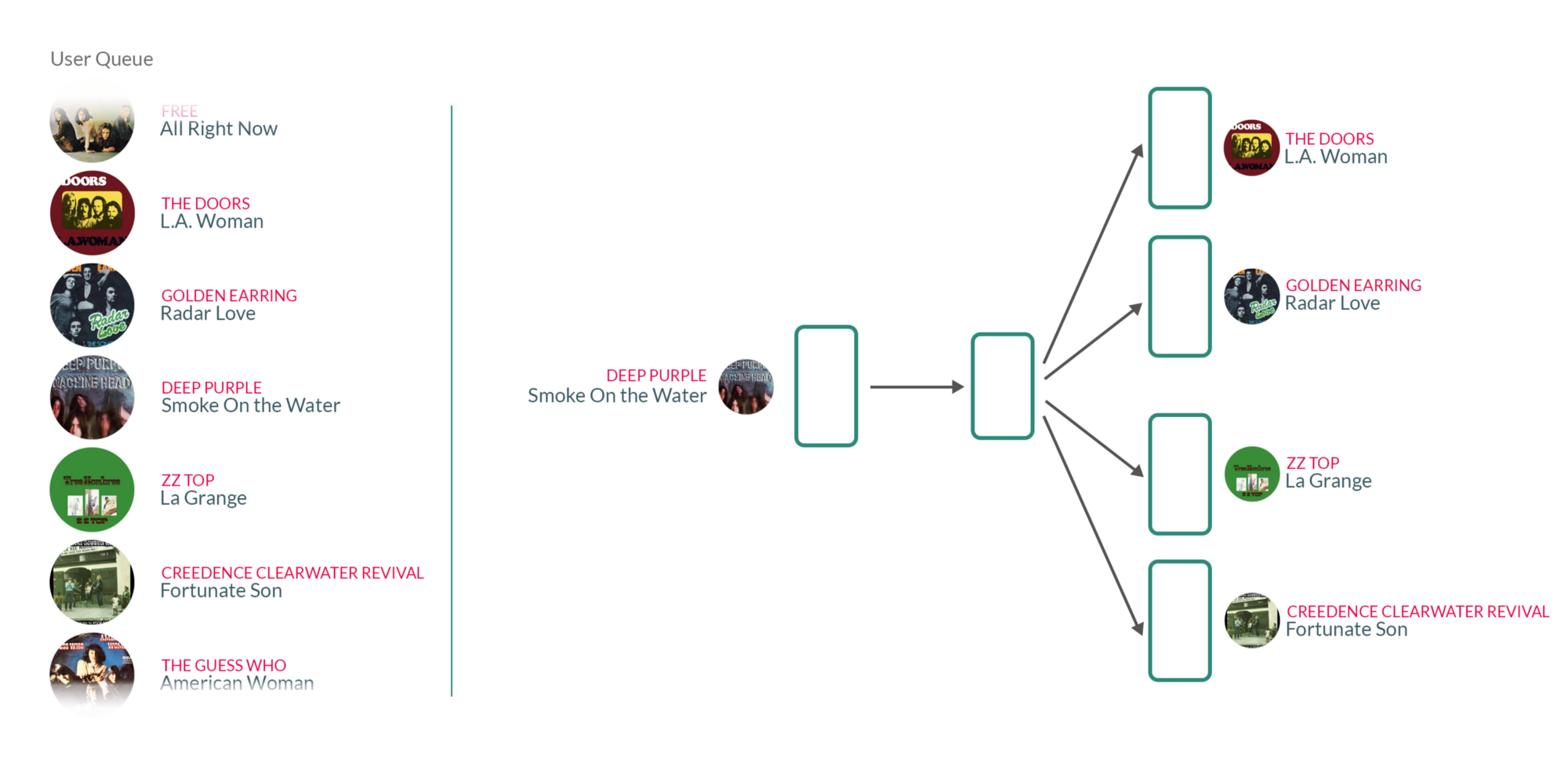

| Song Recommendation |

By taking user's listening queue as a sentence, with each word in that sentence being a song that the user has listened to, training the Word2vec model on those sentences essentially means that for each song the user has listened to in the past, we're using the songs they have listened to before and after to teach our model that those songs somehow belong to the same context.

What's interesting about those vectors is that similar songs will have weights that are closer together than songs that are unrelated.