Recently, the Allen Institute for AI (AI2) released its latest large language model, OLMo 2 32B. The model is the newest addition to the OLMo 2 series, and it challenges closed proprietary models with a "fully open" approach. Openness is its most distinctive feature: AI2 has published the data, code, weights, and details of the training process, in sharp contrast to closed-source models.

AI2 hopes this open, collaborative approach will promote wider research and innovation, allowing researchers around the world to build on OLMo 2 32B. In an era of knowledge sharing, secrecy is not a long-term strategy. The release of OLMo 2 32B marks an important milestone on the path toward open and accessible AI.

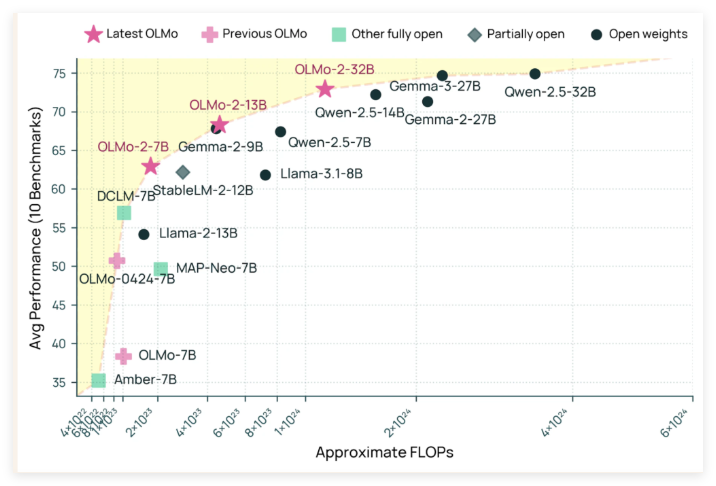

OLMo 2 32B has 32 billion parameters, a significant increase in scale over the previous generation. On a number of widely recognized academic benchmarks, this open model outperforms GPT-3.5 Turbo and GPT-4o mini. That is a strong boost for the open-source AI community, and it shows that well-funded institutions are not the only ones that can build top-tier AI models.

These results owe much to a carefully designed training process, which is divided into two main stages: pre-training and mid-training. During pre-training, the model was trained on a large dataset of about 3.9 trillion tokens drawn from a wide range of sources, including DCLM, Dolma, Starcoder, and Proof Pile II. This is like having the model read widely to learn a broad range of language patterns.

Mid-training focuses on Dolmino, a high-quality dataset of 843 billion tokens covering educational, mathematical, and academic content, which further improves the model's understanding in specific fields. This staged, focused training gives OLMo 2 32B language skills that are both solid and refined.
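To put the two stages in proportion, the token counts quoted above can be compared with a few lines of arithmetic. The sketch below is only illustrative: it assumes the two figures are separate token budgets that simply add up, which the article does not state, and the names `PRETRAIN_TOKENS`, `MIDTRAIN_TOKENS`, and `stage_shares` are made up for this example.

```python
# Token counts as quoted in the article (approximate figures).
PRETRAIN_TOKENS = 3.9e12   # about 3.9 trillion tokens (DCLM, Dolma, Starcoder, Proof Pile II)
MIDTRAIN_TOKENS = 843e9    # 843 billion tokens (Dolmino)


def stage_shares(pretrain: float, midtrain: float) -> dict[str, float]:
    """Return each stage's share of the combined token count.

    Assumption: the two stages are simply summed; the article does not
    say whether any data is repeated or overlaps between stages.
    """
    total = pretrain + midtrain
    return {
        "pretrain": pretrain / total,
        "midtrain": midtrain / total,
    }


shares = stage_shares(PRETRAIN_TOKENS, MIDTRAIN_TOKENS)
ratio = MIDTRAIN_TOKENS / PRETRAIN_TOKENS

print(f"mid-training / pre-training ratio: {ratio:.1%}")
print(f"pre-training share of total: {shares['pretrain']:.1%}")
print(f"mid-training share of total: {shares['midtrain']:.1%}")
```

Under these assumptions, the mid-training data is roughly a fifth the size of the pre-training data, which fits the description of a shorter, more focused second stage.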

Beyond its strong benchmark performance, OLMo 2 32B is also notable for its training efficiency. It reportedly reaches performance comparable to leading open-weight models while using only about one-third of the training compute of models such as Qwen2.5 32B. It is like an efficient craftsman who completes the same or even better work with fewer tools and less time.

The release of OLMo 2 32B is more than a new AI model; it is also a step forward for open and accessible AI. By offering a fully open model that matches or exceeds some proprietary models, AI2 demonstrates that careful model design and efficient training methods can lead to major breakthroughs. This openness will encourage researchers and developers around the world to participate, jointly advance the field of artificial intelligence, and ultimately benefit society as a whole.

OLMo 2 32B is likely to bring fresh air to AI research. It lowers the barrier to research, encourages wider collaboration, and points to a more dynamic and innovative path for AI development. The AI giants that still guard their "exclusive secret recipes" closely should take note: only by embracing openness can they win a broader future.

GitHub: https://github.com/allenai/OLMo-core

Hugging Face: https://huggingface.co/allenai/OLMo-2-0325-32B-Instruct
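
For readers who want to try the released checkpoint, the following is a minimal sketch of loading it with the Hugging Face `transformers` library. It assumes a recent `transformers` release with OLMo 2 support, plus `torch` and `accelerate`, and enough GPU memory for a 32-billion-parameter model. The helper names `build_messages` and `generate_reply` are hypothetical, and the generation settings are arbitrary choices, not AI2's recommendations.

```python
MODEL_ID = "allenai/OLMo-2-0325-32B-Instruct"  # repository name taken from the link above


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Wrap a prompt in the role/content message format used by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt: str, max_new_tokens: int = 128) -> str:
    """Load the model and generate one reply (hypothetical helper).

    The heavy imports live inside the function so that importing this
    file does not download or load the 32B checkpoint.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,  # assumption: bf16 to halve memory versus fp32
        device_map="auto",           # requires the `accelerate` package
    )

    # Format the conversation with the model's chat template and tokenize it.
    inputs = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0, prompt_length:], skip_special_tokens=True)
```

Calling `generate_reply("What does it mean for a model to be fully open?")` would download the weights on first use, so it is best tried on a machine with sufficient disk space and GPU memory.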