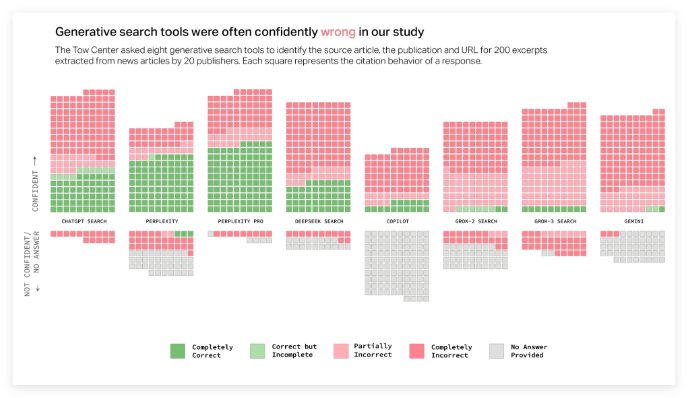

A recent study of AI search engines found serious problems in how they handle news. The Tow Center for Digital Journalism at Columbia Journalism Review (CJR) tested eight AI tools with real-time search capabilities and found that more than 60% of news queries received inaccurate answers. The finding has drawn widespread attention to the reliability of AI technology.

The study found that error rates vary significantly across platforms. Grok 3 had the highest error rate at 94%, while ChatGPT Search got 67% of its answers wrong. Even paid versions, such as Perplexity Pro and Grok 3's premium service, frequently gave confident but incorrect answers. The researchers specifically point out that these AI models share a tendency to be “confidently wrong”: when they lack reliable information, they do not decline to answer but instead fabricate plausible-sounding wrong answers.

The research also reveals citation problems in AI search engines. These tools often direct users to syndicated copies of news content on other platforms rather than to the original publisher’s website. More seriously, some AI tools fabricate URLs that do not work, leaving users unable to reach the source of the information. For example, more than half of Grok 3's cited links in the test were broken, which made the information even harder to verify.

These issues pose a serious challenge to news publishers. If they block AI crawlers, their content may lose attribution entirely; if they allow crawling, their content may be widely reused without sending traffic back to their own websites. Time's chief operating officer, Mark Howard, expressed deep concern about this and highlighted the need for transparency and control.

The researchers noted that about a quarter of Americans now use AI models as a replacement for traditional search engines, which makes such high error rates a serious concern for the reliability of information. The study builds on a similar report released last November, which also pointed to accuracy problems in how ChatGPT handles news content. Although OpenAI and Microsoft acknowledged receiving the research results, they did not directly respond to specific questions, which has raised further public doubts about the transparency of AI technology.

In short, the high error rates, unreliable citations, and poor performance of paid services all show that AI search engines still have significant shortcomings in handling news. As AI plays a growing role in how people get information, fixing these problems has become urgent.