V2A, a video-to-audio technology announced by Google DeepMind, generates synchronized, realistic audio tracks from video pixels and text prompts, making it possible to add soundtracks to silent videos. The system is built on generative audio models (DeepMind experimented with both autoregressive and diffusion approaches) and is trained with AI-generated annotations that help it learn how specific audio events relate to visual scenes, enabling more accurate and creative audio generation. Users can steer the output with "positive prompts" and "negative prompts" to produce tracks that fit the video content and the intended effect. Beyond dramatic scores and realistic sound effects, V2A can also generate dialogue that matches the characters and tone of a video, making it a powerful aid for video production.

Google DeepMind has announced V2A, a video-to-audio technology. V2A uses video pixels and text prompts to generate rich audio tracks, creating soundtracks for silent videos and enabling synchronized audio-visual generation.

Product page: https://top.aibase.com/tool/deepmind-v2a

Users can guide the audio output with text descriptions, supplied as "positive prompts" or "negative prompts", for fine-grained control over the generated track. DeepMind experimented with both autoregressive and diffusion approaches to audio generation and reports that the diffusion-based approach produced the most realistic, best-synchronized results. During training, the system uses AI-generated annotations to help the model understand how specific audio events relate to the visual scene.
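
DeepMind has not published exactly how V2A combines positive and negative prompts. One common technique in text-conditioned diffusion models is classifier-free guidance, in which the negative prompt takes the place of the unconditional branch: the model's noise estimate is pushed toward the positive-prompt prediction and away from the negative-prompt one. The sketch below illustrates that general technique only; `denoise`, `toy_denoise`, and `guidance_scale` are hypothetical names, and the toy model stands in for a trained network.

```python
from typing import Callable, List

Vector = List[float]


def guided_noise_estimate(
    denoise: Callable[[Vector, str], Vector],
    noisy_audio: Vector,
    positive_prompt: str,
    negative_prompt: str,
    guidance_scale: float = 3.0,
) -> Vector:
    """Classifier-free guidance with a negative prompt (illustrative only).

    `denoise` is a hypothetical model call that predicts the noise in
    `noisy_audio` for a given prompt. The result is
    neg + scale * (pos - neg), which moves the estimate toward the
    positive prompt and away from the negative one.
    """
    eps_pos = denoise(noisy_audio, positive_prompt)
    eps_neg = denoise(noisy_audio, negative_prompt)
    return [n + guidance_scale * (p - n) for p, n in zip(eps_pos, eps_neg)]


def toy_denoise(x: Vector, prompt: str) -> Vector:
    """Toy stand-in for a trained model: halves the input and adds 1.0
    when the prompt mentions 'howl'."""
    offset = 1.0 if "howl" in prompt else 0.0
    return [v * 0.5 + offset for v in x]


if __name__ == "__main__":
    result = guided_noise_estimate(toy_denoise, [0.0, 2.0],
                                   "wolf howling at the moon", "wind noise",
                                   guidance_scale=3.0)
    print(result)  # prints [3.0, 4.0]
```

A larger `guidance_scale` follows the positive prompt more strongly; a scale of 1.0 reduces to the plain positive-prompt prediction, and 0.0 to the negative-prompt prediction.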

How it works:

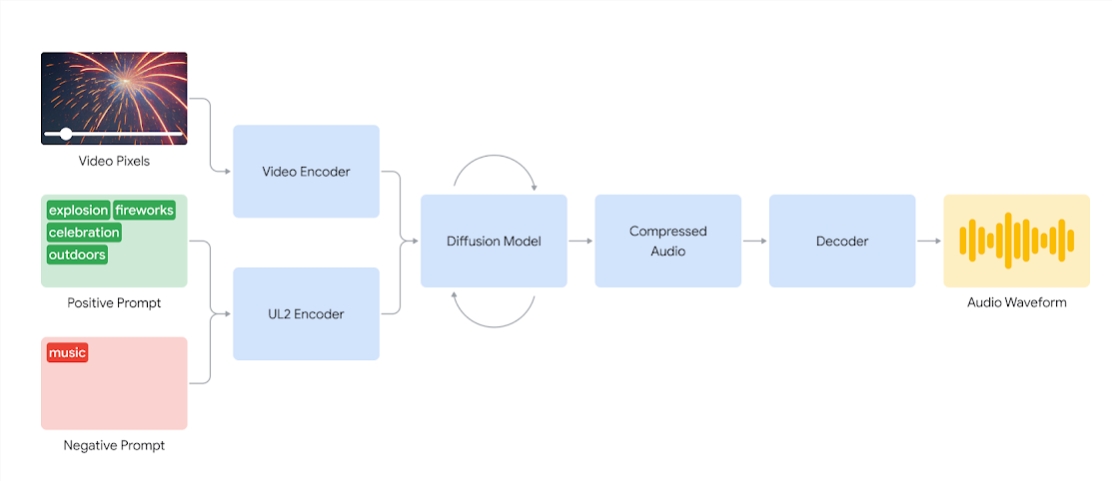

V2A first encodes the video input into a compressed representation. A diffusion model then iteratively refines the audio, starting from random noise. This process is guided by the visual input and the natural-language prompts, producing synchronized, realistic audio that closely follows the prompts. Finally, the audio output is decoded into an audio waveform and combined with the video data.

Diagram of the V2A system, which takes video pixels and an audio prompt as input and generates an audio waveform synchronized with the underlying video. V2A first encodes the video and audio prompt inputs and runs them iteratively through a diffusion model. The resulting compressed audio is then decoded into an audio waveform.
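
The four stages described above (encode the video, denoise from random noise under guidance, decode to a waveform) can be sketched as a toy Python loop. This is not V2A's architecture: every function here (`encode_video`, `encode_prompt`, `predict_clean`, `generate_audio`) is a hypothetical stand-in, the "encoders" and "denoiser" are hand-written rules rather than learned networks, and the final step of combining the waveform with the video is not modeled.

```python
import random
from typing import List

Frame = List[float]  # hypothetical: one video frame as flattened pixel values in [0, 1]


def encode_video(frames: List[Frame]) -> List[float]:
    """Hypothetical video encoder: compress each frame to its mean brightness."""
    return [sum(frame) / len(frame) for frame in frames]


def encode_prompt(prompt: str) -> float:
    """Hypothetical text encoder: map the prompt to a scalar in [0, 1]."""
    return min(1.0, len(prompt.split()) / 10)


def predict_clean(noisy: List[float], video_latent: List[float],
                  prompt_code: float) -> List[float]:
    """Hypothetical denoiser: predict the clean audio latent from the conditioning.

    A real model is a trained network that also uses the noisy latent and the
    step index; this toy ignores `noisy` and scales the video latent by the prompt code.
    """
    return [v * prompt_code for v in video_latent]


def generate_audio(frames: List[Frame], prompt: str, steps: int = 50,
                   samples_per_frame: int = 4, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    video_latent = encode_video(frames)          # 1. compress the video
    prompt_code = encode_prompt(prompt)
    # 2. start from Gaussian noise, one latent value per video frame
    latent = [rng.gauss(0.0, 1.0) for _ in video_latent]
    # 3. iteratively refine the noise, guided by the video and the prompt
    for t in range(steps, 0, -1):
        target = predict_clean(latent, video_latent, prompt_code)
        alpha = 1.0 / t  # small in early steps, reaches 1.0 on the final step
        latent = [(1 - alpha) * x + alpha * y for x, y in zip(latent, target)]
    # 4. hypothetical decoder: repeat each latent value to form waveform samples
    return [x for x in latent for _ in range(samples_per_frame)]


if __name__ == "__main__":
    frames = [[0.0, 1.0], [1.0, 1.0]]
    print(generate_audio(frames, "wolf howling at the moon", samples_per_frame=2))
    # prints [0.25, 0.25, 0.5, 0.5]
```

Each iteration moves the noisy latent a fraction `1/t` of the way toward the denoiser's prediction, so early steps change it only slightly and the last step lands on the prediction. Real samplers instead rely on learned networks and carefully designed noise schedules.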

To generate higher-quality audio and make it easier to guide the model toward specific sounds, the team added more information to the training process, including AI-generated annotations with detailed descriptions of the sounds and transcripts of spoken dialogue.

By training on video, audio, and the attached annotations, the technology learns to associate specific audio events with various visual scenes while responding to the information provided in the annotations or transcripts.
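
As a rough illustration of what "video, audio, and attached annotations" might look like as data, here is a hypothetical training-sample record that merges an AI-generated sound description and an optional dialogue transcript into a single text conditioning string. The class name, field names, and the `" | "` joining format are assumptions for illustration; DeepMind has not published V2A's data format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrainingExample:
    """Hypothetical record for one training clip; all field names are illustrative."""
    video_path: str
    audio_path: str
    sound_annotation: str             # AI-generated description of the sounds
    transcript: Optional[str] = None  # transcript of spoken dialogue, if any

    def conditioning_text(self) -> str:
        """Merge the annotation and the optional transcript into one text input."""
        parts = [f"Sounds: {self.sound_annotation}"]
        if self.transcript:
            parts.append(f"Dialogue: {self.transcript}")
        return " | ".join(parts)


if __name__ == "__main__":
    clip = TrainingExample("clip_001.mp4", "clip_001.wav",
                           "footsteps on concrete, distant thunder",
                           transcript="Who's there?")
    print(clip.conditioning_text())
    # prints Sounds: footsteps on concrete, distant thunder | Dialogue: Who's there?
```

Keeping the transcript optional reflects that many clips contain no speech, in which case only the sound description conditions the model.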

V2A features:

Audio generation: V2A automatically generates synchronized audio tracks from video footage and user-provided text descriptions, such as dramatic scores, realistic sound effects, or dialogue that matches the characters and tone of the video.

Synchronized audio: V2A uses a diffusion-based generator, chosen after experiments with autoregressive approaches, to produce realistic audio that is closely synchronized with the video content.

Diverse audio tracks: Users can generate an unlimited number of soundtracks for any video, experiment with different combinations of sound effects, and pick the one that best suits the content.

Prompt control: Users can define a "positive prompt" to steer generation toward desired sounds or a "negative prompt" to steer it away from unwanted ones, giving them more control over the output.

Training with annotations: During training, the system uses AI-generated annotations to help the model learn the associations between specific audio events and visual scenes.

To improve audio quality further, the research team added more information to the training process, such as AI-generated annotations describing the sounds and transcripts of spoken dialogue. This richer training signal helps the technology better understand video content and produce audio that matches the visual scene.

Some challenges remain, however. The team is still working to improve lip sync for videos that involve speech. V2A tries to generate speech from an input transcript and synchronize it with the characters' lip movements, but the paired video-generation model may not be conditioned on that transcript. The result is a mismatch: the video model does not generate mouth movements that match the transcript, which often leads to uncanny lip sync.

Before V2A is made available to the public, it will undergo rigorous safety assessments and testing. Below are some soundtracks generated by V2A:

1. Audio prompt: Wolf howling at the moon

2. Audio prompt: Cinematic, thriller, horror film, music, tension, ambience, footsteps on concrete

3. Audio prompt: Drummer on a concert stage surrounded by flashing lights and a cheering crowd

4. Audio prompt: Cute baby dinosaur chirps, jungle ambience, egg cracking

Note: All videos in this article are official Google examples.

All in all, Google DeepMind's V2A opens up new possibilities for video content creation. Its audio generation capabilities and simple prompt-based control could make video production considerably more efficient. Challenges such as lip sync remain, but the future development of V2A is well worth watching.