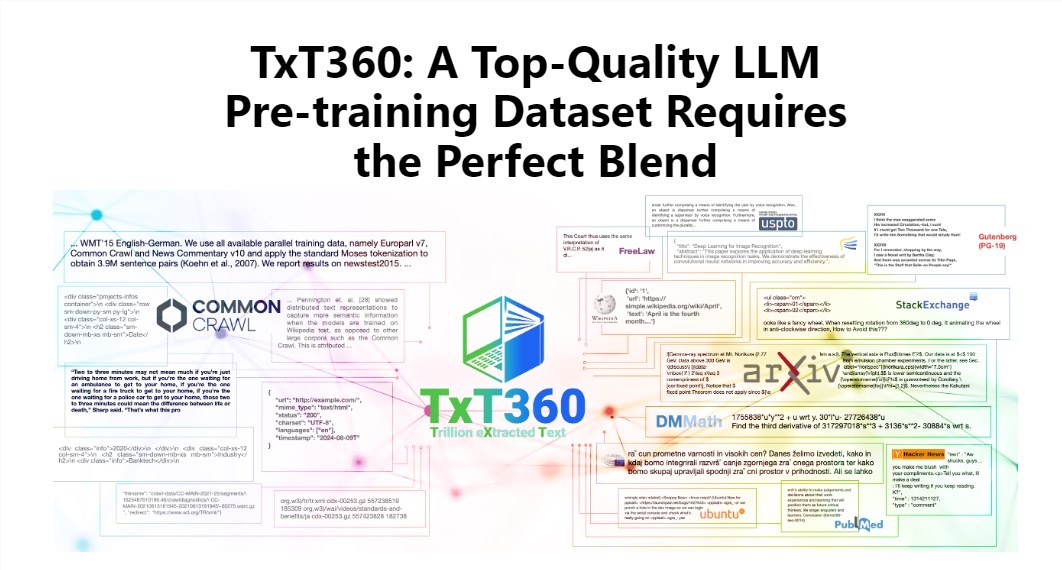

The Downcodes editor introduces TxT360, the latest dataset released by LLM360: 5.7 trillion high-quality tokens built specifically for training large language models. Beyond its sheer size, the dataset stands out for its quality, its carefully chosen sources, and the fine-grained control it gives users over how the data is mixed.

TxT360's appeal lies in combining very large scale with high quality, and LLM360 reports that it outperforms existing datasets such as FineWeb and RedPajama. Its web content is drawn from 99 Common Crawl snapshots, and it adds 14 specially selected high-quality sources, such as legal documents and encyclopedias, which make the corpus both diverse and reliable.

Even better, TxT360 comes with a "data weighting recipe" that lets you adjust the weight of each data source to suit your needs, much as a cook adjusts the proportions of ingredients to taste so that every bite turns out right.
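To make the recipe idea concrete, here is a minimal Python sketch, assuming a recipe is simply a per-source weight that multiplies each source's token count. The source names, token counts, and weights are hypothetical illustrations, not TxT360's actual recipe or statistics, and `mixture_shares` and `sample_sources` are made-up helper names.

```python
import random

# Hypothetical token counts per source (arbitrary units); NOT TxT360's real numbers.
SOURCE_TOKENS = {"web": 800, "legal": 50, "wiki": 50}

# Hypothetical recipe: a weight of 2.0 means the source counts twice as much.
RECIPE = {"web": 1.0, "legal": 2.0, "wiki": 2.0}


def mixture_shares(source_tokens, recipe):
    """Return each source's share of the training mix after weighting.

    Assumption for this sketch: effective size = token count * weight,
    with a default weight of 1.0 for sources missing from the recipe.
    """
    weighted = {s: n * recipe.get(s, 1.0) for s, n in source_tokens.items()}
    total = sum(weighted.values())
    return {s: w / total for s, w in weighted.items()}


def sample_sources(source_tokens, recipe, k, seed=0):
    """Draw k source names in proportion to their weighted shares."""
    shares = mixture_shares(source_tokens, recipe)
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    names = list(shares)
    return rng.choices(names, weights=[shares[s] for s in names], k=k)


if __name__ == "__main__":
    print(mixture_shares(SOURCE_TOKENS, RECIPE))
    print(sample_sources(SOURCE_TOKENS, RECIPE, k=10))
```

With these numbers, doubling the weights of `legal` and `wiki` moves each of them from about 5.6% of the mix (50 of 900 units) to 10% (100 of 1,000 units), without touching the underlying data.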

Deduplication is another highlight of TxT360. Thorough deduplication removes repeated documents and redundant information, so the model does not keep training on the same content over and over. The project team also used regular expressions to strip personally identifiable information (PII), such as email addresses and IP addresses, from the documents to protect privacy.
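As an illustration of these two steps, here is a minimal Python sketch. It shows only exact-match deduplication (hashing case- and whitespace-normalized text) and two simplified regular expressions for email and IPv4 addresses; TxT360's actual deduplication is more involved, and its PII rules may differ. The function names and the `<EMAIL>`/`<IP>` placeholder tokens are hypothetical.

```python
import hashlib
import re

# Simplified patterns for illustration; real PII filters need more care
# (e.g. this IPv4 pattern also matches out-of-range values like 999.1.1.1).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def normalize(text):
    """Lower-case and collapse whitespace so trivial variants hash the same."""
    return " ".join(text.lower().split())


def exact_dedup(docs):
    """Keep the first occurrence of each document, comparing normalized hashes."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def redact_pii(text):
    """Replace email addresses and IPv4 addresses with placeholder tokens."""
    text = EMAIL_RE.sub("<EMAIL>", text)
    return IPV4_RE.sub("<IP>", text)


if __name__ == "__main__":
    docs = [
        "Contact alice@example.com",
        "contact   ALICE@example.com",  # duplicate after normalization
        "Server at 192.168.0.1",
    ]
    for doc in exact_dedup(docs):
        print(redact_pii(doc))
```

Running it keeps two of the three documents and prints `Contact <EMAIL>` and `Server at <IP>`. Catching documents that differ by more than case and whitespace would require near-duplicate detection, for example MinHash with locality-sensitive hashing.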

TxT360 was designed with quality in mind as well as scale. By combining web data with curated sources, it lets researchers control precisely which data is used and in what proportions, like a remote control for dialing each source up or down.

TxT360 also holds its own in training. A simple upsampling strategy (repeating selected portions of the data) expands the 5.7 trillion tokens into a training corpus of more than 15 trillion tokens. On a range of key evaluation metrics, TxT360 outperforms FineWeb, particularly on benchmarks such as MMLU and NQ (Natural Questions). When it is combined with code data such as The Stack v2, the learning curves are more stable and model performance improves markedly.
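The upsampling arithmetic can be sketched in Python as follows, assuming upsampling simply means repeating every document from a source a fixed integer number of times. The sources, document sizes, and repeat counts are hypothetical, and `upsample`, `total_tokens`, and `expansion_factor` are illustrative helpers, not LLM360's code. Going from 5.7 trillion to more than 15 trillion tokens implies that, on average, each token is repeated more than 15 / 5.7 ≈ 2.6 times.

```python
# Hypothetical documents as (source, token_count) pairs; NOT TxT360 data.
DOCS = [("web", 100), ("web", 200), ("legal", 50), ("wiki", 50)]

# Hypothetical repeat counts; sources not listed are kept once.
REPEATS = {"legal": 3, "wiki": 4}


def upsample(docs, repeats):
    """Return a new list in which each document appears repeats[source] times."""
    out = []
    for source, n_tokens in docs:
        out.extend([(source, n_tokens)] * repeats.get(source, 1))
    return out


def total_tokens(docs):
    """Sum the token counts of a list of (source, token_count) pairs."""
    return sum(n_tokens for _, n_tokens in docs)


def expansion_factor(original, upsampled):
    """How many times larger the upsampled corpus is, measured in tokens."""
    return total_tokens(upsampled) / total_tokens(original)


if __name__ == "__main__":
    upsampled = upsample(DOCS, REPEATS)
    print(len(upsampled), total_tokens(upsampled))  # 9 documents, 650 tokens
    print(expansion_factor(DOCS, upsampled))         # 1.625
```

Repetition raises the token count without adding new information, so the repeat counts effectively decide which sources the model sees more often.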

Detailed introduction: https://huggingface.co/spaces/LLM360/TxT360

All in all, TxT360 opens up new possibilities for training large language models. Its scale, data quality, and flexible source weighting should help push large language model technology further. For more information, follow the link above for the detailed introduction!