The editor of Downcodes has learned that the Zhiyuan Research Institute recently released Infinity-Instruct, an instruction fine-tuning dataset with tens of millions of instructions, aimed at significantly improving the performance of language models, especially dialogue models. The dataset has two parts: the Infinity-Instruct-7M foundational instruction dataset and the Infinity-Instruct-Gen dialogue instruction dataset. The former contains more than 7.44 million samples spanning multiple domains and is used to strengthen the model's foundational capabilities. The latter contains 1.49 million complex instructions designed to make the model more robust in real dialogue scenarios. According to test results, models fine-tuned on Infinity-Instruct perform strongly on several mainstream benchmarks, in some cases surpassing the official chat models.

The Zhiyuan Research Institute has launched Infinity-Instruct, an instruction fine-tuning dataset on the scale of tens of millions of instructions, to improve the performance of language models in dialogue and other tasks. Infinity-Instruct recently completed a new round of iteration, which includes the Infinity-Instruct-7M foundational instruction dataset and the Infinity-Instruct-Gen dialogue instruction dataset.

The Infinity-Instruct-7M foundational instruction dataset contains more than 7.44 million samples covering areas such as mathematics, code, and general-knowledge Q&A, and is intended to strengthen the foundational capabilities of pre-trained models. Test results show that Llama3.1-70B and Mistral-7B-v0.1 fine-tuned on this dataset come close to the officially released chat models in overall capability. The fine-tuned Mistral-7B even surpasses GPT-3.5, while the fine-tuned Llama3.1-70B approaches GPT-4.

The Infinity-Instruct-Gen dialogue instruction dataset contains 1.49 million synthesized complex instructions intended to make models more robust in real dialogue scenarios. After additional fine-tuning on this dataset, the models can outperform the official chat models.

The Zhiyuan Research Institute evaluated Infinity-Instruct on mainstream benchmarks such as MT-Bench, AlpacaEval 2.0, and Arena-Hard. The results showed that models fine-tuned on Infinity-Instruct outperform the official models in conversational ability.

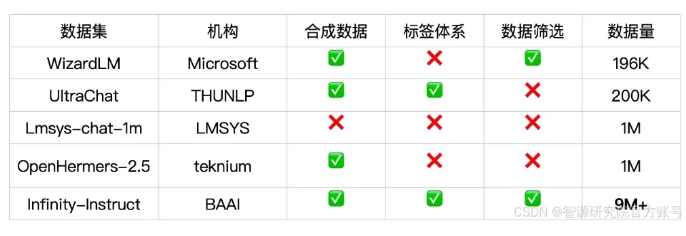

Infinity-Instruct annotates each instruction in detail, with labels such as language, capability type, task type, and data source, so users can easily filter out the data subsets they need. Through data selection and instruction synthesis, the Zhiyuan Research Institute has built a high-quality dataset intended to narrow the gap between open-source chat models and GPT-4.
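To illustrate how per-instruction annotations make subset selection straightforward, here is a minimal Python sketch. The `InstructionRecord` class, its field names (`language`, `ability`, `task_type`, `source`), the label values, and the `filter_subset` helper are all hypothetical and do not reflect the dataset's actual schema; the dataset page should be consulted for the real column names.

```python
from dataclasses import dataclass


@dataclass
class InstructionRecord:
    # Hypothetical schema for illustration only; not the dataset's real columns.
    instruction: str
    language: str   # e.g. "en", "zh"
    ability: str    # capability type, e.g. "math", "code"
    task_type: str  # e.g. "qa", "generation"
    source: str     # where the sample came from (placeholder values below)


def filter_subset(records, **criteria):
    """Keep records whose annotation fields equal every key=value criterion."""
    return [
        r for r in records
        if all(getattr(r, field) == value for field, value in criteria.items())
    ]


records = [
    InstructionRecord("Solve 2x + 3 = 7.", "en", "math", "qa", "source-a"),
    InstructionRecord("写一个快速排序函数。", "zh", "code", "generation", "source-b"),
    InstructionRecord("Reverse a linked list in Python.", "en", "code", "generation", "source-b"),
]

# Select English coding instructions only.
english_code = filter_subset(records, language="en", ability="code")
for r in english_code:
    print(r.instruction)
```

Because every criterion is an exact match on a label, combining filters (language plus capability, say) simply narrows the subset further.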

The project also uses the FlagScale training framework to reduce fine-tuning costs, and removes duplicate samples using MinHash deduplication and BGE-based retrieval. Zhiyuan plans to open-source the complete code for its data-processing and model-training pipeline, and to explore extending the Infinity-Instruct data strategy to the alignment and pre-training stages, so as to support the data needs of language models across their full life cycle.
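The MinHash step can be sketched as follows. This is a minimal, self-contained illustration of MinHash near-duplicate detection, not Zhiyuan's actual pipeline: the word 3-gram shingles, the 128 seeded BLAKE2b hash functions, the 0.8 similarity threshold, and the helper names are all assumptions, and the BGE embedding-retrieval step is not shown.

```python
import hashlib
import re

NUM_PERM = 128   # assumed number of hash functions per signature
THRESHOLD = 0.8  # assumed estimated-Jaccard cutoff for "duplicate"


def shingles(text, k=3):
    """Word k-grams of a lowercased text with punctuation stripped."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash_signature(text, num_perm=NUM_PERM):
    """For each seeded hash function, keep the minimum hash over all shingles."""
    sh = shingles(text)
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(8, "little")  # a distinct salt gives a distinct hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "big",
            )
            for s in sh
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching positions estimates the Jaccard similarity of the shingle sets."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(texts, threshold=THRESHOLD):
    """Greedily keep a text unless it is a near-duplicate of one already kept."""
    kept, kept_sigs = [], []
    for text in texts:
        sig = minhash_signature(text)
        if all(estimated_jaccard(sig, s) < threshold for s in kept_sigs):
            kept.append(text)
            kept_sigs.append(sig)
    return kept


docs = [
    "Write a Python function that returns the sum of a list of integers.",
    "Write a Python function that returns the sum of a list of integers!",
    "Explain the difference between TCP and UDP in simple terms.",
]
print(deduplicate(docs))
```

The greedy loop compares each new sample against every kept one, which is quadratic; at the scale of millions of samples, MinHash signatures are normally bucketed with locality-sensitive hashing (LSH) so that only candidate pairs are compared.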

Dataset link:

https://modelscope.cn/datasets/BAAI/Infinity-Instruct

The release of the Infinity-Instruct dataset offers a new way to improve language model performance and contributes to the development of large-model technology. The editor of Downcodes hopes that Infinity-Instruct will continue to improve and support more researchers and developers.