The first part of this resource collection summarizes resources for text generation with the GPT-2 language model, including papers, code, demos, and hands-on tutorials. The second part shows applications of GPT-2 to text generation tasks such as machine translation, automatic summarization, transfer learning, and music generation. Finally, we compare 15 important Transformer-based language models released between 2018 and 2019.

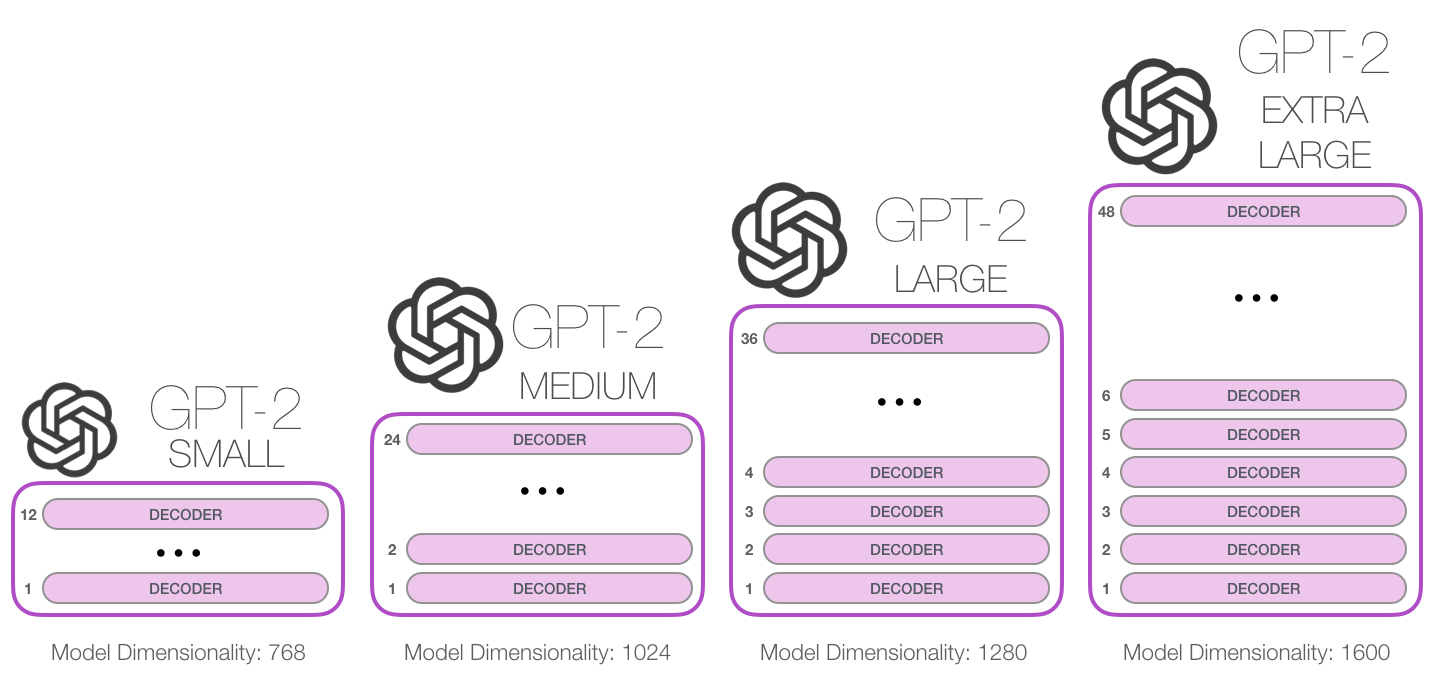

GPT-2 is a large Transformer-based language model released by OpenAI in February 2019. It has 1.5 billion parameters and was trained on a dataset of 8 million web pages. OpenAI describes it as a direct scale-up of GPT, with more than 10 times the parameters and trained on more than 10 times the amount of data. It can generate coherent paragraphs of text and achieves state-of-the-art (SOTA) performance on many language modeling benchmarks. Moreover, without any task-specific training, it can perform rudimentary reading comprehension, machine translation, question answering, and summarization.
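To see where a figure like 1.5 billion comes from, here is a minimal sketch that counts the parameters of a GPT-2-style decoder. It assumes the layout of the publicly released checkpoints (learned position embeddings, two LayerNorms per block, a 4x-wide MLP, and an output layer tied to the token embedding) and, for the largest model, 48 layers with a hidden size of 1,600, a 50,257-token vocabulary, and a 1,024-token context; these hyperparameters are assumptions not stated in the text above, and `gpt2_param_count` is a hypothetical helper.

```python
def gpt2_param_count(n_layer: int, d_model: int, vocab_size: int = 50257, n_ctx: int = 1024) -> int:
    """Count the parameters of a GPT-2-style decoder.

    Assumes learned position embeddings, two LayerNorms per block, a 4x-wide MLP,
    and an output layer that reuses (ties) the token-embedding matrix.
    """
    d = d_model
    attention = (d * 3 * d + 3 * d) + (d * d + d)    # fused Q/K/V projection + output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)      # expand to 4d, then project back to d
    layer_norms = 2 * (2 * d)                        # two LayerNorms, each with a scale and a shift
    per_block = attention + mlp + layer_norms        # equals 12*d^2 + 13*d
    embeddings = vocab_size * d + n_ctx * d          # token + position embeddings
    final_norm = 2 * d                               # LayerNorm after the last block
    return n_layer * per_block + embeddings + final_norm


# Assumed largest GPT-2 configuration: 48 layers, hidden size 1600.
print(f"{gpt2_param_count(48, 1600):,}")  # 1,557,611,200
```

Under the same assumptions, the smallest configuration (12 layers, hidden size 768) comes out at 124,439,808 parameters; almost all of the growth comes from the 12·d² term in each block.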

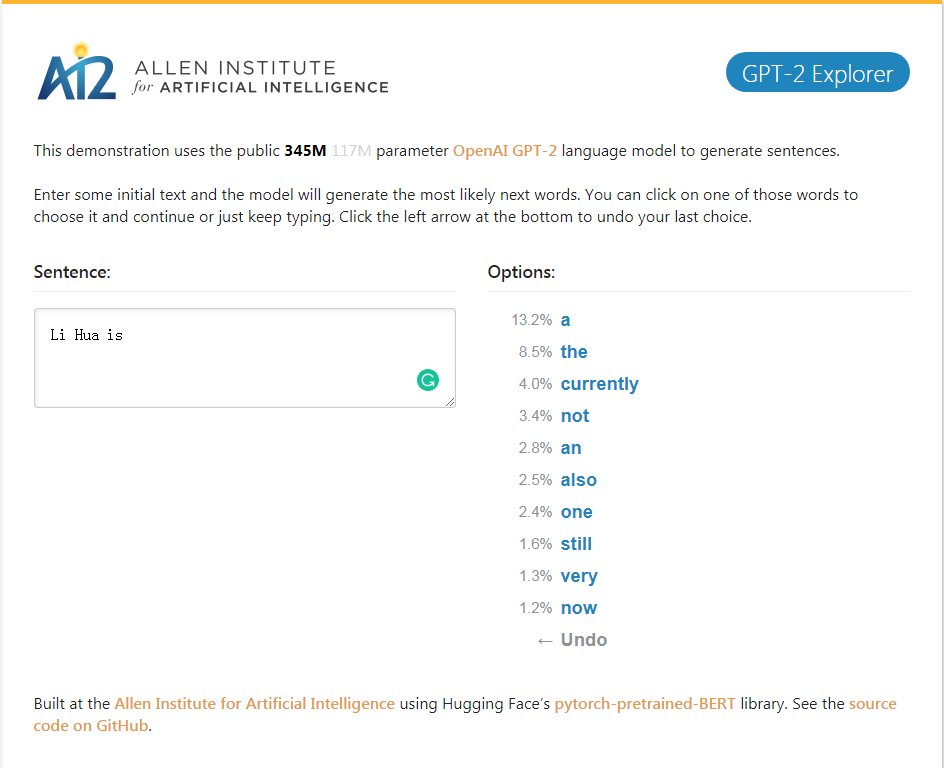

GPT-2_Explorer Demo: given the text entered so far, it lists the ten most likely next words together with their probabilities. You can pick one of the words to see the list of likely words that follow it, and repeat the process until you have written a complete article.
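The ranked list shown by the demo is essentially a softmax over the model's next-token scores followed by a top-k cut. A minimal sketch, assuming the raw scores (logits) are already available; GPT-2 actually scores BPE tokens rather than whole words, and the toy vocabulary, scores, and the `top_k_next_words` helper below are made up for illustration.

```python
import math


def top_k_next_words(logits: dict[str, float], k: int = 10) -> list[tuple[str, float]]:
    """Turn raw next-word scores into probabilities and return the k most likely words."""
    # Softmax, subtracting the max score first for numerical stability.
    max_logit = max(logits.values())
    exps = {w: math.exp(s - max_logit) for w, s in logits.items()}
    total = sum(exps.values())
    probs = {w: e / total for w, e in exps.items()}
    # Rank by probability, highest first, and keep at most k entries.
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]


# Hypothetical scores a model might assign after the prompt "The cat sat on the".
toy_logits = {"mat": 4.0, "floor": 3.0, "sofa": 2.5, "roof": 1.0, "moon": -1.0}
for word, p in top_k_next_words(toy_logits, k=3):
    print(f"{word:6s} {p:.3f}")
```

Picking a word in the demo corresponds to appending it to the prompt and running the model again to get a fresh set of scores.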

Click to try the GPT-2 Explorer demo.

GPT-2-simple: a Python package for easily retraining OpenAI's GPT-2 text generation model on new text.

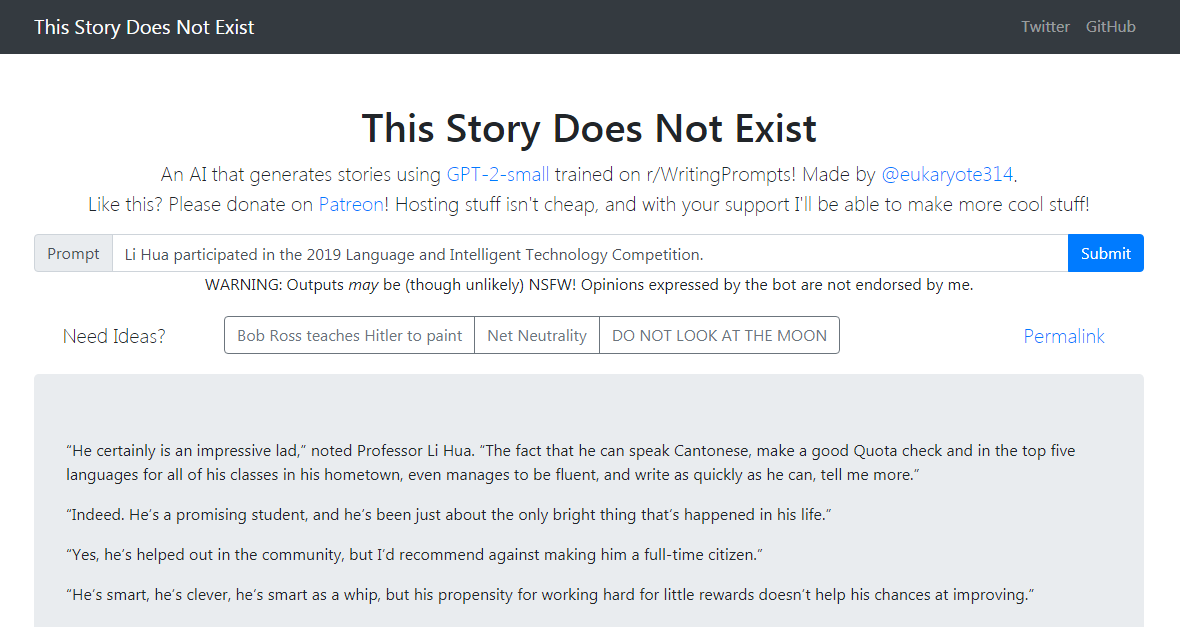

GPT-2-simple demo: writes a continuation of a story based on the text you enter.
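Writing a continuation is autoregressive generation: the model picks a next word, appends it to the text, and repeats. A minimal sketch of that loop, assuming a made-up bigram table as a stand-in for GPT-2 (which conditions on the whole context, not just the previous word) and top-k sampling as the decoding strategy; the text does not say which strategy the demo uses, and `BIGRAMS`, `sample_top_k`, and `continue_text` are hypothetical names.

```python
import math
import random

# Hypothetical bigram "model": next-word scores conditioned only on the previous word.
BIGRAMS = {
    "once": {"upon": 5.0, "more": 1.0},
    "upon": {"a": 5.0},
    "a": {"time": 4.0, "dragon": 2.0, "castle": 1.0},
    "time": {"a": 2.0, "there": 3.0},
    "there": {"lived": 4.0, "was": 3.0},
    "lived": {"a": 4.0},
    "was": {"a": 4.0},
    "dragon": {"lived": 2.0, "slept": 3.0},
    "castle": {"stood": 3.0},
}


def sample_top_k(scores: dict[str, float], k: int, rng: random.Random) -> str:
    """Keep the k highest-scoring words and sample one with probability proportional to exp(score)."""
    top = sorted(scores.items(), key=lambda item: item[1], reverse=True)[:k]
    weights = [math.exp(s) for _, s in top]
    return rng.choices([w for w, _ in top], weights=weights, k=1)[0]


def continue_text(prompt: str, max_new_words: int = 8, k: int = 2, seed: int = 0) -> str:
    """Extend the prompt one word at a time, feeding each choice back in as context."""
    rng = random.Random(seed)
    words = prompt.lower().split()
    for _ in range(max_new_words):
        scores = BIGRAMS.get(words[-1])
        if not scores:  # the toy model has no continuation for this word
            break
        words.append(sample_top_k(scores, k, rng))
    return " ".join(words)


print(continue_text("Once upon"))
```

With `k=1` the loop reduces to greedy decoding; larger `k` trades predictability for variety.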

Click to try the GPT-2-simple demo.

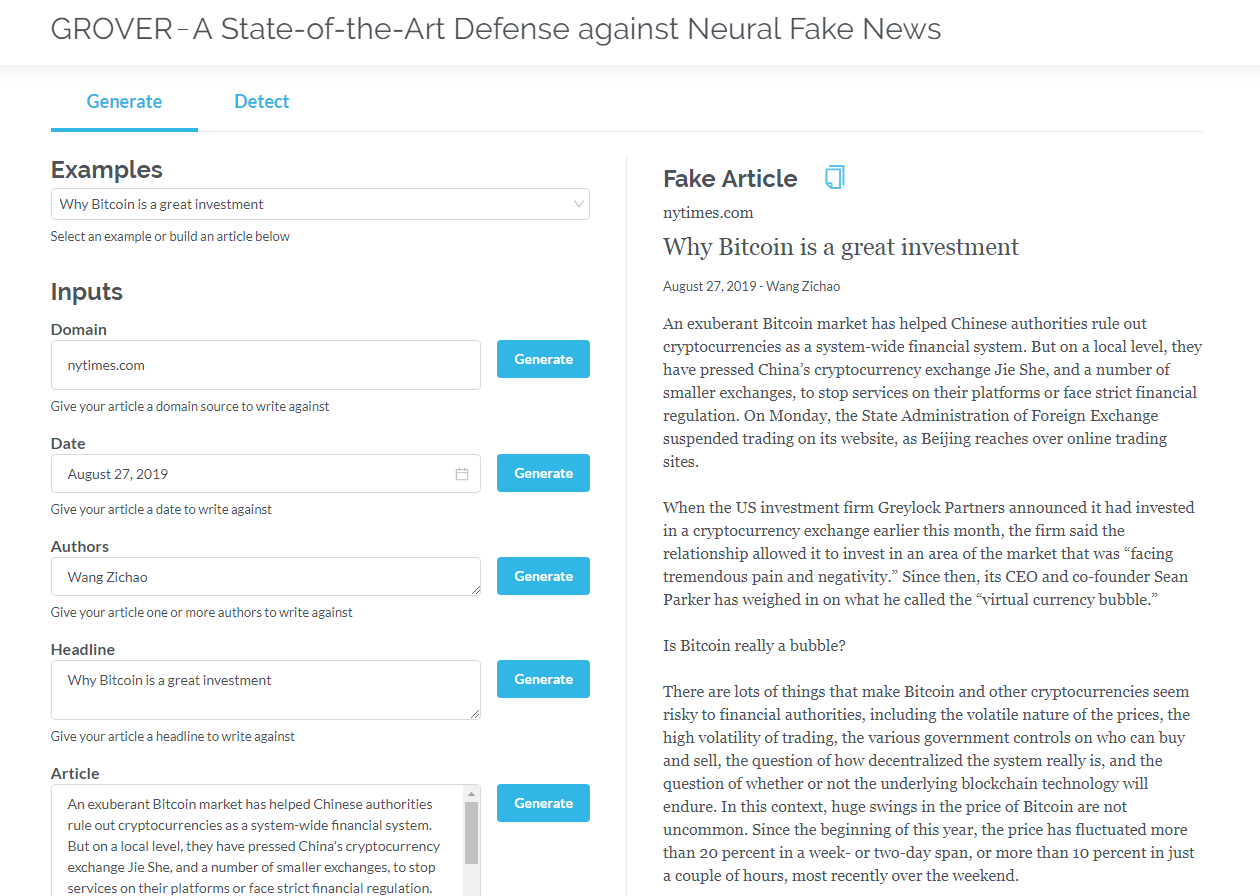

Grover is a model for neural fake news, covering both generation and detection.

Grover generates articles from metadata such as the title and author, and it can also detect whether a piece of text was generated by a machine.

Click to try Grover.

Decoder-only Transformers such as GPT-2 keep showing promise beyond language modeling, and such models have been applied successfully to machine translation, automatic summarization, transfer learning, and music generation. Let's review some of these applications.
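Before looking at the applications, it helps to see what "decoder-only" means in practice: self-attention is masked so that each position can attend only to itself and earlier positions, which is what lets one network be trained as a language model and then reused for other tasks. A minimal sketch of that causal mask on a single attention head, assuming the raw attention scores (query-key dot products) have already been computed; real implementations do this on tensors, and `causal_attention_weights` is a hypothetical helper.

```python
import math


def causal_attention_weights(scores: list[list[float]]) -> list[list[float]]:
    """Apply a causal (look-ahead) mask to raw attention scores and softmax each row.

    scores[i][j] is how strongly position i attends to position j; in a
    decoder-only transformer, position i may only look at positions j <= i.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        # Hide future positions (j > i) by setting their score to -inf before the softmax.
        row = [scores[i][j] if j <= i else -math.inf for j in range(n)]
        m = max(row)  # position 0 is always visible, so m is finite
        exps = [math.exp(x - m) for x in row]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


w = causal_attention_weights([[1.0, 2.0, 3.0]] * 3)
for row in w:
    print([round(x, 3) for x in row])
```

Every row sums to 1, and all weights above the diagonal are exactly zero, so no position can "peek" at words that come after it.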

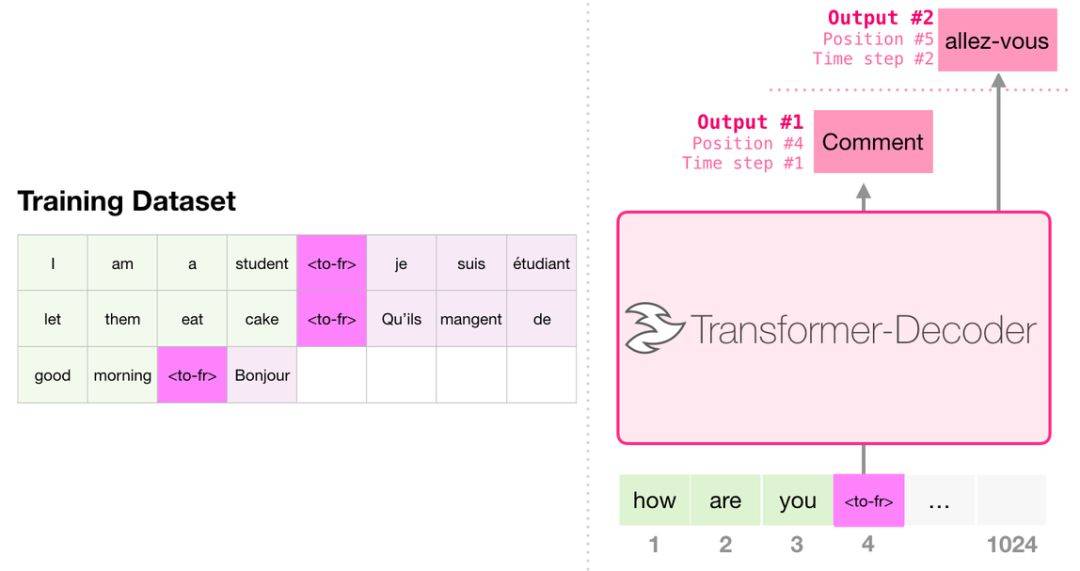

An encoder is not needed for translation. The same task can be solved by a decoder-only transformer:

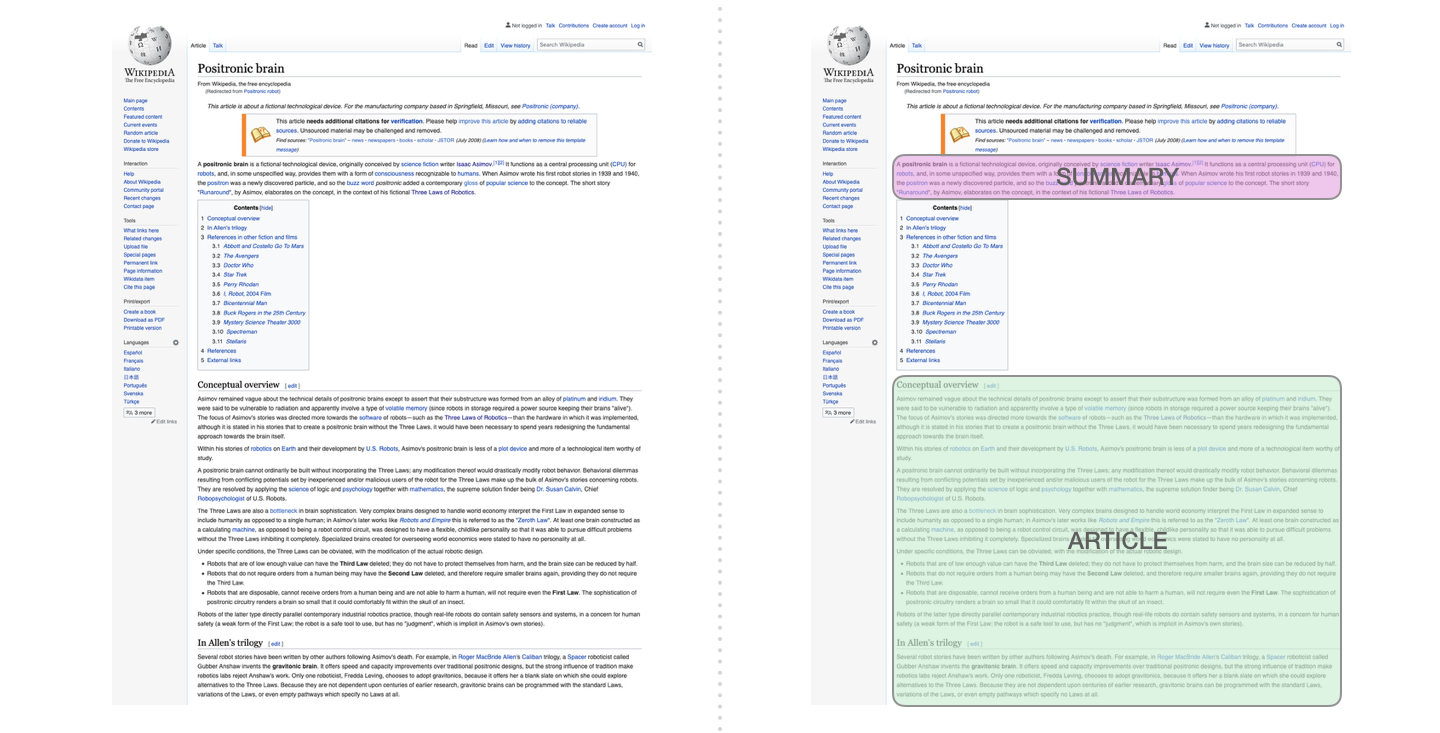
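Without an encoder, the source and target sentences are concatenated into a single sequence, and the model learns to continue the source with its translation. A minimal sketch of that data format, assuming whitespace tokenization, a hypothetical separator token `<to-fr>` marking where the French translation starts, and a hypothetical `<end>` token; the text does not specify the exact format, and the helper names are illustrative.

```python
# Hypothetical special tokens; the exact format is an assumption.
TRANSLATE_SEP = "<to-fr>"
END = "<end>"


def make_translation_example(source: str, target: str) -> list[str]:
    """Pack a sentence pair into one sequence that a decoder-only model is trained on."""
    return source.split() + [TRANSLATE_SEP] + target.split() + [END]


def make_translation_prompt(source: str) -> list[str]:
    """At inference time, feed the source plus separator; the model continues with the translation."""
    return source.split() + [TRANSLATE_SEP]


print(make_translation_example("let us learn", "apprenons"))
print(make_translation_prompt("let us learn"))
```

Because of the causal mask, the prediction made right after `<to-fr>` can see the whole English sentence but none of the French words that follow it.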

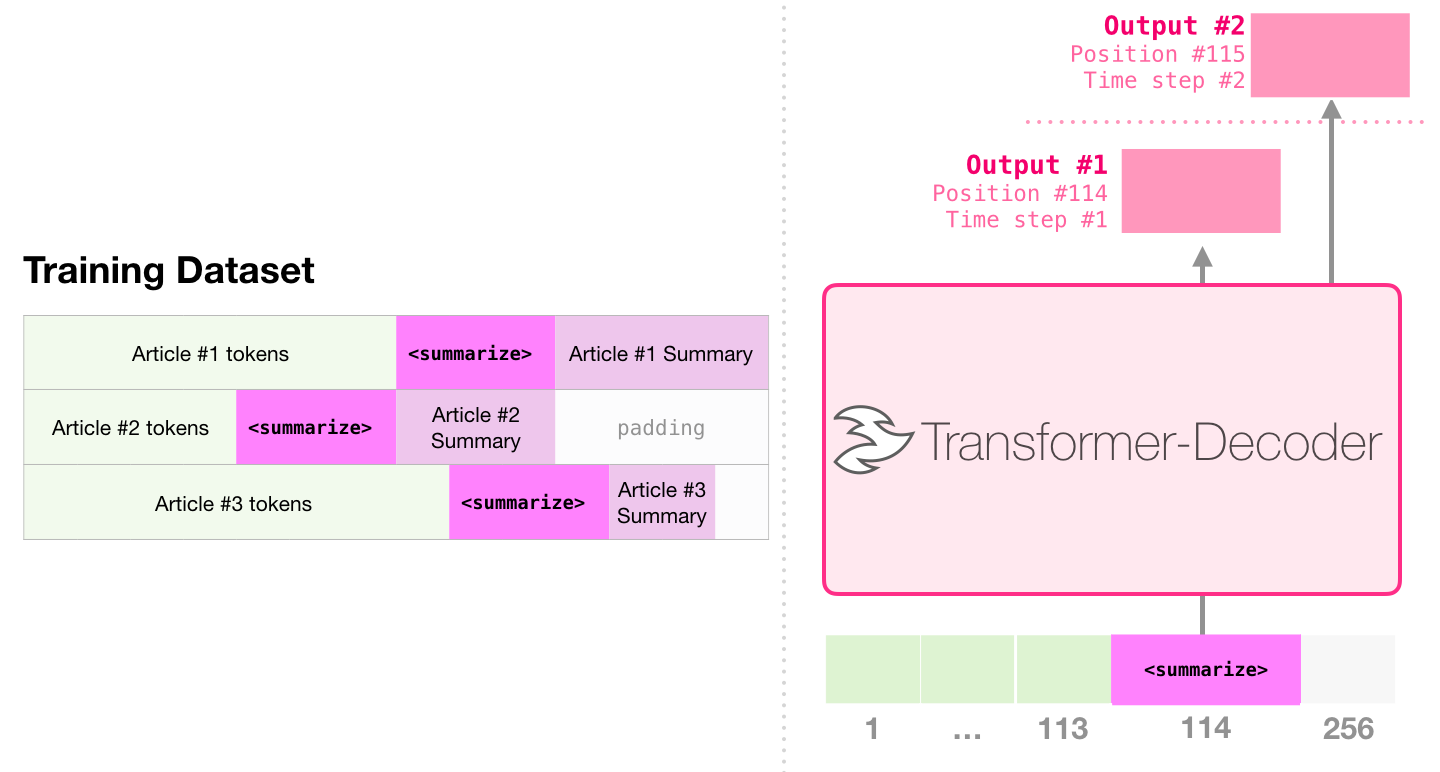

Summarization was the task on which the first decoder-only transformer was trained. Specifically, the model was trained to read a Wikipedia article (without the opening section that precedes the table of contents) and generate a summary; the actual opening section of each article served as the label in the training dataset.
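A minimal sketch of how one article/label pair might become a training example for next-token prediction, assuming integer token ids, a hypothetical separator id, and that only the summary tokens are scored. Masking the article out of the loss is a common choice, but the text does not say the paper did it. The `-100` "ignore" value follows the default `ignore_index` of PyTorch's cross-entropy loss; the labels here are already shifted by one position, and `make_summary_example` is a hypothetical helper.

```python
IGNORE = -100  # label meaning "no loss at this position" (PyTorch's default ignore_index)


def make_summary_example(article_ids: list[int], summary_ids: list[int], sep_id: int) -> tuple[list[int], list[int]]:
    """Build (input_ids, labels) where labels[t] is the token the model should predict at position t."""
    input_ids = article_ids + [sep_id] + summary_ids
    # Next-token prediction: the label at position t is the token at t + 1.
    labels = input_ids[1:] + [IGNORE]
    # Positions whose next token still belongs to the article or the separator get no loss,
    # so the model is only trained to produce the summary.
    n_context = len(article_ids) + 1  # article plus separator
    for t in range(n_context - 1):
        labels[t] = IGNORE
    return input_ids, labels


inputs, labels = make_summary_example([11, 12, 13], [21, 22], sep_id=99)
print(inputs)
print(labels)
```

The separator position is the first one with a real label: from there on, each prediction is a summary token.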

The paper trained the model on Wikipedia articles, so the trained model is able to summarize articles.

In the paper Sample Efficient Text Summarization Using a Single Pre-Trained Transformer, a decoder-only transformer is first pre-trained on language modeling and then fine-tuned for summarization. With limited data, this approach achieves better results than a pre-trained encoder-decoder transformer. The GPT-2 paper also shows summarization results obtained after pre-training the model on language modeling.
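In the GPT-2 paper, summarization is induced without fine-tuning: the text `TL;DR:` is appended to the article, about 100 tokens are sampled with top-k sampling (k = 2), and the first three generated sentences are kept as the summary. A minimal sketch of the prompt and post-processing steps, with the model call itself left out; the newline before `TL;DR:` and the naive regex sentence splitter are assumptions, and both helper names are hypothetical.

```python
import re


def make_tldr_prompt(article: str) -> str:
    """Zero-shot summarization prompt: the article followed by 'TL;DR:'."""
    return article.rstrip() + "\nTL;DR:"


def first_sentences(generated: str, n: int = 3) -> str:
    """Keep only the first n complete sentences of the model's continuation (naive split on . ! ?)."""
    sentences = re.findall(r"[^.!?]+[.!?]", generated)
    return " ".join(s.strip() for s in sentences[:n])


print(make_tldr_prompt("The council approved the new park on Monday.  "))
print(first_sentences("A park was approved. Work starts soon! Costs are unclear? More later. And"))
```

An unfinished trailing fragment (such as the final "And" above) is dropped, since it never reaches a sentence-ending punctuation mark.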

Music Transformer uses a decoder-only transformer to generate music with expressive timing and dynamics. As with language modeling, "music modeling" means letting the model learn music in an unsupervised way and then having it output samples on its own (unconditional generation).
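Music modeling works because a performance can be written as a sequence of tokens, after which a decoder-only model is trained on it exactly like text. A minimal sketch of one such encoding, assuming a performance-style event vocabulary (NOTE_ON, NOTE_OFF, TIME_SHIFT, SET_VELOCITY) in the spirit of the encoding used for Music Transformer's piano experiments; the 10 ms step, the 1-second maximum shift, and the unquantized velocities are illustrative assumptions, and `Note` and `notes_to_events` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Note:
    pitch: int     # MIDI pitch, 0-127
    start: float   # onset time in seconds
    end: float     # release time in seconds
    velocity: int  # MIDI velocity, 1-127 (left unquantized in this sketch)


TIME_STEP = 0.01       # assumed time resolution: 10 ms per step
MAX_SHIFT_STEPS = 100  # assumed longest single TIME_SHIFT event: 1 second


def notes_to_events(notes: list[Note]) -> list[str]:
    """Flatten notes into a token sequence that a language model can learn like text."""
    # Each note contributes an "on" and an "off" moment, measured in integer time steps.
    moments = []
    for n in notes:
        moments.append((round(n.start / TIME_STEP), 1, n.pitch, n.velocity))  # 1 = note on
        moments.append((round(n.end / TIME_STEP), 0, n.pitch, n.velocity))    # 0 = note off
    moments.sort(key=lambda m: (m[0], m[1]))  # at equal times, note-offs come before note-ons

    events: list[str] = []
    now = 0
    current_velocity = None
    for step, is_on, pitch, velocity in moments:
        # Advance the clock, splitting long gaps into several TIME_SHIFT events.
        gap = step - now
        while gap > 0:
            shift = min(gap, MAX_SHIFT_STEPS)
            events.append(f"TIME_SHIFT_{shift}")
            gap -= shift
        now = step
        if is_on:
            # Emit a velocity change only when it differs from the last one.
            if velocity != current_velocity:
                events.append(f"SET_VELOCITY_{velocity}")
                current_velocity = velocity
            events.append(f"NOTE_ON_{pitch}")
        else:
            events.append(f"NOTE_OFF_{pitch}")
    return events


print(notes_to_events([Note(60, 0.0, 0.5, 80), Note(64, 0.5, 1.5, 80)]))
```

Generating music then amounts to sampling event tokens one at a time, as with words, and decoding them back into notes.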

GPT-2 is just one of many Transformer-based models. For a comparison of 15 important Transformer-based models from 2018 to 2019, see "Post-BERT era: Comparative analysis of 15 pre-trained models and key points exploration".