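PyTorchTS is a PyTorch probabilistic time series forecasting framework that provides state-of-the-art PyTorch time series models, using GluonTS as its back-end API for loading, transforming, and back-testing time series datasets.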

$ pip3 install pytorchts

Here we highlight the API changes by way of the GluonTS README example.

import matplotlib.pyplot as plt
import pandas as pd
import torch

from gluonts.dataset.common import ListDataset
from gluonts.dataset.util import to_pandas

from pts.model.deepar import DeepAREstimator

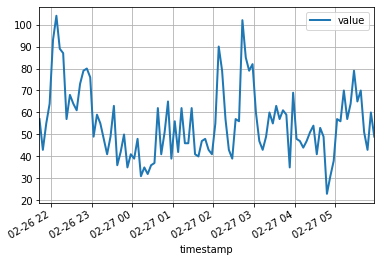

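from pts import Trainer

This simple example illustrates how to train a model on some data and then use it to make predictions. As a first step, we need to collect some data: in this example we will use the volume of tweets mentioning the AMZN ticker symbol.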

url = "https://raw.githubusercontent.com/numenta/NAB/master/data/realTweets/Twitter_volume_AMZN.csv"

df = pd.read_csv(url, header=0, index_col=0, parse_dates=True)

The first 100 data points look as follows:

df[:100].plot(linewidth=2)
plt.grid(which='both')
plt.show()

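We can now prepare a training dataset for our model to train on. Datasets are in essence collections of dictionaries: each dictionary represents a time series with possibly associated features. For this example, we only have one entry, specified by the "start" field, which is the timestamp of the first data point, and the "target" field containing the time series data. For training, we will use data up to midnight on April 5th, 2015.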
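To make the dictionary structure concrete, here is a minimal library-free sketch. The timestamps and counts are hypothetical toy values, not the AMZN data, and a plain Python list stands in for the dataset container; it only illustrates the "start"/"target" layout and the cutoff described above.

```python
from datetime import datetime, timedelta

# Hypothetical toy series: 5-minute counts starting at an arbitrary timestamp.
start = datetime(2015, 4, 4, 23, 40)
step = timedelta(minutes=5)
values = [57, 43, 55, 64, 93, 58, 61, 70]
timestamps = [start + i * step for i in range(len(values))]

# Keep only observations up to and including the training cutoff.
cutoff = datetime(2015, 4, 5, 0, 0)
train_values = [v for t, v in zip(timestamps, values) if t <= cutoff]

# One entry: the timestamp of the first data point plus the observed values.
entry = {"start": timestamps[0], "target": train_values}

# A dataset is a collection of such dictionaries (here: a single series).
dataset = [entry]

print(entry["start"], len(entry["target"]))
```

The `ListDataset` call that follows builds the same kind of entry from the tweet-volume data.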

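training_data = ListDataset(
    [{"start": df.index[0], "target": df.value[:"2015-04-05 00:00:00"]}],
    freq="5min"
)

A forecasting model is a predictor object. One way of obtaining predictors is by training a corresponding estimator. Instantiating an estimator requires specifying the frequency of the time series that it will handle, as well as the number of time steps to predict. In our example we are using 5-minute data, so freq="5min", and we will train a model to predict the next hour, so prediction_length=12. The input to the model will be a vector of size input_size=19 at each time point. We also specify some minimal training options, in particular training on the selected device for epochs=10.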
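The value prediction_length=12 is simply the forecast horizon divided by the sampling interval (60 minutes / 5 minutes). The sketch below spells out that arithmetic with the standard library; the helper name `steps_for_horizon` is hypothetical and not part of PyTorchTS or GluonTS.

```python
from datetime import timedelta


def steps_for_horizon(horizon: timedelta, interval: timedelta) -> int:
    """Return how many whole sampling intervals fit in the forecast horizon."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    steps, remainder = divmod(horizon, interval)
    if remainder:
        raise ValueError("horizon is not a whole multiple of the interval")
    return steps


# One hour ahead at 5-minute resolution.
prediction_length = steps_for_horizon(timedelta(hours=1), timedelta(minutes=5))
print(prediction_length)  # 12
```

The estimator definition that follows uses this value directly.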

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

estimator = DeepAREstimator(freq="5min",
                            prediction_length=12,
                            input_size=19,
                            trainer=Trainer(epochs=10,
                                            device=device))

predictor = estimator.train(training_data=training_data, num_workers=4)

45it [00:01, 37.60it/s, avg_epoch_loss=4.64, epoch=0]

48it [00:01, 39.56it/s, avg_epoch_loss=4.2, epoch=1]

45it [00:01, 38.11it/s, avg_epoch_loss=4.1, epoch=2]

43it [00:01, 36.29it/s, avg_epoch_loss=4.05, epoch=3]

44it [00:01, 35.98it/s, avg_epoch_loss=4.03, epoch=4]

48it [00:01, 39.48it/s, avg_epoch_loss=4.01, epoch=5]

48it [00:01, 38.65it/s, avg_epoch_loss=4, epoch=6]

46it [00:01, 37.12it/s, avg_epoch_loss=3.99, epoch=7]

48it [00:01, 38.86it/s, avg_epoch_loss=3.98, epoch=8]

48it [00:01, 39.49it/s, avg_epoch_loss=3.97, epoch=9]

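During training, useful information about the progress is displayed. For a full overview of the available options, please refer to the source code of DeepAREstimator (or other estimators) and Trainer.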
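If you want to check programmatically that the loss is decreasing, the progress lines can be parsed. The sketch below assumes the line format is exactly as shown in the output above (it may differ between versions); the regular expression and the `parse_progress` helper are illustrative, not part of the library.

```python
import re

# Matches the "avg_epoch_loss=..., epoch=..." part of a progress line.
PROGRESS = re.compile(r"avg_epoch_loss=(?P<loss>[\d.]+), epoch=(?P<epoch>\d+)")


def parse_progress(line):
    """Return (epoch, loss) for a progress line, or None if it does not match."""
    match = PROGRESS.search(line)
    if match is None:
        return None
    return int(match.group("epoch")), float(match.group("loss"))


# A few lines copied from the training output shown above.
log = [
    "45it [00:01, 37.60it/s, avg_epoch_loss=4.64, epoch=0]",
    "48it [00:01, 39.56it/s, avg_epoch_loss=4.2, epoch=1]",
    "48it [00:01, 38.65it/s, avg_epoch_loss=4, epoch=6]",
    "48it [00:01, 39.49it/s, avg_epoch_loss=3.97, epoch=9]",
]

# Map epoch number to average loss, skipping lines that do not match.
losses = dict(pair for pair in map(parse_progress, log) if pair is not None)

# True when the loss never increases from one parsed epoch to the next.
ordered = [losses[epoch] for epoch in sorted(losses)]
is_decreasing = all(a >= b for a, b in zip(ordered, ordered[1:]))
print(losses, is_decreasing)
```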

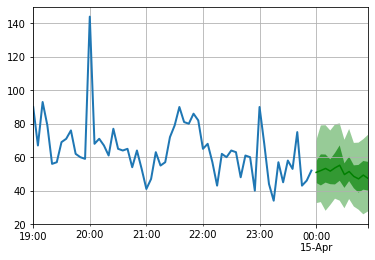

We are now ready to make predictions: we will forecast the hour following midnight on April 15th, 2015.

test_data = ListDataset(
    [{"start": df.index[0], "target": df.value[:"2015-04-15 00:00:00"]}],
    freq="5min"
)

for test_entry, forecast in zip(test_data, predictor.predict(test_data)):
    to_pandas(test_entry)[-60:].plot(linewidth=2)
    forecast.plot(color='g', prediction_intervals=[50.0, 90.0])
plt.grid(which='both')

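Note that the forecast is displayed in terms of a probability distribution: the shaded areas represent the 50% and 90% prediction intervals, respectively, centered around the median (dark green line).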
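A central prediction interval with a given coverage is bounded by two symmetric quantiles of the predictive distribution: the 50% interval runs from the 0.25 to the 0.75 quantile, and the 90% interval from the 0.05 to the 0.95 quantile. The sketch below illustrates this with plain Python on hypothetical sample values for a single time step. It is not the library's plotting code, and the helper names `quantile` and `central_interval` are assumptions made for illustration.

```python
def quantile(sorted_samples, q):
    """Linearly interpolated quantile of already-sorted samples, 0 <= q <= 1."""
    position = q * (len(sorted_samples) - 1)
    lower = int(position)
    upper = min(lower + 1, len(sorted_samples) - 1)
    weight = position - lower
    return sorted_samples[lower] * (1 - weight) + sorted_samples[upper] * weight


def central_interval(samples, coverage_percent):
    """Bounds of the central interval holding coverage_percent of the samples."""
    tail = (100 - coverage_percent) / 200  # probability mass left in each tail
    ordered = sorted(samples)
    return quantile(ordered, tail), quantile(ordered, 1 - tail)


# Hypothetical samples for one time step: the integers 0..100.
samples = list(range(101))

median = quantile(sorted(samples), 0.5)
interval_50 = central_interval(samples, 50.0)
interval_90 = central_interval(samples, 90.0)
print(median, interval_50, interval_90)
```

The 90% interval always contains the 50% interval, which is why the plot shows a darker band nested inside a lighter one.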

pip install -e .

pytest test

To cite this repository:

@software{pytorchgithub,

author = {Kashif Rasul},

title = {{P}yTorch{TS}},

url = {https://github.com/zalandoresearch/pytorch-ts},

version = {0.6.x},

year = {2021},

}

We have implemented the following models using this framework:

@INPROCEEDINGS{rasul2020tempflow,

author = {Kashif Rasul and Abdul-Saboor Sheikh and Ingmar Schuster and Urs Bergmann and Roland Vollgraf},

title = {{M}ultivariate {P}robabilistic {T}ime {S}eries {F}orecasting via {C}onditioned {N}ormalizing {F}lows},

year = {2021},

url = {https://openreview.net/forum?id=WiGQBFuVRv},

booktitle = {International Conference on Learning Representations 2021},

}

@InProceedings{pmlr-v139-rasul21a,

title = {{A}utoregressive {D}enoising {D}iffusion {M}odels for {M}ultivariate {P}robabilistic {T}ime {S}eries {F}orecasting},

author = {Rasul, Kashif and Seward, Calvin and Schuster, Ingmar and Vollgraf, Roland},

booktitle = {Proceedings of the 38th International Conference on Machine Learning},

pages = {8857--8868},

year = {2021},

editor = {Meila, Marina and Zhang, Tong},

volume = {139},

series = {Proceedings of Machine Learning Research},

month = {18--24 Jul},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v139/rasul21a/rasul21a.pdf},

url = {http://proceedings.mlr.press/v139/rasul21a.html},

}

@misc{gouttes2021probabilistic,

title={{P}robabilistic {T}ime {S}eries {F}orecasting with {I}mplicit {Q}uantile {N}etworks},

author={Adèle Gouttes and Kashif Rasul and Mateusz Koren and Johannes Stephan and Tofigh Naghibi},

year={2021},

eprint={2107.03743},

archivePrefix={arXiv},

primaryClass={cs.LG}

}