pytorch mixtures

1.0.0

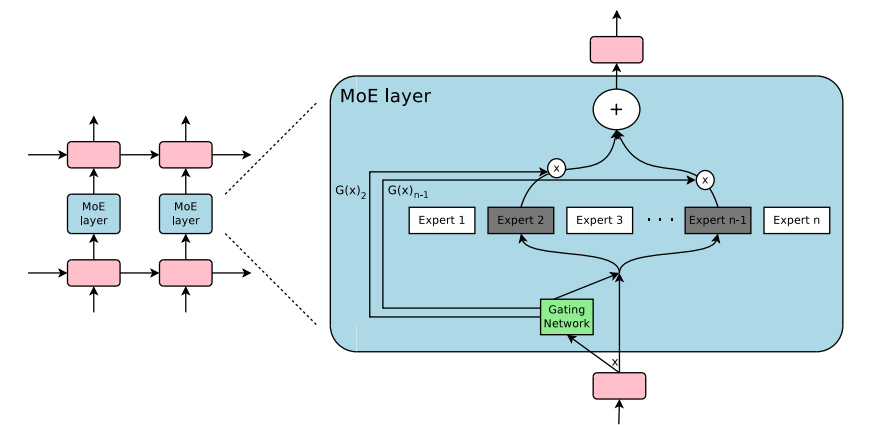

插件模块,用于pytorch中的专家混合物和混合物。您毫不费力地将MOE/MOD图层插入自定义神经网络的一站式解决方案!

- -

- -

资料来源:

只需使用pip3 install pytorch-mixtures即可安装此软件包。请注意,这要求将torch和einops预先安装为依赖项。如果您想从源构建此软件包,请运行以下命令:

git clone https://github.com/jaisidhsingh/pytorch-mixtures.git

cd pytorch-mixtures

pip3 install . pytorch-mixtures旨在为您选择的任何神经网络毫不费力地集成到您现有的代码中,例如

from pytorch_mixtures . routing import ExpertChoiceRouter

from pytorch_mixtures . moe_layer import MoELayer

import torch

import torch . nn as nn

# define some config

BATCH_SIZE = 16

SEQ_LEN = 128

DIM = 768

NUM_EXPERTS = 8

CAPACITY_FACTOR = 1.25

# first initialize the router

router = ExpertChoiceRouter ( dim = DIM , num_experts = NUM_EXPERTS )

# choose the experts you want: pytorch-mixtures just needs a list of `nn.Module` experts

# for e.g. our experts are just linear layers

experts = [ nn . Linear ( DIM , DIM ) for _ in range ( NUM_EXPERTS )]

# supply the router and experts to the MoELayer for modularity

moe = MoELayer (

num_experts = NUM_EXPERTS ,

router = router ,

experts = experts ,

capacity_factor = CAPACITY_FACTOR

)

# initialize some test input

x = torch . randn ( BATCH_SIZE , SEQ_LEN , DIM )

# pass through moe

moe_output , aux_loss , router_z_loss = moe ( x ) # shape: [BATCH_SIZE, SEQ_LEN, DIM]您也可以在自己的nn.Module类中轻松地使用它

from pytorch_mixtures . routing import ExpertChoiceRouter

from pytorch_mixtures . moe import MoELayer

from pytorch_mixtures . utils import MHSA # multi-head self-attention layer provided for ease

import torch

import torch . nn as nn

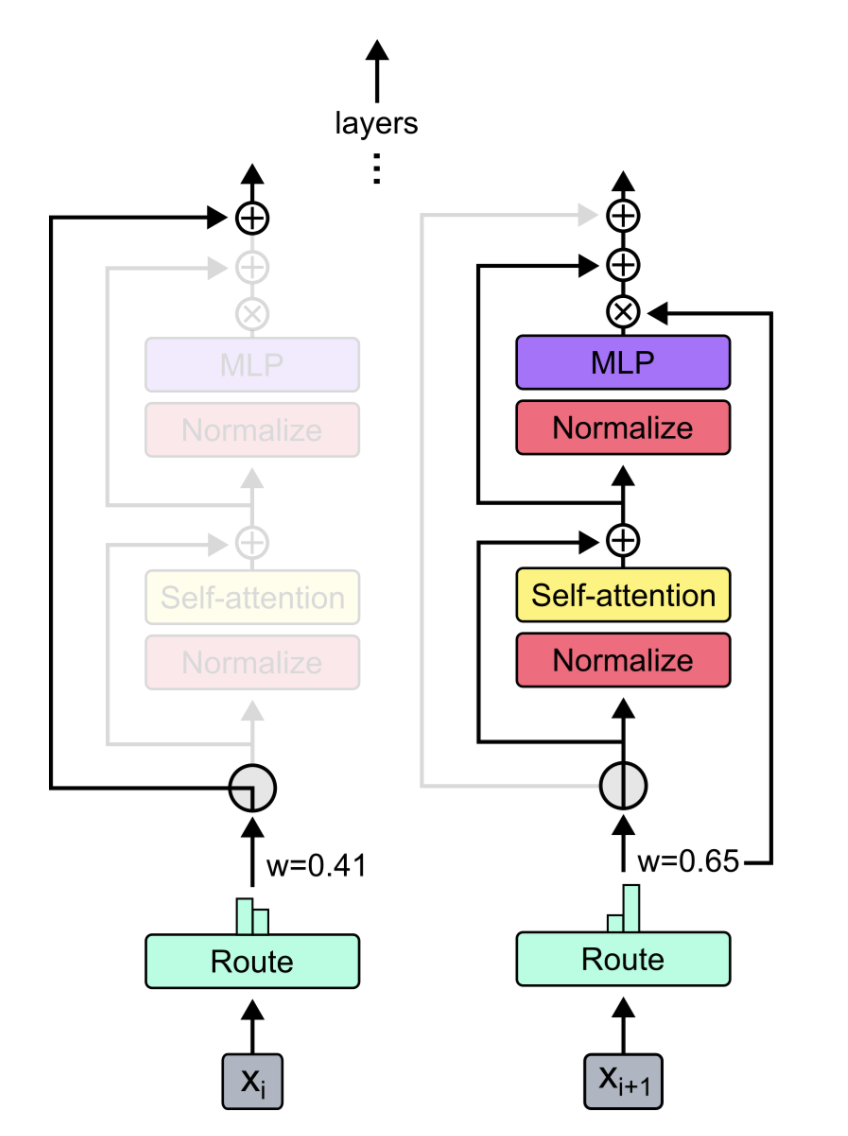

class CustomMoEAttentionBlock ( nn . Module ):

def __init__ ( self , dim , num_heads , num_experts , capacity_factor , experts ):

super (). __init__ ()

self . attn = MHSA ( dim , num_heads )

router = ExpertChoiceRouter ( dim , num_experts )

self . moe = MoELayer ( dim , router , experts , capacity_factor )

self . norm1 = nn . LayerNorm ( dim )

self . norm2 = nn . LayerNorm ( dim )

def forward ( self , x ):

x = self . norm1 ( self . attn ( x ) + x )

moe_output , aux_loss , router_z_loss = self . moe ( x )

x = self . norm2 ( moe_output + x )

return x , aux_loss , router_z_loss

experts = [ nn . Linear ( 768 , 768 ) for _ in range ( 8 )]

my_block = CustomMoEAttentionBlock (

dim = 768 ,

num_heads = 8 ,

num_experts = 8 ,

capacity_factor = 1.25 ,

experts = experts

)

# some test input

x = torch . randn ( 16 , 128 , 768 )

output , aux_loss , router_z_loss = my_block ( x ) # output shape: [16, 128, 768]该软件包使用户可以为MOE代码运行简单而可靠的absl test 。如果所有专家都以相同的模块初始化, MoELayer的输出应等于通过任何专家传递的输入张量。因此, ExpertChoiceRouter和TopkRouter都经过测试,并在测试中取得了成功。用户可以通过运行以下操作来自行运行这些测试:

from pytorch_mixtures import run_tests

run_tests ()注意:所有测试正确通过。如果测试失败,则可能是由于随机初始化中的边缘情况。再试一次,它将通过。

如果您发现此包装有用,请在您的工作中引用它:

@misc { JaisidhSingh2024 ,

author = { Singh, Jaisidh } ,

title = { pytorch-mixtures } ,

year = { 2024 } ,

publisher = { GitHub } ,

journal = { GitHub repository } ,

howpublished = { url{https://github.com/jaisidhsingh/pytorch-mixtures} } ,

}该软件包是在下面提到的开源代码的帮助下构建的: