The field of artificial intelligence has a notable new arrival: the DeepSeek-V3-0324 large language model. Developed by the DeepSeek team, the model is reshaping the industry landscape with its 641GB of weights and an innovative architecture. Perhaps most surprising, this powerful model was released quietly on the Hugging Face platform without any advance publicity, in keeping with the company's usual pragmatic style.

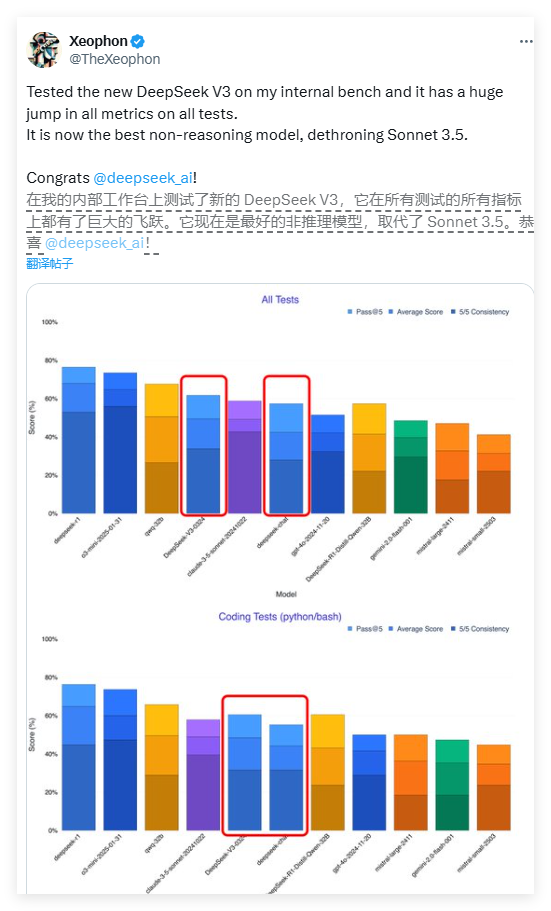

DeepSeek-V3's performance is impressive. According to tests reported by AI researcher Xeophon on the social media platform X, the new version improved substantially on every metric tested. In those results it even surpassed Anthropic's widely acclaimed commercial model Claude 3.5 Sonnet, making it one of the best non-reasoning models currently available. This progress has energized the AI research community.

One of DeepSeek-V3's most eye-catching features is that it is fully open source. Whereas most Western AI companies keep their advanced models behind paywalls, DeepSeek-V3 is released under the MIT license, so anyone can download and use the model for free, including for commercial purposes. This openness lowers the cost barrier in artificial intelligence and lets advanced technology reach a much wider range of developers.

In terms of architecture, DeepSeek-V3 uses a Mixture-of-Experts (MoE) design. Rather than running the whole network for every token, the model activates only about 37 billion of its 685 billion parameters for any given token, whereas a traditional dense model of the same size would activate all 685 billion. This selective activation greatly improves computational efficiency and reduces hardware requirements while preserving performance, and it points to a promising path for optimizing large language models.
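To make selective activation concrete, here is a minimal, hypothetical sketch of generic top-k Mixture-of-Experts routing in plain Python. The expert count, dimensions, `k=2`, softmax gating and the toy linear "experts" are all illustrative assumptions; DeepSeek-V3's actual router, expert count and expert networks differ in detail.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed, dim):
    """Hypothetical toy expert: a fixed random linear map standing in for a feed-forward network."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w * xj for w, xj in zip(row, x)) for row in weights]

    return expert


def moe_forward(x, router_weights, experts, k=2):
    """Send token vector x to its top-k experts and mix their outputs.

    Returns (output_vector, indices_of_experts_used, gate_weights).
    Experts outside the top k are never called.
    """
    # One router score per expert: dot product of that expert's router vector with x.
    logits = [sum(w * xi for w, xi in zip(rw, x)) for rw in router_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights are renormalised over the selected experts only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top, gates


# Usage: 16 toy experts, 8-dimensional token vectors, 2 experts active per token.
DIM, N_EXPERTS, K = 8, 16, 2
rng = random.Random(0)
experts = [make_expert(seed, DIM) for seed in range(N_EXPERTS)]
router = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(N_EXPERTS)]
token = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
output, used, gates = moe_forward(token, router, experts, k=K)
print(f"ran {len(used)} of {N_EXPERTS} experts: {used}")
```

Only the selected experts are evaluated for each token, so compute per token scales with `k` rather than with the total number of experts. That is the mechanism behind activating roughly 37 billion of 685 billion parameters (about 5% of the model) per token.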

DeepSeek-V3 also incorporates two further techniques: Multi-head Latent Attention (MLA) and Multi-Token Prediction (MTP). MLA compresses the keys and values that attention must cache into a compact latent representation, making long contexts much cheaper to handle, while MTP lets the model predict multiple tokens in a single step, increasing output speed by nearly 80%. Together, these innovations underpin DeepSeek-V3's strong performance.
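Both mechanisms can be illustrated with back-of-the-envelope arithmetic. The hypothetical sketch below compares how many values standard multi-head attention must cache per token with an MLA-style compressed latent, and estimates MTP's throughput as 1 + p tokens per step when one extra predicted token is accepted with probability p. The layer, head and latent dimensions and the 80% acceptance rate are assumptions chosen for illustration, not figures from this article, and real speedups also depend on verification overhead.

```python
def mha_cache_per_token(n_layers: int, n_heads: int, head_dim: int) -> int:
    """Elements cached per token by standard multi-head attention:
    one key and one value vector per head per layer."""
    return n_layers * 2 * n_heads * head_dim


def mla_cache_per_token(n_layers: int, latent_dim: int, rope_dim: int) -> int:
    """Elements cached per token under MLA-style compression:
    one shared latent vector plus a small positional key part per layer."""
    return n_layers * (latent_dim + rope_dim)


def mtp_tokens_per_step(acceptance_rate: float, extra_tokens: int = 1) -> float:
    """Expected tokens emitted per decoding step when extra_tokens drafted tokens
    are checked in order.

    The ordinary next token is always emitted; each drafted token counts only if it
    and every drafted token before it are accepted. Verification overhead is ignored.
    """
    expected, p_all_accepted = 1.0, 1.0
    for _ in range(extra_tokens):
        p_all_accepted *= acceptance_rate
        expected += p_all_accepted
    return expected


# Illustrative, assumed dimensions (meant to resemble DeepSeek-V3's published
# configuration; verify against the model card before reusing them).
LAYERS, HEADS, HEAD_DIM, LATENT, ROPE = 61, 128, 128, 512, 64
mha = mha_cache_per_token(LAYERS, HEADS, HEAD_DIM)
mla = mla_cache_per_token(LAYERS, LATENT, ROPE)
print(f"KV cache per token: MHA {mha:,} vs MLA {mla:,} elements ({mha / mla:.1f}x smaller)")

# An assumed 80% acceptance rate for one extra predicted token gives ~1.8 tokens per step.
print(f"MTP speedup at 80% acceptance: {mtp_tokens_per_step(0.8):.2f}x")
```

Under these assumptions, an acceptance rate around 80% for a single extra token lines up with the "nearly 80%" speed increase described above.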

Surprisingly, this high-performance model is relatively hardware-friendly. Developer Simon Willison pointed out that 4-bit quantization shrinks the model's storage footprint to 352GB, making it feasible to run on high-end consumer hardware. AI researcher Awni Hannun confirmed that DeepSeek-V3 runs at more than 20 tokens per second on a Mac Studio with an M3 Ultra chip and 512GB of memory. Running locally like this removes the usual dependence on data-center-scale infrastructure.
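The 352GB figure can be sanity-checked with simple arithmetic: weight storage is roughly the parameter count times the bits per parameter, divided by 8. A minimal sketch, assuming every parameter is stored at the same bit width (real quantized checkpoints generally are not that uniform):

```python
def weight_storage_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in GB (10**9 bytes).

    Ignores quantization scales, metadata and any tensors kept at higher precision.
    """
    return n_params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 685e9  # total parameter count cited in this article

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit: ~{weight_storage_gb(TOTAL_PARAMS, bits):,.1f} GB")
```

At 4 bits this gives about 342.5 GB, close to the 352GB Willison reported; the remaining gap is presumably overhead such as quantization scales or tensors left at higher precision. Either way, the result fits within the 512GB of memory on the Mac Studio mentioned above.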

Compared with the previous version, DeepSeek-V3 also has a noticeably different conversational style. Early user feedback suggests the new model writes in a more formal, technical register, in contrast to the more human, conversational tone of its predecessor. This shift may reflect a deliberate repositioning of the model toward professional use, making it better suited to technical applications.

DeepSeek-V3's release strategy highlights how the business models of Chinese AI companies differ from those of their Western peers. With access to advanced chips limited, Chinese companies have focused more on algorithmic optimization and efficiency. This "innovation under hardware constraints" may become a distinctive competitive advantage. Chinese technology giants including Baidu, Alibaba and Tencent have also adopted open-source strategies, jointly promoting a more open AI ecosystem.

Industry experts believe that DeepSeek-V3 is likely to serve as the foundation for DeepSeek's next-generation reasoning model, DeepSeek-R2. NVIDIA CEO Jensen Huang has noted that DeepSeek's R1 reasoning model consumes 100 times as much computation as non-reasoning AI, which makes achieving this level of performance under resource constraints all the more notable. If R2 continues along this trajectory, it could pose a substantial challenge to OpenAI's upcoming GPT-5.

Developers can currently download the full model weights from Hugging Face or try the model through APIs offered by platforms such as OpenRouter. DeepSeek's open strategy is redefining global AI development and signals a coming era in which advanced AI is more widely accessible, more innovative and more open.
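For readers who want to try the hosted API, here is a minimal sketch using only Python's standard library. It assumes OpenRouter's OpenAI-compatible chat completions endpoint and an API key in the `OPENROUTER_API_KEY` environment variable; the model identifier `deepseek/deepseek-chat-v3-0324` is an assumption and should be checked against OpenRouter's model list.

```python
import json
import os
import urllib.request

# Assumed endpoint and model id; confirm both in OpenRouter's documentation.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "deepseek/deepseek-chat-v3-0324"  # assumed identifier


def build_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for a single user message."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        OPENROUTER_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first choice's message text."""
    req = build_request(prompt, os.environ["OPENROUTER_API_KEY"])
    with urllib.request.urlopen(req, timeout=120) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


# Only touch the network when a key is actually configured.
if __name__ == "__main__" and "OPENROUTER_API_KEY" in os.environ:
    print(ask("Explain Mixture-of-Experts in one sentence."))
```

Building the request separately from sending it keeps the example testable offline; the network call only happens when a key is present.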
