In the field of artificial intelligence, visual reasoning has long been a difficult problem. The Groundlight research team recently open-sourced a new AI framework that aims to substantially improve how AI performs on visual tasks. The framework is meant not only to let AI recognize objects in images but also to infer deeper information from them, much like a detective.

AI has made significant progress in image recognition, but it still falls short at understanding the logical relationships behind what an image shows. Groundlight researchers point out that existing vision-language models (VLMs) often struggle with tasks that require in-depth interpretation. This is mainly because their understanding of the image itself is still limited, let alone their ability to perform complex reasoning on top of it.

Despite the great success of large language models (LLMs) in textual reasoning, comparable breakthroughs in vision remain limited. Existing VLMs often perform poorly when they must combine visual and textual cues for logical deduction, which exposes a key gap in their capabilities. Identifying the objects in an image is far from enough; the key is understanding the relationships between those objects and their context.

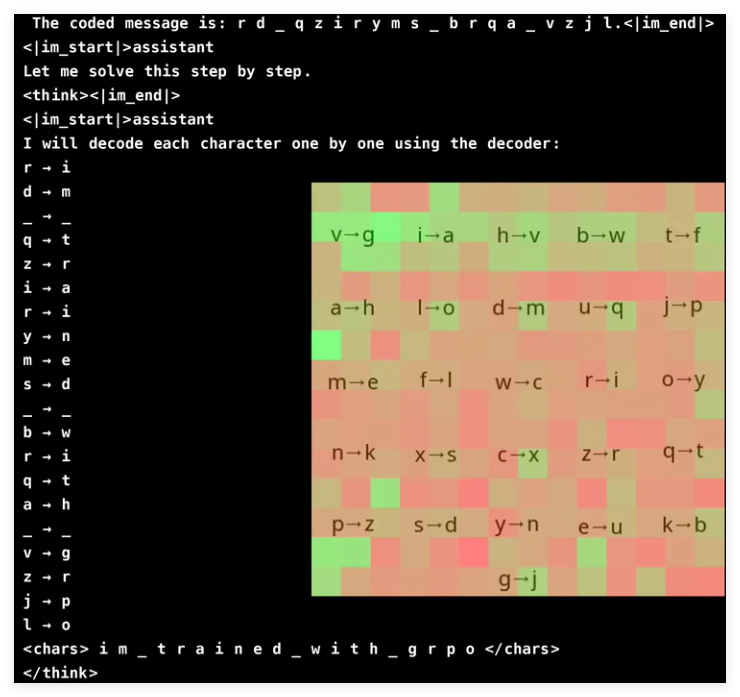

To improve VLMs' visual reasoning, the Groundlight team turned to reinforcement learning, using GRPO (Group Relative Policy Optimization) to make learning more efficient. The approach produced strong results on a cryptogram-decoding task: a model with only 3 billion parameters reached 96% accuracy. Attention analysis shows that the model actively attends to the visual input while solving the task, focusing on the relevant parts of the decoder.

Training VLMs with GRPO was not without difficulties, however, particularly around tokenization and reward design. Because models typically process text as tokens (often multi-character subword units) rather than individual characters, tasks that demand precise character-level reasoning can be hard for them. To address this, the researchers inserted spaces between the letters of the message, which simplifies the decoding process.
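The spacing trick can be illustrated with a minimal Python sketch. It assumes the message is a plain string; `space_out` and `unspace` are hypothetical helper names, not functions from the Groundlight repository, and the sketch shows only the string transformation, not the behavior of any particular tokenizer.

```python
def space_out(message: str) -> str:
    """Put a space between every character of the message.

    With subword tokenizers, spaced-out letters tend to be split into
    separate tokens, so each letter can be reasoned about individually.
    """
    return " ".join(message)


def unspace(spaced: str) -> str:
    """Invert space_out: the original characters sit at every even index."""
    return spaced[::2]


if __name__ == "__main__":
    print(space_out("HELLO"))            # H E L L O
    print(unspace(space_out("HI YOU")))  # HI YOU
```

Note that a space already present in the message becomes a run of three spaces, so a real pipeline might prefer an explicit word-separator symbol; how the researchers handled word boundaries is not described here.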

Reward design is another crucial element, because reinforcement learning models need well-structured feedback to learn effectively. The researchers used three types of reward: a format reward to keep outputs consistent, a decoding reward to encourage meaningful transformation of the scrambled text, and a correctness reward to improve accuracy. By carefully balancing these rewards, they prevented the model from learning unintended "shortcuts" (reward hacking) and ensured that it genuinely improved at deciphering the messages.
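One way the three rewards might be combined is sketched below. This is an illustrative assumption, not Groundlight's reward code: the `<think>`/`<answer>` output format, the use of `difflib` character similarity as the partial-credit decoding reward, and the weights are all hypothetical choices.

```python
import re
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical output format: reasoning inside <think> tags, final answer inside <answer> tags.
FORMAT_RE = re.compile(r"^<think>.*?</think>\s*<answer>(.*?)</answer>$", re.DOTALL)


def extract_answer(completion: str) -> Optional[str]:
    """Return the text inside <answer> tags, or None if the format is wrong."""
    match = FORMAT_RE.match(completion.strip())
    return match.group(1).strip() if match else None


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the expected tag structure, else 0.0."""
    return 1.0 if extract_answer(completion) is not None else 0.0


def decoding_reward(completion: str, target: str) -> float:
    """Partial credit in [0, 1]: character-level similarity to the target message."""
    answer = extract_answer(completion)
    if answer is None:
        return 0.0
    return SequenceMatcher(None, answer, target).ratio()


def correctness_reward(completion: str, target: str) -> float:
    """1.0 only for an exact match with the target message."""
    return 1.0 if extract_answer(completion) == target else 0.0


def total_reward(completion: str, target: str, weights=(0.2, 0.3, 0.5)) -> float:
    """Weighted sum of the three rewards; the weights are placeholders."""
    w_format, w_decode, w_correct = weights
    return (
        w_format * format_reward(completion)
        + w_decode * decoding_reward(completion, target)
        + w_correct * correctness_reward(completion, target)
    )
```

Weighting correctness most heavily while keeping the format reward small is one way to stop a model from collecting most of its reward through formatting alone; the actual balance the researchers used is not stated here.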

Rather than training a separate value model (critic) to estimate how good each response is, GRPO generates multiple responses for each query and scores each one relative to the others in its group. This makes training more stable and yields a smoother learning curve. The study also highlights the potential of VLMs on reasoning-based tasks, while acknowledging the high computational cost of complex visual models.
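The core of GRPO's group comparison can be sketched in a few lines of Python. Each sampled response's reward is normalized against the mean and standard deviation of its group, and that advantage feeds a PPO-style clipped objective. This is a simplified sketch, not the repository's training code: it omits the KL penalty toward a reference policy and the token-level averaging that full implementations include, and using the population standard deviation is an arbitrary choice.

```python
import math
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Score each response relative to its group: (r - mean) / (std + eps).

    No learned critic is needed; the group itself serves as the baseline.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(logp_new: float, logp_old: float, advantage: float,
                      clip_eps: float = 0.2) -> float:
    """PPO-style clipped objective for one response (to be maximized)."""
    ratio = math.exp(logp_new - logp_old)           # pi_new / pi_old
    clipped = max(min(ratio, 1 + clip_eps), 1 - clip_eps)
    return min(ratio * advantage, clipped * advantage)


if __name__ == "__main__":
    # Four sampled answers to the same query, two correct and two wrong.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

If every response in a group receives the same reward, all advantages are zero and that group contributes no learning signal, which is one reason partial-credit rewards such as the decoding reward can help.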

To address efficiency, the Groundlight team proposed techniques such as selective model escalation, that is, calling on more expensive models only in ambiguous cases. They also suggest integrating pretrained object detection, segmentation, and depth estimation models to strengthen reasoning without significantly increasing computational overhead. This tool-based approach offers a scalable alternative to training ever-larger end-to-end models, balancing efficiency and accuracy.
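Selective escalation can be pictured as a confidence-gated router. In this sketch, `cheap_model` and `expensive_model` are hypothetical callables that return a label with a confidence score, and the 0.8 threshold is an arbitrary placeholder; the source does not specify how Groundlight decides that a case is ambiguous.

```python
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class Prediction:
    label: str
    confidence: float  # assumed to be a calibrated score in [0, 1]


# A "model" here is any callable taking (image, question) and returning a Prediction.
Model = Callable[[Any, str], Prediction]


def answer_with_escalation(
    image: Any,
    question: str,
    cheap_model: Model,
    expensive_model: Model,
    threshold: float = 0.8,
) -> Tuple[Prediction, bool]:
    """Try the cheap model first; escalate only when it is not confident enough.

    Returns the chosen prediction and whether escalation happened.
    """
    first = cheap_model(image, question)
    if first.confidence >= threshold:
        return first, False
    # Ambiguous case: pay for the larger model.
    return expensive_model(image, question), True
```

Most of the cost savings depend on how often the cheap model is confident and on whether its confidence scores are well calibrated; a poorly calibrated model would escalate too rarely or too often.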

By integrating reinforcement learning, and GRPO in particular, the Groundlight team has made significant progress in improving VLMs. Tested on a cryptogram-decoding task, their model achieved impressive accuracy. The result demonstrates the potential of VLMs on complex visual reasoning tasks and points to new directions for future AI research.

Project: https://github.com/groundlight/r1_vlm

Demo: https://huggingface.co/spaces/Groundlight/grpo-vlm-decoder