On February 21, 2025, Alibaba's internationalization team announced the open-source release of Ovis2, its latest series of multimodal large language models (MLLMs). The release extends Alibaba's work in artificial intelligence and gives developers worldwide a capable set of tools for building and applying multimodal large models.

Ovis2 is the latest version of the Ovis model series from Alibaba's international team. Compared with its predecessor, version 1.6, Ovis2 substantially improves data construction and training methods. These changes raise the capability density of the smaller models and, through instruction fine-tuning and preference learning, considerably strengthen chain-of-thought (CoT) reasoning. Ovis2 also adds video and multi-image processing and makes notable progress in multilingual capability and in OCR for complex scenes, improving the model's practicality and generalization.

The open-source Ovis2 series includes six sizes: 1B, 2B, 4B, 8B, 16B, and 34B, each reaching state-of-the-art (SOTA) performance among models of comparable size. Ovis2-34B performs especially well on the OpenCompass leaderboards. On the multimodal general-capability leaderboard it ranks second among all open-source models, outperforming many 70B open-source flagship models with less than half their parameter count. On the multimodal mathematical-reasoning leaderboard it ranks first, and the other sizes also show strong reasoning ability. These results support the effectiveness of the Ovis architecture and show the potential of the open-source community to advance multimodal large models.

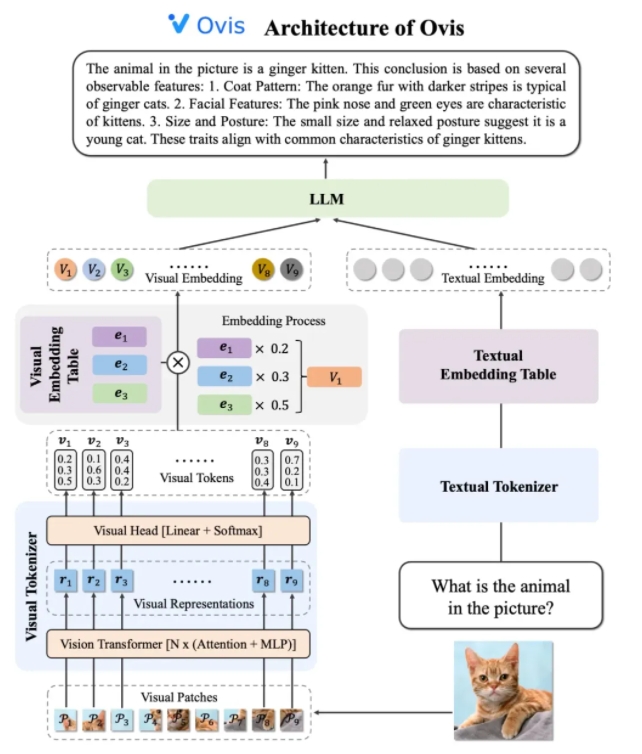

Ovis2's architecture is designed to address the mismatch between the embedding strategies used for different modalities. It consists of three core components: a visual tokenizer, a visual embedding table, and an LLM (large language model). The visual tokenizer splits the input image into patches, extracts their features with a Vision Transformer (ViT), and uses a visual head to match those features against a vocabulary of "visual words", producing a probabilistic visual token for each patch. The visual embedding table stores the embedding vector for each visual word, so that, like text tokens, visual tokens are mapped to embeddings through a lookup table. The LLM then concatenates the visual and text embeddings, processes them together, and generates the text output that completes the multimodal task.
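To make the data flow concrete, here is a minimal sketch in plain Python, assuming the probabilistic visual token is a softmax distribution over the visual vocabulary and the visual embedding is the probability-weighted sum of the visual embedding table's rows. The linear `visual_head` stands in for the ViT plus visual head, and all function names are hypothetical; this is an illustration, not the Ovis2 implementation.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def visual_head(patch_feature, head_weights):
    """Hypothetical stand-in for the visual head: one linear score per visual word.

    head_weights has V rows (one per visual word), each the length of
    patch_feature. In the real model the feature would come from a ViT.
    """
    return [sum(w * x for w, x in zip(row, patch_feature)) for row in head_weights]

def probabilistic_visual_embedding(patch_logits, visual_table):
    """Turn logits over V visual words into one embedding of size D.

    The softmax gives a probabilistic visual token; the embedding is the
    probability-weighted sum of the rows of the V x D visual embedding table.
    """
    probs = softmax(patch_logits)
    dim = len(visual_table[0])
    return [sum(p * row[d] for p, row in zip(probs, visual_table)) for d in range(dim)]

def build_input_sequence(patch_features, head_weights, visual_table, text_ids, text_table):
    """Concatenate visual and text embeddings into one sequence for the LLM.

    Simplification (assumption): visual embeddings are placed before the text
    embeddings; a real model would insert them at an image placeholder.
    """
    visual = [
        probabilistic_visual_embedding(visual_head(f, head_weights), visual_table)
        for f in patch_features
    ]
    text = [text_table[i] for i in text_ids]
    return visual + text
```

The design point the sketch illustrates: when a patch's distribution is nearly one-hot, its embedding is essentially a single row of the table, just as a text token selects one row of the text embedding table; softer distributions blend several visual words.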

Ovis2 is trained in four stages to build up its multimodal understanding. The first stage freezes most of the LLM and ViT (Vision Transformer) parameters and trains the visual module, which learns the transformation from visual features to embeddings. The second stage further strengthens the visual module's feature extraction and improves high-resolution image understanding, multilingual ability, and OCR. The third stage uses caption data formatted as conversations to align the visual embeddings with the LLM's dialogue format. The fourth stage performs multimodal instruction tuning and preference learning, further improving how well the model follows user instructions and the quality of its output across modalities.
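A staged schedule like this is often expressed as a per-stage table of which parameter groups are trainable. The sketch below is hypothetical: the group names are illustrative labels, stage 1 simplifies the description above (LLM and ViT backbone frozen), and the freeze settings for stages 2 to 4 are assumptions chosen for illustration, not published Ovis2 settings.

```python
from dataclasses import dataclass

# Hypothetical labels for parameter groups in an Ovis-style model.
GROUPS = ("vit_backbone", "visual_head", "visual_embedding_table", "llm")

@dataclass(frozen=True)
class Stage:
    data: str             # training data / objective, paraphrased from the text
    trainable: frozenset  # groups whose parameters receive gradients

STAGES = {
    # Stage 1: LLM and ViT backbone frozen; the visual module learns features -> embeddings.
    1: Stage("visual features to embeddings",
             frozenset({"visual_head", "visual_embedding_table"})),
    # Stages 2-4: trainable sets below are ASSUMPTIONS for illustration only.
    2: Stage("high-resolution, multilingual and OCR data",
             frozenset({"vit_backbone", "visual_head", "visual_embedding_table"})),
    3: Stage("caption data formatted as dialogue", frozenset(GROUPS)),
    4: Stage("multimodal instructions + preference data", frozenset(GROUPS)),
}

def apply_stage(stage_id):
    """Return a {group: requires_grad} map for the given training stage."""
    if stage_id not in STAGES:
        raise ValueError(f"unknown stage {stage_id}; expected 1-4")
    trainable = STAGES[stage_id].trainable
    return {group: group in trainable for group in GROUPS}
```

In a PyTorch model, each flag would then be applied with `param.requires_grad_(flag)` to every parameter in the corresponding group before that stage's training run.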

To improve video understanding, Ovis2 introduces a new keyframe selection algorithm. It selects the most useful frames based on three criteria: each frame's relevance to the text, the diversity of the selected frames as a set, and the temporal order of the frames. By combining high-dimensional conditional similarity computation, a determinantal point process (DPP), and a Markov decision process (MDP), the algorithm efficiently selects keyframes within a limited visual context, which substantially improves video understanding performance.
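The exact Ovis2 formulation is not given here, so the following sketch shows only the DPP ingredient, using the standard greedy MAP approximation: a kernel L[i][j] = q_i · sim(i, j) · q_j rewards frames relevant to the text (q) while the determinant penalizes near-duplicate frames. Assumptions: frame and text features are non-zero vectors, relevance is scored as exp(cosine similarity), and `select_keyframes` is a hypothetical helper. The conditional similarity and MDP components are not modeled; returning indices in sorted order is only a simple stand-in for respecting temporal order.

```python
import math

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def det(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    result = 1.0
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(a[r][c]))
        if abs(a[pivot][c]) < 1e-12:
            return 0.0  # singular: the selected frames are linearly dependent
        if pivot != c:
            a[c], a[pivot] = a[pivot], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= factor * a[c][k]
    return result

def select_keyframes(frame_feats, text_feat, k):
    """Greedy DPP MAP selection of k frames (hypothetical helper).

    Each step adds the frame that maximizes det(L_S) for the selected set S.
    """
    n = len(frame_feats)
    quality = [math.exp(cosine(f, text_feat)) for f in frame_feats]  # text relevance
    kernel = [[quality[i] * cosine(frame_feats[i], frame_feats[j]) * quality[j]
               for j in range(n)] for i in range(n)]
    selected = []
    for _ in range(min(k, n)):
        def subset_det(i):
            idx = selected + [i]
            return det([[kernel[a][b] for b in idx] for a in idx])
        candidates = [i for i in range(n) if i not in selected]
        selected.append(max(candidates, key=subset_det))
    return sorted(selected)  # report frames in temporal (index) order
```

With two identical frames and one distinct frame, the kernel submatrix for the two duplicates is singular (determinant 0), so asking for two keyframes yields one of the duplicates plus the distinct frame rather than both duplicates.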

Across the OpenCompass multimodal leaderboards, Ovis2 models of different sizes achieve SOTA results on multiple benchmarks. Ovis2-34B, for example, ranks second on the multimodal general-capability leaderboard and first on the mathematical-reasoning leaderboard. Ovis2 also achieves leading results on the video understanding leaderboard, further demonstrating its strength in multimodal tasks.

Alibaba's internationalization team said that open source is a key driver of progress in AI. By publicly sharing its Ovis2 research, the team hopes to explore the frontier of multimodal large models together with developers worldwide and to inspire more innovative applications. Ovis2's code is open-sourced on GitHub, the models are available on Hugging Face and ModelScope, and an online demo is available for users to try. The related research paper is published on arXiv for reference by developers and researchers.

Code: https://github.com/AIDC-AI/Ovis

Model (Hugging Face): https://huggingface.co/AIDC-AI/Ovis2-34B

Models (ModelScope): https://modelscope.cn/collections/Ovis2-1e2840cb4f7d45

Demo: https://huggingface.co/spaces/AIDC-AI/Ovis2-16B

arXiv: https://arxiv.org/abs/2405.20797