IBM took an important step in May, announcing that it was open-sourcing its Granite 13B large language model (LLM) for enterprise applications. The move underscores IBM's standing in artificial intelligence and gives enterprise users a capable tool for handling complex business needs. Recently, Armand Ruiz, vice president of product for IBM's AI platform, went further and disclosed the complete dataset used to train Granite 13B. The dataset totals 6.48 TB and spans a wide range of domains.

Notably, this raw data was rigorously preprocessed down to 2.07 TB, a 68% reduction. When publishing the data, Ruiz stressed that this preprocessing step is essential to ensuring that the dataset is high quality, unbiased, and compliant with ethical and legal requirements. Because enterprise applications demand highly accurate and reliable data, IBM invested substantial resources in this process so that the final dataset would meet those needs.
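The stated 68% figure follows directly from the two dataset sizes:

$$
\frac{6.48\ \text{TB} - 2.07\ \text{TB}}{6.48\ \text{TB}} = \frac{4.41}{6.48} \approx 0.681 \approx 68\%
$$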

The dataset draws on authoritative sources across many fields. These include more than 2.4 million scientific preprints from arXiv, Common Crawl's open web crawl, and mathematical question-answer pairs from DeepMind Mathematics. The dataset also contains Free Law (legal opinions from U.S. courts), GitHub Clean (code data provided by CodeParrot), and Hacker News (computer science and entrepreneurship news from 2007 to 2018).

Other important sources include OpenWebText (an open-source recreation of OpenAI's WebText corpus), Project Gutenberg (free e-books, focusing on older works), PubMed Central's biomedical and life science papers, and 10-K/Q filings submitted to the U.S. Securities and Exchange Commission (SEC) from 1934 to 2022. The dataset also includes user-contributed content from the Stack Exchange network, U.S. patents granted by the USPTO between 1975 and May 2023, unstructured web content provided by Webhose, and content from eight English-language Wikimedia projects.

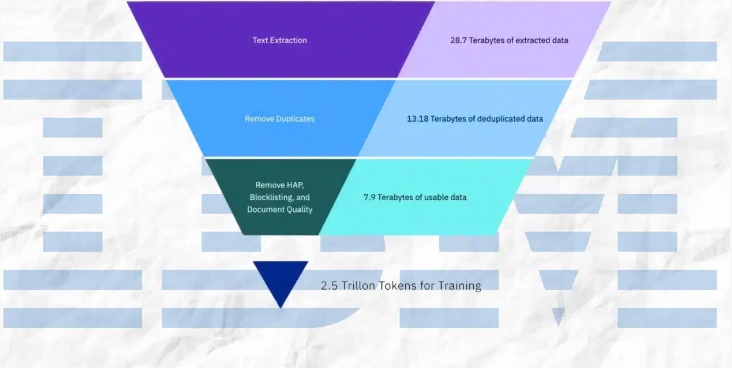

During preprocessing, IBM applied a range of techniques, including text extraction, deduplication, language identification, sentence splitting, and annotation of hate, abuse, and profanity (HAP). Document quality annotation, URL blocklist annotation, filtering, and tokenization were also applied. These steps are intended to keep the dataset clean and high quality, laying a solid foundation for model training.
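To make the sequence of preprocessing steps more concrete, here is a toy, single-machine sketch in Python. It is not IBM's pipeline and makes no claim about how IBM implemented any step. The HAP word list, URL blocklist, minimum-word quality threshold, ASCII-based language check, and word-level tokenizer are all hypothetical stand-ins chosen only for illustration; production pipelines typically rely on trained classifiers, fuzzy deduplication, and subword tokenizers instead.

```python
import hashlib
import re
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Hypothetical stand-ins: these are NOT IBM's lists or thresholds.
HAP_TERMS = {"idiot", "hate"}      # toy hate/abuse/profanity lexicon
URL_BLOCKLIST = {"spam.example"}   # toy blocked domains
MIN_WORDS = 5                      # toy document-quality threshold


@dataclass
class Doc:
    url: str
    text: str
    annotations: dict = field(default_factory=dict)
    tokens: list = field(default_factory=list)


def extract_text(raw: str) -> str:
    # Text extraction: strip HTML-like tags and collapse whitespace.
    return re.sub(r"\s+", " ", re.sub(r"<[^>]+>", " ", raw)).strip()


def deduplicate(docs):
    # Exact deduplication on a SHA-256 hash of the lowercased text.
    seen, unique = set(), []
    for d in docs:
        h = hashlib.sha256(d.text.lower().encode("utf-8")).hexdigest()
        if h not in seen:
            seen.add(h)
            unique.append(d)
    return unique


def looks_english(text: str) -> bool:
    # Crude language-ID stand-in: over 90% of letters are ASCII.
    letters = [c for c in text if c.isalpha()]
    return bool(letters) and sum(c.isascii() for c in letters) / len(letters) > 0.9


def annotate(d: Doc) -> None:
    # Sentence splitting, HAP, quality, and URL-blocklist annotations.
    words = re.findall(r"[a-z']+", d.text.lower())
    d.annotations["sentences"] = [s for s in re.split(r"(?<=[.!?])\s+", d.text) if s]
    d.annotations["hap"] = any(w in HAP_TERMS for w in words)
    d.annotations["quality_ok"] = len(words) >= MIN_WORDS
    d.annotations["url_blocked"] = urlparse(d.url).hostname in URL_BLOCKLIST


def run_pipeline(raw_docs):
    docs = [Doc(url, extract_text(raw)) for url, raw in raw_docs]
    docs = deduplicate(docs)
    docs = [d for d in docs if looks_english(d.text)]
    kept = []
    for d in docs:
        annotate(d)
        a = d.annotations
        # Filtering: drop any document flagged by an annotator.
        if a["hap"] or a["url_blocked"] or not a["quality_ok"]:
            continue
        # Tokenization stand-in: lowercase words and punctuation marks.
        d.tokens = re.findall(r"\w+|[^\w\s]", d.text.lower())
        kept.append(d)
    return kept


if __name__ == "__main__":
    raw = [
        ("https://news.example/a", "<p>IBM released a dataset. It was filtered carefully.</p>"),
        ("https://news.example/b", "<p>IBM released a dataset. It was filtered carefully.</p>"),
        ("https://spam.example/c", "<p>Buy now, this is a great deal for everyone today.</p>"),
        ("https://news.example/d", "<p>Too short.</p>"),
    ]
    print([d.url for d in run_pipeline(raw)])  # ['https://news.example/a']
```

In this toy run, the second document is removed as an exact duplicate, the third is dropped by the URL blocklist, and the fourth fails the quality threshold. Annotating first and filtering afterward mirrors the order of steps described above, and it keeps the annotations available for inspection.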

Beyond disclosing the dataset, IBM also released four Granite code models ranging from 3 billion to 34 billion parameters. These models performed well across a range of benchmarks, outperforming comparable models such as Code Llama and Llama 3 on many tasks. The result further demonstrates IBM's technical strength and capacity for innovation in artificial intelligence.

In summary, these measures give enterprise users powerful tools while contributing meaningfully to the broader field of artificial intelligence. By publishing a high-quality dataset and releasing high-performing models, IBM is encouraging wider adoption of AI in enterprise applications and paving the way for future innovation.