DeepSeek has released DeepSeek-Coder-V2, a new open-source model that surpasses GPT-4-Turbo in coding and mathematical capabilities and significantly extends both multi-language support and context length. It adopts a Mixture-of-Experts (MoE) architecture and is optimized specifically for code generation and mathematical reasoning. The model comes in two sizes, 236B parameters and 16B parameters, to suit different application needs. The code, paper, and models are all open source and free for commercial use, with no application required.

Webmaster's Home (ChinaZ.com), June 18: DeepSeek recently announced the release of an open-source model called DeepSeek-Coder-V2, which surpasses GPT-4-Turbo in coding and mathematical capabilities and significantly expands multi-language support and context length. Built on the model structure of DeepSeek-V2, DeepSeek-Coder-V2 adopts a Mixture-of-Experts (MoE) architecture designed specifically to strengthen code and mathematical reasoning.

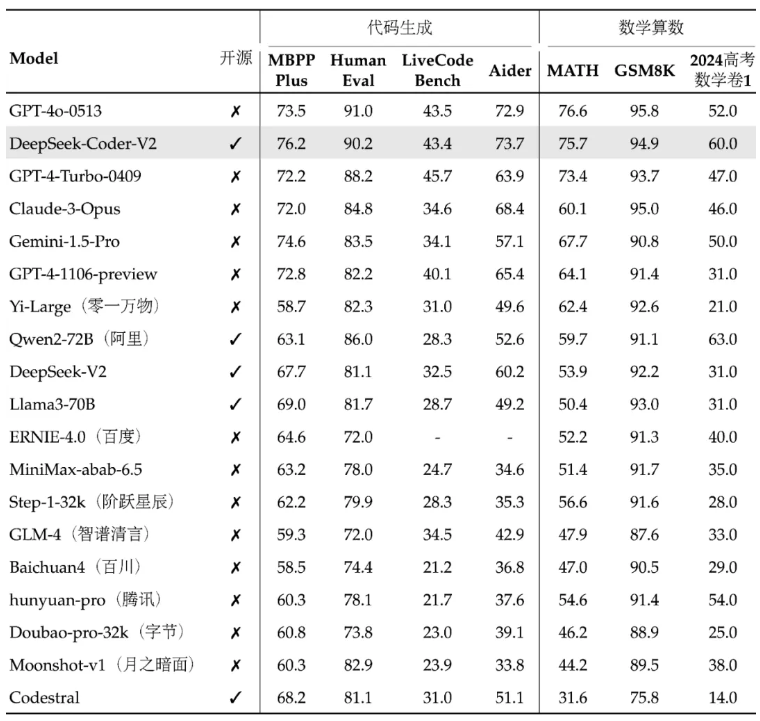

DeepSeek-Coder-V2 ranks among the best-performing models in the world, and its code generation and mathematical reasoning are particularly strong. The model, its code, and the accompanying paper are all open source and available for free commercial use, with no application required. The model is offered in two sizes, 236B parameters and 16B parameters, to suit different application needs.

On multi-language support, DeepSeek-Coder-V2 expands the number of supported programming languages from 86 to 338, covering a wider range of development needs. Its supported context length also grows from 16K to 128K, allowing it to handle much longer inputs. DeepSeek-Coder-V2 is available as an API service as well; the API supports a 32K context and is priced the same as DeepSeek-V2.
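To make the difference between these context limits concrete, the following is a minimal sketch that checks whether a prompt is likely to fit within each one. It is not part of DeepSeek's tooling: the names `CONTEXT_LIMITS`, `estimate_tokens`, and `fits` are hypothetical, "K" is assumed to mean 1,024 tokens, and the four-characters-per-token ratio is a rough heuristic rather than the behavior of the model's actual tokenizer.

```python
# Context limits quoted in the article, in tokens.
# Assumption: "K" is read as 1,024 tokens; the article does not specify.
CONTEXT_LIMITS = {
    "previous_limit": 16 * 1024,   # the earlier 16K context
    "coder_v2_model": 128 * 1024,  # the 128K context of the model
    "coder_v2_api": 32 * 1024,     # the 32K context of the API service
}

# Assumption: a crude average of 4 characters per token. Real counts
# depend on the tokenizer and on the content being encoded.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(text: str, target: str, reserved_for_output: int = 0) -> bool:
    """Return True if the estimated prompt plus reserved output fits."""
    needed = estimate_tokens(text) + reserved_for_output
    return needed <= CONTEXT_LIMITS[target]


if __name__ == "__main__":
    # A 200,000-character input, roughly 50,000 estimated tokens.
    prompt = "x" * 200_000
    for name in CONTEXT_LIMITS:
        print(name, fits(prompt, name))
```

Under these assumptions, an input of that size would fit the 128K model context but not the 32K API context, so long inputs sent through the API may need to be split or trimmed first.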

In standard benchmark tests, DeepSeek-Coder-V2 outperforms some closed-source models in code generation, code completion, code repair, and mathematical reasoning. Users can download several versions of the model, including base and instruct versions, at both parameter scales.

DeepSeek also provides an online demo, a GitHub repository, and a technical report to help users understand and use DeepSeek-Coder-V2. The release brings strong code and mathematical capabilities to the open-source community and helps advance the development and application of related technologies.

Project address: https://top.aibase.com/tool/deepseek-coder-v2

Online experience: https://chat.deepseek.com/sign_in

The open-source release of DeepSeek-Coder-V2 gives developers a powerful tool and marks a significant advance in open-source large-model technology. Its free commercial license and convenient online demo should further broaden the adoption of artificial intelligence, and its future development is worth watching.