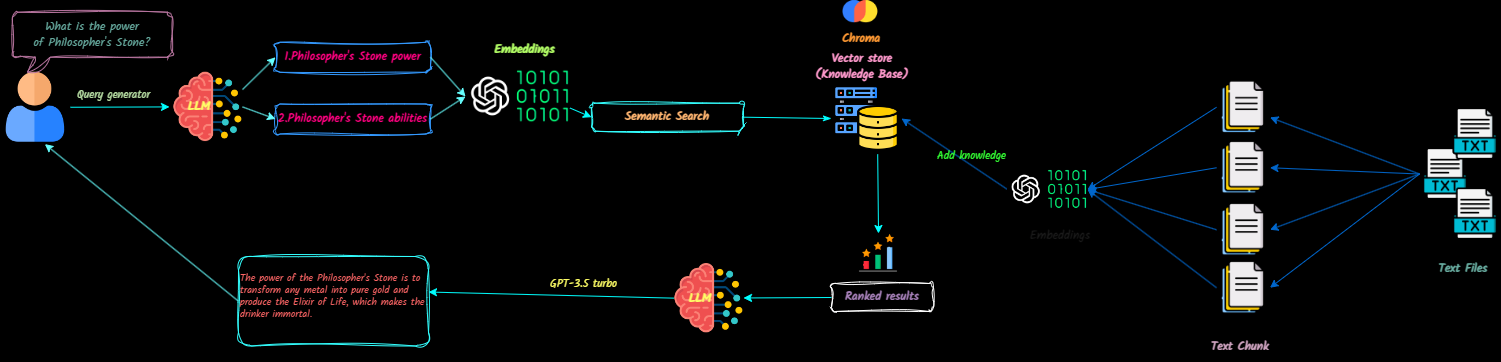

QnA-With-RAG is a full-stack application that turns text documents into context that Large Language Models (LLMs) can reference during chats. You can add and delete documents and create multiple knowledge bases.

This project aims to create a production-ready chatbot using the OpenAI API. It uses a vector database (ChromaDB) to store document embeddings and LangSmith to trace LLM calls.

Watch a demo of the application here.

Future updates will include:

Clone the Repository

git clone [email protected]:Ja-yy/QnA-With-RAG.git

Set Up Environment Variables

Create a .env file with the following content:

OPENAI_API_KEY='<your_open_ai_key>'

EMBEDDING_MODEL='text-embedding-ada-002'

CHAT_MODEL='gpt-3.5-turbo'

TEMPERATURE=0

MAX_RETRIES=2

REQUEST_TIMEOUT=15

CHROMADB_HOST="chromadb"

CHROMADB_PORT="8000"

CHROMA_SERVER_AUTH_CREDENTIALS="<test-token>"

CHROMA_SERVER_AUTH_CREDENTIALS_PROVIDER="chromadb.auth.token.TokenConfigServerAuthCredentialsProvider"

CHROMA_SERVER_AUTH_PROVIDER="chromadb.auth.token.TokenAuthServerProvider"

CHROMA_SERVER_AUTH_TOKEN_TRANSPORT_HEADER="AUTHORIZATION"
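The variables above can be checked before starting the containers. The following is a minimal sketch, not the application's actual loading code: `parse_env_text`, `token_auth_header`, `build_settings`, and the `Settings` class are hypothetical names introduced for illustration, the plain `http://` scheme is an assumption, and the header mapping reflects how Chroma's token authentication is commonly documented (`AUTHORIZATION` sends a bearer token, `X_CHROMA_TOKEN` sends the raw token).

```python
from dataclasses import dataclass
from typing import Dict, Mapping

# Keys the sketch treats as mandatory (an assumption, not taken from the app's code).
REQUIRED_KEYS = (
    "OPENAI_API_KEY",
    "EMBEDDING_MODEL",
    "CHAT_MODEL",
    "CHROMADB_HOST",
    "CHROMADB_PORT",
    "CHROMA_SERVER_AUTH_CREDENTIALS",
)


def parse_env_text(text: str) -> Dict[str, str]:
    """Parse KEY=value lines, stripping one pair of matching quotes."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and anything that is not an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def token_auth_header(token: str, transport: str = "AUTHORIZATION") -> Dict[str, str]:
    """Build the HTTP header a client would send for token authentication."""
    if transport == "AUTHORIZATION":
        return {"Authorization": f"Bearer {token}"}
    if transport == "X_CHROMA_TOKEN":
        return {"X-Chroma-Token": token}
    raise ValueError(f"Unsupported token transport header: {transport}")


@dataclass(frozen=True)
class Settings:
    chat_model: str
    embedding_model: str
    temperature: float
    max_retries: int
    request_timeout: int
    chroma_url: str
    chroma_auth_header: Dict[str, str]


def build_settings(env: Mapping[str, str]) -> Settings:
    """Validate required keys and convert numeric values to real numbers."""
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise ValueError("Missing required settings: " + ", ".join(missing))
    return Settings(
        chat_model=env["CHAT_MODEL"],
        embedding_model=env["EMBEDDING_MODEL"],
        temperature=float(env.get("TEMPERATURE", "0")),
        max_retries=int(env.get("MAX_RETRIES", "2")),
        request_timeout=int(env.get("REQUEST_TIMEOUT", "15")),
        # Plain HTTP is assumed here; adjust if the service is behind TLS.
        chroma_url=f"http://{env['CHROMADB_HOST']}:{int(env['CHROMADB_PORT'])}",
        chroma_auth_header=token_auth_header(
            env["CHROMA_SERVER_AUTH_CREDENTIALS"],
            env.get("CHROMA_SERVER_AUTH_TOKEN_TRANSPORT_HEADER", "AUTHORIZATION"),
        ),
    )
```

To use it, read the file and pass the parsed values along, for example `build_settings(parse_env_text(open(".env").read()))`. A missing key raises a `ValueError` that names it, which is usually easier to diagnose than a failure inside a running container.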

Build and Run the Application

Execute the following command:

docker-compose up -d --build

Access the Application

Open your web browser and go to localhost to start using the app.
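Because the containers start in the background, the app may take a moment to respond. The sketch below polls the address until it answers; it assumes the app is served over plain HTTP at `http://localhost`, and `is_app_up` and `wait_for_app` are hypothetical helper names, not part of the project.

```python
import time
import urllib.error
import urllib.request


def is_app_up(url: str = "http://localhost", timeout: float = 5.0) -> bool:
    """Return True if the server answers with a status below 500."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 500
    except urllib.error.HTTPError as err:
        # The server responded, just not with a success status.
        return err.code < 500
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, or timeout: not reachable yet.
        return False


def wait_for_app(
    url: str = "http://localhost",
    attempts: int = 10,
    delay: float = 3.0,
    timeout: float = 5.0,
) -> bool:
    """Poll the URL up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if is_app_up(url, timeout=timeout):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

Calling `wait_for_app()` after `docker-compose up` returns `True` once the page is reachable and `False` if it never responds within the allotted attempts.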

NGINX Configuration

Adjust the NGINX configuration in the nginx directory for optimal performance.

What should I do if I encounter issues?

Check the output of docker-compose logs for troubleshooting.

How can I contribute?

Enjoy using the app! :)