The proposed Healthcare Monitoring System using IoT and NLP is an integrated platform that combines a smart band, a mobile application, and a generative question-answering system to support healthcare monitoring and medical assistance for patients and doctors. The smart band collects vital signs and stores them in a database for real-time access by both patients and healthcare providers. The BioGPT-PubMedQA-Prefix-Tuning model, deployed as a chatbot, answers patients' medical questions and provides initial prescriptions. The chatbot also serves as a doctor's assistant, helping physicians with medical questions during patient consultations. The mobile application is the primary interface for both patients and doctors, with separate portals offering features tailored to each group.



API and Tokens used in App

API_URL = "https://api-inference.huggingface.co/models/Amira2045/BioGPT-Finetuned"

headers = {"Authorization": "Bearer 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"}

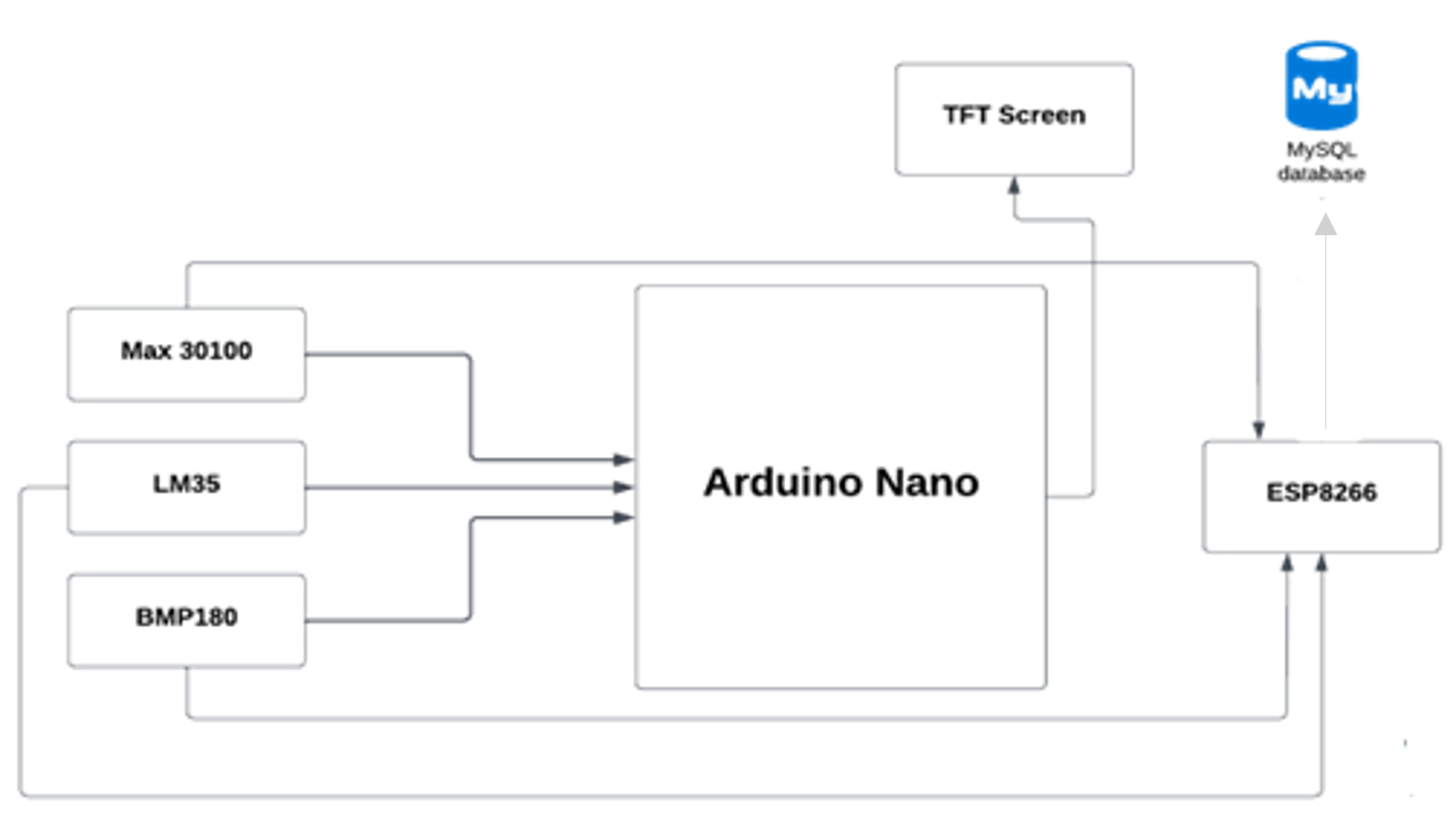

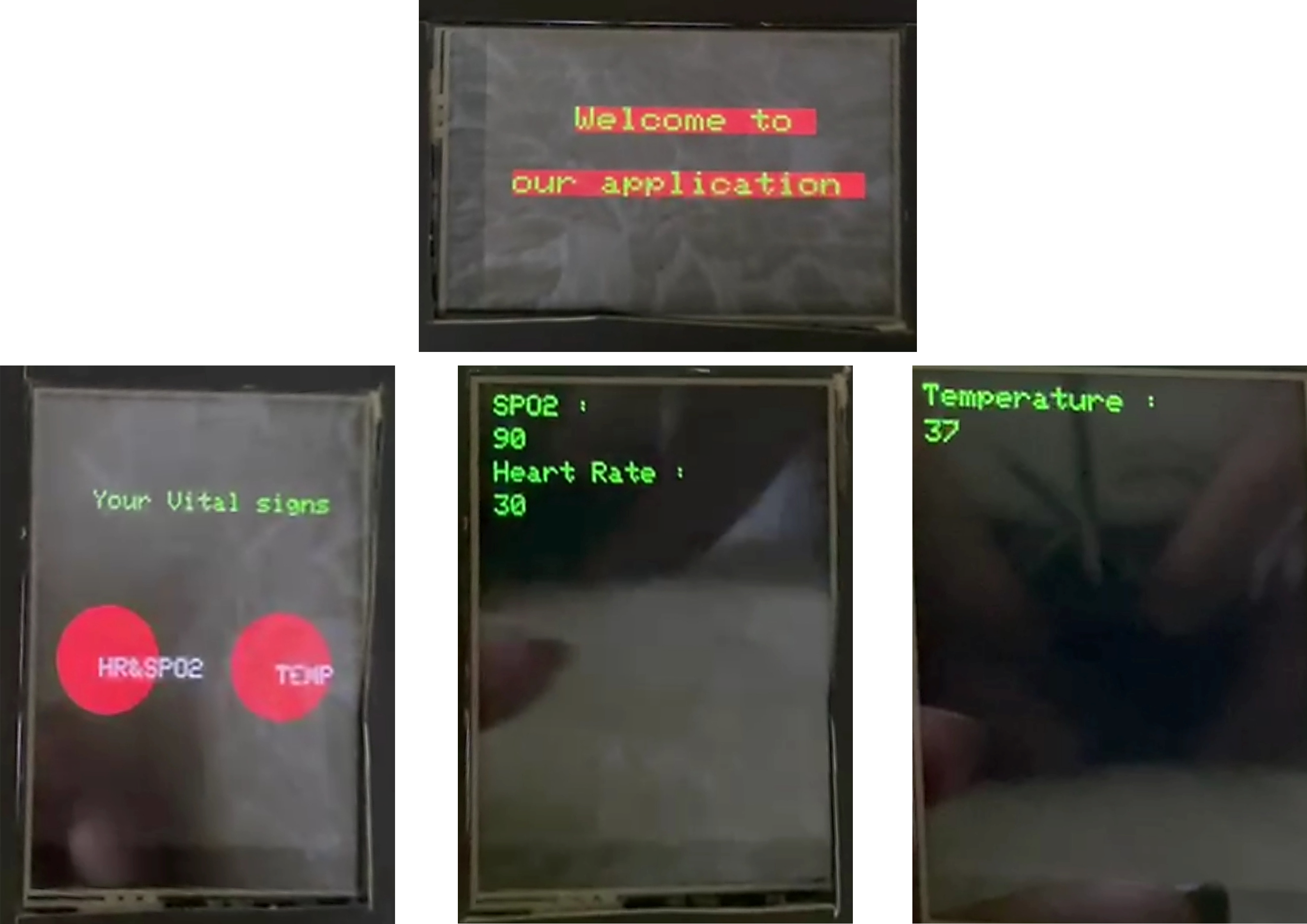

The microcontroller (Arduino Nano) sends vital signs to our database through the ESP8266 Wi-Fi module; the mobile application then fetches the data from the database.

BioGPT-PubMedQA-Prefix-Tuning is a fine-tuned version of BioGPT, a large language model (LLM) for the biomedical domain; the goal of fine-tuning is to answer medical questions. The model is deployed as a chatbot within the mobile application, so users can ask health-related questions and receive answers. The chatbot acts as a virtual medical assistant, providing initial prescriptions and guidance based on users' symptoms and medical history.

| Metric | BioGPT-Large | BioGPT-PubMedQA-Prefix-Tuning |
|---|---|---|
| Loss | 12.37 | 9.20 |
| Perplexity | 237016.3 | 1350.9 |
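The README does not say how the perplexity figures were computed. A minimal sketch, assuming the usual definition (perplexity is the exponential of the mean token-level cross-entropy loss in nats), lets a reader relate the two rows of the table. The function name is illustrative, not part of the project.

```python
import math


def perplexity_from_loss(mean_loss: float) -> float:
    """Perplexity under the common definition exp(mean cross-entropy in nats)."""
    return math.exp(mean_loss)


# Loss values copied from the table; the definition itself is an assumption.
reported_loss = {
    "BioGPT-Large": 12.37,
    "BioGPT-PubMedQA-Prefix-Tuning": 9.20,
}

for model_name, loss in reported_loss.items():
    print(f"{model_name}: exp({loss}) = {perplexity_from_loss(loss):.1f}")
```

Under that assumption, exp(12.37) is roughly 2.36 × 10⁵, close to the reported 237016.3, while exp(9.20) is roughly 9.9 × 10³ rather than 1350.9, so the fine-tuned column's loss and perplexity were presumably computed or averaged differently.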

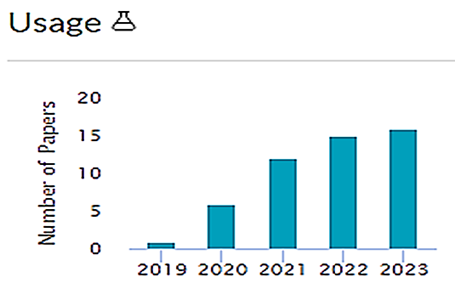

PubMedQA (closed-domain question answering given a PubMed abstract): the dataset contains biomedical research questions covering a wide range of topics, including diseases, treatments, genes, and proteins. PubMedQA is one of the MultiMedQA datasets (a benchmark for medical question answering). It consists of 1k expert-labeled, 61.2k unlabeled, and 211.3k artificially generated QA instances, each with a yes/no/maybe answer and a long answer, given a question together with a PubMed abstract as context.

BioGPT, announced by Microsoft, can be used to analyze biomedical research literature in order to answer biomedical questions, and may be especially useful in helping researchers gain new insights.

BioGPT is a generative language model trained on millions of previously published biomedical research articles. It can therefore draw on this knowledge to perform tasks such as answering questions, extracting relevant data, and generating biomedical text.

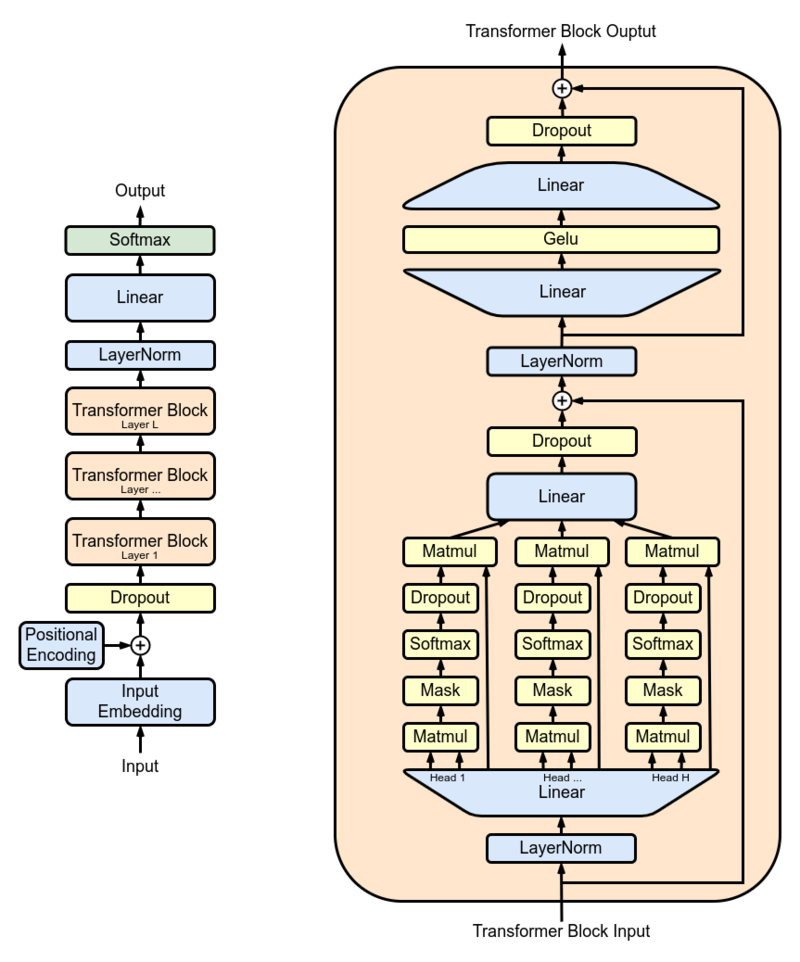

The researchers used the GPT-2 XL architecture as the backbone and trained it on 15 million PubMed abstracts before applying it to downstream tasks. GPT-2 XL is a Transformer decoder with 48 layers, a hidden size of 1600, and 25 attention heads, for 1.5B parameters in total.
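The 1.5B figure can be sanity-checked from the layer count and hidden size. The sketch below uses the common rule of thumb of about 12·d² weights per decoder block (4·d² for the attention projections and 8·d² for an MLP with 4× expansion). It ignores embeddings, biases, and layer norms, so it is an approximation rather than an exact count.

```python
def approx_decoder_params(num_layers: int, hidden_size: int) -> int:
    """Approximate weight count of the Transformer blocks only.

    Per block: 4*d^2 for the Q, K, V and output projections, plus 8*d^2 for
    an MLP that expands d -> 4d -> d. Embeddings, biases and layer norms are
    deliberately left out.
    """
    return 12 * num_layers * hidden_size ** 2


# Architecture numbers quoted in the text for GPT-2 XL.
NUM_LAYERS = 48
HIDDEN_SIZE = 1600
NUM_HEADS = 25

head_dim = HIDDEN_SIZE // NUM_HEADS
block_params = approx_decoder_params(NUM_LAYERS, HIDDEN_SIZE)

print(f"per-head dimension: {head_dim}")
print(f"approximate block parameters: {block_params:,}")
```

The blocks alone come to about 1.47B weights; the token and position embeddings account for most of the remainder up to 1.5B.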

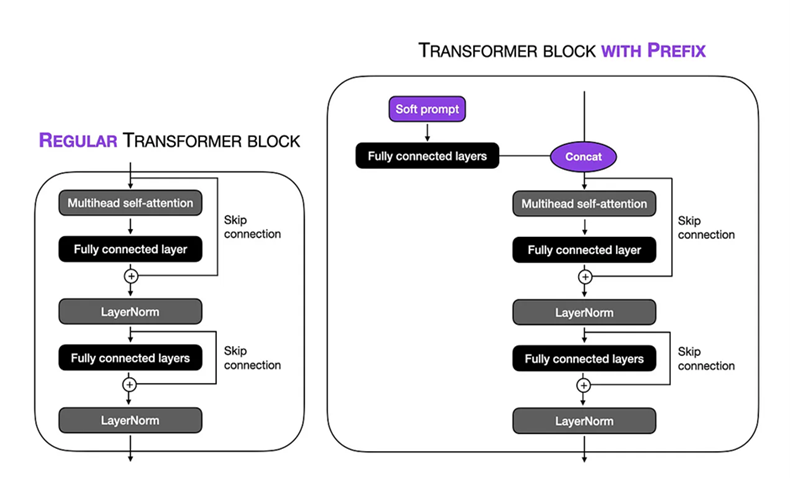

Fine-Tuning Setup: we applied prefix tuning, a soft-prompt technique, to the BioGPT-Large 1.5B model. The virtual-token length was set to 10, giving the model a short learned prefix that conditions it on the task. Freezing the rest of the model limited the number of trainable parameters to about 1.5 million. We trained on a TPU VM v3-8 with a batch size of 8, num_warmup_steps = 1000, gradient_accumulation_steps = 4, and weight_decay = 0.1. Training ran for 24 steps, each processing 1024 tokens. We used the Adam optimizer with a peak learning rate of 1×10⁻⁵ over 3 epochs.
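The trainable-parameter count follows from the prefix length and the model shape. This is a rough check, assuming the prefix stores one key vector and one value vector per layer for each virtual token, with no reparameterization MLP (the README does not state which variant was used). The layer count and hidden size are the GPT-2 XL values quoted earlier.

```python
def prefix_tuning_params(num_virtual_tokens: int, num_layers: int, hidden_size: int) -> int:
    """Trainable weights when each virtual token has a key and a value per layer."""
    return num_virtual_tokens * num_layers * 2 * hidden_size


NUM_VIRTUAL_TOKENS = 10        # from the fine-tuning setup
NUM_LAYERS = 48                # GPT-2 XL layer count quoted in the text
HIDDEN_SIZE = 1600             # GPT-2 XL hidden size quoted in the text
TOTAL_PARAMS = 1_500_000_000   # "1.5B" as stated; a rounded figure

trainable = prefix_tuning_params(NUM_VIRTUAL_TOKENS, NUM_LAYERS, HIDDEN_SIZE)
fraction = trainable / TOTAL_PARAMS

print(f"trainable prefix parameters: {trainable:,}")
print(f"share of the full model: {fraction:.4%}")
```

With these numbers the prefix holds 1,536,000 weights, in line with the roughly 1.5 million trainable parameters reported, which is about 0.1% of the full model.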

The fine-tuned BioGPT model is hosted on Hugging Face; we use the following Inference API endpoint to serve the model in our mobile app.

API_URL = "https://api-inference.huggingface.co/models/Amira2045/BioGPT-Finetuned"

headers = {"Authorization": "Bearer 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"}
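A minimal sketch of how a client might call this endpoint, using only the Python standard library. The helper names, the `HF_API_TOKEN` environment variable, the `max_new_tokens` default, and the sample question are assumptions for illustration. The request body follows the `inputs`/`parameters` JSON shape commonly used for text-generation models on the Hugging Face Inference API, and the response format is not specified in this README.

```python
import json
import os
import urllib.request

API_URL = "https://api-inference.huggingface.co/models/Amira2045/BioGPT-Finetuned"


def build_headers(token: str) -> dict:
    """Bearer-token headers in the same shape as the snippet above."""
    return {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}


def build_payload(question: str, max_new_tokens: int = 64) -> bytes:
    """Encode the question as a JSON request body (assumed text-generation format)."""
    body = {"inputs": question, "parameters": {"max_new_tokens": max_new_tokens}}
    return json.dumps(body).encode("utf-8")


def query(question: str, token: str, timeout: float = 30.0):
    """POST the question to the hosted model and return the decoded JSON reply."""
    request = urllib.request.Request(
        API_URL,
        data=build_payload(question),
        headers=build_headers(token),
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # HF_API_TOKEN is a hypothetical variable name; the request is only sent if it is set.
    token = os.environ.get("HF_API_TOKEN")
    if token:
        print(query("Does regular exercise lower blood pressure?", token))
    else:
        print("Set HF_API_TOKEN to send a request.")
```

Reading the token from the environment keeps it out of the app's source; the mobile client would make the equivalent HTTP request in its own language.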
