HiOllama

1.0.0

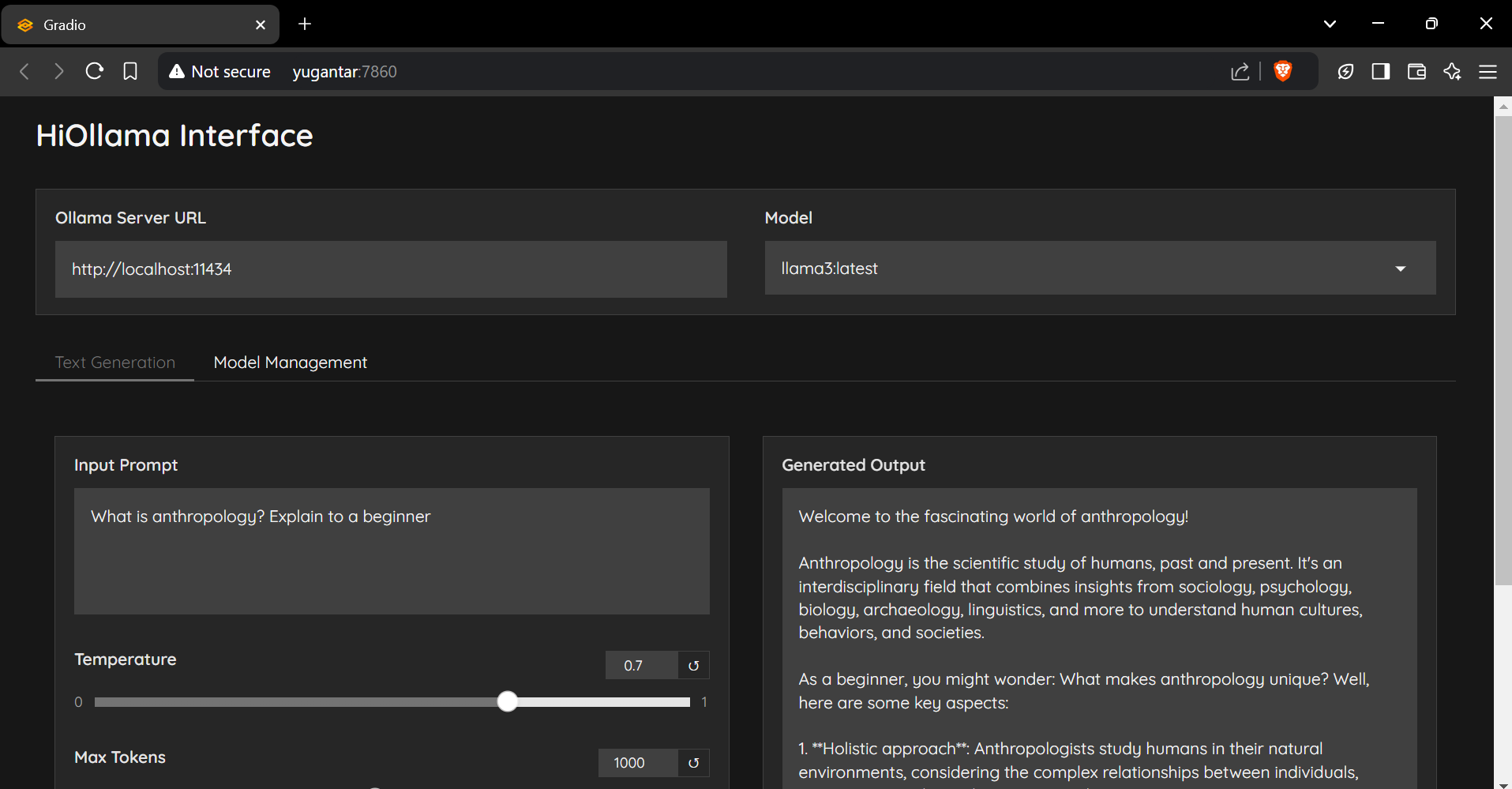

A sleek, user-friendly interface for interacting with Ollama models, built with Python and Gradio.

git clone https://github.com/smaranjitghose/HiOllama.git

cd HiOllama

# Windows

python -m venv env

.\env\Scripts\activate

# Linux/Mac

python3 -m venv env

source env/bin/activate

Install the dependencies:

pip install -r requirements.txt

Install Ollama:

# Linux/Mac

curl -fsSL https://ollama.ai/install.sh | sh

# For Windows, install WSL2 first, then run the above command

Start the Ollama service:

ollama serve

Run the application:

python main.py

Then open http://localhost:7860 in your web browser.
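Before opening the interface, it can help to confirm that the Ollama server is reachable and that the configured model has been pulled. The script below is a minimal standalone sketch, not part of HiOllama. It queries Ollama's `GET /api/tags` endpoint, which lists locally available models, and it assumes the default URL and model name from main.py. The helper names (`normalize`, `list_local_models`, `is_model_available`, `check_setup`) are hypothetical.

```python
import json
import urllib.error
import urllib.request
from typing import List, Optional

# Assumption: these mirror the defaults in HiOllama's main.py.
DEFAULT_OLLAMA_URL = "http://localhost:11434"
DEFAULT_MODEL_NAME = "llama3"


def normalize(model: str) -> str:
    """Ollama resolves a model name without a tag to '<name>:latest'."""
    return model if ":" in model else f"{model}:latest"


def list_local_models(base_url: str = DEFAULT_OLLAMA_URL,
                      timeout: float = 3.0) -> Optional[List[str]]:
    """Return names of locally pulled models, or None if the request fails."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        # Covers refused connections, timeouts, HTTP errors and invalid JSON.
        return None
    return [m["name"] for m in payload.get("models", [])]


def is_model_available(model: str, names: List[str]) -> bool:
    """True if `model` matches one of `names`, treating a missing tag as ':latest'."""
    return normalize(model) in {normalize(n) for n in names}


def check_setup(base_url: str = DEFAULT_OLLAMA_URL,
                model: str = DEFAULT_MODEL_NAME) -> str:
    """Return 'ok', or a hint naming the matching troubleshooting step."""
    names = list_local_models(base_url)
    if names is None:
        return "connection error: start the server with `ollama serve`"
    if not is_model_available(model, names):
        return f"model not found: run `ollama pull {model}`"
    return "ok"


if __name__ == "__main__":
    print(check_setup())
```

Run as a script, it prints `ok` when both checks pass. Otherwise it prints a hint that corresponds to the "Connection error" or "Model not found" troubleshooting entries.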

Default settings can be changed in main.py:

DEFAULT_OLLAMA_URL = "http://localhost:11434"

DEFAULT_MODEL_NAME = "llama3"

Troubleshooting

Connection error

Make sure the Ollama service is running (ollama serve).

Model not found

Pull the model first: ollama pull model_name

Check which models are available locally: ollama list

Port conflict

Change the port in main.py:

app.launch(server_port=7860)  # Change to another port

Contributing

Contributions are welcome! Feel free to submit a pull request.

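Create a feature branch: git checkout -b feature/AmazingFeature

Commit your changes: git commit -m 'Add some AmazingFeature'

Push the branch: git push origin feature/AmazingFeature

License

This project is licensed under the MIT License. See the LICENSE file for details.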

Made with ❤️ by Smaranjit Ghose