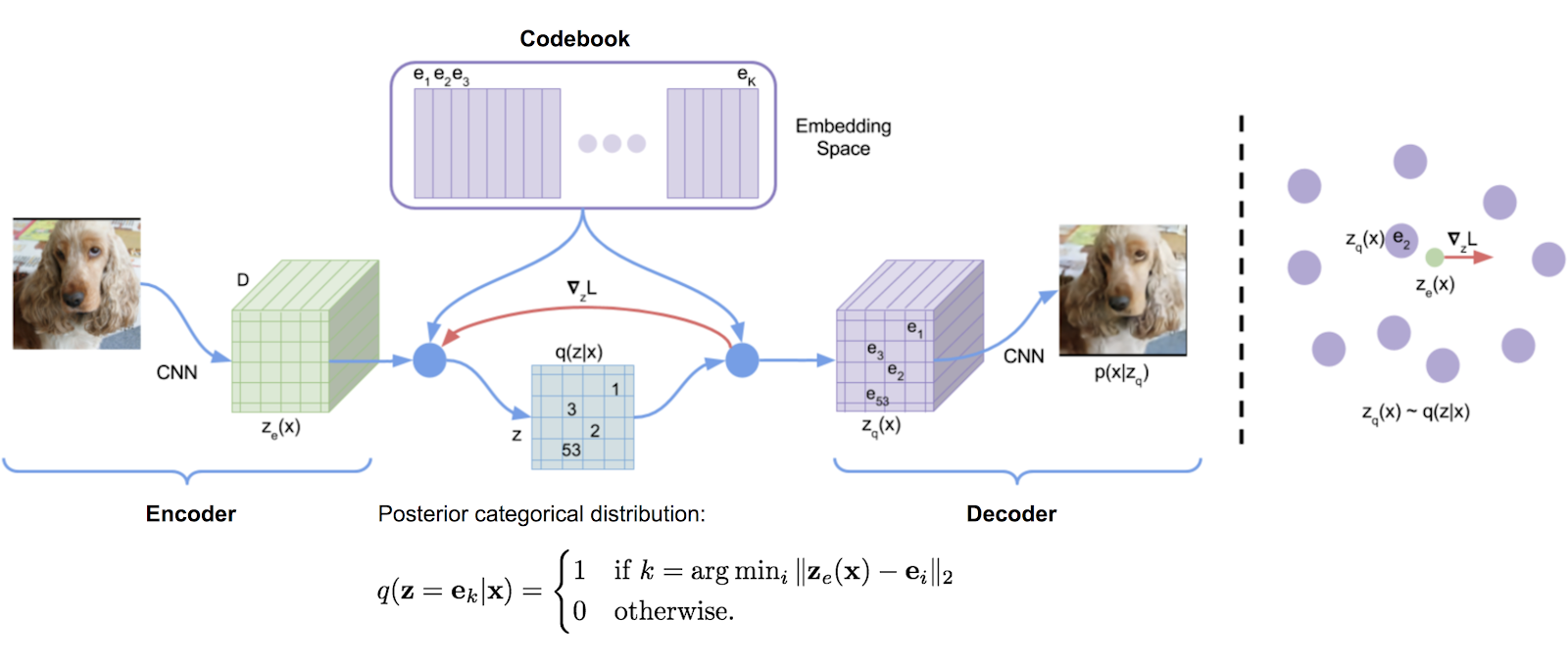

矢量量化库最初从DeepMind的TensorFlow实现中转录,并将其方便地制成包装。它使用指数移动平均值来更新字典。

VQ已被DeepMind和Openai成功使用,用于高质量的图像(VQ-VAE-2)和音乐(Jukebox)。

$ pip install vector-quantize-pytorch import torch

from vector_quantize_pytorch import VectorQuantize

vq = VectorQuantize (

dim = 256 ,

codebook_size = 512 , # codebook size

decay = 0.8 , # the exponential moving average decay, lower means the dictionary will change faster

commitment_weight = 1. # the weight on the commitment loss

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = vq ( x ) # (1, 1024, 256), (1, 1024), (1) 本文建议使用多个矢量量化器递归量化波形的残差。您可以将其与ResidualVQ类和一个额外的初始化参数一起使用。

import torch

from vector_quantize_pytorch import ResidualVQ

residual_vq = ResidualVQ (

dim = 256 ,

num_quantizers = 8 , # specify number of quantizers

codebook_size = 1024 , # codebook size

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = residual_vq ( x )

print ( quantized . shape , indices . shape , commit_loss . shape )

# (1, 1024, 256), (1, 1024, 8), (1, 8)

# if you need all the codes across the quantization layers, just pass return_all_codes = True

quantized , indices , commit_loss , all_codes = residual_vq ( x , return_all_codes = True )

# (8, 1, 1024, 256)此外,本文使用残差VQ来构建RQ-VAE,以生成具有更多压缩代码的高分辨率图像。

他们进行了两个修改。首先是在所有量词中共享代码簿。第二个是随机采样代码,而不是总是采取最接近的匹配。您可以将这两个功能与两个额外的关键字参数一起使用。

import torch

from vector_quantize_pytorch import ResidualVQ

residual_vq = ResidualVQ (

dim = 256 ,

num_quantizers = 8 ,

codebook_size = 1024 ,

stochastic_sample_codes = True ,

sample_codebook_temp = 0.1 , # temperature for stochastically sampling codes, 0 would be equivalent to non-stochastic

shared_codebook = True # whether to share the codebooks for all quantizers or not

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = residual_vq ( x )

# (1, 1024, 256), (1, 1024, 8), (1, 8)最近的一篇论文进一步提议在特征维度组上进行残留VQ,在使用较少的代码簿时显示出与Encodec的等效结果。您可以通过导入GroupedResidualVQ来使用它

import torch

from vector_quantize_pytorch import GroupedResidualVQ

residual_vq = GroupedResidualVQ (

dim = 256 ,

num_quantizers = 8 , # specify number of quantizers

groups = 2 ,

codebook_size = 1024 , # codebook size

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = residual_vq ( x )

# (1, 1024, 256), (2, 1, 1024, 8), (2, 1, 8) Soundstream论文提出,应该由第一批的Kmeans Centroid初始化代码书。您可以ResidualVQ一个标志kmeans_init = True VectorQuantize打开此功能

import torch

from vector_quantize_pytorch import ResidualVQ

residual_vq = ResidualVQ (

dim = 256 ,

codebook_size = 256 ,

num_quantizers = 4 ,

kmeans_init = True , # set to True

kmeans_iters = 10 # number of kmeans iterations to calculate the centroids for the codebook on init

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = residual_vq ( x )

# (1, 1024, 256), (1, 1024, 4), (1, 4) 传统上,VQ-VAE是通过直通估算器(Ste)进行训练的。在向后传递期间,梯度围绕VQ层而不是通过它流动。旋转技巧纸提出了通过VQ层转换梯度的,因此将输入矢量和量化输出之间的相对角度和幅度编码为梯度。您可以在VectorQuantize类中使用rotation_trick=True/False启用或禁用此功能。

from vector_quantize_pytorch import VectorQuantize

vq_layer = VectorQuantize (

dim = 256 ,

codebook_size = 256 ,

rotation_trick = True , # Set to False to use the STE gradient estimator or True to use the rotation trick.

)该存储库将包含一些从各种论文到战斗“死”代码书条目的技术,这是使用向量量化器时常见的问题。

改进的VQGAN论文建议将代码手册保持在较低的维度中。量化后,在投影回到高维之前,将对编码器值投射下来。您可以使用codebook_dim Hypergarameter设置此设置。

import torch

from vector_quantize_pytorch import VectorQuantize

vq = VectorQuantize (

dim = 256 ,

codebook_size = 256 ,

codebook_dim = 16 # paper proposes setting this to 32 or as low as 8 to increase codebook usage

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = vq ( x )

# (1, 1024, 256), (1, 1024), (1,)改进的VQGAN纸还建议L2标准化代码和编码向量,该代码归结为使用余弦相似性。他们声称在球体上执行向量会导致代码使用和下游重建的改进。您可以通过设置use_cosine_sim = True来打开此操作

import torch

from vector_quantize_pytorch import VectorQuantize

vq = VectorQuantize (

dim = 256 ,

codebook_size = 256 ,

use_cosine_sim = True # set this to True

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = vq ( x )

# (1, 1024, 256), (1, 1024), (1,)最后,Soundstream Paper具有一个方案,它们可以用当前批处理的随机选择向量替换具有低于一定阈值的代码。您可以使用threshold_ema_dead_code关键字设置此阈值。

import torch

from vector_quantize_pytorch import VectorQuantize

vq = VectorQuantize (

dim = 256 ,

codebook_size = 512 ,

threshold_ema_dead_code = 2 # should actively replace any codes that have an exponential moving average cluster size less than 2

)

x = torch . randn ( 1 , 1024 , 256 )

quantized , indices , commit_loss = vq ( x )

# (1, 1024, 256), (1, 1024), (1,)VQ-VAE / VQ-GAN正在迅速越来越受欢迎。最近的一篇论文提出,在图像上使用矢量量化时,执行代码本为正交导致离散代码的翻译等效性,从而导致下游文本的大量改进到图像生成任务。

您可以通过简单地将orthogonal_reg_weight设置为大于0来使用此功能,在这种情况下,正交正则化将添加到该模块输出的辅助损耗中。

import torch

from vector_quantize_pytorch import VectorQuantize

vq = VectorQuantize (

dim = 256 ,

codebook_size = 256 ,

accept_image_fmap = True , # set this true to be able to pass in an image feature map

orthogonal_reg_weight = 10 , # in paper, they recommended a value of 10

orthogonal_reg_max_codes = 128 , # this would randomly sample from the codebook for the orthogonal regularization loss, for limiting memory usage

orthogonal_reg_active_codes_only = False # set this to True if you have a very large codebook, and would only like to enforce the loss on the activated codes per batch

)

img_fmap = torch . randn ( 1 , 256 , 32 , 32 )

quantized , indices , loss = vq ( img_fmap ) # (1, 256, 32, 32), (1, 32, 32), (1,)

# loss now contains the orthogonal regularization loss with the weight as assigned有许多论文提出了具有多头方法的离散潜在表示的变体(每个功能多个代码)。我决定提供一种变体,其中使用相同的代码簿在输入head数时量化量化。

您还可以使用NWT纸的更验证的方法(模式)

import torch

from vector_quantize_pytorch import VectorQuantize

vq = VectorQuantize (

dim = 256 ,

codebook_dim = 32 , # a number of papers have shown smaller codebook dimension to be acceptable

heads = 8 , # number of heads to vector quantize, codebook shared across all heads

separate_codebook_per_head = True , # whether to have a separate codebook per head. False would mean 1 shared codebook

codebook_size = 8196 ,

accept_image_fmap = True

)

img_fmap = torch . randn ( 1 , 256 , 32 , 32 )

quantized , indices , loss = vq ( img_fmap )

# (1, 256, 32, 32), (1, 32, 32, 8), (1,)本文首先建议使用随机投影量化量化器进行掩盖语音建模,其中信号用随机初始化的矩阵投影,然后与随机的初始化代码簿进行匹配。因此,一个人不需要学习量化器。 Google的通用语音模型使用了这项技术来实现SOTA进行语音到文本建模。

USM进一步建议使用多个代码簿,以及具有多柔软目标的蒙版语音建模。您可以通过将num_codebooks设置为大于1来轻松执行此操作

import torch

from vector_quantize_pytorch import RandomProjectionQuantizer

quantizer = RandomProjectionQuantizer (

dim = 512 , # input dimensions

num_codebooks = 16 , # in USM, they used up to 16 for 5% gain

codebook_dim = 256 , # codebook dimension

codebook_size = 1024 # codebook size

)

x = torch . randn ( 1 , 1024 , 512 )

indices = quantizer ( x )

# (1, 1024, 16)该存储库还应在多进程设置中自动同步代码簿。如果不是某种程度上,请打开一个问题。您可以通过设置sync_codebook = True | False

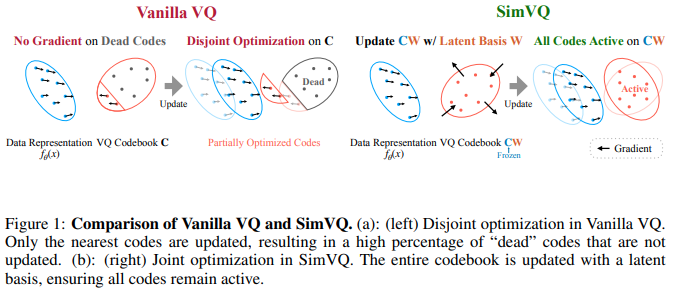

新的ICLR 2025论文提出了一个方案,该方案被冻结了,并且代码是通过线性投影隐式生成的。作者声称此设置会导致较少的代码簿崩溃,并且更容易收敛。我发现,当与Fifty等人的旋转技巧配对时,它的性能甚至更好,并将线性投影扩展到一个小层MLP。您可以尝试进行试验

更新:听到不同的结果

import torch

from vector_quantize_pytorch import SimVQ

sim_vq = SimVQ (

dim = 512 ,

codebook_size = 1024 ,

rotation_trick = True # use rotation trick from Fifty et al.

)

x = torch . randn ( 1 , 1024 , 512 )

quantized , indices , commit_loss = sim_vq ( x )

assert x . shape == quantized . shape

assert torch . allclose ( quantized , sim_vq . indices_to_codes ( indices ), atol = 1e-6 )对于残留的味道,只需进口ResidualSimVQ即

import torch

from vector_quantize_pytorch import ResidualSimVQ

residual_sim_vq = ResidualSimVQ (

dim = 512 ,

num_quantizers = 4 ,

codebook_size = 1024 ,

rotation_trick = True # use rotation trick from Fifty et al.

)

x = torch . randn ( 1 , 1024 , 512 )

quantized , indices , commit_loss = residual_sim_vq ( x )

assert x . shape == quantized . shape

assert torch . allclose ( quantized , residual_sim_vq . get_output_from_indices ( indices ), atol = 1e-6 )

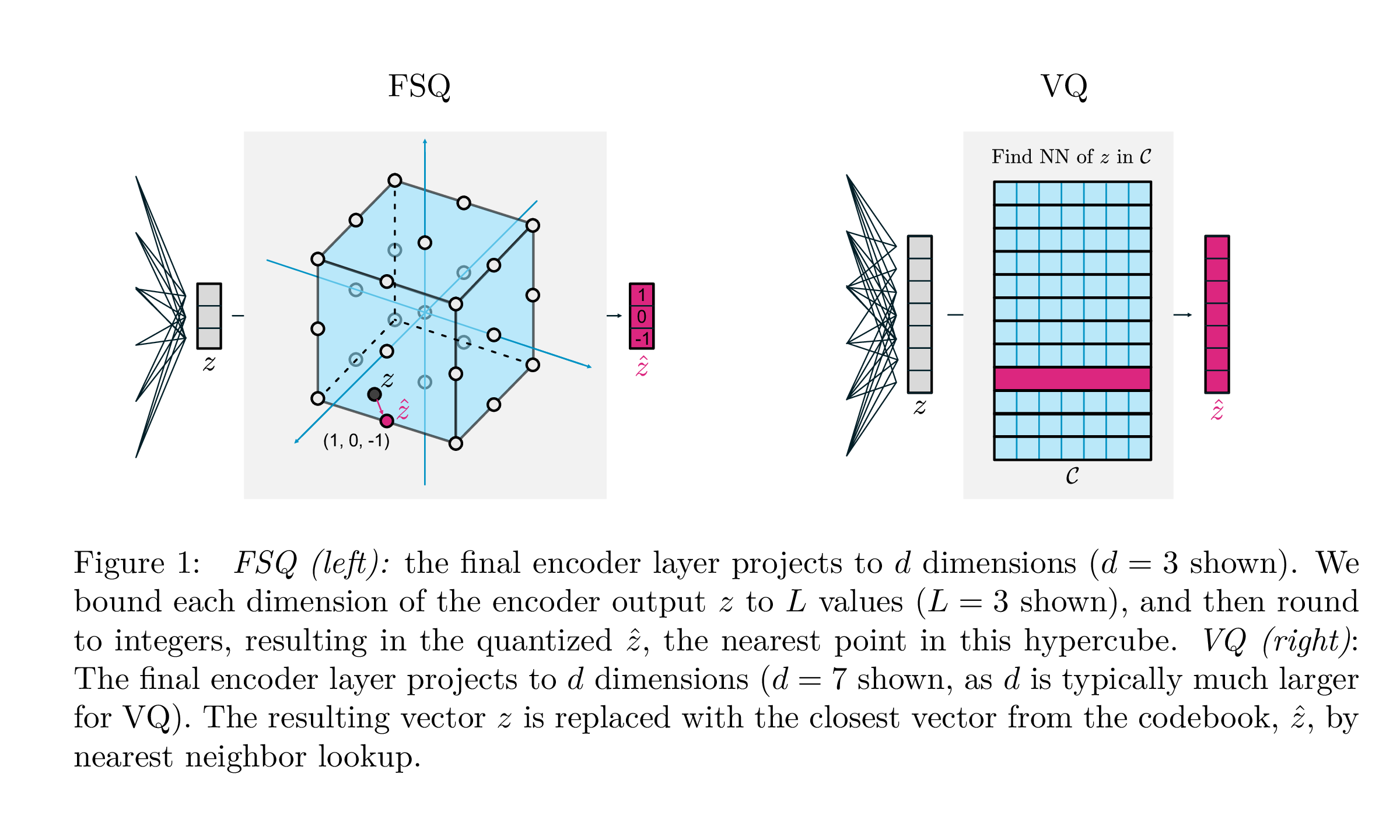

| VQ | FSQ | |

|---|---|---|

| 量化 | argmin_c || ZC || | 圆(f(z)) |

| 梯度 | 直接通过估计(Ste) | Ste |

| 辅助损失 | 承诺,代码书,熵损失,... | N/A。 |

| 技巧 | ema在代码簿上,代码簿拆分,预测,... | N/A。 |

| 参数 | 代码本 | N/A。 |

Google DeepMind的这项工作旨在极大地简化用于生成建模,消除承诺损失的需求,EMA更新代码书的需求以及解决CodeBook倒塌或利用不足的问题的方式。他们只需直接通过梯度将每个标量围成离散的水平。这些代码变成了HyperCube中的统一点。

感谢@sekstini在记录时间内对此实现进行移植!

import torch

from vector_quantize_pytorch import FSQ

quantizer = FSQ (

levels = [ 8 , 5 , 5 , 5 ]

)

x = torch . randn ( 1 , 1024 , 4 ) # 4 since there are 4 levels

xhat , indices = quantizer ( x )

# (1, 1024, 4), (1, 1024)

assert torch . all ( xhat == quantizer . indices_to_codes ( indices ))即兴剩余的FSQ,以尝试改进音频编码。

荣誉归@sekstini最初在这里构成这个想法

import torch

from vector_quantize_pytorch import ResidualFSQ

residual_fsq = ResidualFSQ (

dim = 256 ,

levels = [ 8 , 5 , 5 , 3 ],

num_quantizers = 8

)

x = torch . randn ( 1 , 1024 , 256 )

residual_fsq . eval ()

quantized , indices = residual_fsq ( x )

# (1, 1024, 256), (1, 1024, 8)

quantized_out = residual_fsq . get_output_from_indices ( indices )

# (1, 1024, 256)

assert torch . all ( quantized == quantized_out )

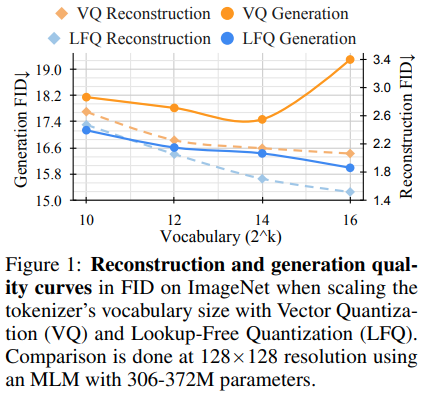

Magvit背后的研究团队发布了新的SOTA结果,用于生成视频建模。 V1和V2之间的核心变化包括一种新型的量化,无查找的量化(LFQ),该量化完全消除了代码簿并完全嵌入查找。

本文提出了使用独立二进制潜在的简单LFQ量化器。存在LFQ的其他实现。但是,该团队表明,具有LFQ的MAGVIT-V2在Imagenet基准上有了显着改善。 LFQ和2级FSQ之间的差异包括熵正规以及保持承诺损失。

在没有密码期的情况下开发更先进的LFQ量化方法可以彻底改变生成建模。

您可以简单地使用如下。将在magvit2 pytorch港口被狗食

import torch

from vector_quantize_pytorch import LFQ

# you can specify either dim or codebook_size

# if both specified, will be validated against each other

quantizer = LFQ (

codebook_size = 65536 , # codebook size, must be a power of 2

dim = 16 , # this is the input feature dimension, defaults to log2(codebook_size) if not defined

entropy_loss_weight = 0.1 , # how much weight to place on entropy loss

diversity_gamma = 1. # within entropy loss, how much weight to give to diversity of codes, taken from https://arxiv.org/abs/1911.05894

)

image_feats = torch . randn ( 1 , 16 , 32 , 32 )

quantized , indices , entropy_aux_loss = quantizer ( image_feats , inv_temperature = 100. ) # you may want to experiment with temperature

# (1, 16, 32, 32), (1, 32, 32), ()

assert ( quantized == quantizer . indices_to_codes ( indices )). all ()您还可以将视频功能传递为(batch, feat, time, height, width)或序列(batch, seq, feat)

import torch

from vector_quantize_pytorch import LFQ

quantizer = LFQ (

codebook_size = 65536 ,

dim = 16 ,

entropy_loss_weight = 0.1 ,

diversity_gamma = 1.

)

seq = torch . randn ( 1 , 32 , 16 )

quantized , * _ = quantizer ( seq )

assert seq . shape == quantized . shape

video_feats = torch . randn ( 1 , 16 , 10 , 32 , 32 )

quantized , * _ = quantizer ( video_feats )

assert video_feats . shape == quantized . shape或支持多个密码手册

import torch

from vector_quantize_pytorch import LFQ

quantizer = LFQ (

codebook_size = 4096 ,

dim = 16 ,

num_codebooks = 4 # 4 codebooks, total codebook dimension is log2(4096) * 4

)

image_feats = torch . randn ( 1 , 16 , 32 , 32 )

quantized , indices , entropy_aux_loss = quantizer ( image_feats )

# (1, 16, 32, 32), (1, 32, 32, 4), ()

assert image_feats . shape == quantized . shape

assert ( quantized == quantizer . indices_to_codes ( indices )). all ()简化的残留LFQ,以查看是否可以改善音频压缩。

import torch

from vector_quantize_pytorch import ResidualLFQ

residual_lfq = ResidualLFQ (

dim = 256 ,

codebook_size = 256 ,

num_quantizers = 8

)

x = torch . randn ( 1 , 1024 , 256 )

residual_lfq . eval ()

quantized , indices , commit_loss = residual_lfq ( x )

# (1, 1024, 256), (1, 1024, 8), (8)

quantized_out = residual_lfq . get_output_from_indices ( indices )

# (1, 1024, 256)

assert torch . all ( quantized == quantized_out )分离对于表示学习至关重要,因为它可以促进可解释性,概括,改善学习和鲁棒性。它符合捕获数据的有意义和独立的特征的目标,从而促进在各种应用程序中更有效地利用学习的表示形式。为了获得更好的分解,面临的挑战是在没有明确的地面真相信息的情况下删除数据集中的基本变化。这项工作引入了旨在在有组织的潜在空间内编码和解码的关键感应偏置。该策略结合了通过为每个维度使用单个可学习的标量代码簿分配离散的代码向量来包括离散潜在空间。这种方法使他们的模型能够有效地超越强大的先验方法。

请注意,他们必须使用很高的重量衰减来进行本文的结果。

import torch

from vector_quantize_pytorch import LatentQuantize

# you can specify either dim or codebook_size

# if both specified, will be validated against each other

quantizer = LatentQuantize (

levels = [ 5 , 5 , 8 ], # number of levels per codebook dimension

dim = 16 , # input dim

commitment_loss_weight = 0.1 ,

quantization_loss_weight = 0.1 ,

)

image_feats = torch . randn ( 1 , 16 , 32 , 32 )

quantized , indices , loss = quantizer ( image_feats )

# (1, 16, 32, 32), (1, 32, 32), ()

assert image_feats . shape == quantized . shape

assert ( quantized == quantizer . indices_to_codes ( indices )). all ()您还可以将视频功能传递为(batch, feat, time, height, width)或序列(batch, seq, feat)

import torch

from vector_quantize_pytorch import LatentQuantize

quantizer = LatentQuantize (

levels = [ 5 , 5 , 8 ],

dim = 16 ,

commitment_loss_weight = 0.1 ,

quantization_loss_weight = 0.1 ,

)

seq = torch . randn ( 1 , 32 , 16 )

quantized , * _ = quantizer ( seq )

# (1, 32, 16)

video_feats = torch . randn ( 1 , 16 , 10 , 32 , 32 )

quantized , * _ = quantizer ( video_feats )

# (1, 16, 10, 32, 32)或支持多个密码手册

import torch

from vector_quantize_pytorch import LatentQuantize

model = LatentQuantize (

levels = [ 4 , 8 , 16 ],

dim = 9 ,

num_codebooks = 3

)

input_tensor = torch . randn ( 2 , 3 , dim )

output_tensor , indices , loss = model ( input_tensor )

# (2, 3, 9), (2, 3, 3), ()

assert output_tensor . shape == input_tensor . shape

assert indices . shape == ( 2 , 3 , num_codebooks )

assert loss . item () >= 0 @misc { oord2018neural ,

title = { Neural Discrete Representation Learning } ,

author = { Aaron van den Oord and Oriol Vinyals and Koray Kavukcuoglu } ,

year = { 2018 } ,

eprint = { 1711.00937 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.LG }

} @misc { zeghidour2021soundstream ,

title = { SoundStream: An End-to-End Neural Audio Codec } ,

author = { Neil Zeghidour and Alejandro Luebs and Ahmed Omran and Jan Skoglund and Marco Tagliasacchi } ,

year = { 2021 } ,

eprint = { 2107.03312 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.SD }

} @inproceedings { anonymous2022vectorquantized ,

title = { Vector-quantized Image Modeling with Improved {VQGAN} } ,

author = { Anonymous } ,

booktitle = { Submitted to The Tenth International Conference on Learning Representations } ,

year = { 2022 } ,

url = { https://openreview.net/forum?id=pfNyExj7z2 } ,

note = { under review }

} @inproceedings { lee2022autoregressive ,

title = { Autoregressive Image Generation using Residual Quantization } ,

author = { Lee, Doyup and Kim, Chiheon and Kim, Saehoon and Cho, Minsu and Han, Wook-Shin } ,

booktitle = { Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition } ,

pages = { 11523--11532 } ,

year = { 2022 }

} @article { Defossez2022HighFN ,

title = { High Fidelity Neural Audio Compression } ,

author = { Alexandre D'efossez and Jade Copet and Gabriel Synnaeve and Yossi Adi } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2210.13438 }

} @inproceedings { Chiu2022SelfsupervisedLW ,

title = { Self-supervised Learning with Random-projection Quantizer for Speech Recognition } ,

author = { Chung-Cheng Chiu and James Qin and Yu Zhang and Jiahui Yu and Yonghui Wu } ,

booktitle = { International Conference on Machine Learning } ,

year = { 2022 }

} @inproceedings { Zhang2023GoogleUS ,

title = { Google USM: Scaling Automatic Speech Recognition Beyond 100 Languages } ,

author = { Yu Zhang and Wei Han and James Qin and Yongqiang Wang and Ankur Bapna and Zhehuai Chen and Nanxin Chen and Bo Li and Vera Axelrod and Gary Wang and Zhong Meng and Ke Hu and Andrew Rosenberg and Rohit Prabhavalkar and Daniel S. Park and Parisa Haghani and Jason Riesa and Ginger Perng and Hagen Soltau and Trevor Strohman and Bhuvana Ramabhadran and Tara N. Sainath and Pedro J. Moreno and Chung-Cheng Chiu and Johan Schalkwyk and Franccoise Beaufays and Yonghui Wu } ,

year = { 2023 }

} @inproceedings { Shen2023NaturalSpeech2L ,

title = { NaturalSpeech 2: Latent Diffusion Models are Natural and Zero-Shot Speech and Singing Synthesizers } ,

author = { Kai Shen and Zeqian Ju and Xu Tan and Yanqing Liu and Yichong Leng and Lei He and Tao Qin and Sheng Zhao and Jiang Bian } ,

year = { 2023 }

} @inproceedings { Yang2023HiFiCodecGV ,

title = { HiFi-Codec: Group-residual Vector quantization for High Fidelity Audio Codec } ,

author = { Dongchao Yang and Songxiang Liu and Rongjie Huang and Jinchuan Tian and Chao Weng and Yuexian Zou } ,

year = { 2023 }

} @inproceedings { huh2023improvedvqste ,

title = { Straightening Out the Straight-Through Estimator: Overcoming Optimization Challenges in Vector Quantized Networks } ,

author = { Huh, Minyoung and Cheung, Brian and Agrawal, Pulkit and Isola, Phillip } ,

booktitle = { International Conference on Machine Learning } ,

year = { 2023 } ,

organization = { PMLR }

} @inproceedings { rogozhnikov2022einops ,

title = { Einops: Clear and Reliable Tensor Manipulations with Einstein-like Notation } ,

author = { Alex Rogozhnikov } ,

booktitle = { International Conference on Learning Representations } ,

year = { 2022 } ,

url = { https://openreview.net/forum?id=oapKSVM2bcj }

} @misc { shin2021translationequivariant ,

title = { Translation-equivariant Image Quantizer for Bi-directional Image-Text Generation } ,

author = { Woncheol Shin and Gyubok Lee and Jiyoung Lee and Joonseok Lee and Edward Choi } ,

year = { 2021 } ,

eprint = { 2112.00384 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

} @misc { mentzer2023finite ,

title = { Finite Scalar Quantization: VQ-VAE Made Simple } ,

author = { Fabian Mentzer and David Minnen and Eirikur Agustsson and Michael Tschannen } ,

year = { 2023 } ,

eprint = { 2309.15505 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

} @misc { yu2023language ,

title = { Language Model Beats Diffusion -- Tokenizer is Key to Visual Generation } ,

author = { Lijun Yu and José Lezama and Nitesh B. Gundavarapu and Luca Versari and Kihyuk Sohn and David Minnen and Yong Cheng and Agrim Gupta and Xiuye Gu and Alexander G. Hauptmann and Boqing Gong and Ming-Hsuan Yang and Irfan Essa and David A. Ross and Lu Jiang } ,

year = { 2023 } ,

eprint = { 2310.05737 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

} @inproceedings { Zhao2024ImageAV ,

title = { Image and Video Tokenization with Binary Spherical Quantization } ,

author = { Yue Zhao and Yuanjun Xiong and Philipp Krahenbuhl } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:270380237 }

} @misc { hsu2023disentanglement ,

title = { Disentanglement via Latent Quantization } ,

author = { Kyle Hsu and Will Dorrell and James C. R. Whittington and Jiajun Wu and Chelsea Finn } ,

year = { 2023 } ,

eprint = { 2305.18378 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.LG }

} @inproceedings { Irie2023SelfOrganisingND ,

title = { Self-Organising Neural Discrete Representation Learning `a la Kohonen } ,

author = { Kazuki Irie and R'obert Csord'as and J{"u}rgen Schmidhuber } ,

year = { 2023 } ,

url = { https://api.semanticscholar.org/CorpusID:256901024 }

} @article { Huijben2024ResidualQW ,

title = { Residual Quantization with Implicit Neural Codebooks } ,

author = { Iris Huijben and Matthijs Douze and Matthew Muckley and Ruud van Sloun and Jakob Verbeek } ,

journal = { ArXiv } ,

year = { 2024 } ,

volume = { abs/2401.14732 } ,

url = { https://api.semanticscholar.org/CorpusID:267301189 }

} @article { Fifty2024Restructuring ,

title = { Restructuring Vector Quantization with the Rotation Trick } ,

author = { Christopher Fifty, Ronald G. Junkins, Dennis Duan, Aniketh Iyengar, Jerry W. Liu, Ehsan Amid, Sebastian Thrun, Christopher Ré } ,

journal = { ArXiv } ,

year = { 2024 } ,

volume = { abs/2410.06424 } ,

url = { https://api.semanticscholar.org/CorpusID:273229218 }

} @inproceedings { Zhu2024AddressingRC ,

title = { Addressing Representation Collapse in Vector Quantized Models with One Linear Layer } ,

author = { Yongxin Zhu and Bocheng Li and Yifei Xin and Linli Xu } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:273812459 }

}