Still frustrated by how slowly big models process long texts? Don't worry! Tsinghua University has played a trump card: APB, a sequence-parallel inference framework that bolts a "turbocharged" engine directly onto big models. In actual tests on ultra-long text, this piece of "black tech" runs up to about 10 times faster than Flash Attention. That's right, you heard correctly: 10 times!

With the popularity of big models such as ChatGPT, AI's "reading" ability has grown as well, and processing long articles of tens of thousands of words is no longer a problem. Faced with truly massive inputs, however, the "brain" of a traditional big model starts to lag. The Transformer architecture is powerful, but its core attention mechanism works like a "super scanner": the longer the text, the more the scanning range balloons, growing with the square of the text length, and the slower the model gets.
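To make the scaling concrete, here is a minimal arithmetic sketch. It assumes plain full attention, where every query token is scored against every key token; the function name `attention_pairs` is made up for this illustration.

```python
def attention_pairs(seq_len: int) -> int:
    """Number of query-key score entries in full (non-causal) attention."""
    return seq_len * seq_len


for n in (8_000, 32_000, 128_000):
    print(f"{n:>7} tokens -> {attention_pairs(n):>17,} score entries")

# Growing the input 16x (8K -> 128K tokens) multiplies the attention work by 16**2.
ratio = attention_pairs(128_000) // attention_pairs(8_000)
print("work ratio, 128K vs 8K:", ratio)
```

The point of the sketch is only the shape of the curve: doubling the text length quadruples the attention work.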

To solve this bottleneck, researchers from Tsinghua University teamed up with several research institutions and technology giants, took a different approach, and launched the APB framework. The core idea of the framework is a clever combination of sequence parallelism and sparse attention.

Simply put, the APB framework works like an efficient, collaborative team. It splits a long text into small chunks and assigns them to multiple GPU "team members" for parallel processing. Even better, APB equips each "team member" with two skills, local KV cache compression and streamlined communication, so that they can share key information efficiently while handling their own chunks and jointly resolve the complex semantic dependencies in long texts.

More surprising still, the APB framework does not buy speed at the expense of task performance. On the contrary, in tests on ultra-long 128K texts, APB not only ran far faster but also outperformed traditional Flash Attention on task performance. Even Star Attention, which Nvidia has promoted heavily, was beaten by APB, which is 1.6 times faster, making APB an "all-round ace".

The most direct application of this breakthrough is a sharp reduction in time to first token when a large model handles long-text requests. This means that in the future, when users throw sprawling, lengthy instructions at it, a big model equipped with the APB framework can understand them almost instantly, respond within seconds, and say goodbye to the long "loading..." wait.

So how does the APB framework achieve such a seemingly impossible speed-up?

It turns out that the APB framework zeroes in on the main pain point of long-text processing: the amount of computation. The computation required by the traditional attention mechanism is proportional to the square of the text length, which makes long text a computational "black hole". To break through this bottleneck, the APB framework makes two "magic moves":

The first move: increase parallelism, because "many hands make light work"

The APB framework takes full advantage of distributed computing and spreads the computation across multiple GPUs, like a team working together, so efficiency rises naturally. Sequence parallelism in particular scales extremely well: it is not constrained by the model structure and can cope with text however long it is.
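The splitting step behind sequence parallelism can be sketched in a few lines. This is a toy illustration only: the helper `split_sequence` is a hypothetical name, and the "GPUs" are just list slots rather than real devices.

```python
def split_sequence(tokens, num_gpus):
    """Split tokens into num_gpus contiguous chunks of near-equal size."""
    base, extra = divmod(len(tokens), num_gpus)
    chunks, start = [], 0
    for rank in range(num_gpus):
        # The first `extra` ranks take one additional token each.
        size = base + (1 if rank < extra else 0)
        chunks.append(tokens[start:start + size])
        start += size
    return chunks


chunks = split_sequence(list(range(10)), num_gpus=4)
for rank, chunk in enumerate(chunks):
    print(f"GPU {rank}: {chunk}")
```

Each rank then runs the model on its own chunk, which is why adding GPUs lets the same approach stretch to longer inputs.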

The second move: cut wasted computation, so that effort goes only where it counts

The APB framework introduces a sparse attention mechanism that computes attention selectively instead of treating every token alike. Like a sharp-eyed expert, it focuses only on the key information in the text and ignores the irrelevant parts, which greatly reduces the amount of computation.
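As a rough illustration of why sparsity saves work, the sketch below counts score entries for a dense causal mask and for a simple sparse pattern. The pattern used here (a few leading "anchor" tokens plus a local causal window) is an assumption chosen for illustration, not APB's actual mask, and all names are hypothetical.

```python
def visible_keys(q, anchor, window):
    """Key positions query q may attend to: leading anchor tokens plus a causal local window."""
    keys = set(range(min(anchor, q + 1)))              # anchor tokens at or before q
    keys.update(range(max(0, q - window + 1), q + 1))  # the last `window` tokens up to q
    return keys


def sparse_cost(n, anchor, window):
    """Total score entries computed under the toy sparse pattern."""
    return sum(len(visible_keys(q, anchor, window)) for q in range(n))


def dense_causal_cost(n):
    """Total score entries under a full causal mask."""
    return n * (n + 1) // 2


n = 1024
print("dense :", dense_causal_cost(n))
print("sparse:", sparse_cost(n, anchor=16, window=64))
```

With a fixed anchor and window, the sparse count grows roughly linearly in the text length, while the dense count grows quadratically.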

The two moves, "parallel" and "sparse", look simple, but there is a lot hidden beneath the surface. How can sparse attention be computed efficiently within a sequence-parallel framework? This is the real hard core of the APB framework.

In a sequence-parallel setting, each GPU holds only part of the text. Achieving "global awareness" under those conditions is like the blind men feeling the elephant, and the difficulty is easy to imagine. Previous methods such as Star Attention and APE either sacrificed performance or were limited in the scenarios they could handle, and neither solved the problem well.

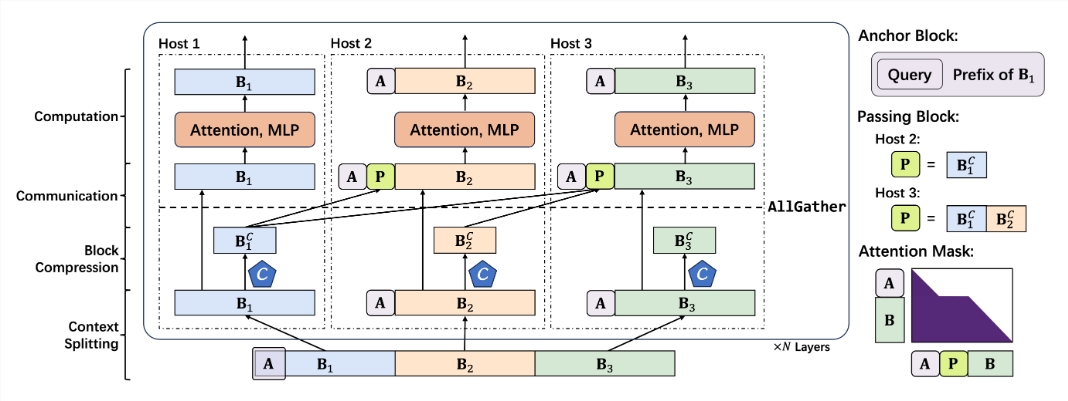

The APB framework neatly sidesteps the pitfall of large-scale communication and instead builds a low-communication sparse attention mechanism designed for sequence-parallel scenarios. Its core components include:

Smaller anchor block: The anchor block acts like a "navigator" that guides the attention mechanism toward key information. The APB framework shrinks the anchor block, making it lighter and more flexible and reducing computational overhead.

Novel passing block: The passing block is the "soul" of the APB framework and is what solves the problem of long-distance semantic dependencies. The key information processed by preceding GPUs is compressed, packaged, and passed on to subsequent GPUs, so that each "team member" can see the big picture and understand the context of the long text.

Query-aware context compression: The APB framework also introduces a query-aware mechanism that lets the context compressor "understand the question", so that it filters and retains the key information related to the query more accurately, further improving efficiency and accuracy.
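The idea of query-aware compression can be sketched as "score each cached entry against the query and keep the best few". The scoring rule below (a plain dot product between each key and a query vector) is a stand-in assumed for illustration; it is not the compressor APB actually uses, and `compress_kv` is a hypothetical helper.

```python
def compress_kv(keys, values, query, keep):
    """Keep the `keep` KV pairs whose keys score highest against the query, in original order."""
    scores = [sum(k_i * q_i for k_i, q_i in zip(k, query)) for k in keys]
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:keep]
    top.sort()  # restore positional order so the kept entries stay in sequence
    return [keys[i] for i in top], [values[i] for i in top]


keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
values = ["a", "b", "c", "d"]
kept_keys, kept_values = compress_kv(keys, values, query=[1.0, 0.0], keep=2)
print(kept_values)
```

Only the kept entries need to be sent to other GPUs, which is what keeps the communication volume small.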

Built on these signature techniques, the APB framework follows a smooth and well-organized inference process:

Context splitting: Distribute the long text evenly across the GPUs and prepend an anchor block, with the query embedded in it, to the start of each GPU's segment.

Context compression: Use the retaining heads introduced by Locret to compress the KV cache intelligently.

Efficient communication: Use the AllGather operator to pass the compressed KV cache to subsequent GPUs, where it forms the passing block.

Fast computation: Use a purpose-built Flash Attention kernel with an optimized attention mask to compute attention efficiently. Once the attention computation is finished, the passing block "retires" and takes no part in subsequent computation.
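The four steps above can be sketched as a single-process simulation of what each GPU ends up attending to. Everything here is an assumption made for illustration: the function name `apb_like_layout` is hypothetical, the compressor simply keeps the last `keep` tokens of each chunk instead of using retaining heads, the all-gather is simulated with a plain list, and rank 0 is given no separate anchor because its chunk already starts at the beginning of the text.

```python
def apb_like_layout(tokens, num_gpus, anchor_len, keep):
    """Return, per rank, the anchor, passing, and local blocks visible to that rank."""
    size = len(tokens) // num_gpus  # sketch assumes the length divides evenly
    chunks = [tokens[r * size:(r + 1) * size] for r in range(num_gpus)]
    anchor = tokens[:anchor_len]

    # Stand-in compressor: keep the last `keep` tokens of every chunk.
    compressed = [c[max(0, len(c) - keep):] for c in chunks]

    # Simulated all-gather: every rank can see every compressed block.
    gathered = list(compressed)

    layout = []
    for r in range(num_gpus):
        # The passing block holds compressed context from preceding ranks only.
        passing = [t for block in gathered[:r] for t in block]
        layout.append({
            "anchor": [] if r == 0 else list(anchor),
            "passing": passing,
            "local": chunks[r],
        })
    return layout


layout = apb_like_layout(list(range(16)), num_gpus=4, anchor_len=2, keep=1)
for rank, blocks in enumerate(layout):
    print(rank, blocks)
```

In this toy layout each rank attends to its anchor, passing, and local blocks, so its attention context stays far shorter than the full text; in the process described above, the passing block would be dropped once the attention computation finishes.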

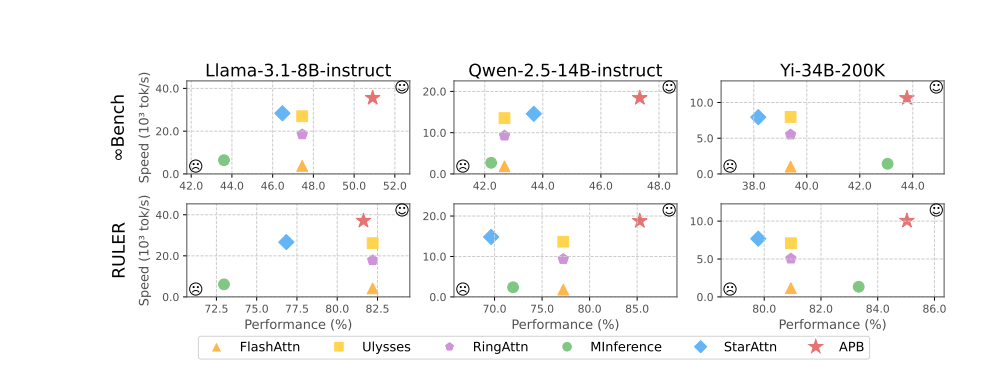

The experimental results clearly demonstrate the APB framework's strong performance. In tests on models such as Llama-3.1-8B-instruct, Qwen-2.5-14B-instruct, and Yi-34B-200K, and on benchmarks such as InfiniteBench and RULER, the APB framework came out ahead of the field, achieving the best balance between task performance and speed.

Notably, the speed advantage of the APB framework becomes more pronounced as the text gets longer: the longer the input, the bigger the win. The reason is that the APB framework requires far less computation than other methods, and the gap widens as text length increases.

A more detailed breakdown of prefill time shows that sequence parallelism by itself significantly reduces the computation time of the FFN (feed-forward network). The APB framework's sparse attention mechanism then drives attention computation time down even further. Compared with Star Attention, the APB framework uses the passing block to carry long-distance semantic dependencies, which allows a much smaller anchor block and thus reduces the extra FFN overhead, so it manages to have it both ways.

Even more encouraging, the APB framework shows excellent compatibility: it adapts flexibly to different distributed environments and model sizes and stays rock-steady in both performance and efficiency under a variety of demanding conditions.

With the arrival of the APB framework, the bottleneck in long-text inference for large models looks set to be broken, opening up far more room for AI applications. In the future, whether in intelligent customer service, financial analysis, scientific research, or content creation, we can look forward to a new era of AI that is "faster, stronger, and smarter"!

Project address: https://github.com/thunlp/APB

Paper address: https://arxiv.org/pdf/2502.12085