In the field of artificial intelligence, a disruptive technology is quietly emerging. Inception Labs recently announced the Mercury series of diffusion large language models (dLLMs), a new generation of language models designed to generate high-quality text quickly and efficiently. Compared with traditional autoregressive large language models, Mercury generates text up to 10 times faster, exceeding 1,000 tokens per second on NVIDIA H100 GPUs, a speed previously achievable only with custom chips.

Mercury Coder, the first product in the Mercury series, is now available in public beta. The model focuses on code generation and performs strongly across multiple programming benchmarks, outperforming many existing speed-optimized models such as GPT-4o Mini and Claude 3.5 Haiku while running nearly 10 times faster. According to developer feedback, Mercury's code completions are preferred over those of many competing models. In Copilot Arena, Mercury Coder Mini ranks near the top in quality and is one of the fastest models tested.

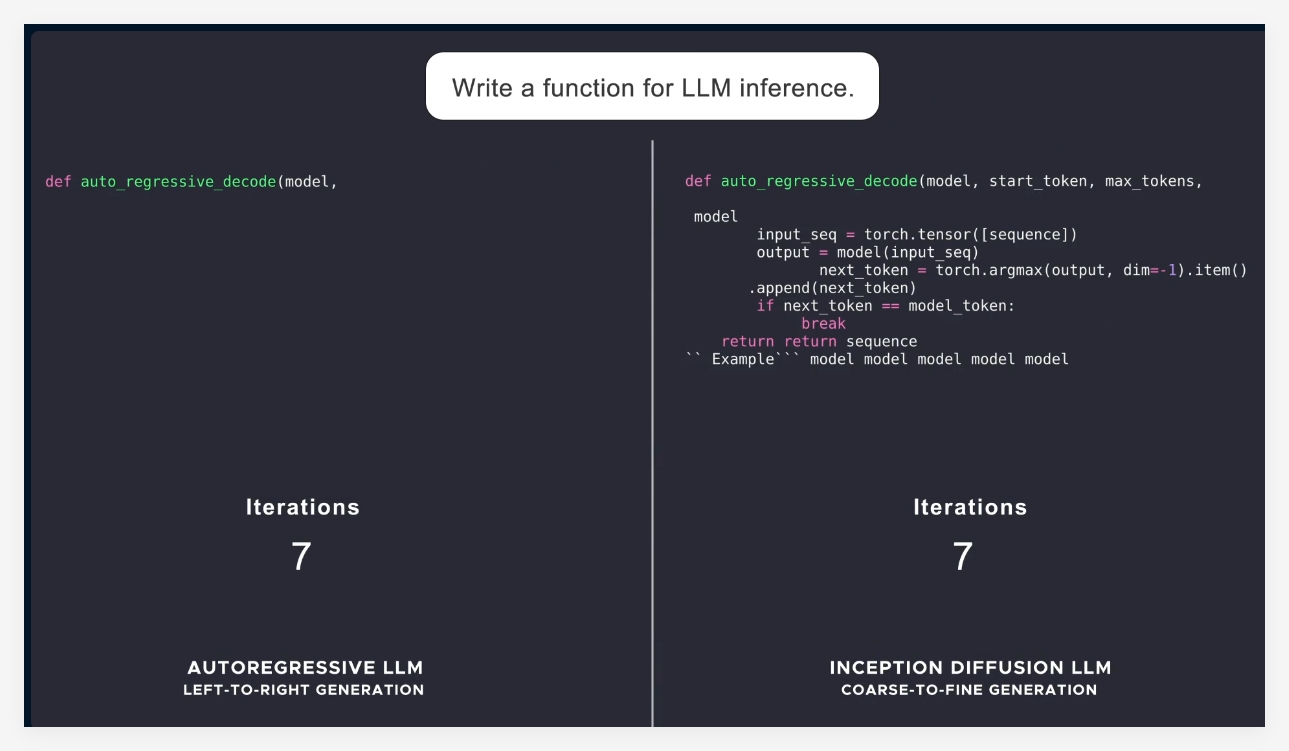

Most current language models are autoregressive: they generate tokens one at a time, from left to right. Because each token depends on every token before it, generation is inherently sequential, which drives up both latency and compute cost. Mercury instead generates "coarse to fine": it starts from pure noise and refines the output over a few "denoising" steps. Since each step can update many tokens in parallel, Mercury models generate faster and are better able to reason and structure their responses.
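To make the contrast concrete, here is a minimal sketch of coarse-to-fine decoding in the style of masked diffusion. The article does not describe Mercury's actual algorithm, so everything here is an assumption for illustration: a `<mask>` token stands in for "noise", and `make_toy_denoiser` is a hypothetical stand-in for a trained network that, in one pass, returns a guess and a confidence score for every position. Each step commits the most confident guesses in parallel, so the number of passes is set by `steps` rather than by the sequence length.

```python
import math
import random
from typing import Callable, List, Tuple

MASK = "<mask>"  # assumption: masked positions play the role of "pure noise"

# A denoiser maps the current (partially masked) sequence to one
# (token, confidence) guess per position, computed in a single pass.
Denoiser = Callable[[List[str]], List[Tuple[str, float]]]


def make_toy_denoiser(target: List[str], seed: int = 0) -> Denoiser:
    """Hypothetical stand-in for a trained dLLM: it always guesses the
    target token and attaches a random confidence score."""
    rng = random.Random(seed)

    def denoise(seq: List[str]) -> List[Tuple[str, float]]:
        return [(target[i], rng.random()) for i in range(len(seq))]

    return denoise


def diffusion_decode(length: int, steps: int, denoiser: Denoiser) -> Tuple[List[str], int]:
    """Start fully masked and, at each step, commit the most confident
    predictions in parallel. Returns the sequence and the pass count."""
    seq = [MASK] * length
    passes = 0
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        if not masked:
            break
        preds = denoiser(seq)  # one forward pass scores every position
        passes += 1
        # Spread the remaining masked positions evenly over the remaining
        # steps; on the last step this commits everything still masked.
        k = math.ceil(len(masked) / (steps - step))
        most_confident = sorted(masked, key=lambda i: preds[i][1], reverse=True)[:k]
        for i in most_confident:
            seq[i] = preds[i][0]
    return seq, passes


def autoregressive_passes(length: int) -> int:
    """An autoregressive model needs one forward pass per generated token."""
    return length


if __name__ == "__main__":
    target = "def add ( a , b ) : return a + b".split()
    out, passes = diffusion_decode(len(target), steps=4, denoiser=make_toy_denoiser(target))
    print(" ".join(out))
    print(f"diffusion passes: {passes}, autoregressive passes: {autoregressive_passes(len(target))}")
```

For the 12-token snippet above, the sketch finishes in 4 parallel passes where a left-to-right decoder would need 12. In a real dLLM each pass costs more than a single autoregressive step, so the actual speedup depends on the model and hardware rather than on this pass count alone.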

With the Mercury series, Inception Labs demonstrates the potential of diffusion models for text and code generation. The company next plans to release a model for chat applications, further broadening the use cases for diffusion language models. These new models are expected to have stronger agentic capabilities, supporting complex planning and long-form generation. Their efficiency should also let them run well on resource-constrained devices such as smartphones and laptops.

Overall, Mercury marks an important advance in AI technology: it not only greatly improves speed and efficiency but also offers the industry higher-quality solutions.

Official introduction: https://www.inceptionlabs.ai/news

Online experience: https://chat.inceptionlabs.ai/

Key points:

The Mercury series of diffusion large language models (dLLMs) has launched, with generation speeds exceeding 1,000 tokens per second.

Mercury Coder focuses on code generation and outperforms many existing models on benchmarks.

Diffusion-based generation makes text generation more efficient and accurate, opening new possibilities for agentic applications.