Alibaba's Qwen team has released QwQ-32B, the newest member of its open source large language model (LLM) family. QwQ-32B is a 32-billion-parameter reasoning model that uses reinforcement learning (RL) to improve performance on complex problem-solving tasks. The release extends Alibaba's work on reasoning models, particularly in how RL is applied to train and optimize them.

QwQ-32B is released under the Apache 2.0 license on Hugging Face and ModelScope, so it can be used for commercial purposes as well as research. Businesses can integrate the model directly into their products and services, including paid applications. Individual users can also try the model and its reasoning capabilities through Qwen Chat.

QwQ, short for Qwen-with-Questions, is an open source reasoning model that Alibaba first introduced in November 2024 as a competitor to OpenAI's o1-preview. During reasoning, the model reviews and refines its own answers, which strengthens its logical reasoning and planning, especially on mathematics and coding tasks. QwQ-32B builds on that foundation and strengthens Alibaba's position among open reasoning models.

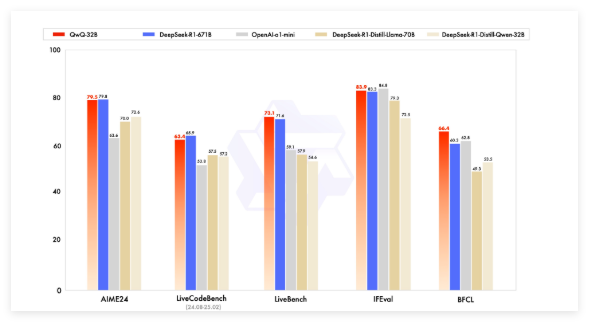

The first QwQ release outperformed OpenAI's o1-preview on mathematical benchmarks such as AIME and MATH and on scientific reasoning tasks such as GPQA. It was weaker on programming benchmarks such as LiveCodeBench, however, and showed problems such as language mixing and circular reasoning. Alibaba nevertheless released it under the Apache 2.0 license, which sets it apart from OpenAI's proprietary models and lets developers and businesses adapt and commercialize it freely.

As the field has matured, the limitations of traditional LLMs have become clearer, and the gains from simply scaling models up have begun to slow. This has driven interest in large reasoning models (LRMs), which improve accuracy by reasoning and self-reflecting at inference time. QwQ-32B pushes this approach further by combining reinforcement learning with structured self-questioning, making it a serious contender among reasoning models.

In benchmarks, QwQ-32B was compared against leading models such as DeepSeek-R1 and o1-mini and achieved competitive results despite having far fewer parameters than some of them. DeepSeek-R1, for example, has 671 billion parameters. Because QwQ-32B is much smaller, it needs far less memory for comparable performance: it typically runs on a GPU with 24 GB of VRAM, whereas running the full DeepSeek-R1 requires more than 1,500 GB of VRAM.
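The VRAM figures above are easier to interpret with some back-of-the-envelope arithmetic. The sketch below estimates the memory taken by model weights alone, as parameter count times bytes per parameter; it ignores the KV cache, activations, and framework overhead, and the set of precisions is an assumption chosen for illustration. Under this estimate, 32B parameters at 4-bit precision come to about 16 GB of weights, which leaves headroom on a 24 GB GPU, while 671B parameters at 16-bit precision come to about 1,342 GB before any cache or overhead is counted.

```python
# Back-of-the-envelope estimate of GPU memory needed for model weights only.
# Ignores KV cache, activations, and runtime overhead, so real usage is higher.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    models = {"QwQ-32B": 32e9, "DeepSeek-R1": 671e9}
    for name, params in models.items():
        for precision in ("fp16", "int8", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{name:12s} {precision}: {gb:8.1f} GB")
```

The gap between the 16 GB weight estimate and the quoted 24 GB is roughly what remains for the KV cache and runtime buffers, which grow with context length and batch size.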

QwQ-32B is a causal language model built on a Transformer architecture with 64 layers, rotary position embeddings (RoPE), SwiGLU activations, RMSNorm, and bias terms in the attention QKV projections. It uses grouped-query attention (GQA), supports an extended context length of 131,072 tokens, and was trained in multiple stages: pre-training, supervised fine-tuning, and reinforcement learning.
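Grouped-query attention shrinks the KV cache by letting several query heads share one key/value head. The pure-Python sketch below shows only that sharing pattern, for a single decoding step in which the newest token attends to all cached positions. The head counts and dimensions are toy values chosen for illustration, not QwQ-32B's configuration, and the sketch omits RoPE, the QKV bias, and the output projection.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def grouped_query_attention(
    queries: List[Vector],       # [num_q_heads][head_dim] for the current token
    keys: List[List[Vector]],    # [num_kv_heads][seq_len][head_dim]
    values: List[List[Vector]],  # [num_kv_heads][seq_len][head_dim]
) -> List[Vector]:
    """Return one attention output vector per query head.

    Query head h reads from KV head h // group_size, so each group of
    group_size consecutive query heads shares a single key/value head.
    """
    num_q_heads = len(queries)
    num_kv_heads = len(keys)
    assert num_q_heads % num_kv_heads == 0, "query heads must split evenly into groups"
    group_size = num_q_heads // num_kv_heads

    outputs = []
    for h, q in enumerate(queries):
        kv = h // group_size  # the shared KV head for this query head
        scale = 1.0 / math.sqrt(len(q))
        scores = [dot(q, k) * scale for k in keys[kv]]
        weights = softmax(scores)
        head_dim = len(values[kv][0])
        # Weighted sum of the shared value vectors.
        out = [sum(w * v[d] for w, v in zip(weights, values[kv])) for d in range(head_dim)]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    # Toy sizes: 4 query heads share 2 KV heads (group size 2), head_dim 2, seq_len 3.
    queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
    keys = [
        [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
        [[0.0, 0.0], [1.0, -1.0], [2.0, 0.0]],
    ]
    values = [
        [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
        [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]],
    ]
    for h, out in enumerate(grouped_query_attention(queries, keys, values)):
        print(f"query head {h} -> {out}")
```

Only the KV heads need to be cached during generation, so with fewer KV heads than query heads the cache shrinks by the group size, which matters at long context lengths such as 131,072 tokens.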

QwQ-32B's reinforcement learning proceeds in two stages. The first stage targets mathematics and coding, using an accuracy verifier to check math answers and a code execution server to check whether generated code passes predefined test cases. The second stage uses rewards from a general reward model and rule-based verifiers to improve instruction following, alignment with human preferences, and agent capabilities, without degrading the model's math and coding performance.
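The first RL stage relies on rewards that can be checked automatically. As a hypothetical illustration of that idea, not the Qwen team's implementation (whose details are not described here), the sketch below scores a math completion by exact match on its final `\boxed{...}` answer and scores code by running it against test assertions in a separate Python process with a timeout. The `\boxed{}` answer convention, the binary 0/1 rewards, and the helper names `math_reward` and `code_reward` are all assumptions.

```python
import re
import subprocess
import sys


def math_reward(completion: str, reference: str) -> float:
    """1.0 if the last \\boxed{...} answer matches the reference exactly, else 0.0.

    Hypothetical verifier: real checkers usually normalize expressions
    (e.g. "1/2" vs "0.5"), which this sketch does not attempt.
    """
    answers = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    if not answers:
        return 0.0
    return 1.0 if answers[-1].strip() == reference.strip() else 0.0


def code_reward(program: str, tests: str, timeout_s: float = 5.0) -> float:
    """1.0 if the program plus its test assertions exit cleanly, else 0.0.

    Runs in a separate interpreter process so crashes and infinite loops
    (cut off by the timeout) cannot take down the caller. A real execution
    server would also sandbox file system and network access.
    """
    source = program + "\n\n" + tests + "\n"
    try:
        result = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return 0.0
    return 1.0 if result.returncode == 0 else 0.0


if __name__ == "__main__":
    print(math_reward("... so the answer is \\boxed{42}.", "42"))  # 1.0
    good = "def add(a, b):\n    return a + b"
    bad = "def add(a, b):\n    return a - b"
    tests = "assert add(2, 3) == 5"
    print(code_reward(good, tests), code_reward(bad, tests))  # 1.0 0.0
```

Binary, automatically checkable rewards like these are hard to game in the way a learned reward model can be, which is one reason they suit math and code, where correctness is well defined.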

QwQ-32B also has agent capabilities: it can adapt its reasoning based on feedback from its environment. The Qwen team recommends specific inference settings for best performance, and the model can be deployed with vLLM.
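One way to use the vLLM deployment is to start vLLM's OpenAI-compatible server (for example with `vllm serve Qwen/QwQ-32B`) and send chat requests to it. The sketch below builds and sends such a request using only the Python standard library. The sampling values (temperature 0.6, top-p 0.95, top-k in the 20 to 40 range, min-p 0) reflect our reading of the recommendations on the QwQ-32B model card and should be checked against the current card; the server URL, the `max_tokens` default, and the helper names are assumptions. `top_k` and `min_p` are not part of the OpenAI request schema, but vLLM's server accepts them as extra fields.

```python
import json
import urllib.request

# Assumed default address of a local `vllm serve` instance.
SERVER_URL = "http://localhost:8000/v1/chat/completions"

# Sampling settings as we read them from the QwQ-32B model card (verify before use).
RECOMMENDED_SAMPLING = {
    "temperature": 0.6,
    "top_p": 0.95,
    "top_k": 30,  # the card suggests a value between 20 and 40
    "min_p": 0.0,
}


def build_chat_request(prompt: str, max_tokens: int = 8192) -> dict:
    """Build an OpenAI-style chat completion payload for QwQ-32B.

    max_tokens is an arbitrary illustrative default; reasoning models
    often need long outputs.
    """
    payload = {
        "model": "Qwen/QwQ-32B",
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    payload.update(RECOMMENDED_SAMPLING)
    return payload


def send_chat_request(payload: dict, url: str = SERVER_URL) -> str:
    """POST the payload to the server and return the assistant's reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("How many r's are in the word 'strawberry'?")
    print(json.dumps(payload, indent=2))
    # Requires a running server, e.g. `vllm serve Qwen/QwQ-32B`:
    # print(send_chat_request(payload))
```

Keeping the sampling settings in one dictionary makes it easy to adjust them if the model card's recommendations change.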

The Qwen team describes QwQ-32B as a first step in scaling reinforcement learning to enhance reasoning. Going forward, it plans to scale RL further, integrate agents with RL to enable long-horizon reasoning, and keep developing foundation models optimized for RL, with the ultimate goal of artificial general intelligence (AGI).

Official blog post: https://qwenlm.github.io/blog/qwq-32b/