On July 3, 2024, Shanghai Artificial Intelligence Laboratory and SenseTime jointly released a new generation of their large language model, Scholar Puyu 2.5 (InternLM2.5). The release marks an important step for China's innovation in artificial intelligence, particularly in the research and application of large language models.

The InternLM2.5-7B model has been officially open-sourced, and models of other sizes will be released in turn. Shanghai Artificial Intelligence Laboratory has pledged to continue offering free commercial licensing and to support innovation in the global community through high-quality open-source models. This lowers the barrier to applying artificial intelligence and gives developers worldwide more opportunities to innovate.

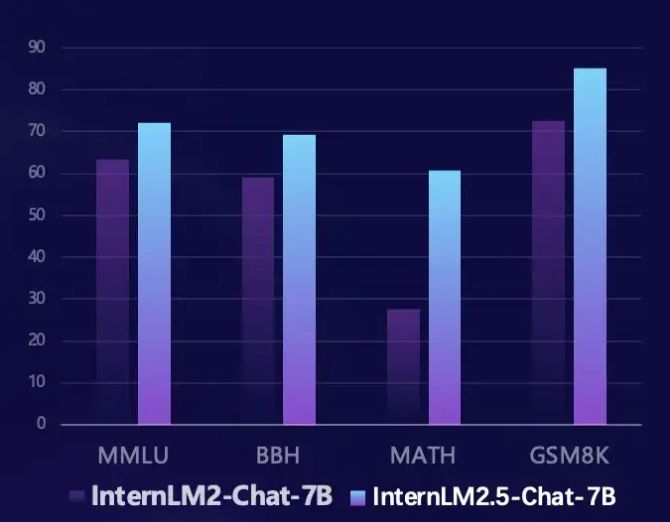

InternLM2.5 improves significantly in several key areas. First, its reasoning ability is markedly stronger, and on some dimensions it even surpasses the Llama3-70B model. On the MATH mathematics benchmark in particular, InternLM2.5 achieves a 100% performance improvement, reaching 60% accuracy, which is comparable to GPT-4 Turbo (1106 version). This progress makes the model a more powerful tool for solving complex problems.

Second, InternLM2.5 supports a context window of up to 1M tokens, enough to process long documents of about 1.2 million Chinese characters. By extending the context length and using synthetic data, the model has been optimized for long-document understanding and agent interaction, making it more capable when handling complex text.
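The two figures above imply a ratio of roughly 1.2 Chinese characters per token. As a rough illustration only, the sketch below uses that ratio to estimate whether a Chinese document would fit in the context window. It assumes "1M" means exactly 1,000,000 tokens and that the ratio holds uniformly. The function names are hypothetical and not part of any InternLM API, and real token counts depend on the actual tokenizer.

```python
# Figures quoted in the article. Treating "1M" as exactly 1,000,000 is an assumption.
CONTEXT_TOKENS = 1_000_000   # maximum context length in tokens
CONTEXT_CHARS = 1_200_000    # approximate Chinese characters that fit in that context


def estimate_tokens(text: str) -> int:
    """Estimate the token count of Chinese text from the article's ratio.

    Uses integer ceiling division so the result is exact for the assumed
    ratio (1.2 characters per token) and never under-counts.
    """
    n_chars = len(text)
    return (n_chars * CONTEXT_TOKENS + CONTEXT_CHARS - 1) // CONTEXT_CHARS


def fits_in_context(text: str, reserved_for_output: int = 0) -> bool:
    """Return True if the estimated prompt size, plus any tokens reserved
    for the model's reply, stays within the assumed context window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_TOKENS


if __name__ == "__main__":
    sample = "字" * 12
    print(estimate_tokens(sample))          # 12 characters at 1.2 chars/token
    print(fits_in_context("字" * 1_200_000))
```

An estimate like this is only useful for quick planning. Before sending a long document to the model, counting tokens with the model's own tokenizer is the reliable check.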

In addition, InternLM2.5 can plan autonomously and call tools. It can search and integrate information from hundreds of web pages, using the MindSearch multi-agent framework to simulate the human thinking process and consolidate information from the web effectively. This offers a new approach to information retrieval and knowledge integration and makes the model considerably more practical.

Developers can find more information and resources about InternLM2.5 at the following links:

GitHub link: https://github.com/InternLM/InternLM

Model link: https://www.modelscope.cn/models/Shanghai_AI_Laboratory/internlm2_5-7b-chat

Scholar Puyu homepage: https://internlm.intern-ai.org.cn/