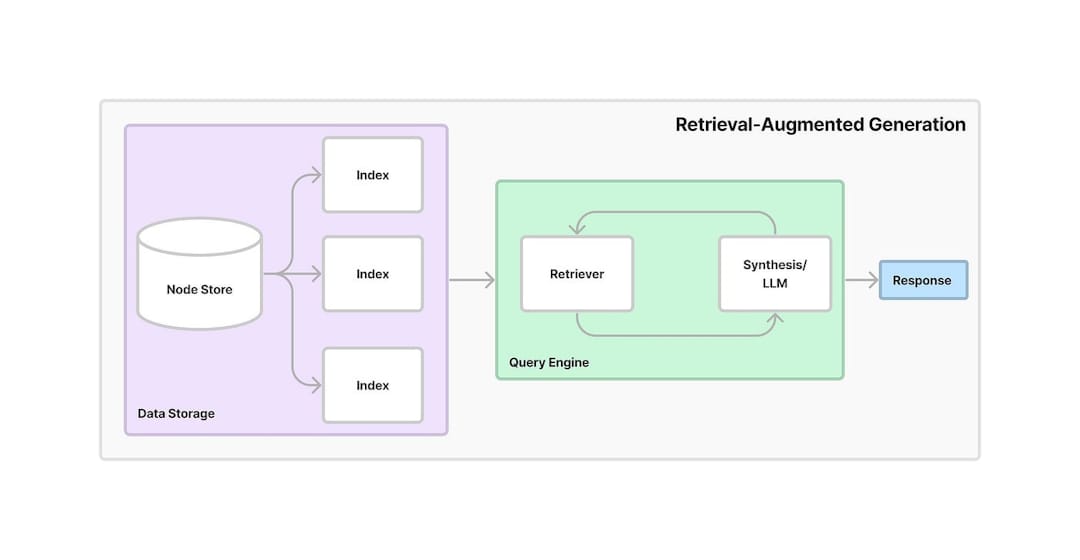

Retrieval-Augmented Generation (RAG) is a framework that combines information retrieval with generative AI. It allows models to retrieve relevant information from external sources or databases and use that data to generate more accurate and contextually relevant responses. By leveraging both retrieval and generation, RAG improves the accuracy and reliability of AI models, particularly in providing up-to-date information or handling complex questions.

This project provides an AI-based conversational assistant that leverages Retrieval-Augmented Generation (RAG) to extract knowledge from PDF documents. The system combines text embeddings, vector search, and an LLM to provide answers to user questions. Below is a detailed step-by-step workflow of how the application operates:

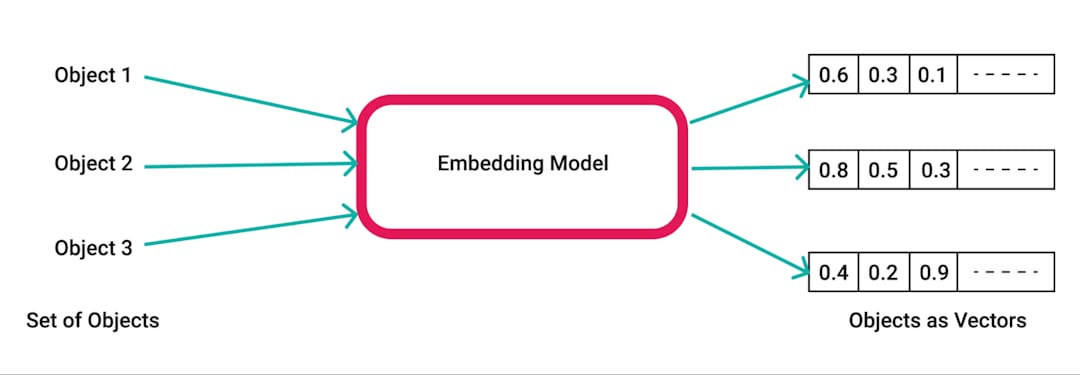

1. The user uploads a PDF, which the app opens with `pdfplumber`, a Python library for extracting text from PDFs.
2. The app uses the `pdfplumber` library to extract raw text from the uploaded PDF. Each page of the document is parsed, and the resulting text is prepared for further processing.
3. The extracted text is split into smaller chunks with `RecursiveCharacterTextSplitter`. This keeps the content manageable for embedding and retrieval, typically with a chunk size of 500 characters and an overlap of 50 characters.
4. Each chunk is converted into an embedding with `SpacyEmbeddings`. These embeddings represent the semantic meaning of the chunks, enabling efficient search.
5. A vector database is created with the `Chroma` library, where the embeddings are stored. The vector database allows fast and efficient retrieval of relevant information based on user queries.
6. A `ConversationalRetrievalChain` is established using LangChain, combining the embeddings stored in Chroma with a conversational memory buffer to track chat history and context.
7. The chain uses `ChatGoogleGenerativeAI` (Google's Gemini LLM) to generate relevant responses to the user's questions based on the chunks of text retrieved from the vector store.
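To make the chunking, embedding, and retrieval steps above more concrete, here is a minimal, dependency-free sketch. It is not the project's code: `split_text` is a simple sliding-window splitter standing in for `RecursiveCharacterTextSplitter`, `embed` is a toy bag-of-words vector standing in for `SpacyEmbeddings`, and `ToyVectorStore` stands in for Chroma. All three names, along with `build_prompt`, are hypothetical and exist only for illustration.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Fixed-size sliding window (assumption: a simplified stand-in for a recursive splitter)."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy bag-of-words vector (assumption: a real system would use a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory list of (chunk, vector) pairs with brute-force similarity search."""

    def __init__(self, chunks: list[str]):
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt for the LLM."""
    context = "\n---\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = ToyVectorStore([
        "Cats purr when they are happy.",
        "The invoice is due on Friday.",
        "Dogs bark at strangers.",
    ])
    top = store.search("When is the invoice due?", k=1)
    print(build_prompt("When is the invoice due?", top))
```

With the defaults of 500 and 50, each new chunk starts 450 characters after the previous one, so text near a chunk boundary appears in both neighbouring chunks and is less likely to be cut off from its context. The brute-force search is only reasonable for small collections; a vector database such as Chroma is what makes this step efficient at scale.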

Efficient Knowledge Retrieval: By leveraging the power of RAG, the system combines retrieval and generation to answer specific questions accurately based on the content of uploaded PDF documents.

Scalability and Flexibility: With text chunking and embeddings, the app can handle large documents while ensuring fast and precise information retrieval.

Conversational AI: The conversation history memory makes the system more interactive, as it keeps track of previous questions and answers, maintaining context over long conversations.

Integration of Modern AI Tools: This project demonstrates the use of advanced tools like Chroma for vector storage, LangChain for conversation management, and Google's Gemini LLM for generating human-like answers.
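The conversational memory described above can be pictured as a buffer of past turns that is prepended to each new question before retrieval and generation. The sketch below is a plain-Python illustration under stated assumptions, not LangChain's implementation: `ConversationBuffer`, `build_followup_prompt`, and the `max_turns` cap are hypothetical names and choices introduced here to keep the prompt size bounded.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationBuffer:
    """Stores (question, answer) turns, keeping only the most recent ones."""

    max_turns: int = 5  # assumption: a cap of at least 1, chosen for illustration
    turns: list[tuple[str, str]] = field(default_factory=list)

    def add(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))
        # Keep only the newest max_turns entries.
        self.turns = self.turns[-self.max_turns:]

    def as_text(self) -> str:
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)


def build_followup_prompt(buffer: ConversationBuffer, question: str) -> str:
    """Prefix the new question with the chat history so follow-ups keep their context."""
    history = buffer.as_text()
    if not history:
        return question
    return f"Chat history:\n{history}\n\nFollow-up question: {question}"


if __name__ == "__main__":
    memory = ConversationBuffer(max_turns=2)
    memory.add("What is the report about?", "Quarterly sales figures.")
    print(build_followup_prompt(memory, "Which region grew fastest?"))
```

Without the history, a follow-up such as "Which region grew fastest?" gives the retriever and the LLM no hint about which document or topic is meant; including the earlier turns is what lets the assistant resolve that reference.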