
AI RAG Chatbot for FictionX Stories.

It's powered by LlamaIndex, Together AI, Together Embeddings, and Next.js. It embeds the stories in story/data and stores the embeddings locally in story/cache, which serves as the vector database. It then role-plays as a character from the story and answers users' questions.

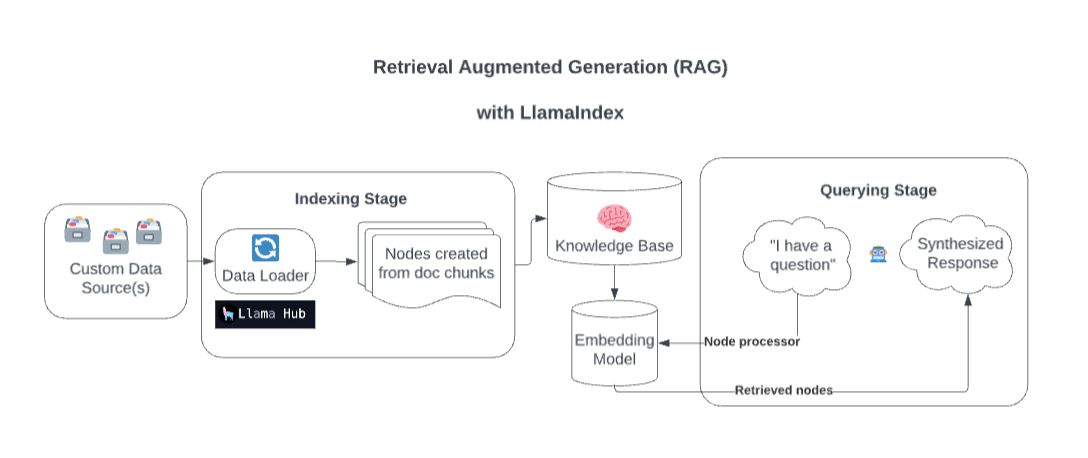

Behind the scenes, LlamaIndex enriches your model with custom data sources through Retrieval Augmented Generation (RAG).

Put simply, this process consists of two stages:

An indexing stage. LlamaIndex prepares the knowledge base by ingesting data and converting it into Documents. It parses the content and metadata of those documents (text, relationships, and so on) into nodes, then builds queryable indices over these chunks to form the knowledge base.

A querying stage. Relevant context is retrieved from the knowledge base to assist the model in responding to queries. The querying stage ensures the model can access data not included in its original training data.

source: Streamlit
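The two stages can be illustrated with a minimal, self-contained sketch. It does not use LlamaIndex or Together Embeddings: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and all names here (`buildIndex`, `retrieve`, `buildPrompt`) are hypothetical helpers chosen for illustration, not this project's code.

```typescript
// Toy stand-in for an embedding model: a bag-of-words term-count vector.
// The real project uses Together Embeddings; this is for illustration only.
type Vector = Map<string, number>;

interface IndexedChunk {
  text: string;
  vector: Vector;
}

function embed(text: string): Vector {
  const vector: Vector = new Map<string, number>();
  const tokens = text.toLowerCase().match(/[a-z0-9]+/g) || [];
  for (let i = 0; i < tokens.length; i++) {
    vector.set(tokens[i], (vector.get(tokens[i]) || 0) + 1);
  }
  return vector;
}

function norm(v: Vector): number {
  let sumOfSquares = 0;
  v.forEach((weight) => { sumOfSquares += weight * weight; });
  return Math.sqrt(sumOfSquares);
}

function cosineSimilarity(a: Vector, b: Vector): number {
  let dot = 0;
  a.forEach((weight, token) => { dot += weight * (b.get(token) || 0); });
  const denominator = norm(a) * norm(b);
  return denominator === 0 ? 0 : dot / denominator;
}

// Indexing stage: split each document into paragraph chunks and embed them.
function buildIndex(documents: string[]): IndexedChunk[] {
  const index: IndexedChunk[] = [];
  for (const doc of documents) {
    for (const paragraph of doc.split(/\n\s*\n/)) {
      const text = paragraph.trim();
      if (text.length > 0) index.push({ text, vector: embed(text) });
    }
  }
  return index;
}

// Querying stage: rank chunks by similarity to the question, keep the top k.
function retrieve(index: IndexedChunk[], question: string, topK = 2): string[] {
  const queryVector = embed(question);
  return index
    .map((chunk) => ({ chunk, score: cosineSimilarity(queryVector, chunk.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK)
    .map((scored) => scored.chunk.text);
}

// The retrieved chunks go into the prompt so the model can draw on them.
function buildPrompt(context: string[], question: string): string {
  return "Context:\n" + context.join("\n---\n") + "\n\nQuestion: " + question;
}
```

In a real pipeline the index would be persisted (here, that is the role of story/cache) so the indexing stage only runs when the stories change.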

Copy your .example.env file to .env and replace the TOGETHER_API_KEY value with your own. Also set a dummy OPENAI_API_KEY value in this .env so that it works (a temporary hack).
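As a quick sanity check before starting the app, a small helper can report which of the two keys named above are still unset. This is only a sketch: `missingEnvKeys` is a hypothetical function, not part of this repository, and it assumes the variables have already been loaded into an environment object.

```typescript
// The two keys named in the setup step above.
const REQUIRED_KEYS = ["TOGETHER_API_KEY", "OPENAI_API_KEY"];

// Hypothetical helper: returns the required keys that are missing or blank.
function missingEnvKeys(env: Record<string, string | undefined>): string[] {
  return REQUIRED_KEYS.filter((key) => (env[key] || "").trim() === "");
}

// Example usage in Node.js (assumes the .env values are already loaded):
//   const missing = missingEnvKeys(process.env);
//   if (missing.length > 0) throw new Error("Missing: " + missing.join(", "));
```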

npm install

http://localhost:3200/api/generate

npm run dev

http://localhost:3200/

http://localhost:3200/api/chat

It supports a streaming response or a JSON response.
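A client can handle both response styles by checking the response's Content-Type header. The sketch below is an assumption-heavy illustration: the POST method, the `messages` request body, and the `askChat` and `isJsonContentType` helpers are all hypothetical, since the request format expected by /api/chat is not described here. Check the route handler before relying on it.

```typescript
// Assumed request shape; the real /api/chat handler may expect something else.
interface ChatRequest {
  messages: { role: "user" | "assistant"; content: string }[];
}

// True when the Content-Type header indicates a JSON body.
function isJsonContentType(contentType: string | null): boolean {
  return contentType !== null &&
    contentType.toLowerCase().indexOf("application/json") !== -1;
}

async function askChat(
  question: string,
  url = "http://localhost:3200/api/chat",
): Promise<string> {
  const body: ChatRequest = { messages: [{ role: "user", content: question }] };
  const response = await fetch(url, {
    method: "POST", // assumption: the endpoint accepts POST with a JSON body
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error("Chat request failed with status " + response.status);
  }

  // JSON response: return the parsed payload re-serialized as a string,
  // because its field names are not known here.
  if (isJsonContentType(response.headers.get("content-type"))) {
    return JSON.stringify(await response.json());
  }

  // Streaming response: read chunks as they arrive and decode them as UTF-8.
  if (!response.body) return "";
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let text = "";
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    text += decoder.decode(value, { stream: true });
  }
  return text + decoder.decode(); // flush any buffered bytes
}
```

In a UI, the loop body is the place to append each decoded chunk to the page so the answer appears as it is generated.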