LLM Survey

1.0.0

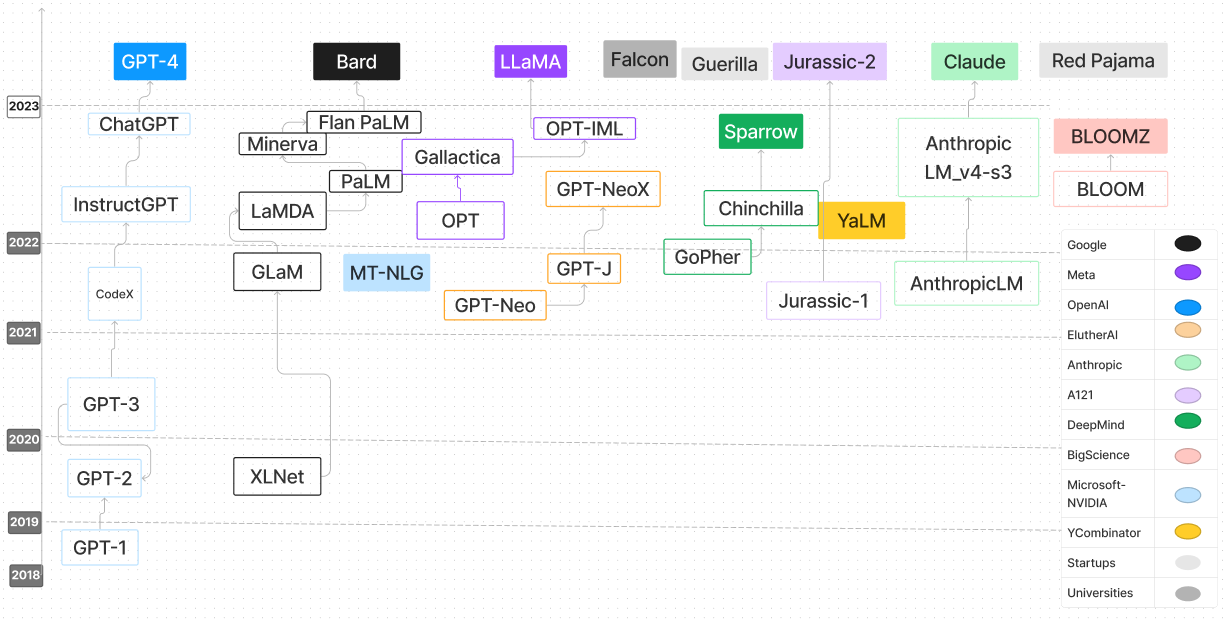

The Large Language Models Survey repository is a compendium of resources for exploring and understanding Large Language Models (LLMs). It collects research papers, blog posts, tutorials, and code examples that trace the progression, methodologies, and applications of LLMs. It is intended for AI researchers, data scientists, and enthusiasts interested in the advancements and inner workings of LLMs. We encourage contributions from the wider community to promote collaborative learning and advance LLM research.

| Language Model | Release Date | Checkpoints | Paper/Blog | Params (B) | Context Length | Licence | Try it |
|---|---|---|---|---|---|---|---|
| T5 | 2019/10 | T5 & Flan-T5, Flan-T5-xxl (HF) | Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer | 0.06 - 11 | 512 | Apache 2.0 | T5-Large |
| UL2 | 2022/10 | UL2 & Flan-UL2, Flan-UL2 (HF) | UL2 20B: An Open Source Unified Language Learner | 20 | 512, 2048 | Apache 2.0 | |
| Cohere | 2022/06 | Checkpoint | Code | 54 | 4096 | Model | Website |
| Cerebras-GPT | 2023/03 | Cerebras-GPT | Cerebras-GPT: A Family of Open, Compute-efficient, Large Language Models (Paper) | 0.111 - 13 | 2048 | Apache 2.0 | Cerebras-GPT-1.3B |
| Open Assistant (Pythia family) | 2023/03 | OA-Pythia-12B-SFT-8, OA-Pythia-12B-SFT-4, OA-Pythia-12B-SFT-1 | Democratizing Large Language Model Alignment | 12 | 2048 | Apache 2.0 | Pythia-2.8B |
| Pythia | 2023/04 | pythia 70M - 12B | Pythia: A Suite for Analyzing Large Language Models Across Training and Scaling | 0.07 - 12 | 2048 | Apache 2.0 | |
| Dolly | 2023/04 | dolly-v2-12b | Free Dolly: Introducing the World's First Truly Open Instruction-Tuned LLM | 3, 7, 12 | 2048 | MIT | |
| DLite | 2023/05 | dlite-v2-1_5b | Announcing DLite V2: Lightweight, Open LLMs That Can Run Anywhere | 0.124 - 1.5 | 1024 | Apache 2.0 | DLite-v2-1.5B |
| RWKV | 2021/08 | RWKV, ChatRWKV | The RWKV Language Model (and my LM tricks) | 0.1 - 14 | infinity (RNN) | Apache 2.0 | |
| GPT-J-6B | 2021/06 | GPT-J-6B, GPT4All-J | GPT-J-6B: 6B JAX-Based Transformer | 6 | 2048 | Apache 2.0 | |
| GPT-NeoX-20B | 2022/04 | GPT-NEOX-20B | GPT-NeoX-20B: An Open-Source Autoregressive Language Model | 20 | 2048 | Apache 2.0 | |
| Bloom | 2022/11 | Bloom | BLOOM: A 176B-Parameter Open-Access Multilingual Language Model | 176 | 2048 | OpenRAIL-M v1 | |
| StableLM-Alpha | 2023/04 | StableLM-Alpha | Stability AI Launches the First of its StableLM Suite of Language Models | 3 - 65 | 4096 | CC BY-SA-4.0 | |
| FastChat-T5 | 2023/04 | fastchat-t5-3b-v1.0 | We are excited to release FastChat-T5: our compact and commercial-friendly chatbot! | 3 | 512 | Apache 2.0 | |
| h2oGPT | 2023/05 | h2oGPT | Building the World’s Best Open-Source Large Language Model: H2O.ai’s Journey | 12 - 20 | 256 - 2048 | Apache 2.0 | |
| MPT-7B | 2023/05 | MPT-7B, MPT-7B-Instruct | Introducing MPT-7B: A New Standard for Open-Source, Commercially Usable LLMs | 7 | 84k (ALiBi) | Apache 2.0, CC BY-SA-3.0 | |
| PanGU-Σ | 2023/03 | PanGU | Model | 1085 | - | Model | Page |
| RedPajama-INCITE | 2023/05 | RedPajama-INCITE | Releasing 3B and 7B RedPajama-INCITE family of models including base, instruction-tuned & chat models | 3 - 7 | 2048 | Apache 2.0 | RedPajama-INCITE-Instruct-3B-v1 |
| OpenLLaMA | 2023/05 | open_llama_3b, open_llama_7b, open_llama_13b | OpenLLaMA: An Open Reproduction of LLaMA | 3, 7, 13 | 2048 | Apache 2.0 | OpenLLaMA-7B-Preview_200bt |
| Falcon | 2023/05 | Falcon-180B, Falcon-40B, Falcon-7B | The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only | 180, 40, 7 | 2048 | Apache 2.0 | |
| MPT-30B | 2023/06 | MPT-30B, MPT-30B-instruct | MPT-30B: Raising the bar for open-source foundation models | 30 | 8192 | Apache 2.0, CC BY-SA-3.0 | MPT 30B inference code using CPU |
| LLaMA 2 | 2023/07 | LLaMA 2 Weights | Llama 2: Open Foundation and Fine-Tuned Chat Models | 7 - 70 | 4096 | Custom: free if you have under 700M users; LLaMA outputs cannot be used to train other LLMs besides LLaMA and its derivatives | HuggingChat |
| OpenLM | 2023/09 | OpenLM 1B, OpenLM 7B | Open LM: a minimal but performative language modeling (LM) repository | 1, 7 | 2048 | MIT | |
| Mistral 7B | 2023/09 | Mistral-7B-v0.1, Mistral-7B-Instruct-v0.1 | Mistral 7B | 7 | 4096-16K with Sliding Windows | Apache 2.0 | Mistral Transformer |
| OpenHermes | 2023/09 | OpenHermes-7B, OpenHermes-13B | Nous Research | 7, 13 | 4096 | MIT | OpenHermes-V2 Finetuned on Mistral 7B |
| SOLAR | 2023/12 | Solar-10.7B | Upstage | 10.7 | 4096 | Apache 2.0 | |
| phi-2 | 2023/12 | phi-2 2.7B | Microsoft | 2.7 | 2048 | MIT | |
| SantaCoder | 2023/01 | santacoder | SantaCoder: don't reach for the stars! | 1.1 | 2048 | OpenRAIL-M v1 | SantaCoder |
| StarCoder | 2023/05 | starcoder | StarCoder: A State-of-the-Art LLM for Code, StarCoder: May the source be with you! | 1.1-15 | 8192 | OpenRAIL-M v1 | |
| StarChat Alpha | 2023/05 | starchat-alpha | Creating a Coding Assistant with StarCoder | 16 | 8192 | OpenRAIL-M v1 | |
| Replit Code | 2023/05 | replit-code-v1-3b | Training a SOTA Code LLM in 1 week and Quantifying the Vibes — with Reza Shabani of Replit | 2.7 | infinity? (ALiBi) | CC BY-SA-4.0 | Replit-Code-v1-3B |
| CodeGen2 | 2023/04 | codegen2 1B-16B | CodeGen2: Lessons for Training LLMs on Programming and Natural Languages | 1 - 16 | 2048 | Apache 2.0 | |
| CodeT5+ | 2023/05 | CodeT5+ | CodeT5+: Open Code Large Language Models for Code Understanding and Generation | 0.22 - 16 | 512 | BSD-3-Clause | Codet5+-6B |
| XGen-7B | 2023/06 | XGen-7B-8K-Base | Long Sequence Modeling with XGen: A 7B LLM Trained on 8K Input Sequence Length | 7 | 8192 | Apache 2.0 | |
| CodeGen2.5 | 2023/07 | CodeGen2.5-7B-multi | CodeGen2.5: Small, but mighty | 7 | 2048 | Apache 2.0 | |
| DeciCoder-1B | 2023/08 | DeciCoder-1B | Introducing DeciCoder: The New Gold Standard in Efficient and Accurate Code Generation | 1.1 | 2048 | Apache 2.0 | DeciCoder Demo |
| Code Llama | 2023 | Inference Code for CodeLlama models | Code Llama: Open Foundation Models for Code | 7 - 34 | 4096 | Model | HuggingChat |
| Sparrow | 2022/09 | Inference Code | Code | 70 | 4096 | Model | Webpage |
| Mistral | 2023/09 | Inference Code | Code | 7 | 8000 | Model | Webpage |
| Koala | 2023/04 | Inference Code | Code | 13 | 4096 | Model | Webpage |
| PaLM 2 | 2024 | N/A | Google AI | 540 | N/A | N/A | N/A |
| Tongyi Qianwen | 2024 | N/A | Alibaba Cloud | N/A | N/A | N/A | N/A |
| Cohere Command | 2024 | N/A | Cohere | 6 - 52 | N/A | N/A | N/A |
| Vicuna 33B | 2024 | N/A | LMSYS | 33 | N/A | N/A | N/A |
| Guanaco-65B | 2024 | N/A | University of Washington | 65 | N/A | N/A | N/A |
| Amazon Q | 2024 | N/A | AWS | N/A | N/A | N/A | N/A |
| Falcon 180B | 2024 | Falcon-180B | Technology Innovation Institute | 180 | N/A | Apache 2.0 | N/A |
| Yi 34B | 2024 | N/A | 01.AI | 34 | Up to 32K | N/A | N/A |
| Mixtral 8x7B | 2023 | Mixtral 8X 7B | Mistral AI | 46.7 (12.9 per token) | N/A | Apache 2.0 | N/A |
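
To shortlist models from the table by size, context length, and licence, the rows can be loaded into a small script. The sketch below is only an illustration: it copies four columns (name, Params (B), Context Length, Licence) from a handful of rows above, and the `Model` class and the `parse_row` and `select` helpers are hypothetical names introduced here, not part of this repository.

```python
from dataclasses import dataclass

# A few rows copied from the table above, reduced to four columns:
# name | Params (B) | Context Length | Licence
TABLE = """\
| T5 | 0.06 - 11 | 512 | Apache 2.0 |
| Bloom | 176 | 2048 | OpenRAIL-M v1 |
| phi-2 | 2.7 | 2048 | MIT |
| XGen-7B | 7 | 8192 | Apache 2.0 |
| StarCoder | 1.1-15 | 8192 | OpenRAIL-M v1 |
"""


@dataclass
class Model:  # hypothetical container, for illustration only
    name: str
    max_params_b: float  # largest listed size, in billions of parameters
    context: int         # context length in tokens
    licence: str


def parse_row(line: str) -> Model:
    """Turn one reduced Markdown table row into a Model."""
    cells = [c.strip() for c in line.strip().strip("|").split("|")]
    name, params, context, licence = cells
    # "0.06 - 11" and "1.1-15" are ranges; keep the upper bound.
    max_params = float(params.replace(" ", "").split("-")[-1])
    return Model(name, max_params, int(context), licence)


MODELS = [parse_row(line) for line in TABLE.splitlines() if line.strip()]


def select(models, max_params_b, min_context, licences):
    """Names of models within the size budget, context floor, and licence set."""
    return [
        m.name
        for m in models
        if m.max_params_b <= max_params_b
        and m.context >= min_context
        and m.licence in licences
    ]


if __name__ == "__main__":
    # Models up to 20B with at least 2048 tokens of context under Apache 2.0 or MIT.
    print(select(MODELS, 20, 2048, {"Apache 2.0", "MIT"}))  # ['phi-2', 'XGen-7B']
```

Rows whose context length is not a plain integer (for example `infinity (RNN)` or `N/A`) would need extra handling before they can be parsed this way.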

If you find our survey useful for your research, please cite the following paper:

    @article{hadi2024large,
      title={Large language models: a comprehensive survey of its applications, challenges, limitations, and future prospects},
      author={Hadi, Muhammad Usman and Al Tashi, Qasem and Shah, Abbas and Qureshi, Rizwan and Muneer, Amgad and Irfan, Muhammad and Zafar, Anas and Shaikh, Muhammad Bilal and Akhtar, Naveed and Wu, Jia and others},
      journal={Authorea Preprints},
      year={2024},
      publisher={Authorea}
    }