| Author | Jian Zhao |
|---|---|
| Homepage | https://zhaoj9014.github.io |

The code of face.evoLVe is released under the MIT License.

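✅ CLOSED 02 September 2021: Baidu PaddlePaddle has officially merged face.evoLVe.

✅ CLOSED 03 July 2021: Provide training code for the PaddlePaddle framework.

✅ CLOSED 04 July 2019: We will share several publicly available datasets on face anti-spoofing/liveness detection to facilitate related research and analytics.

✅ CLOSED 07 June 2019: We are training a better-performing IR-152 model on MS-Celeb-1M_Align_112x112, and will release the model soon.

✅ CLOSED 23 May 2019: We share three publicly available datasets to facilitate research on face recognition and analytics. Please refer to Sec. Data Zoo for details.

✅ CLOSED 23 Jan 2019: We share the name lists and pair-wise overlapping lists of several widely used face recognition datasets to help researchers/engineers quickly remove the overlapping parts between their own private datasets and the public datasets. Please refer to Sec. Data Zoo for details.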

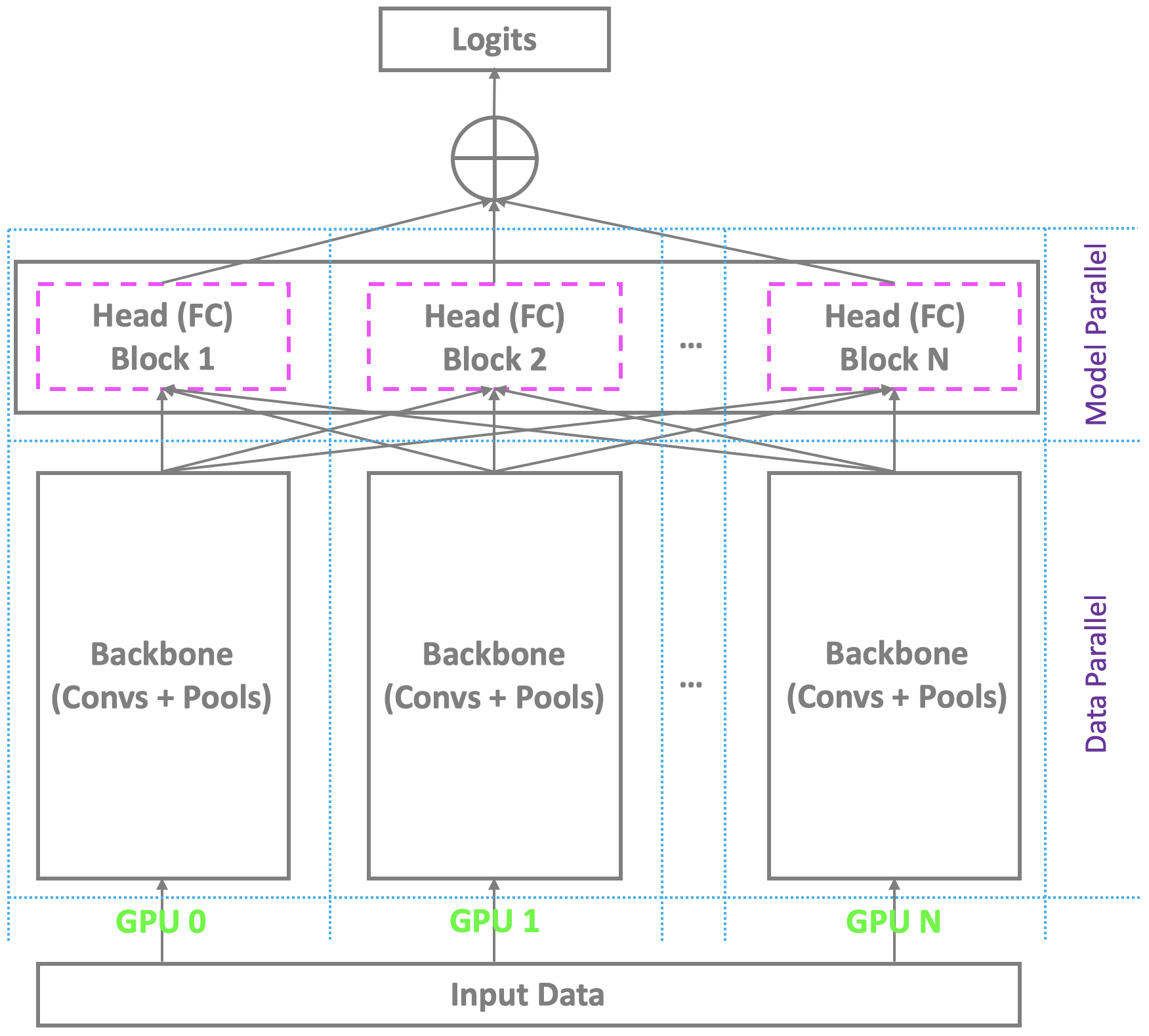

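✅ CLOSED 23 Jan 2019: The current distributed training schema with multiple GPUs under PyTorch and other mainstream platforms parallelizes the backbone across GPUs while relying on a single master to compute the final bottleneck (fully-connected/softmax) layer. This is not an issue for conventional face recognition with a moderate number of identities. However, it struggles with large-scale face recognition, which requires recognizing millions of identities in the real world. The master can hardly hold the oversized final layer while the workers still have redundant computation resources, leading to small-batch training or even failed training. To address this problem, we are developing an elegant, effective and efficient distributed training schema with multiple GPUs under PyTorch, supporting not only the backbone but also the head with the fully-connected (softmax) layer, to facilitate high-performance large-scale face recognition. We will add this support to our repo.

✅ CLOSED 22 Jan 2019: We have released two feature extraction APIs for extracting features from pre-trained models, implemented with PyTorch built-in functions and OpenCV, respectively. Please check ./util/extract_feature_v1.py and ./util/extract_feature_v2.py.

✅ CLOSED 22 Jan 2019: We are fine-tuning our released IR-50 model on our private Asia face data. The fine-tuned model will be released soon to facilitate high-performance Asia face recognition.

✅ CLOSED 21 Jan 2019: We are training a better-performing IR-50 model on MS-Celeb-1M_Align_112x112, and will replace the current model soon.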

Install the required packages:

pip install torch torchvision

pip install mxnet-cu90

pip install tensorflow-gpu

pip install tensorboardX

pip install opencv-python

pip install bcolz

While not required, for optimal performance it is highly recommended to run the code on a CUDA-enabled GPU. We used 4-8 NVIDIA Tesla P40 GPUs in parallel.

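Clone the repo: git clone https://github.com/ZhaoJ9014/face.evoLVe.PyTorch.git.

Create the working folders that store your train/val/test data, checkpoints and training logs: mkdir data checkpoint log.

Prepare your train/val/test data so that each database folder has the following structure:

./data/db_name/

    -> id1/

        -> 1.jpg

        -> ...

    -> id2/

        -> 1.jpg

        -> ...

    -> ...

        -> ...

        -> ...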
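Before training, it can help to confirm that a database folder really follows the layout above. The following is a minimal sketch, not part of the repo: `summarize_db`, `build_demo_db` and the list of image extensions are hypothetical names chosen for illustration.

```python
import os
import tempfile

# Assumption: these extensions count as images; adjust to your data.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def summarize_db(db_root):
    """Return {identity_folder: image_count} for a db_root/idX/*.jpg layout."""
    counts = {}
    for name in sorted(os.listdir(db_root)):
        id_dir = os.path.join(db_root, name)
        if not os.path.isdir(id_dir):
            continue  # skip stray files that sit next to the identity folders
        counts[name] = sum(
            1 for f in os.listdir(id_dir)
            if os.path.splitext(f)[1].lower() in IMAGE_EXTS
        )
    return counts


def build_demo_db():
    """Create a tiny throwaway database so the sketch can be run as-is."""
    root = tempfile.mkdtemp()
    for identity, n in (("id1", 2), ("id2", 1)):
        os.makedirs(os.path.join(root, identity))
        for i in range(1, n + 1):
            open(os.path.join(root, identity, "{}.jpg".format(i)), "wb").close()
    return root


if __name__ == "__main__":
    print(summarize_db(build_demo_db()))
```

Identities that report zero images, or unexpectedly few, are worth inspecting before running alignment or training.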


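Folder: ./align

Face detection and landmark localization API (detect bounding boxes and landmarks for all faces in an image):

from PIL import Image

from detector import detect_faces

from visualization_utils import show_results

img = Image.open('some_img.jpg') # modify the image path to yours

bounding_boxes, landmarks = detect_faces(img) # detect bboxes and landmarks for all faces in the image

show_results(img, bounding_boxes, landmarks) # visualize the results

Face alignment API (perform face detection, landmark localization and alignment with affine transformations on a whole database folder source_root with the directory structure shown in Sec. Usage, and store the aligned results in a new folder dest_root with the same directory structure):

python face_align.py -source_root [source_root] -dest_root [dest_root] -crop_size [crop_size]

# python face_align.py -source_root './data/test' -dest_root './data/test_Aligned' -crop_size 112

Notes: *.DS_Store files may contaminate your data, so they are removed automatically when you run the scripts. In addition: 1) specify the arguments source_root, dest_root and crop_size with your own values when you run face_align.py; 2) pass your customized min_face_size, thresholds and nms_thresholds values to the detect_faces function of detector.py to match your practical requirements; 3) if you find the face alignment API a bit slow, you can first call the face resize API to shrink the images whose smaller side is larger than a threshold (specify the arguments source_root, dest_root and min_side with your own values) before calling the face alignment API: python face_resize.py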
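The resize step in note 3 can be pictured as follows. This is a minimal sketch under stated assumptions, not the code of face_resize.py: it assumes the aspect ratio is preserved and that images whose smaller side is already at or below `min_side` are left untouched. `target_size` and `resize_file` are hypothetical helper names.

```python
def target_size(width, height, min_side):
    """Return (width, height) after shrinking so the smaller side equals min_side.

    Images whose smaller side is already <= min_side are returned unchanged.
    """
    smaller = min(width, height)
    if smaller <= min_side:
        return width, height
    scale = min_side / float(smaller)
    return max(1, round(width * scale)), max(1, round(height * scale))


def resize_file(src_path, dst_path, min_side):
    """Resize one image file with Pillow, keeping the aspect ratio."""
    from PIL import Image  # imported lazily so target_size works without Pillow

    with Image.open(src_path) as img:
        new_size = target_size(img.width, img.height, min_side)
        if new_size != img.size:
            img = img.resize(new_size, Image.BILINEAR)
        img.save(dst_path)


if __name__ == "__main__":
    print(target_size(1000, 500, 250))
    print(target_size(100, 200, 512))
```

Shrinking large inputs first reduces the work the detector has to do per image, which is why the note suggests it as a speed-up.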

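Folder: ./balance

Remove low-shot data API (remove the low-shot classes with fewer than min_num samples from the training set root, with the directory structure shown in Sec. Usage, for data balance and effective model training):

python remove_lowshot.py -root [root] -min_num [min_num]

# python remove_lowshot.py -root './data/train' -min_num 10

Note: specify the arguments root and min_num with your own values when you run remove_lowshot.py.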
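The idea behind low-shot removal can be sketched in a few lines. This is an illustration only, assuming one sub-folder per identity and one file per sample; it is not the code of remove_lowshot.py, and `remove_lowshot` and `make_demo_root` are hypothetical helpers. It deletes folders, so try it on a copy of your data first.

```python
import os
import shutil
import tempfile


def remove_lowshot(root, min_num):
    """Delete identity folders under root holding fewer than min_num files.

    Returns the sorted list of removed folder names.
    """
    removed = []
    for name in sorted(os.listdir(root)):
        class_dir = os.path.join(root, name)
        if not os.path.isdir(class_dir):
            continue
        num_samples = sum(
            os.path.isfile(os.path.join(class_dir, f))
            for f in os.listdir(class_dir)
        )
        if num_samples < min_num:
            shutil.rmtree(class_dir)
            removed.append(name)
    return removed


def make_demo_root():
    """Build a throwaway training set: id1 has 3 samples, id2 has 1."""
    root = tempfile.mkdtemp()
    for name, n in (("id1", 3), ("id2", 1)):
        os.makedirs(os.path.join(root, name))
        for i in range(n):
            open(os.path.join(root, name, "{}.jpg".format(i)), "wb").close()
    return root


if __name__ == "__main__":
    demo = make_demo_root()
    print(remove_lowshot(demo, 2))
```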

Folder: ./

Configuration API (configure your overall settings for training and validation) config.py:

import torch

configurations = {

1 : dict (

SEED = 1337 , # random seed for reproduce results

DATA_ROOT = '/media/pc/6T/jasonjzhao/data/faces_emore' , # the parent root where your train/val/test data are stored

MODEL_ROOT = '/media/pc/6T/jasonjzhao/buffer/model' , # the root to buffer your checkpoints

LOG_ROOT = '/media/pc/6T/jasonjzhao/buffer/log' , # the root to log your train/val status

BACKBONE_RESUME_ROOT = './' , # the root to resume training from a saved checkpoint

HEAD_RESUME_ROOT = './' , # the root to resume training from a saved checkpoint

BACKBONE_NAME = 'IR_SE_50' , # support: ['ResNet_50', 'ResNet_101', 'ResNet_152', 'IR_50', 'IR_101', 'IR_152', 'IR_SE_50', 'IR_SE_101', 'IR_SE_152']

HEAD_NAME = 'ArcFace' , # support: ['Softmax', 'ArcFace', 'CosFace', 'SphereFace', 'Am_softmax']

LOSS_NAME = 'Focal' , # support: ['Focal', 'Softmax']

INPUT_SIZE = [ 112 , 112 ], # support: [112, 112] and [224, 224]

RGB_MEAN = [ 0.5 , 0.5 , 0.5 ], # for normalize inputs to [-1, 1]

RGB_STD = [ 0.5 , 0.5 , 0.5 ],

EMBEDDING_SIZE = 512 , # feature dimension

BATCH_SIZE = 512 ,

DROP_LAST = True , # whether drop the last batch to ensure consistent batch_norm statistics

LR = 0.1 , # initial LR

NUM_EPOCH = 125, # total epoch number (use the first 1/25 epochs to warm up)

WEIGHT_DECAY = 5e-4 , # do not apply to batch_norm parameters

MOMENTUM = 0.9 ,

STAGES = [ 35 , 65 , 95 ], # epoch stages to decay learning rate

DEVICE = torch . device ( "cuda:0" if torch . cuda . is_available () else "cpu" ),

MULTI_GPU = True, # flag to use multiple GPUs; if you choose to train with single GPU, you should first run "export CUDA_VISIBLE_DEVICES=device_id" to specify the GPU card you want to use

GPU_ID = [ 0 , 1 , 2 , 3 ], # specify your GPU ids

PIN_MEMORY = True ,

NUM_WORKERS = 0 ,

),

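}

Train & validation API (everything about training and validation, i.e., importing packages, hyperparameters and data loaders, model, loss and optimizer, training, validation and saving checkpoints) train.py. Since MS-Celeb-1M serves as an ImageNet in the field of face recognition, we pre-train the face.evoLVe models on MS-Celeb-1M and perform validation on LFW, CFP_FF, CFP_FP, AgeDB, CALFW, CPLFW and VGGFace2_FP. Let's go through the details together.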

import torch

import torch . nn as nn

import torch . optim as optim

import torchvision . transforms as transforms

import torchvision . datasets as datasets

from config import configurations

from backbone . model_resnet import ResNet_50 , ResNet_101 , ResNet_152

from backbone . model_irse import IR_50 , IR_101 , IR_152 , IR_SE_50 , IR_SE_101 , IR_SE_152

from head . metrics import ArcFace , CosFace , SphereFace , Am_softmax

from loss . focal import FocalLoss

from util . utils import make_weights_for_balanced_classes , get_val_data , separate_irse_bn_paras , separate_resnet_bn_paras , warm_up_lr , schedule_lr , perform_val , get_time , buffer_val , AverageMeter , accuracy

from tensorboardX import SummaryWriter

from tqdm import tqdm

import os

cfg = configurations[1]

SEED = cfg [ 'SEED' ] # random seed for reproduce results

torch . manual_seed ( SEED )

DATA_ROOT = cfg [ 'DATA_ROOT' ] # the parent root where your train/val/test data are stored

MODEL_ROOT = cfg [ 'MODEL_ROOT' ] # the root to buffer your checkpoints

LOG_ROOT = cfg [ 'LOG_ROOT' ] # the root to log your train/val status

BACKBONE_RESUME_ROOT = cfg [ 'BACKBONE_RESUME_ROOT' ] # the root to resume training from a saved checkpoint

HEAD_RESUME_ROOT = cfg [ 'HEAD_RESUME_ROOT' ] # the root to resume training from a saved checkpoint

BACKBONE_NAME = cfg [ 'BACKBONE_NAME' ] # support: ['ResNet_50', 'ResNet_101', 'ResNet_152', 'IR_50', 'IR_101', 'IR_152', 'IR_SE_50', 'IR_SE_101', 'IR_SE_152']

HEAD_NAME = cfg [ 'HEAD_NAME' ] # support: ['Softmax', 'ArcFace', 'CosFace', 'SphereFace', 'Am_softmax']

LOSS_NAME = cfg [ 'LOSS_NAME' ] # support: ['Focal', 'Softmax']

INPUT_SIZE = cfg [ 'INPUT_SIZE' ]

RGB_MEAN = cfg [ 'RGB_MEAN' ] # for normalize inputs

RGB_STD = cfg [ 'RGB_STD' ]

EMBEDDING_SIZE = cfg [ 'EMBEDDING_SIZE' ] # feature dimension

BATCH_SIZE = cfg [ 'BATCH_SIZE' ]

DROP_LAST = cfg [ 'DROP_LAST' ] # whether drop the last batch to ensure consistent batch_norm statistics

LR = cfg [ 'LR' ] # initial LR

NUM_EPOCH = cfg [ 'NUM_EPOCH' ]

WEIGHT_DECAY = cfg [ 'WEIGHT_DECAY' ]

MOMENTUM = cfg [ 'MOMENTUM' ]

STAGES = cfg [ 'STAGES' ] # epoch stages to decay learning rate

DEVICE = cfg [ 'DEVICE' ]

MULTI_GPU = cfg [ 'MULTI_GPU' ] # flag to use multiple GPUs

GPU_ID = cfg [ 'GPU_ID' ] # specify your GPU ids

PIN_MEMORY = cfg [ 'PIN_MEMORY' ]

NUM_WORKERS = cfg [ 'NUM_WORKERS' ]

print ( "=" * 60 )

print ( "Overall Configurations:" )

print ( cfg )

print ( "=" * 60 )

writer = SummaryWriter(LOG_ROOT) # writer for buffering intermediate results

train_transform = transforms.Compose([ # refer to https://pytorch.org/docs/stable/torchvision/transforms.html for more built-in online data augmentation

transforms . Resize ([ int ( 128 * INPUT_SIZE [ 0 ] / 112 ), int ( 128 * INPUT_SIZE [ 0 ] / 112 )]), # smaller side resized

transforms . RandomCrop ([ INPUT_SIZE [ 0 ], INPUT_SIZE [ 1 ]]),

transforms . RandomHorizontalFlip (),

transforms . ToTensor (),

transforms . Normalize ( mean = RGB_MEAN ,

std = RGB_STD ),

])

dataset_train = datasets . ImageFolder ( os . path . join ( DATA_ROOT , 'imgs' ), train_transform )

# create a weighted random sampler to process imbalanced data

weights = make_weights_for_balanced_classes ( dataset_train . imgs , len ( dataset_train . classes ))

weights = torch . DoubleTensor ( weights )

sampler = torch . utils . data . sampler . WeightedRandomSampler ( weights , len ( weights ))

train_loader = torch . utils . data . DataLoader (

dataset_train , batch_size = BATCH_SIZE , sampler = sampler , pin_memory = PIN_MEMORY ,

num_workers = NUM_WORKERS , drop_last = DROP_LAST

)

NUM_CLASS = len ( train_loader . dataset . classes )

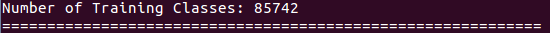

print ( "Number of Training Classes: {}" . format ( NUM_CLASS ))

lfw, cfp_ff, cfp_fp, agedb, calfw, cplfw, vgg2_fp, lfw_issame, cfp_ff_issame, cfp_fp_issame, agedb_issame, calfw_issame, cplfw_issame, vgg2_fp_issame = get_val_data(DATA_ROOT)

BACKBONE_DICT = {'ResNet_50': ResNet_50(INPUT_SIZE),

'ResNet_101' : ResNet_101 ( INPUT_SIZE ),

'ResNet_152' : ResNet_152 ( INPUT_SIZE ),

'IR_50' : IR_50 ( INPUT_SIZE ),

'IR_101' : IR_101 ( INPUT_SIZE ),

'IR_152' : IR_152 ( INPUT_SIZE ),

'IR_SE_50' : IR_SE_50 ( INPUT_SIZE ),

'IR_SE_101' : IR_SE_101 ( INPUT_SIZE ),

'IR_SE_152' : IR_SE_152 ( INPUT_SIZE )}

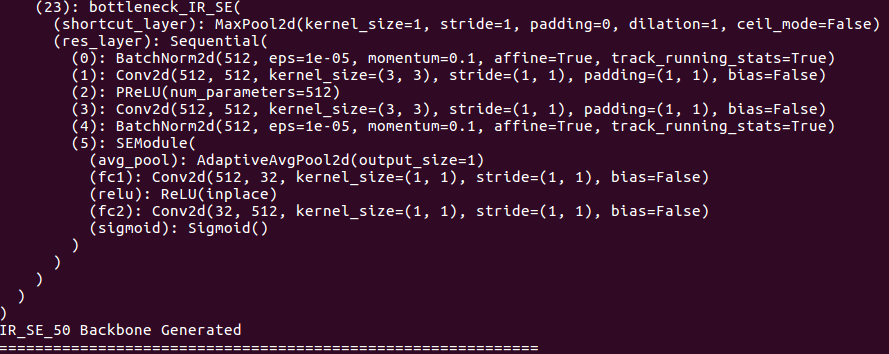

BACKBONE = BACKBONE_DICT [ BACKBONE_NAME ]

print ( "=" * 60 )

print ( BACKBONE )

print ( "{} Backbone Generated" . format ( BACKBONE_NAME ))

print ( "=" * 60 )

HEAD_DICT = { 'ArcFace' : ArcFace ( in_features = EMBEDDING_SIZE , out_features = NUM_CLASS , device_id = GPU_ID ),

'CosFace' : CosFace ( in_features = EMBEDDING_SIZE , out_features = NUM_CLASS , device_id = GPU_ID ),

'SphereFace' : SphereFace ( in_features = EMBEDDING_SIZE , out_features = NUM_CLASS , device_id = GPU_ID ),

'Am_softmax' : Am_softmax ( in_features = EMBEDDING_SIZE , out_features = NUM_CLASS , device_id = GPU_ID )}

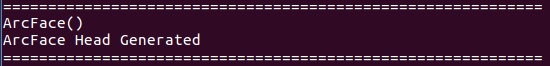

HEAD = HEAD_DICT [ HEAD_NAME ]

print ( "=" * 60 )

print ( HEAD )

print ( "{} Head Generated" . format ( HEAD_NAME ))

print("=" * 60)

LOSS_DICT = {'Focal': FocalLoss(),

'Softmax' : nn . CrossEntropyLoss ()}

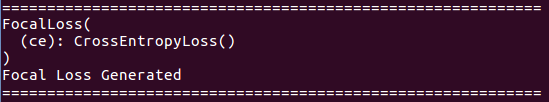

LOSS = LOSS_DICT [ LOSS_NAME ]

print ( "=" * 60 )

print ( LOSS )

print ( "{} Loss Generated" . format ( LOSS_NAME ))

print("=" * 60)

if BACKBONE_NAME.find("IR") >= 0:

    backbone_paras_only_bn, backbone_paras_wo_bn = separate_irse_bn_paras(BACKBONE) # separate batch_norm parameters from others; do not do weight decay for batch_norm parameters to improve the generalizability

    _, head_paras_wo_bn = separate_irse_bn_paras(HEAD)

else:

    backbone_paras_only_bn, backbone_paras_wo_bn = separate_resnet_bn_paras(BACKBONE) # separate batch_norm parameters from others; do not do weight decay for batch_norm parameters to improve the generalizability

    _, head_paras_wo_bn = separate_resnet_bn_paras(HEAD)

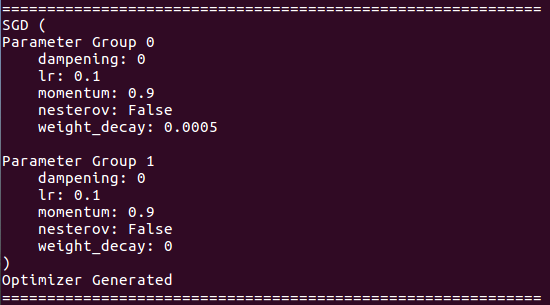

OPTIMIZER = optim . SGD ([{ 'params' : backbone_paras_wo_bn + head_paras_wo_bn , 'weight_decay' : WEIGHT_DECAY }, { 'params' : backbone_paras_only_bn }], lr = LR , momentum = MOMENTUM )

print ( "=" * 60 )

print ( OPTIMIZER )

print ( "Optimizer Generated" )

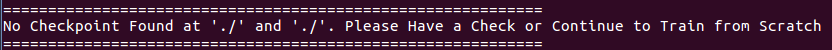

print("=" * 60)

# optionally resume from a checkpoint

if BACKBONE_RESUME_ROOT and HEAD_RESUME_ROOT:

    print("=" * 60)

    if os.path.isfile(BACKBONE_RESUME_ROOT) and os.path.isfile(HEAD_RESUME_ROOT):

        print("Loading Backbone Checkpoint '{}'".format(BACKBONE_RESUME_ROOT))

        BACKBONE.load_state_dict(torch.load(BACKBONE_RESUME_ROOT))

        print("Loading Head Checkpoint '{}'".format(HEAD_RESUME_ROOT))

        HEAD.load_state_dict(torch.load(HEAD_RESUME_ROOT))

    else:

        print("No Checkpoint Found at '{}' and '{}'. Please Have a Check or Continue to Train from Scratch".format(BACKBONE_RESUME_ROOT, HEAD_RESUME_ROOT))

    print("=" * 60)

if MULTI_GPU:

    # multi-GPU setting

    BACKBONE = nn.DataParallel(BACKBONE, device_ids=GPU_ID)

    BACKBONE = BACKBONE.to(DEVICE)

else:

    # single-GPU setting

    BACKBONE = BACKBONE.to(DEVICE)

DISP_FREQ = len(train_loader) // 100 # frequency to display training loss & acc

NUM_EPOCH_WARM_UP = NUM_EPOCH // 25 # use the first 1/25 epochs to warm up

NUM_BATCH_WARM_UP = len ( train_loader ) * NUM_EPOCH_WARM_UP # use the first 1/25 epochs to warm up

batch = 0 # batch index

for epoch in range(NUM_EPOCH): # start training process

    if epoch == STAGES[0]: # adjust LR for each training stage after warm up; you can also choose to adjust LR manually (with slight modification) once a plateau is observed

        schedule_lr(OPTIMIZER)

    if epoch == STAGES[1]:

        schedule_lr(OPTIMIZER)

    if epoch == STAGES[2]:

        schedule_lr(OPTIMIZER)

    BACKBONE.train() # set to training mode

    HEAD.train()

    losses = AverageMeter()

    top1 = AverageMeter()

    top5 = AverageMeter()

    for inputs, labels in tqdm(iter(train_loader)):

        if (epoch + 1 <= NUM_EPOCH_WARM_UP) and (batch + 1 <= NUM_BATCH_WARM_UP): # adjust LR for each training batch during warm up

            warm_up_lr(batch + 1, NUM_BATCH_WARM_UP, LR, OPTIMIZER)

        # compute output

        inputs = inputs.to(DEVICE)

        labels = labels.to(DEVICE).long()

        features = BACKBONE(inputs)

        outputs = HEAD(features, labels)

        loss = LOSS(outputs, labels)

        # measure accuracy and record loss

        prec1, prec5 = accuracy(outputs.data, labels, topk=(1, 5))

        losses.update(loss.data.item(), inputs.size(0))

        top1.update(prec1.data.item(), inputs.size(0))

        top5.update(prec5.data.item(), inputs.size(0))

        # compute gradient and do SGD step

        OPTIMIZER.zero_grad()

        loss.backward()

        OPTIMIZER.step()

        # display training loss & acc every DISP_FREQ

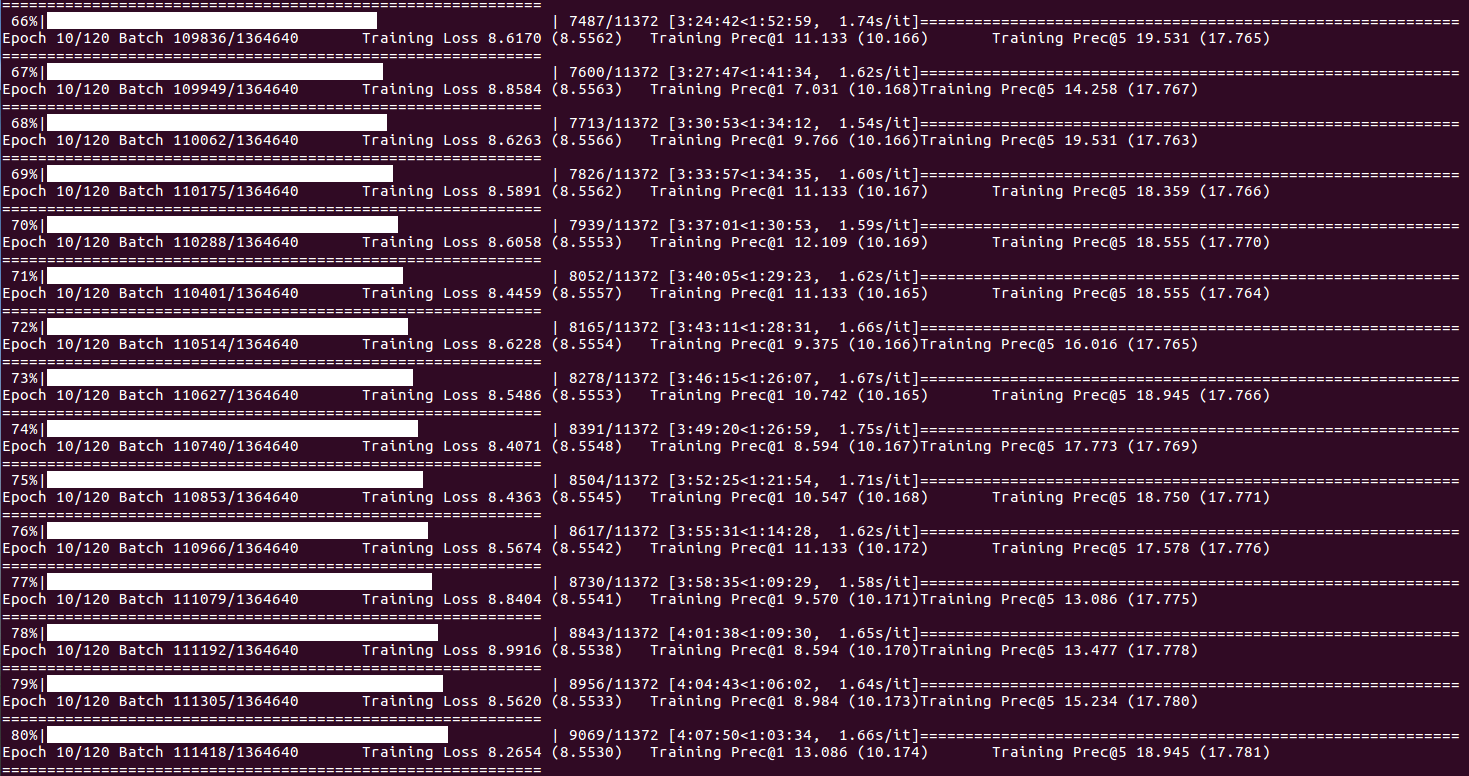

        if ((batch + 1) % DISP_FREQ == 0) and batch != 0:

            print("=" * 60)

            print('Epoch {}/{} Batch {}/{}\t'

                  'Training Loss {loss.val:.4f} ({loss.avg:.4f})\t'

                  'Training Prec@1 {top1.val:.3f} ({top1.avg:.3f})\t'

                  'Training Prec@5 {top5.val:.3f} ({top5.avg:.3f})'.format(

                epoch + 1, NUM_EPOCH, batch + 1, len(train_loader) * NUM_EPOCH, loss=losses, top1=top1, top5=top5))

            print("=" * 60)

        batch += 1 # batch index

    # training statistics per epoch (buffer for visualization)

    epoch_loss = losses.avg

    epoch_acc = top1.avg

    writer.add_scalar("Training_Loss", epoch_loss, epoch + 1)

    writer.add_scalar("Training_Accuracy", epoch_acc, epoch + 1)

    print("=" * 60)

    print('Epoch: {}/{}\t'

          'Training Loss {loss.val:.4f} ({loss.avg:.4f})\t'

          'Training Prec@1 {top1.val:.3f} ({top1.avg:.3f})\t'

          'Training Prec@5 {top5.val:.3f} ({top5.avg:.3f})'.format(

        epoch + 1, NUM_EPOCH, loss=losses, top1=top1, top5=top5))

    print("=" * 60)

    # perform validation & save checkpoints per epoch

    # validation statistics per epoch (buffer for visualization)

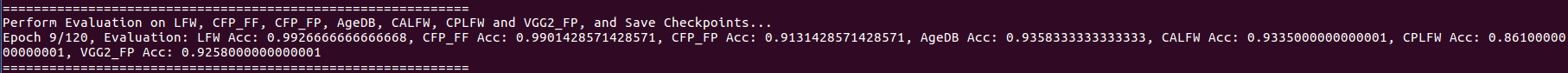

    print("=" * 60)

    print("Perform Evaluation on LFW, CFP_FF, CFP_FP, AgeDB, CALFW, CPLFW and VGG2_FP, and Save Checkpoints...")

    accuracy_lfw, best_threshold_lfw, roc_curve_lfw = perform_val(MULTI_GPU, DEVICE, EMBEDDING_SIZE, BATCH_SIZE, BACKBONE, lfw, lfw_issame)

    buffer_val(writer, "LFW", accuracy_lfw, best_threshold_lfw, roc_curve_lfw, epoch + 1)

    accuracy_cfp_ff, best_threshold_cfp_ff, roc_curve_cfp_ff = perform_val(MULTI_GPU, DEVICE, EMBEDDING_SIZE, BATCH_SIZE, BACKBONE, cfp_ff, cfp_ff_issame)

    buffer_val(writer, "CFP_FF", accuracy_cfp_ff, best_threshold_cfp_ff, roc_curve_cfp_ff, epoch + 1)

    accuracy_cfp_fp, best_threshold_cfp_fp, roc_curve_cfp_fp = perform_val(MULTI_GPU, DEVICE, EMBEDDING_SIZE, BATCH_SIZE, BACKBONE, cfp_fp, cfp_fp_issame)

    buffer_val(writer, "CFP_FP", accuracy_cfp_fp, best_threshold_cfp_fp, roc_curve_cfp_fp, epoch + 1)

    accuracy_agedb, best_threshold_agedb, roc_curve_agedb = perform_val(MULTI_GPU, DEVICE, EMBEDDING_SIZE, BATCH_SIZE, BACKBONE, agedb, agedb_issame)

    buffer_val(writer, "AgeDB", accuracy_agedb, best_threshold_agedb, roc_curve_agedb, epoch + 1)

    accuracy_calfw, best_threshold_calfw, roc_curve_calfw = perform_val(MULTI_GPU, DEVICE, EMBEDDING_SIZE, BATCH_SIZE, BACKBONE, calfw, calfw_issame)

    buffer_val(writer, "CALFW", accuracy_calfw, best_threshold_calfw, roc_curve_calfw, epoch + 1)

    accuracy_cplfw, best_threshold_cplfw, roc_curve_cplfw = perform_val(MULTI_GPU, DEVICE, EMBEDDING_SIZE, BATCH_SIZE, BACKBONE, cplfw, cplfw_issame)

    buffer_val(writer, "CPLFW", accuracy_cplfw, best_threshold_cplfw, roc_curve_cplfw, epoch + 1)

    accuracy_vgg2_fp, best_threshold_vgg2_fp, roc_curve_vgg2_fp = perform_val(MULTI_GPU, DEVICE, EMBEDDING_SIZE, BATCH_SIZE, BACKBONE, vgg2_fp, vgg2_fp_issame)

    buffer_val(writer, "VGGFace2_FP", accuracy_vgg2_fp, best_threshold_vgg2_fp, roc_curve_vgg2_fp, epoch + 1)

    print("Epoch {}/{}, Evaluation: LFW Acc: {}, CFP_FF Acc: {}, CFP_FP Acc: {}, AgeDB Acc: {}, CALFW Acc: {}, CPLFW Acc: {}, VGG2_FP Acc: {}".format(epoch + 1, NUM_EPOCH, accuracy_lfw, accuracy_cfp_ff, accuracy_cfp_fp, accuracy_agedb, accuracy_calfw, accuracy_cplfw, accuracy_vgg2_fp))

    print("=" * 60)

    # save checkpoints per epoch

    if MULTI_GPU:

        # DataParallel wraps the backbone, so save the underlying module

        torch.save(BACKBONE.module.state_dict(), os.path.join(MODEL_ROOT, "Backbone_{}_Epoch_{}_Batch_{}_Time_{}_checkpoint.pth".format(BACKBONE_NAME, epoch + 1, batch, get_time())))

        torch.save(HEAD.state_dict(), os.path.join(MODEL_ROOT, "Head_{}_Epoch_{}_Batch_{}_Time_{}_checkpoint.pth".format(HEAD_NAME, epoch + 1, batch, get_time())))

    else:

        torch.save(BACKBONE.state_dict(), os.path.join(MODEL_ROOT, "Backbone_{}_Epoch_{}_Batch_{}_Time_{}_checkpoint.pth".format(BACKBONE_NAME, epoch + 1, batch, get_time())))

        torch.save(HEAD.state_dict(), os.path.join(MODEL_ROOT, "Head_{}_Epoch_{}_Batch_{}_Time_{}_checkpoint.pth".format(HEAD_NAME, epoch + 1, batch, get_time())))

Now you can start to play with face.evoLVe by running train.py. User-friendly information will pop up on your terminal:

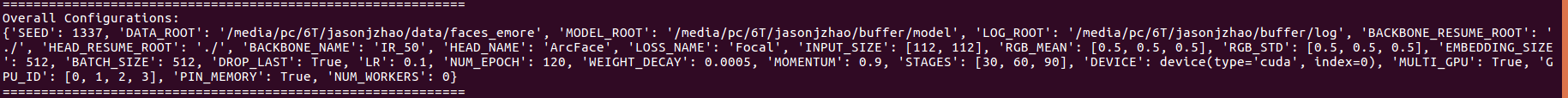

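About overall configuration:

About number of training classes:

About backbone details:

About head details:

About loss details:

About optimizer details:

About resuming training:

About training status & statistics (when the batch index reaches DISP_FREQ or at the end of each epoch):

About validation statistics & saving checkpoints (at the end of each epoch):

Monitor on-the-fly GPU occupancy with watch -d -n 0.01 nvidia-smi.

Please refer to Sec. Model Zoo for specific model weights and corresponding performance.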
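The learning-rate policy that train.py drives through warm_up_lr and schedule_lr can be pictured with a small standalone function. This is a sketch under assumptions, since the bodies of those two helpers are not shown here: it assumes a linear warm-up over the first NUM_EPOCH // 25 epochs and a decay by a factor of 10 each time an epoch listed in STAGES is reached. `lr_at` and its `decay` argument are hypothetical.

```python
def lr_at(epoch, batch, batches_per_epoch, base_lr, num_epoch, stages, decay=0.1):
    """Learning rate for a 0-based epoch and a 0-based global batch index.

    Assumptions (not confirmed by the source): linear warm-up, then the
    rate is multiplied by `decay` once per stage boundary already reached.
    """
    warm_up_epochs = num_epoch // 25
    warm_up_batches = batches_per_epoch * warm_up_epochs
    if epoch + 1 <= warm_up_epochs and batch + 1 <= warm_up_batches:
        return base_lr * (batch + 1) / warm_up_batches
    stages_passed = sum(1 for s in stages if epoch >= s)
    return base_lr * decay ** stages_passed


if __name__ == "__main__":
    # Values taken from config.py above; 100 batches per epoch is made up.
    for epoch in (0, 4, 34, 35, 65, 95):
        print(epoch, lr_at(epoch, epoch * 100, 100, 0.1, 125, [35, 65, 95]))
```

With NUM_EPOCH = 125 the warm-up covers the first 5 epochs, after which the rate stays at LR until the first entry of STAGES.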

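Feature extraction API (extract features from pre-trained models): ./util/extract_feature_v1.py (implemented with PyTorch built-in functions) and ./util/extract_feature_v2.py (implemented with OpenCV).

Visualize training & validation statistics with tensorboardX (see Sec. Model Zoo):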

tensorboard --logdir /media/pc/6T/jasonjzhao/buffer/log


| Database | Version | #Identity | #Image | #Frame | #Video | Download Link |
|---|---|---|---|---|---|---|
| LFW | Raw | 5,749 | 13,233 | - | - | Google Drive, Baidu Drive |
| LFW | Align_250x250 | 5,749 | 13,233 | - | - | Google Drive, Baidu Drive |
| LFW | Align_112x112 | 5,749 | 13,233 | - | - | Google Drive, Baidu Drive |
| CALFW | Raw | 4,025 | 12,174 | - | - | Google Drive, Baidu Drive |
| CALFW | Align_112x112 | 4,025 | 12,174 | - | - | Google Drive, Baidu Drive |
| CPLFW | Raw | 3,884 | 11,652 | - | - | Google Drive, Baidu Drive |
| CPLFW | Align_112x112 | 3,884 | 11,652 | - | - | Google Drive, Baidu Drive |
| CASIA-WebFace | Raw_v1 | 10,575 | 494,414 | - | - | Baidu Drive |
| CASIA-WebFace | Raw_v2 | 10,575 | 494,414 | - | - | Google Drive, Baidu Drive |
| CASIA-WebFace | Clean | 10,575 | 455,594 | - | - | Google Drive, Baidu Drive |
| MS-Celeb-1M | Clean | 100,000 | 5,084,127 | - | - | Google Drive |
| MS-Celeb-1M | Align_112x112 | 85,742 | 5,822,653 | - | - | Google Drive |
| VGGFace2 | Clean | 8,631 | 3,086,894 | - | - | Google Drive |
| VGGFace2_FP | Align_112x112 | - | - | - | - | Google Drive, Baidu Drive |
| AgeDB | Raw | 570 | 16,488 | - | - | Google Drive, Baidu Drive |
| AgeDB | Align_112x112 | 570 | 16,488 | - | - | Google Drive, Baidu Drive |
| IJB-A | Clean | 500 | 5,396 | 20,369 | 2,085 | Google Drive, Baidu Drive |
| IJB-B | Raw | 1,845 | 21,798 | 55,026 | 7,011 | Google Drive |
| CFP | Raw | 500 | 7,000 | - | - | Google Drive, Baidu Drive |
| CFP | Align_112x112 | 500 | 7,000 | - | - | Google Drive, Baidu Drive |
| UMDFaces | Align_112x112 | 8,277 | 367,888 | - | - | Google Drive, Baidu Drive |
| CelebA | Raw | 10,177 | 202,599 | - | - | Google Drive, Baidu Drive |
| CACD-VS | Raw | 2,000 | 163,446 | - | - | Google Drive, Baidu Drive |
| YTF | Align_344x344 | 1,595 | - | 3,425 | 621,127 | Google Drive, Baidu Drive |
| DeepGlint | Align_112x112 | 180,855 | 6,753,545 | - | - | Google Drive |
| UTKFace | Align_200x200 | - | 23,708 | - | - | Google Drive, Baidu Drive |
| BUAA-VisNir | Align_287x287 | 150 | 5,952 | - | - | Baidu Drive, PW:XMBC |
| CASIA NIR-VIS 2.0 | Align_128x128 | 725 | 17,580 | - | - | Baidu Drive, PW:883B |
| Oulu-CASIA | Raw | 80 | 65,000 | - | - | Baidu Drive, PW:XXP5 |
| NUAA-ImposterDB | Raw | 15 | 12,614 | - | - | Baidu Drive, PW:IF3N |
| CASIA-SURF | Raw | 1,000 | - | - | 21,000 | Baidu Drive, PW:IZB3 |
| CASIA-FASD | Raw | 50 | - | - | 600 | Baidu Drive, PW:H5UN |
| CASIA-MFSD | Raw | 50 | - | - | 600 | |
| Replay-Attack | Raw | 50 | - | - | 1200 | |
| WebFace260M | Raw | 24M | 2M | - | | https://www.face-benchmark.org/ |

unzip casia-maxpy-clean.zip

cd casia-maxpy-clean

zip -F CASIA-maxpy-clean.zip --out CASIA-maxpy-clean_fix.zip

unzip CASIA-maxpy-clean_fix.zip

import numpy as np

import bcolz

import os

def get_pair(root, name):

    # load one validation set: the image array and the same/different labels of its pairs

    carray = bcolz.carray(rootdir=os.path.join(root, name), mode='r')

    issame = np.load('{}/{}_list.npy'.format(root, name))

    return carray, issame

def get_data(data_root):

    agedb_30, agedb_30_issame = get_pair(data_root, 'agedb_30')

    cfp_fp, cfp_fp_issame = get_pair(data_root, 'cfp_fp')

    lfw, lfw_issame = get_pair(data_root, 'lfw')

    vgg2_fp, vgg2_fp_issame = get_pair(data_root, 'vgg2_fp')

    return agedb_30, cfp_fp, lfw, vgg2_fp, agedb_30_issame, cfp_fp_issame, lfw_issame, vgg2_fp_issame

# DATA_ROOT is the folder that holds the validation sets, e.g. the DATA_ROOT entry of config.py

agedb_30, cfp_fp, lfw, vgg2_fp, agedb_30_issame, cfp_fp_issame, lfw_issame, vgg2_fp_issame = get_data(DATA_ROOT)

We share MS-Celeb-1M_Top1M_MID2Name.tsv (Google Drive, Baidu Drive), VGGface2_ID2Name.csv (Google Drive, Baidu Drive), VGGface2_FaceScrub_Overlap.txt (Google Drive, Baidu Drive), VGGface2_LFW_Overlap.txt (Google Drive, Baidu Drive), CASIA-WebFace_ID2Name.txt (Google Drive, Baidu Drive), CASIA-WebFace_FaceScrub_Overlap.txt (Google Drive, Baidu Drive), CASIA-WebFace_LFW_Overlap.txt (Google Drive, Baidu Drive), FaceScrub_Name.txt (Google Drive, Baidu Drive), LFW_Name.txt (Google Drive, Baidu Drive) and LFW_Log.txt (Google Drive, Baidu Drive) to help researchers/engineers quickly remove the overlapping parts between their own private datasets and the public datasets.
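One way such a name list could be used to de-duplicate a private dataset is sketched below. The file format is an assumption: the sketch treats a list as plain text with one identity name per line, which may not match the shared files exactly, and it matches names case-insensitively. `load_names` and `drop_overlap` are hypothetical helpers.

```python
def load_names(path):
    """Read one identity name per line, ignoring blank lines (assumed format)."""
    with open(path, encoding="utf-8") as f:
        return {line.strip() for line in f if line.strip()}


def drop_overlap(private_ids, overlap_names):
    """Keep only the private identities whose name is not in overlap_names.

    Matching is case-insensitive; the input order is preserved.
    """
    overlap = {name.lower() for name in overlap_names}
    return [pid for pid in private_ids if pid.lower() not in overlap]


if __name__ == "__main__":
    # Made-up names, for illustration only.
    private = ["Alice_Smith", "Bob_Lee", "Carol_Wu"]
    public = {"bob_lee"}
    print(drop_overlap(private, public))
```

Real name lists often differ in spelling or separators, so a normalization step tailored to your own data is usually needed before matching.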

Model

| Backbone | Head | Loss | Training Data | Download Link |
|---|---|---|---|---|
| IR-50 | ArcFace | Focal | MS-Celeb-1M_Align_112x112 | Google Drive, Baidu Drive |

Setting

INPUT_SIZE: [112, 112]; RGB_MEAN: [0.5, 0.5, 0.5]; RGB_STD: [0.5, 0.5, 0.5]; BATCH_SIZE: 512 (drop the last batch to ensure consistent batch_norm statistics); Initial LR: 0.1; NUM_EPOCH: 120; WEIGHT_DECAY: 5e-4 (do not apply to batch_norm parameters); MOMENTUM: 0.9; STAGES: [30, 60, 90]; Augmentation: Random Crop + Horizontal Flip; Imbalanced Data Processing: Weighted Random Sampling; Solver: SGD; GPUs: 4 NVIDIA Tesla P40 in Parallel

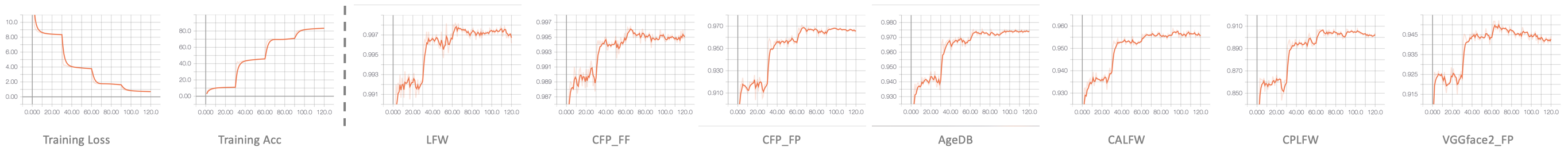

Training & validation statistics

Performance

| LFW | CFP_FF | CFP_FP | AgeDB | CALFW | CPLFW | VGGFace2_FP |
|---|---|---|---|---|---|---|
| 99.78 | 99.69 | 98.14 | 97.53 | 95.87 | 92.45 | 95.22 |
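The "Weighted Random Sampling" entry in the setting above refers to drawing training samples with per-sample weights so that rare identities are seen as often as frequent ones. A minimal sketch of one common weighting scheme follows. It is an assumption that make_weights_for_balanced_classes in train.py behaves this way; `balanced_sample_weights` is a hypothetical stand-in, not the repo's function.

```python
from collections import Counter


def balanced_sample_weights(labels):
    """Give each sample the weight N / count(class of the sample).

    Every class then carries the same total weight, so a weighted sampler
    draws from all classes with equal probability.
    """
    counts = Counter(labels)
    total = len(labels)
    return [total / counts[label] for label in labels]


if __name__ == "__main__":
    labels = [0, 0, 0, 1]  # class 0 has three samples, class 1 has one
    print(balanced_sample_weights(labels))
```

In train.py, weights of this kind are wrapped in a tensor and passed to torch.utils.data.sampler.WeightedRandomSampler, which then feeds the DataLoader.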

Model

| Backbone | Head | Loss | Training Data | Download Link |
|---|---|---|---|---|
| IR-50 | ArcFace | Focal | Private Asia face data | Google Drive, Baidu Drive |

Setting

INPUT_SIZE: [112, 112]; RGB_MEAN: [0.5, 0.5, 0.5]; RGB_STD: [0.5, 0.5, 0.5]; BATCH_SIZE: 1024 (drop the last batch to ensure consistent batch_norm statistics); Initial LR: 0.01 (initialize weights from the above model pre-trained on MS-Celeb-1M_Align_112x112); NUM_EPOCH: 80; WEIGHT_DECAY: 5e-4 (do not apply to batch_norm parameters); MOMENTUM: 0.9; STAGES: [20, 40, 60]; Augmentation: Random Crop + Horizontal Flip; Imbalanced Data Processing: Weighted Random Sampling; Solver: SGD; GPUs: 8 NVIDIA Tesla P40 in Parallel

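Performance (please perform the evaluation on your own Asia face benchmark dataset)

Model

| Backbone | Head | Loss | Training Data | Download Link |
|---|---|---|---|---|
| IR-152 | ArcFace | Focal | MS-Celeb-1M_Align_112x112 | Baidu Drive, PW:B197 |

Setting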

INPUT_SIZE: [112, 112]; RGB_MEAN: [0.5, 0.5, 0.5]; RGB_STD: [0.5, 0.5, 0.5]; BATCH_SIZE: 256 (drop the last batch to ensure consistent batch_norm statistics); Initial LR: 0.01; NUM_EPOCH: 120; WEIGHT_DECAY: 5e-4 (do not apply to batch_norm parameters); MOMENTUM: 0.9; STAGES: [30, 60, 90]; Augmentation: Random Crop + Horizontal Flip; Imbalanced Data Processing: Weighted Random Sampling; Solver: SGD; GPUs: 4 NVIDIA Geforce RTX 2080 Ti in Parallel

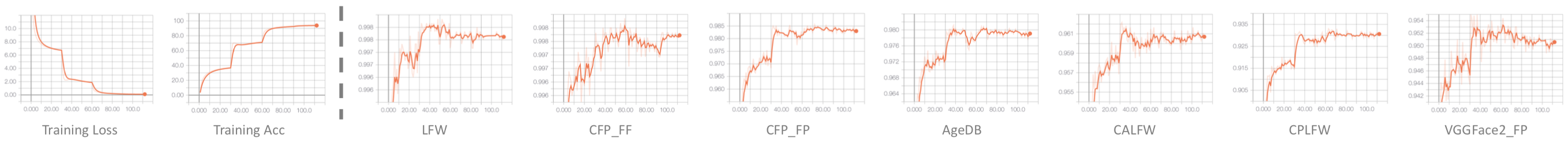

Training & validation statistics

Performance

| LFW | CFP_FF | CFP_FP | AgeDB | CALFW | CPLFW | VGGFace2_FP |
|---|---|---|---|---|---|---|
| 99.82 | 99.83 | 98.37 | 98.07 | 96.03 | 93.05 | 95.50 |

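2017: ICCV 2017 MS-Celeb-1M Large-Scale Face Recognition Hard Set/Random Set/Low-Shot Learning Challenges. WeChat News, NUS ECE News, NUS ECE Poster, Award Certificate for Track-1, Award Certificate for Track-2, Award Ceremony.

2017: No. 1 on the National Institute of Standards and Technology (NIST) IARPA Janus Benchmark A (IJB-A) Unconstrained Face Verification challenge and Identification challenge. WeChat News.

State-of-the-art performance

Please consult and consider citing the following papers: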

@article{wu20223d,

title={3D-Guided Frontal Face Generation for Pose-Invariant Recognition},

author={Wu, Hao and Gu, Jianyang and Fan, Xiaojin and Li, He and Xie, Lidong and Zhao, Jian},

journal={T-IST},

year={2022}

}

@article{wang2021face,

title={Face.evoLVe: A High-Performance Face Recognition Library},

author={Wang, Qingzhong and Zhang, Pengfei and Xiong, Haoyi and Zhao, Jian},

journal={arXiv preprint arXiv:2107.08621},

year={2021}

}

@article{tu2021joint,

title={Joint Face Image Restoration and Frontalization for Recognition},

author={Tu, Xiaoguang and Zhao, Jian and Liu, Qiankun and Ai, Wenjie and Guo, Guodong and Li, Zhifeng and Liu, Wei and Feng, Jiashi},

journal={T-CSVT},

year={2021}

}

@article{zhao2020towards,

title={Towards age-invariant face recognition},

author={Zhao, Jian and Yan, Shuicheng and Feng, Jiashi},

journal={T-PAMI},

year={2020}

}

@article{zhao2019recognizing,

title={Recognizing Profile Faces by Imagining Frontal View},

author={Zhao, Jian and Xing, Junliang and Xiong, Lin and Yan, Shuicheng and Feng, Jiashi},

journal={IJCV},

pages={1--19},

year={2019}

}

@inproceedings{zhao2019multi,

title={Multi-Prototype Networks for Unconstrained Set-based Face Recognition},

author={Zhao, Jian and Li, Jianshu and Tu, Xiaoguang and Zhao, Fang and Xin, Yuan and Xing, Junliang and Liu, Hengzhu and Yan, Shuicheng and Feng, Jiashi},

booktitle={IJCAI},

year={2019}

}

@inproceedings{zhao2019look,

title={Look Across Elapse: Disentangled Representation Learning and Photorealistic Cross-Age Face Synthesis for Age-Invariant Face Recognition},

author={Zhao, Jian and Cheng, Yu and Cheng, Yi and Yang, Yang and Lan, Haochong and Zhao, Fang and Xiong, Lin and Xu, Yan and Li, Jianshu and Pranata, Sugiri and others},

booktitle={AAAI},

year={2019}

}

@article{zhao20183d,

title={3D-Aided Dual-Agent GANs for Unconstrained Face Recognition},

author={Zhao, Jian and Xiong, Lin and Li, Jianshu and Xing, Junliang and Yan, Shuicheng and Feng, Jiashi},

journal={T-PAMI},

year={2018}

}

@inproceedings{zhao2018towards,

title={Towards Pose Invariant Face Recognition in the Wild},

author={Zhao, Jian and Cheng, Yu and Xu, Yan and Xiong, Lin and Li, Jianshu and Zhao, Fang and Jayashree, Karlekar and Pranata, Sugiri and Shen, Shengmei and Xing, Junliang and others},

booktitle={CVPR},

pages={2207--2216},

year={2018}

}

@inproceedings{zhao3d,

title={3D-Aided Deep Pose-Invariant Face Recognition},

author={Zhao, Jian and Xiong, Lin and Cheng, Yu and Cheng, Yi and Li, Jianshu and Zhou, Li and Xu, Yan and Karlekar, Jayashree and Pranata, Sugiri and Shen, Shengmei and others},

booktitle={IJCAI},

pages={1184--1190},

year={2018}

}

@inproceedings{zhao2018dynamic,

title={Dynamic Conditional Networks for Few-Shot Learning},

author={Zhao, Fang and Zhao, Jian and Yan, Shuicheng and Feng, Jiashi},

booktitle={ECCV},

pages={19--35},

year={2018}

}

@inproceedings{zhao2017dual,

title={Dual-agent gans for photorealistic and identity preserving profile face synthesis},

author={Zhao, Jian and Xiong, Lin and Jayashree, Karlekar and Li, Jianshu and Zhao, Fang and Wang, Zhecan and Pranata, Sugiri and Shen, Shengmei and Yan, Shuicheng and Feng, Jiashi},

booktitle={NeurIPS},

pages={66--76},

year={2017}

}

@inproceedings{zhao122017marginalized,

title={Marginalized cnn: Learning deep invariant representations},

author={Zhao, Jian and Li, Jianshu and Zhao, Fang and Yan, Shuicheng and Feng, Jiashi},

booktitle={BMVC},

year={2017}

}

@inproceedings{cheng2017know,

title={Know you at one glance: A compact vector representation for low-shot learning},

author={Cheng, Yu and Zhao, Jian and Wang, Zhecan and Xu, Yan and Jayashree, Karlekar and Shen, Shengmei and Feng, Jiashi},

booktitle={ICCVW},

pages={1924--1932},

year={2017}

}

@inproceedings{wangconditional,

title={Conditional Dual-Agent GANs for Photorealistic and Annotation Preserving Image Synthesis},

author={Wang, Zhecan and Zhao, Jian and Cheng, Yu and Xiao, Shengtao and Li, Jianshu and Zhao, Fang and Feng, Jiashi and Kassim, Ashraf},

booktitle={BMVCW},

}