WebArena

v0.2.0

Website • Paper • Leaderboard

Important

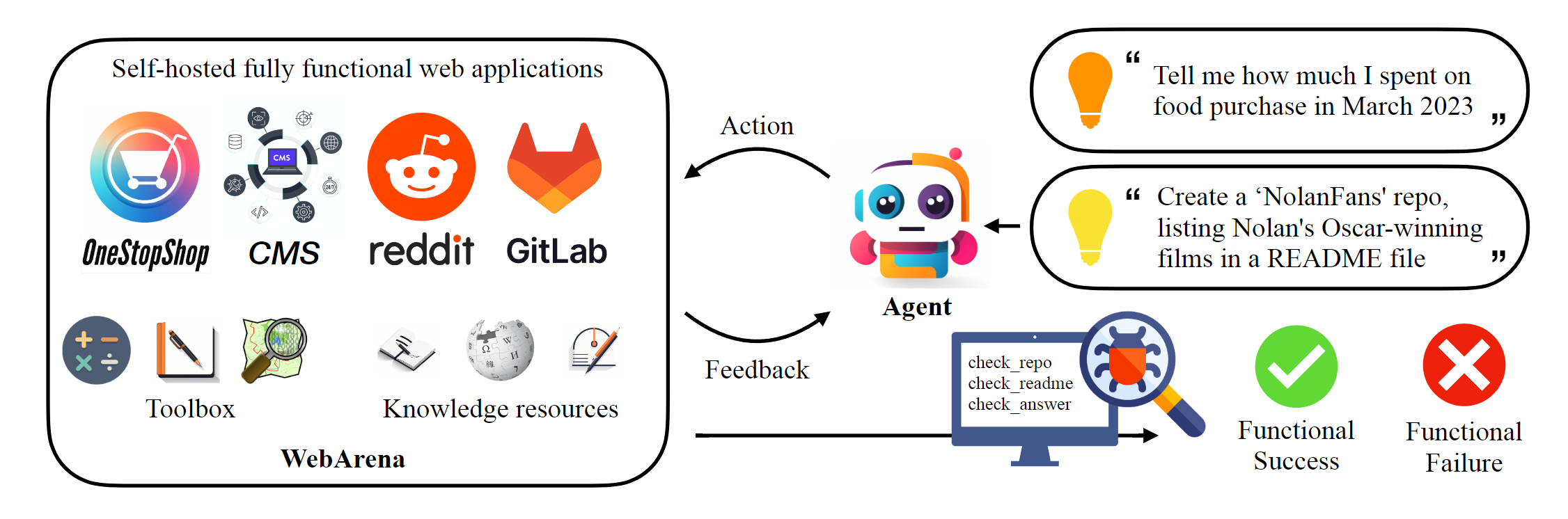

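This repository hosts the canonical implementation of WebArena for reproducing the results reported in the paper. The web navigation infrastructure has been significantly enhanced by AgentLab, which introduces several key features through BrowserGym: (1) support for running browser experiments in parallel, (2) integration of popular web navigation benchmarks (e.g., VisualWebArena) within a unified framework, (3) unified leaderboard reporting, and (4) improved handling of environment edge cases. We strongly recommend using this framework for your experiments.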

# Python 3.10+

conda create -n webarena python=3.10 ; conda activate webarena

pip install -r requirements.txt

playwright install

pip install -e .

# optional, dev only

pip install -e ".[dev]"

mypy --install-types --non-interactive browser_env agents evaluation_harness

pip install pre-commit

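pre-commit install

Check out this script for a quick walkthrough of how to set up the browser environment and interact with it using the demo sites we host. The script is for educational purposes only; to run reproducible experiments, see the next section. In short, using WebArena is very similar to using OpenAI Gym. The following code snippet shows how to interact with the environment.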

import random

from browser_env import ScriptBrowserEnv, create_id_based_action

# init the environment

env = ScriptBrowserEnv(

    headless=False,

    observation_type="accessibility_tree",

    current_viewport_only=True,

    viewport_size={"width": 1280, "height": 720},

)

# prepare the environment for a configuration defined in a json file

config_file = "config_files/0.json"

obs, info = env.reset(options={"config_file": config_file})

# get the text observation (e.g., html, accessibility tree) through obs["text"]

# create a random action: pick an element id and substitute it into the action string

element_id = random.randint(0, 1000)

action = create_id_based_action(f"click [{element_id}]")

# take the action

obs, _, terminated, _, info = env.step(action)

Important

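To ensure correct evaluation, please set up your own WebArena websites by following step 1 and step 2. The demo sites are for browsing only, to help you better understand the content. After evaluating the 812 examples, reset the environment to its initial state by following the instructions.

Set up the standalone environment. Please check out this page for details.

Configure the URLs for each website.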

export SHOPPING="<your_shopping_site_domain>:7770"

export SHOPPING_ADMIN="<your_e_commerce_cms_domain>:7780/admin"

export REDDIT="<your_reddit_domain>:9999"

export GITLAB="<your_gitlab_domain>:8023"

export MAP="<your_map_domain>:3000"

export WIKIPEDIA="<your_wikipedia_domain>:8888/wikipedia_en_all_maxi_2022-05/A/User:The_other_Kiwix_guy/Landing"

export HOMEPAGE="<your_homepage_domain>:4399" # this is a placeholder

You are encouraged to update the environment variables in the GitHub workflow to ensure the correctness of the unit tests.
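Before moving on, it can help to confirm that every site variable is actually set in the current shell. The following is a minimal sketch and not part of the repository: the helper name `missing_site_vars` is hypothetical, and the variable names are taken from the export commands above.

```python
import os

# Environment variables named in the export commands above.
SITE_VARS = [
    "SHOPPING", "SHOPPING_ADMIN", "REDDIT", "GITLAB",
    "MAP", "WIKIPEDIA", "HOMEPAGE",
]


def missing_site_vars(environ=None):
    """Return the names of site variables that are unset or blank.

    `environ` defaults to os.environ; passing a plain dict makes the
    check easy to exercise without touching the real environment.
    """
    env = os.environ if environ is None else environ
    return [name for name in SITE_VARS if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_site_vars()
    if missing:
        print("Unset site variables:", ", ".join(missing))
    else:
        print("All site variables are set.")
```

The check only verifies that a value is present; it does not verify that the site is reachable.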

python scripts/generate_test_data.py

You will see *.json files generated in the config_files folder. Each file contains the configuration for one test example.
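To inspect what was generated, the files can be loaded into a dictionary keyed by example index. This is a sketch under one assumption: that the files are named by index, as in config_files/0.json above. The helper `load_configs` is hypothetical, and no assumption is made about the fields inside each file.

```python
import json
from pathlib import Path


def load_configs(config_dir="config_files"):
    """Load every numerically named *.json file in config_dir.

    Returns a dict mapping the integer index (0 for 0.json) to the
    parsed JSON content, ordered by index.
    """
    configs = {}
    for path in Path(config_dir).glob("*.json"):
        if path.stem.isdigit():  # skip JSON files that are not named by index
            with path.open(encoding="utf-8") as f:
                configs[int(path.stem)] = json.load(f)
    return dict(sorted(configs.items()))


if __name__ == "__main__":
    configs = load_configs()
    print(f"Loaded {len(configs)} test example configurations.")
```

Sorting by integer index rather than by file name keeps 10.json after 2.json.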

mkdir -p ./.auth

python browser_env/auto_login.py

Set your API key with export OPENAI_API_KEY=your_key. A valid OpenAI API key starts with sk-.

Launch the evaluation
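A quick format check can catch a missing or mistyped key before a long run starts. This sketch relies only on the sk- prefix mentioned above; the helper name `looks_like_openai_key` is hypothetical, and the check does not contact the API or confirm that the key is active.

```python
import os


def looks_like_openai_key(value):
    """Format check only: a string that starts with 'sk-' and has more after it."""
    return isinstance(value, str) and value.startswith("sk-") and len(value) > len("sk-")


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if looks_like_openai_key(key):
        print("OPENAI_API_KEY has the expected prefix.")
    else:
        print("OPENAI_API_KEY is missing or does not start with sk-.")
```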

python run.py \
  --instruction_path agent/prompts/jsons/p_cot_id_actree_2s.json \
  --test_start_idx 0 \
  --test_end_idx 1 \
  --model gpt-3.5-turbo \
  --result_dir <your_result_dir>

This script runs the first example with the GPT-3.5 reasoning agent. The instruction path above points to the reasoning agent prompt used in the paper. The trajectory will be saved in <your_result_dir>/0.html.
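To cover more than one example, the same command can be generated for consecutive index ranges. The sketch below only builds the argument lists and does not execute them. It assumes the end index is exclusive, which is inferred from the command above (start 0, end 1 runs only the first example). The helper `build_run_commands` and the chunk size are hypothetical; the flags and the total of 812 examples come from this README.

```python
import shlex


def build_run_commands(total, chunk_size, result_dir,
                       instruction_path="agent/prompts/jsons/p_cot_id_actree_2s.json",
                       model="gpt-3.5-turbo"):
    """Return one run.py argument list per [start, end) slice of the examples."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    commands = []
    for start in range(0, total, chunk_size):
        end = min(start + chunk_size, total)  # assumed exclusive upper bound
        commands.append([
            "python", "run.py",
            "--instruction_path", instruction_path,
            "--test_start_idx", str(start),
            "--test_end_idx", str(end),
            "--model", model,
            "--result_dir", result_dir,
        ])
    return commands


if __name__ == "__main__":
    # Print shell-ready commands for all 812 examples in chunks of 300.
    for cmd in build_run_commands(total=812, chunk_size=300, result_dir="results"):
        print(shlex.join(cmd))
```

Each list can be passed to subprocess.run as-is. Keeping the arguments as a list avoids shell quoting issues in the result directory path.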

prompt = {

"intro": <The overall guideline, which includes the task description, available actions, hints, and others>,

"examples" : [

(

example_1_observation ,

example_1_response

),

(

example_2_observation ,

example_2_response

),

...

],

"template": <How to organize different information such as observation, previous action, instruction, url>,

"meta_data": {

"observation": <Which observation space the agent uses>,

"action_type": <Which action space the agent uses>,

"keywords": <The keywords used in the template; the program will later enumerate all keywords in the template to check that each one is correctly replaced with content>,

"prompt_constructor": <Which prompt constructor is in use; the prompt constructor builds the input fed to an LLM and extracts the action from the generation, more details below>,

"action_splitter": <The splitter inside which the action can be extracted; used by the prompt constructor>

}

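}

The prompt constructor has two methods:

construct: constructs the input fed to an LLM

_extract_action: given the generation from an LLM, extracts the phrase that corresponds to the action

If you use our environment or data, please cite our paper: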

@article{zhou2023webarena,

title={WebArena: A Realistic Web Environment for Building Autonomous Agents},

author={Zhou, Shuyan and Xu, Frank F and Zhu, Hao and Zhou, Xuhui and Lo, Robert and Sridhar, Abishek and Cheng, Xianyi and Bisk, Yonatan and Fried, Daniel and Alon, Uri and others},

journal={arXiv preprint arXiv:2307.13854},

year={2023}

}
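As an illustration of the prompt format described earlier, the sketch below shows one way the keyword check and the action extraction could work. It is not the repository's implementation. It assumes that keywords appear in the template as {keyword} placeholders and that the action splitter wraps the action on both sides. The function names `fill_template` and `extract_action` and the "@@" splitter are hypothetical.

```python
import re


def fill_template(template, keywords, values):
    """Replace every {keyword} placeholder in a single pass.

    Raises ValueError if a declared keyword does not occur in the
    template, and KeyError if no value was supplied for a keyword.
    """
    if not keywords:
        return template
    absent = [k for k in keywords if "{" + k + "}" not in template]
    if absent:
        raise ValueError(f"keywords not found in template: {absent}")
    unsupplied = [k for k in keywords if k not in values]
    if unsupplied:
        raise KeyError(f"no value supplied for: {unsupplied}")
    # One regex pass, so placeholder-like text inside a value is left alone.
    pattern = re.compile("|".join(re.escape("{" + k + "}") for k in keywords))
    return pattern.sub(lambda m: str(values[m.group(0)[1:-1]]), template)


def extract_action(response, action_splitter):
    """Return the text between the first pair of splitters, or None."""
    s = re.escape(action_splitter)
    match = re.search(f"{s}(.+?){s}", response, flags=re.DOTALL)
    return match.group(1).strip() if match else None


if __name__ == "__main__":
    template = "URL: {url}\nOBJECTIVE: {objective}"
    print(fill_template(template, ["url", "objective"],
                        {"url": "http://example.com", "objective": "find a product"}))
    print(extract_action("I will click the button. @@click [12]@@", "@@"))
```

Substituting in a single pass means an observation that happens to contain placeholder-like text is not rewritten a second time.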