AgentOps helps developers build, evaluate, and monitor AI agents, from prototype to production.

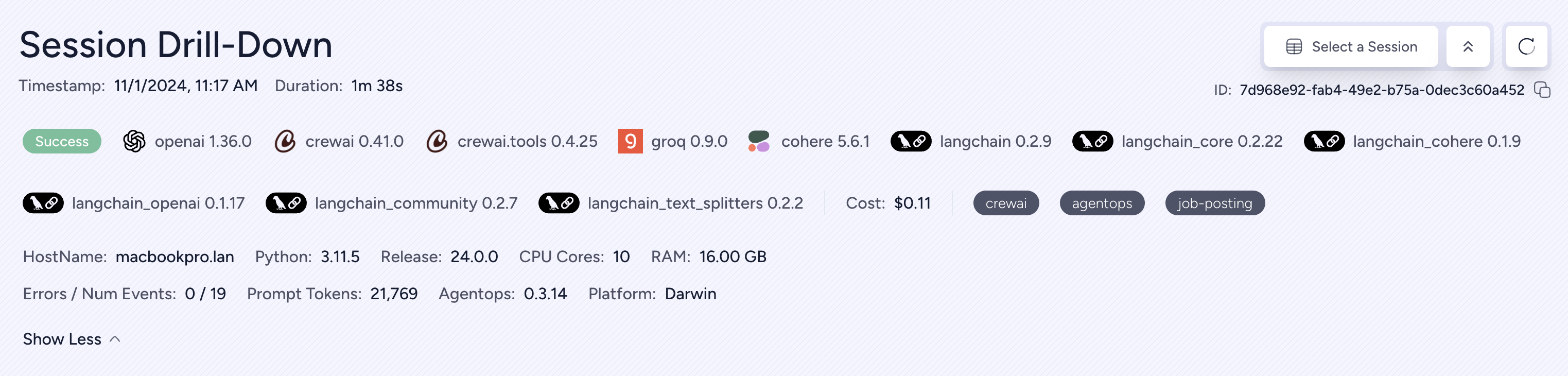

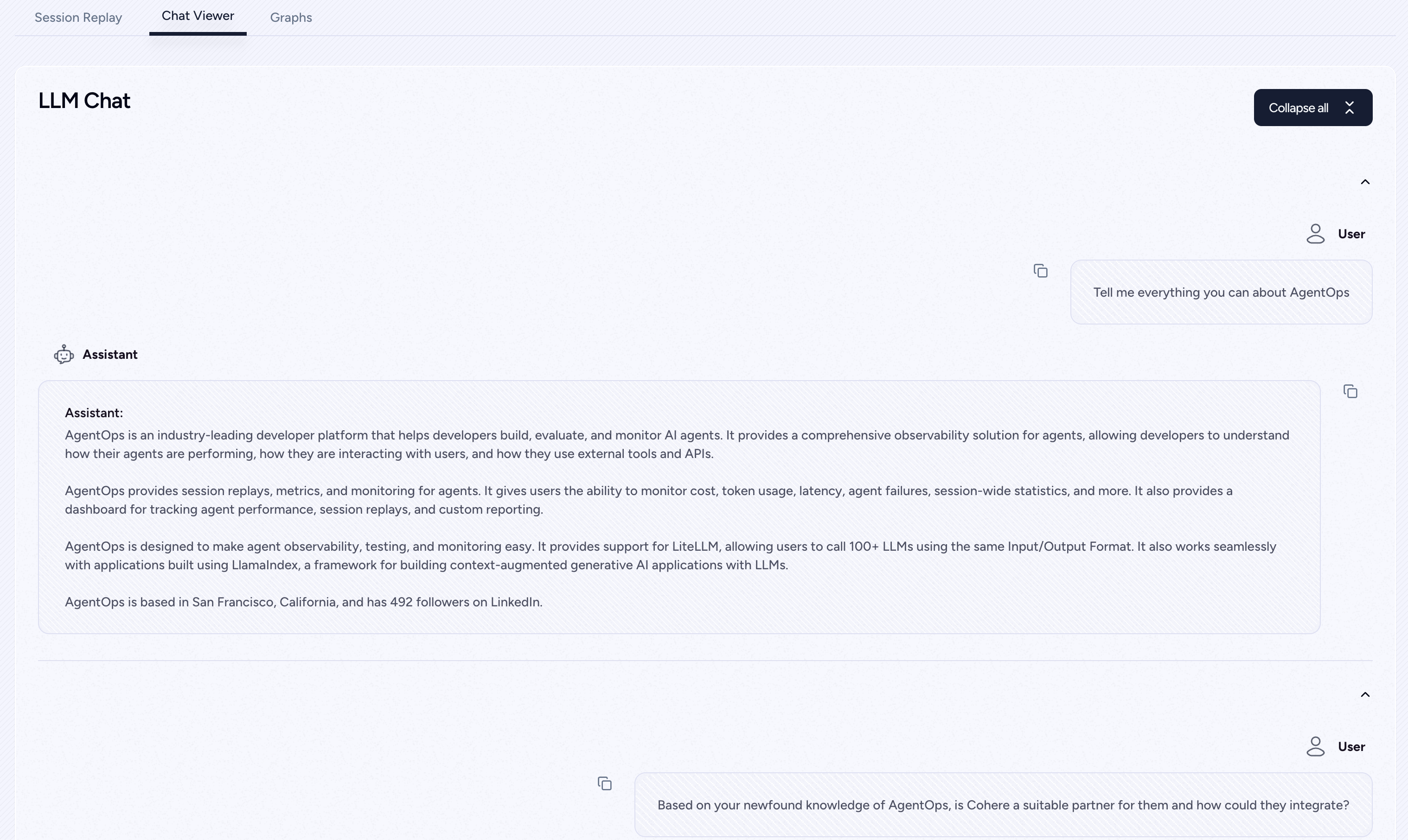

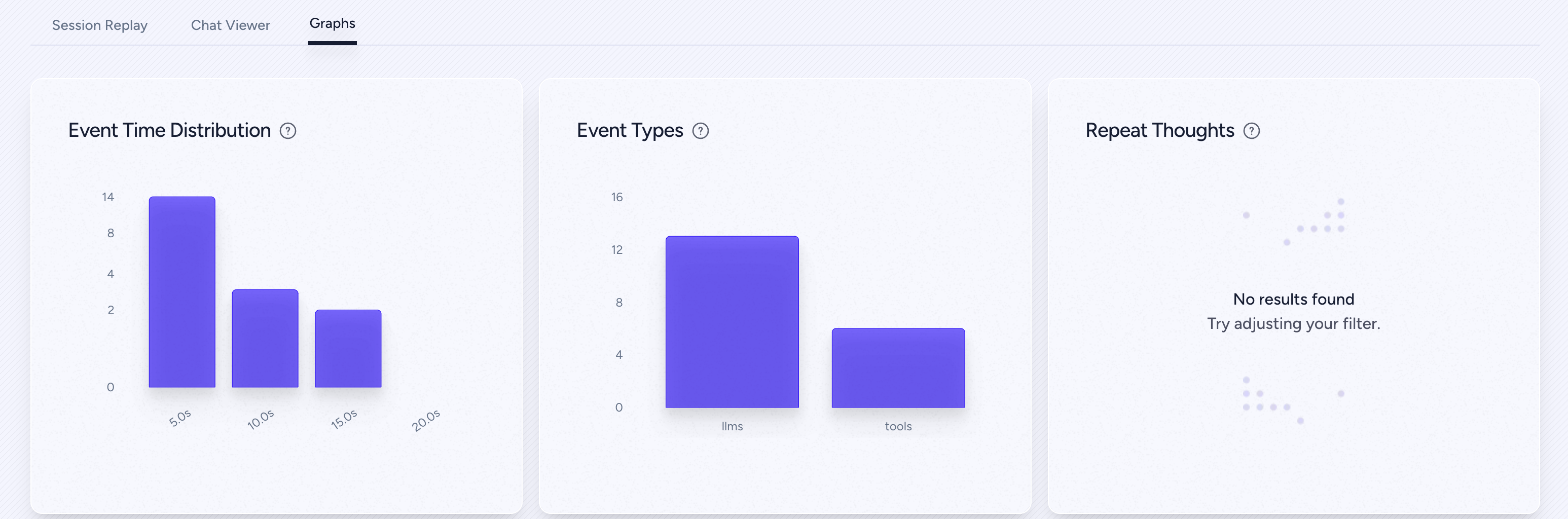

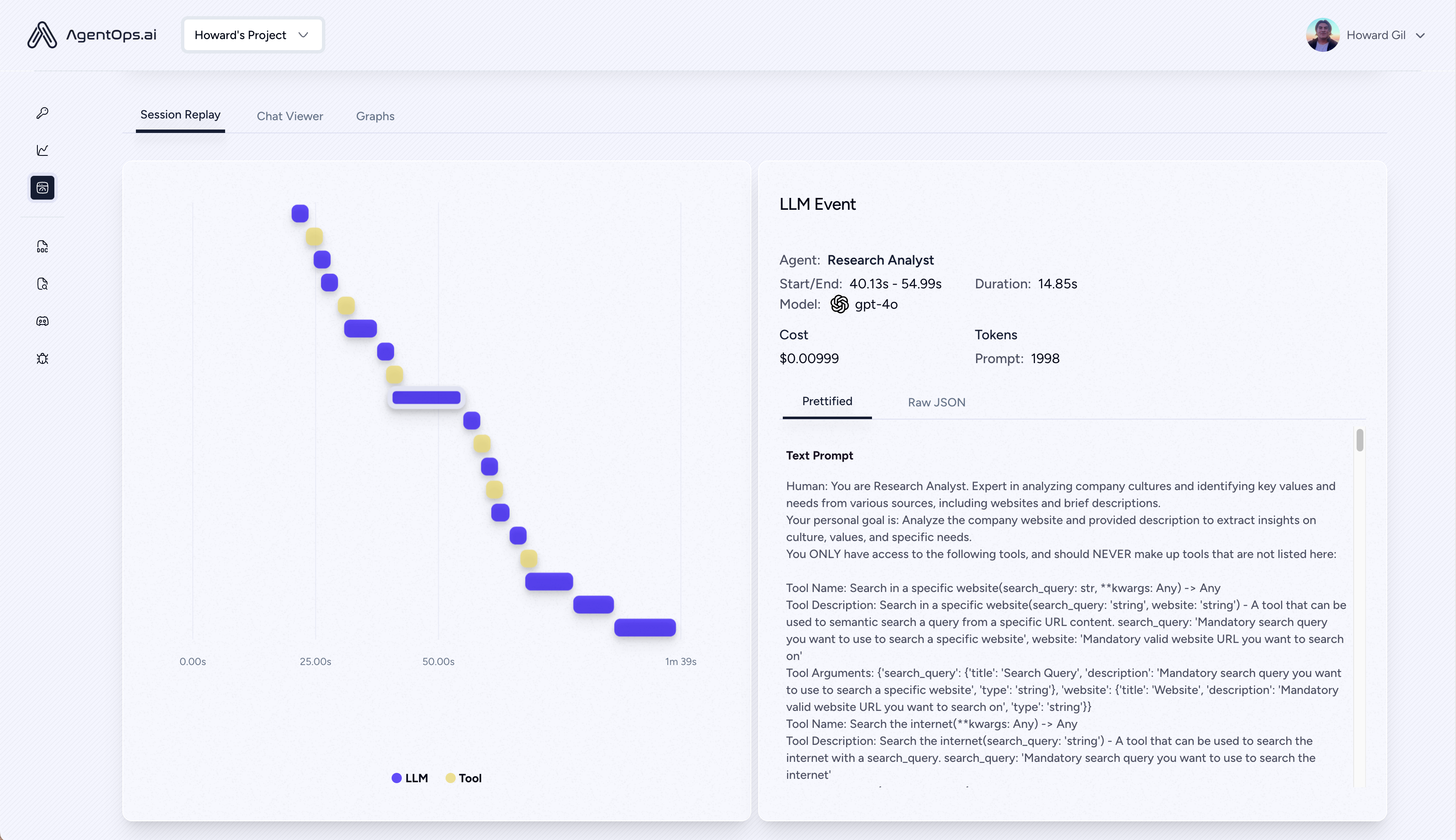

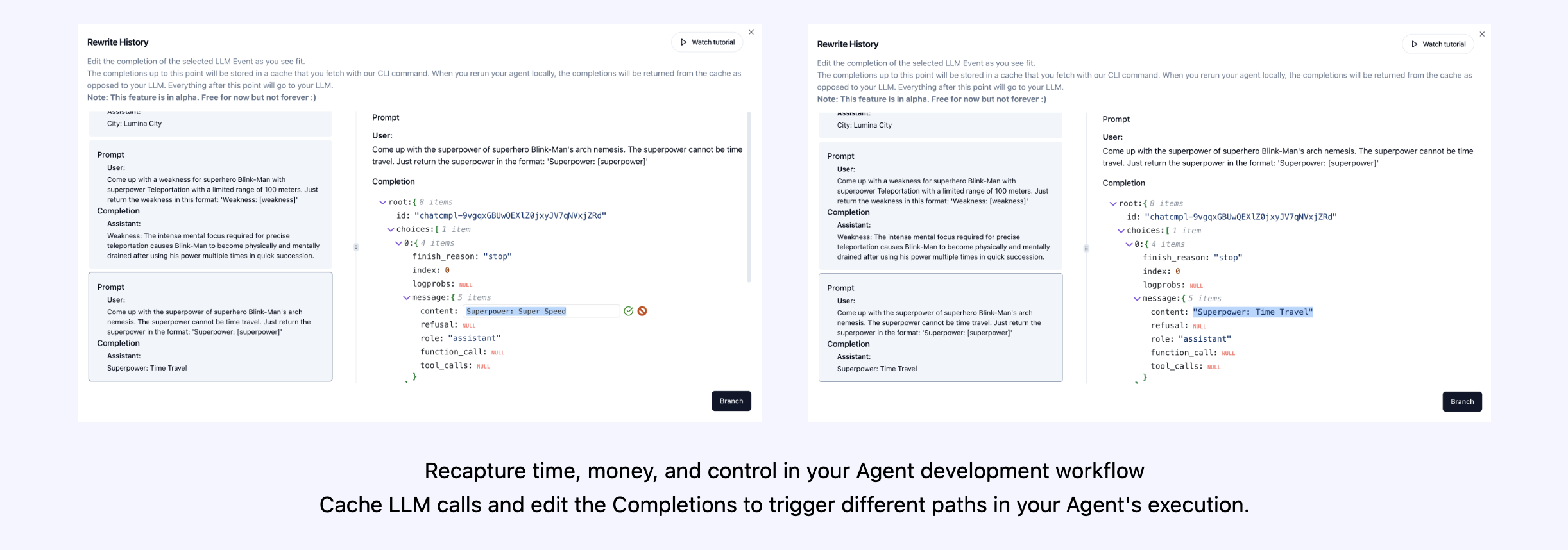

| 重播分析和調試 | 分步代理執行圖 |

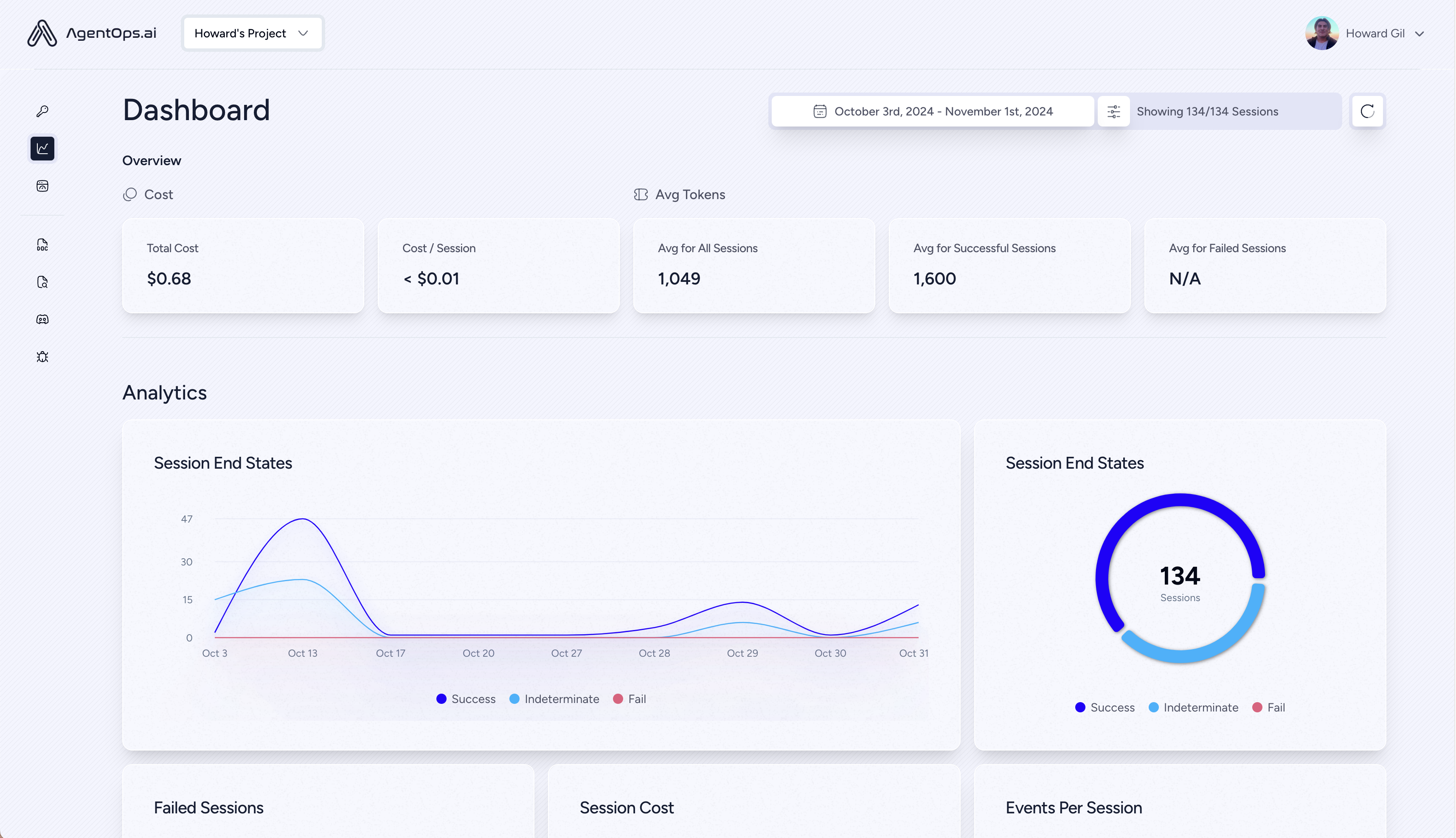

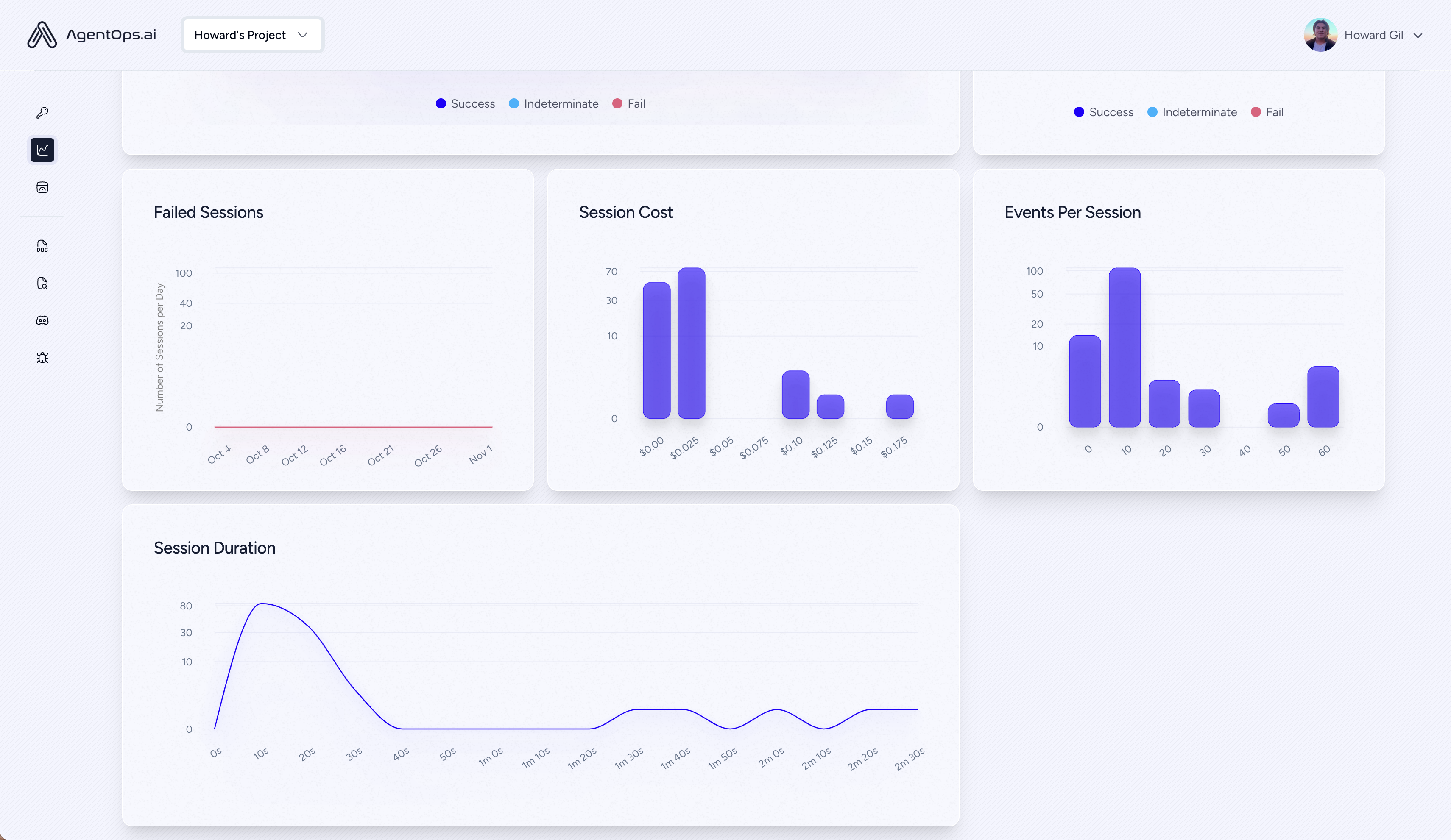

| ? LLM成本管理 | LLM基金會模型提供商的踪跡支出 |

| ?代理基準測試 | 測試您的代理商針對1,000多個Evals |

| ?合規性和安全性 | 檢測常見的提示注射和數據滲透漏洞 |

| ?框架集成 | 與Crewai,Autogen和Langchain的本地集成 |
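Cost tracking of the kind listed in the feature table comes down to multiplying each call's token counts by the provider's per-token prices and summing over a session. The minimal sketch below assumes made-up prices for a hypothetical `example-model`; the `LLMCall`, `call_cost`, and `session_cost` names are illustrative only and are not part of the AgentOps API.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical per-1K-token prices in USD, for illustration only.
# Real provider prices differ per model and change over time.
PRICES_PER_1K = {
    "example-model": {"prompt": 0.01, "completion": 0.03},
}


@dataclass
class LLMCall:
    model: str
    prompt_tokens: int
    completion_tokens: int


def call_cost(call: LLMCall) -> float:
    """Cost of one call: (tokens / 1000) * price per 1K tokens, for prompt and completion."""
    price = PRICES_PER_1K[call.model]
    return (call.prompt_tokens / 1000) * price["prompt"] + (
        call.completion_tokens / 1000
    ) * price["completion"]


def session_cost(calls: List[LLMCall]) -> float:
    """Total spend for a session is the sum of its per-call costs."""
    return sum(call_cost(c) for c in calls)


calls = [LLMCall("example-model", 1200, 300), LLMCall("example-model", 800, 200)]
total = session_cost(calls)
print(f"${total:.4f}")
```

With these made-up prices the two calls cost $0.021 and $0.014, for a session total of $0.035.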

```bash
pip install agentops
```

Initialize the AgentOps client and automatically get analytics on all your LLM calls.

Get an API key

```python
import agentops

# Beginning of your program (e.g. main.py, __init__.py)
agentops.init("<INSERT YOUR API KEY HERE>")

...

# End of program
agentops.end_session('Success')
```

All your sessions can be viewed on the AgentOps dashboard.
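The quickstart always ends the session with `'Success'`. If you want failed runs to be distinguishable, one option is to pick the end state based on whether an exception escaped. The sketch below assumes that `'Fail'` is also an accepted end state (check the AgentOps documentation) and uses a hypothetical `FakeSession` stand-in so it runs without AgentOps installed; in a real program you would call `agentops.end_session(...)` where `FakeSession.end_session` is called here.

```python
from contextlib import contextmanager


class FakeSession:
    """Hypothetical stand-in for an AgentOps session; it only remembers the end state."""

    def __init__(self) -> None:
        self.end_state = None

    def end_session(self, end_state: str) -> None:
        self.end_state = end_state


@contextmanager
def tracked_session(session: FakeSession):
    """End the session with 'Success' on normal exit, or 'Fail' if an exception escapes."""
    try:
        yield session
    except Exception:
        session.end_session("Fail")
        raise  # re-raise so the caller still sees the error
    else:
        session.end_session("Success")


ok = FakeSession()
with tracked_session(ok):
    pass  # agent work that succeeds

failed = FakeSession()
try:
    with tracked_session(failed):
        raise RuntimeError("agent crashed")
except RuntimeError:
    pass

print(ok.end_state, failed.end_state)  # Success Fail
```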

Add powerful observability to your agents, tools, and functions with as little code as possible: one line at a time.

Refer to our documentation.
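Decorators such as `record_action` and `record_tool` let you instrument a function once instead of at every call site. The general technique is sketched below with a hypothetical `record_call` decorator that appends each call's parameters, return value or error, and duration to an in-memory `EVENTS` list. This is an illustration under stated assumptions, not AgentOps' implementation, which sends events to its backend rather than to a local list.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Hypothetical in-memory event log used only for this sketch.
EVENTS: List[Dict[str, Any]] = []


def record_call(name: str) -> Callable:
    """Decorator factory: each call to the wrapped function appends one event to EVENTS."""

    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)  # keep the original name and docstring
        def wrapper(*args, **kwargs):
            start = time.time()
            event: Dict[str, Any] = {"name": name, "params": {"args": args, "kwargs": kwargs}}
            try:
                result = func(*args, **kwargs)
                event["returns"] = result
                return result
            except Exception as exc:
                event["error"] = repr(exc)
                raise
            finally:
                # Runs on success and on failure, so every call is logged.
                event["duration_s"] = time.time() - start
                EVENTS.append(event)

        return wrapper

    return decorator


@record_call("add_numbers")
def add_numbers(a: int, b: int) -> int:
    return a + b


add_numbers(2, 3)
print(EVENTS[0]["name"], EVENTS[0]["returns"])  # add_numbers 5
```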

```python
# Automatically associate all Events with the agent that originated them
from agentops import track_agent

@track_agent(name='SomeCustomName')
class MyAgent:
    ...
```

```python
# Automatically create ToolEvents for tools that agents will use
from agentops import record_tool

@record_tool('SampleToolName')
def sample_tool(*args, **kwargs):
    ...
```

```python
# Automatically create ActionEvents for other functions.
from agentops import record_action

@record_action('sample function being recorded')
def sample_function(*args, **kwargs):
    ...
```

```python
# Manually record any other Events
from agentops import record, ActionEvent

record(ActionEvent(action_type="received_user_input"))
```

Build Crew agents with observability in only 2 lines of code. Simply set an `AGENTOPS_API_KEY` in your environment, and your crews will get automatic monitoring on the AgentOps dashboard.

```bash
pip install 'crewai[agentops]'
```

With only two lines of code, add full observability and monitoring to AutoGen agents. Set an `AGENTOPS_API_KEY` in your environment and call `agentops.init()`.

AgentOps works seamlessly with applications built using LangChain. To use the handler, install LangChain as an optional dependency:

```bash
pip install 'agentops[langchain]'
```

To use the handler, import and set it up:

```python
import os

from langchain.chat_models import ChatOpenAI
from langchain.agents import initialize_agent, AgentType
from agentops.partners.langchain_callback_handler import LangchainCallbackHandler

AGENTOPS_API_KEY = os.environ['AGENTOPS_API_KEY']
OPENAI_API_KEY = os.environ['OPENAI_API_KEY']

handler = LangchainCallbackHandler(api_key=AGENTOPS_API_KEY, tags=['Langchain Example'])

llm = ChatOpenAI(openai_api_key=OPENAI_API_KEY,
                 callbacks=[handler],
                 model='gpt-3.5-turbo')

# Assumes `tools` is a list of LangChain tools you have defined elsewhere;
# this agent type requires at least one tool.
agent = initialize_agent(tools,
                         llm,
                         agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,
                         verbose=True,
                         callbacks=[handler],  # You must pass in a callback handler to record your agent
                         handle_parsing_errors=True)
```

Check out the LangChain Examples Notebook for more details, including async handlers.
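The comment on `callbacks=[handler]` reflects how callback systems generally behave: a component only notifies the handlers that were attached to it, so anything constructed without the handler goes unrecorded. The stdlib-only sketch below illustrates this with hypothetical `RecordingHandler` and `EchoLLM` classes; their method names and signatures are simplified assumptions and do not match LangChain's callback API.

```python
from typing import Any, List, Optional, Tuple


class RecordingHandler:
    """Hypothetical handler that records start/end events it is notified about."""

    def __init__(self) -> None:
        self.events: List[Tuple[str, Any]] = []

    def on_llm_start(self, prompt: str) -> None:
        self.events.append(("llm_start", prompt))

    def on_llm_end(self, output: str) -> None:
        self.events.append(("llm_end", output))


class EchoLLM:
    """Hypothetical model that notifies only the handlers passed to its constructor."""

    def __init__(self, callbacks: Optional[List[RecordingHandler]] = None) -> None:
        self.callbacks = callbacks or []

    def invoke(self, prompt: str) -> str:
        for cb in self.callbacks:
            cb.on_llm_start(prompt)
        output = prompt.upper()  # stand-in for a real model call
        for cb in self.callbacks:
            cb.on_llm_end(output)
        return output


handler = RecordingHandler()
EchoLLM(callbacks=[handler]).invoke("hello")  # recorded
EchoLLM().invoke("not recorded")  # no handler attached, so nothing is recorded
print(handler.events)  # [('llm_start', 'hello'), ('llm_end', 'HELLO')]
```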

First-class support for Cohere (>=5.4.0). This is a living integration; if you need any added functionality, message us on Discord!

```bash
pip install cohere
```

```python
import cohere
import agentops

# Beginning of program's code (e.g. main.py, __init__.py)
agentops.init("<INSERT YOUR API KEY HERE>")

co = cohere.Client()

chat = co.chat(
    message="Is it pronounced ceaux-hear or co-hehray?"
)

print(chat)

agentops.end_session('Success')
```

```python
import cohere
import agentops

# Beginning of program's code (e.g. main.py, __init__.py)
agentops.init("<INSERT YOUR API KEY HERE>")

co = cohere.Client()

stream = co.chat_stream(
    message="Write me a haiku about the synergies between Cohere and AgentOps"
)

for event in stream:
    if event.event_type == "text-generation":
        print(event.text, end='')

agentops.end_session('Success')
```

Track agents built with the Anthropic Python SDK (>=0.32.0).

```bash
pip install anthropic
```

```python
import os

import anthropic
import agentops

# Beginning of program's code (e.g. main.py, __init__.py)
agentops.init("<INSERT YOUR API KEY HERE>")

client = anthropic.Anthropic(
    # This is the default and can be omitted
    api_key=os.environ.get("ANTHROPIC_API_KEY"),
)

message = client.messages.create(
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": "Tell me a cool fact about AgentOps",
        }
    ],
    model="claude-3-opus-20240229",
)

print(message.content)

agentops.end_session('Success')
```

Streaming

```python
import os

import anthropic
import agentops

# Beginning of program's code (e.g. main.py, __init__.py)
agentops.init("<INSERT YOUR API KEY HERE>")

client = anthropic.Anthropic(
    # This is the default and can be omitted
    api_key=os.environ.get("ANTHROPIC_API_KEY"),
)

stream = client.messages.create(
    max_tokens=1024,
    model="claude-3-opus-20240229",
    messages=[
        {
            "role": "user",
            "content": "Tell me something cool about streaming agents",
        }
    ],
    stream=True,
)

response = ""
for event in stream:
    if event.type == "content_block_delta":
        # Accumulate the incremental text from each delta event
        response += event.delta.text
    elif event.type == "message_stop":
        print("\n")
        print(response)
        print("\n")
```

Async

```python
import asyncio
import os

from anthropic import AsyncAnthropic

client = AsyncAnthropic(
    # This is the default and can be omitted
    api_key=os.environ.get("ANTHROPIC_API_KEY"),
)

async def main() -> None:
    message = await client.messages.create(
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": "Tell me something interesting about async agents",
            }
        ],
        model="claude-3-opus-20240229",
    )
    print(message.content)

# In a notebook with an already-running event loop, use `await main()` instead
asyncio.run(main())
```

Track agents built with the Mistral Python SDK.

```bash
pip install mistralai
```

Sync

```python
import os

from mistralai import Mistral
import agentops

# Beginning of program's code (e.g. main.py, __init__.py)
agentops.init("<INSERT YOUR API KEY HERE>")

client = Mistral(
    # This is the default and can be omitted
    api_key=os.environ.get("MISTRAL_API_KEY"),
)

message = client.chat.complete(
    messages=[
        {
            "role": "user",
            "content": "Tell me a cool fact about AgentOps",
        }
    ],
    model="open-mistral-nemo",
)

print(message.choices[0].message.content)

agentops.end_session('Success')
```

Streaming

```python
import os

from mistralai import Mistral
import agentops

# Beginning of program's code (e.g. main.py, __init__.py)
agentops.init("<INSERT YOUR API KEY HERE>")

client = Mistral(
    # This is the default and can be omitted
    api_key=os.environ.get("MISTRAL_API_KEY"),
)

stream = client.chat.stream(
    messages=[
        {
            "role": "user",
            "content": "Tell me something cool about streaming agents",
        }
    ],
    model="open-mistral-nemo",
)

response = ""
for event in stream:
    choice = event.data.choices[0]
    # Each chunk carries the next piece of text in `delta.content`
    if choice.delta.content:
        response += choice.delta.content
    if choice.finish_reason == "stop":
        print("\n")
        print(response)
        print("\n")

agentops.end_session('Success')
```

Async

```python
import asyncio
import os

from mistralai import Mistral

client = Mistral(
    # This is the default and can be omitted
    api_key=os.environ.get("MISTRAL_API_KEY"),
)

async def main() -> None:
    message = await client.chat.complete_async(
        messages=[
            {
                "role": "user",
                "content": "Tell me something interesting about async agents",
            }
        ],
        model="open-mistral-nemo",
    )
    print(message.choices[0].message.content)

# In a notebook with an already-running event loop, use `await main()` instead
asyncio.run(main())
```

Async streaming

```python
import asyncio
import os

from mistralai import Mistral

client = Mistral(
    # This is the default and can be omitted
    api_key=os.environ.get("MISTRAL_API_KEY"),
)

async def main() -> None:
    stream = await client.chat.stream_async(
        messages=[
            {
                "role": "user",
                "content": "Tell me something interesting about async streaming agents",
            }
        ],
        model="open-mistral-nemo",
    )
    response = ""
    async for event in stream:
        choice = event.data.choices[0]
        # Each chunk carries the next piece of text in `delta.content`
        if choice.delta.content:
            response += choice.delta.content
        if choice.finish_reason == "stop":
            print("\n")
            print(response)
            print("\n")

# In a notebook with an already-running event loop, use `await main()` instead
asyncio.run(main())
```

AgentOps provides support for LiteLLM (>=1.3.1), allowing you to call 100+ LLMs using the same input/output format.
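Why call `litellm.completion` instead of `from litellm import completion`? A plausible explanation, assuming AgentOps instruments LiteLLM by replacing the `completion` attribute on the `litellm` module when it initializes: a name bound with `from ... import` before that point keeps pointing at the original function object, so calls through it bypass the replacement. The sketch below demonstrates the Python behavior with a hypothetical module built via `types.ModuleType` and a hypothetical `instrument` function, not the real libraries.

```python
import types

# Hypothetical stand-in for the `litellm` module.
fake_litellm = types.ModuleType("fake_litellm")


def original_completion(**kwargs):
    return "original"


fake_litellm.completion = original_completion

# Equivalent of `from litellm import completion` done BEFORE instrumentation:
# this binds the current function object to a local name.
completion = fake_litellm.completion


def instrument(module) -> None:
    """Hypothetical instrumentation: replace module.completion with a wrapper."""
    unwrapped = module.completion

    def wrapped(**kwargs):
        return "instrumented:" + unwrapped(**kwargs)

    module.completion = wrapped


instrument(fake_litellm)

print(completion())  # original  (stale reference, bypasses the wrapper)
print(fake_litellm.completion())  # instrumented:original  (attribute lookup sees the wrapper)
```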

```bash
pip install litellm
```

```python
# Do not use LiteLLM like this
# from litellm import completion
# ...
# response = completion(model="claude-3", messages=messages)

# Use LiteLLM like this
import litellm
...
response = litellm.completion(model="claude-3", messages=messages)
# or, inside an async function
response = await litellm.acompletion(model="claude-3", messages=messages)
```

AgentOps works seamlessly with applications built using LlamaIndex, a framework for building context-augmented generative AI applications with LLMs.

```bash
pip install llama-index-instrumentation-agentops
```

To use the handler, import and set it up:

```python
from llama_index.core import set_global_handler

# NOTE: Feel free to set your AgentOps environment variables (e.g., 'AGENTOPS_API_KEY')
# as outlined in the AgentOps documentation, or pass the equivalent keyword arguments
# anticipated by AgentOps' AOClient as **eval_params in set_global_handler.

set_global_handler("agentops")
```

Check out the LlamaIndex docs for more details.
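`set_global_handler("agentops")` selects a handler by name and, per the comment in the snippet, can forward keyword arguments to it. The general pattern, a registry mapping names to handler factories plus one stored active handler, is sketched below. `HANDLER_FACTORIES`, `set_handler`, `get_handler`, and `PrintHandler` are hypothetical names for illustration and do not reflect LlamaIndex's actual implementation.

```python
from typing import Any, Callable, Dict, Optional


class PrintHandler:
    """Hypothetical handler that just remembers the parameters it was built with."""

    def __init__(self, **params: Any) -> None:
        self.params = params


# Hypothetical registry mapping handler names to factories.
HANDLER_FACTORIES: Dict[str, Callable[..., Any]] = {"print": PrintHandler}

_global_handler: Optional[Any] = None


def set_handler(name: str, **params: Any) -> None:
    """Look up a factory by name, build the handler with the given kwargs, and store it globally."""
    global _global_handler
    try:
        factory = HANDLER_FACTORIES[name]
    except KeyError:
        raise ValueError(f"Unknown handler: {name!r}") from None
    _global_handler = factory(**params)


def get_handler() -> Optional[Any]:
    return _global_handler


set_handler("print", tags=["demo"])
print(type(get_handler()).__name__, get_handler().params)  # PrintHandler {'tags': ['demo']}
```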

Try it out!

(Coming soon!)

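| Platform | Dashboard | Evals |
|---|---|---|
| Python SDK | ✅ Multi-session and cross-session metrics | ✅ Custom eval metrics |
| Evaluation builder API | ✅ Custom event tag tracking | Agent scorecards |
| ✅ JavaScript/TypeScript SDK | ✅ Session replays | Evaluation playground + leaderboard |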

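| Performance Testing | Environments | LLM Testing | Reasoning and Execution Testing |
|---|---|---|---|
| ✅ Event latency analysis | Non-stationary environment testing | LLM non-deterministic function detection | Infinite loops and recursive thought detection |
| ✅ Agent workflow execution pricing | Multi-modal environments | Token limit overflow flags | Faulty reasoning detection |
| Success validators (external) | Execution containers | Context limit overflow flags | Generative code validators |
| Agent controllers/skill tests | ✅ Honeypot and prompt injection detection | API bill tracking | Error breakpoint analysis |
| Information context constraint testing | Anti-agent roadblocks (e.g., CAPTCHAs) | CI/CD integration checks | |
| Regression testing | Multi-agent framework visualization | | |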
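Two of the LLM testing items in the roadmap table concern requests that will not fit a model's limits. As an illustration only, and not AgentOps' planned implementation, a context-limit overflow flag can compare the prompt's token count plus the requested output budget against the model's context window. The model names and window sizes below are hypothetical, and token counts are assumed to come from the provider's own tokenizer.

```python
from dataclasses import dataclass

# Hypothetical context windows, in tokens.
CONTEXT_WINDOWS = {"small-model": 4096, "large-model": 128000}


@dataclass
class OverflowCheck:
    fits: bool
    overflow_tokens: int


def check_context(model: str, prompt_tokens: int, max_output_tokens: int) -> OverflowCheck:
    """Flag a request whose prompt plus requested output exceeds the model's context window."""
    limit = CONTEXT_WINDOWS[model]
    needed = prompt_tokens + max_output_tokens
    overflow = max(0, needed - limit)
    return OverflowCheck(fits=overflow == 0, overflow_tokens=overflow)


result = check_context("small-model", 3500, 1024)
print(result)  # OverflowCheck(fits=False, overflow_tokens=428)
```

Here 3,500 prompt tokens plus a 1,024-token output budget need 4,524 tokens, which exceeds the hypothetical 4,096-token window by 428.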

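Without the right tools, AI agents are slow, expensive, and unreliable. Our mission is to bring your agent from prototype to production. Here's why AgentOps stands out: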

AgentOps is designed to make agent observability, testing, and monitoring easy.

Check out our growth in the community:

| Repository | Stars |
|---|---|
| geekan / MetaGPT | 42787 |
| run-llama / llama_index | 34446 |
| crewAIInc / crewAI | 18287 |
| camel-ai / camel | 5166 |
| superagent-ai / superagent | 5050 |
| iyaja / llama-fs | 4713 |
| BasedHardware / Omi | 2723 |
| MervinPraison / PraisonAI | 2007 |
| AgentOps-AI / Jaiqu | 272 |
| strnad / CrewAI-Studio | 134 |
| alejandro-ao / exa-crewai | 55 |
| tonykipkemboi / youtube_yapper_trapper | 47 |
| sethcoast / cover-letter-builder | 27 |
| bhancockio / chatgpt4o-analysis | 19 |
| breakstring / Agentic_Story_Book_Workflow | 14 |
| MULTI-ON / multion-python | 13 |

Generated using github-dependents-info, by Nicolas Vuillamy.