Awesome Efficient LLM

1.0.0

A curated list for Efficient Large Language Models

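If you'd like to include your paper, or need to update any details such as conference information or code URLs, please feel free to submit a pull request. You can generate the required markdown format for each paper by filling in the information in `generate_item.py` and running `python generate_item.py`. We warmly appreciate your contributions to this list. Alternatively, you can email me the links to your paper and code, and I will add your paper to the list at my earliest convenience.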

For each topic, we have curated a list of recommended papers that have garnered a lot of GitHub stars or citations.

| Title & Authors | Introduction | Links |

|---|---|---|

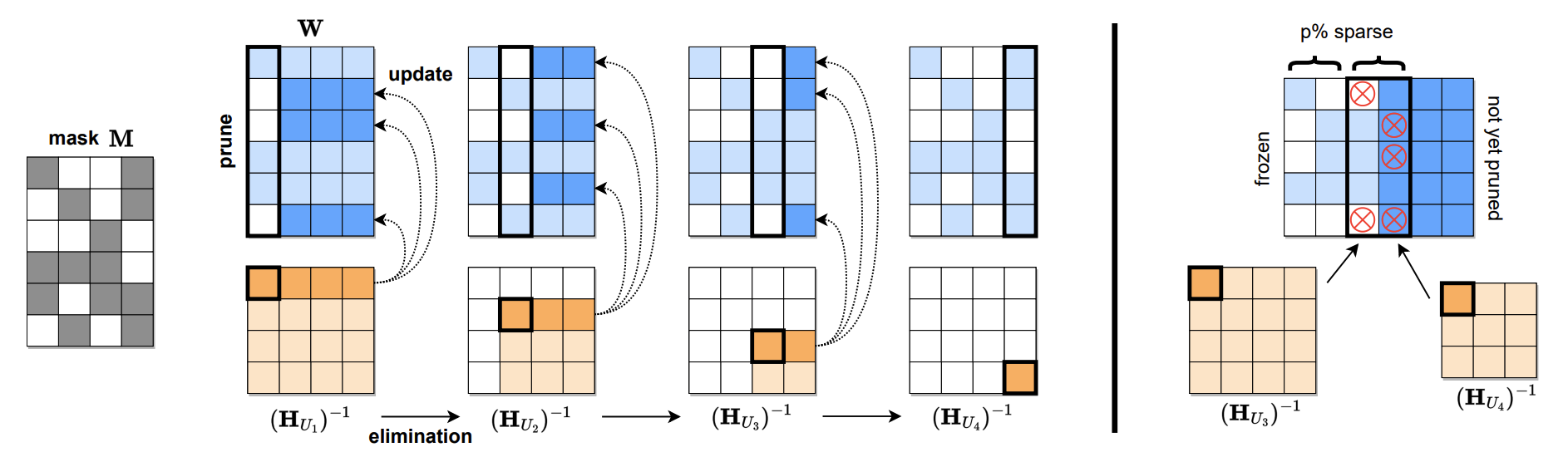

| SparseGPT: Massive Language Models Can Be Accurately Pruned in One-Shot <br> Elias Frantar, Dan Alistarh | | Github Paper |

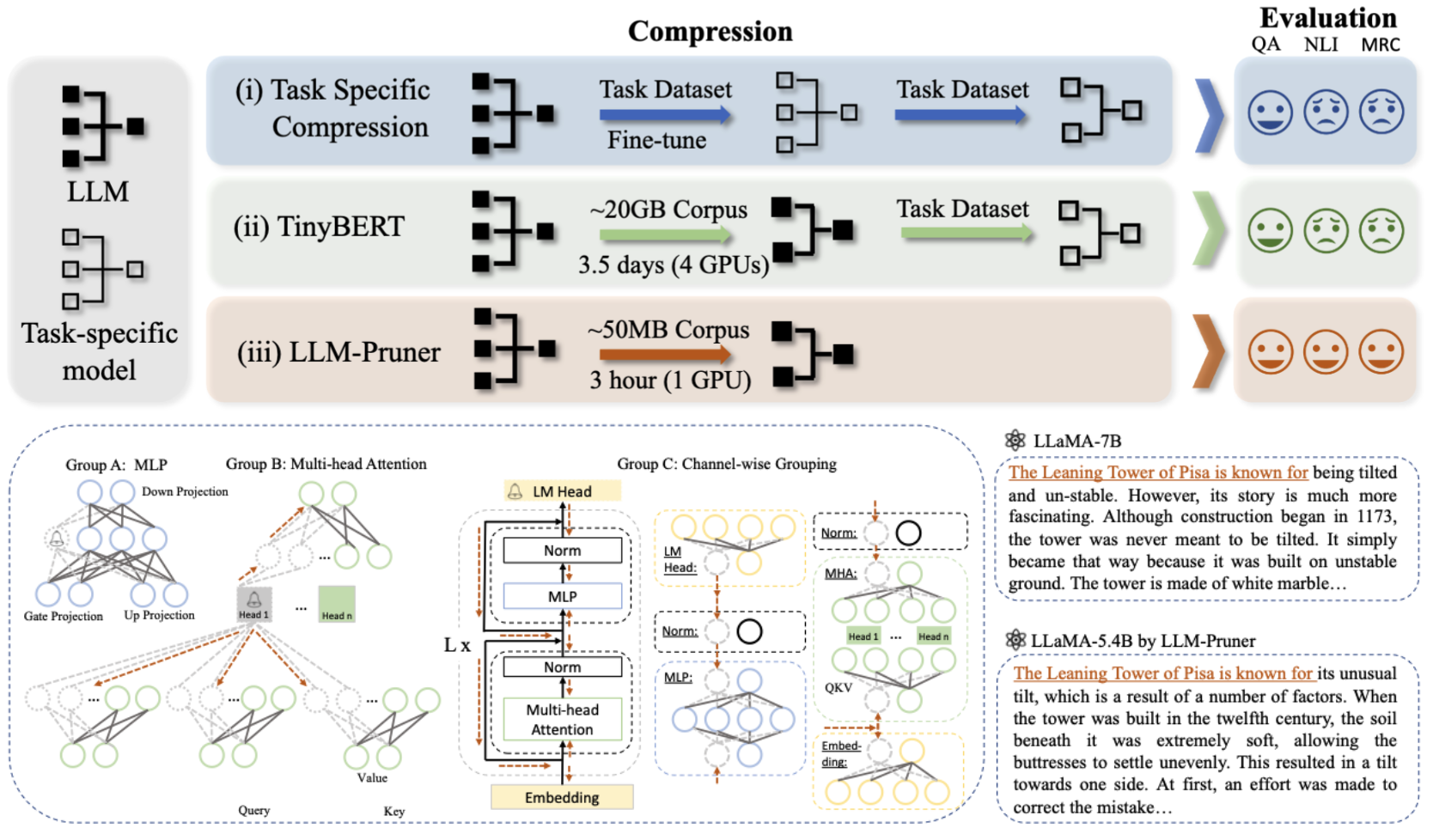

| LLM-Pruner: On the Structural Pruning of Large Language Models <br> Xinyin Ma, Gongfan Fang, Xinchao Wang | | Github Paper |

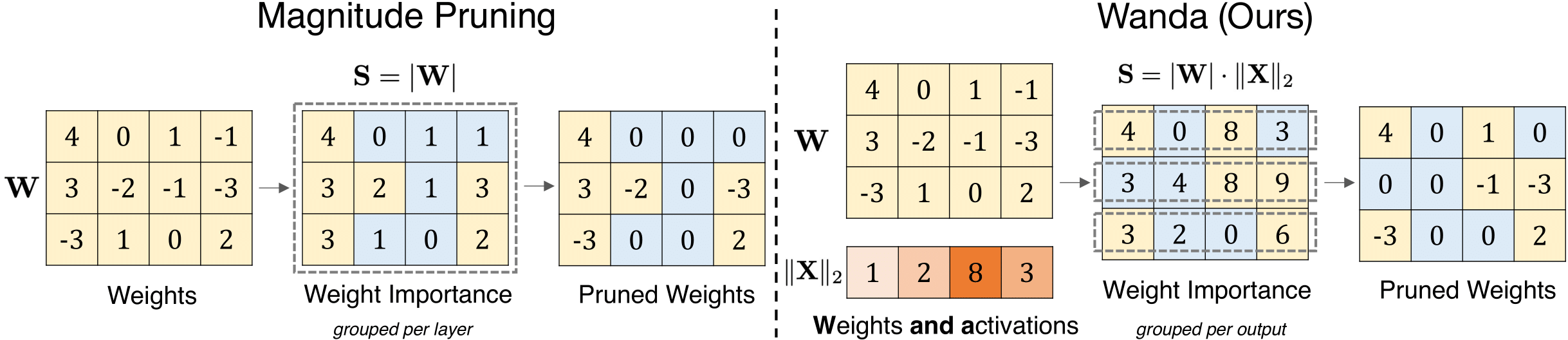

| A Simple and Effective Pruning Approach for Large Language Models <br> Mingjie Sun, Zhuang Liu, Anna Bair, J. Zico Kolter | | Github Paper |

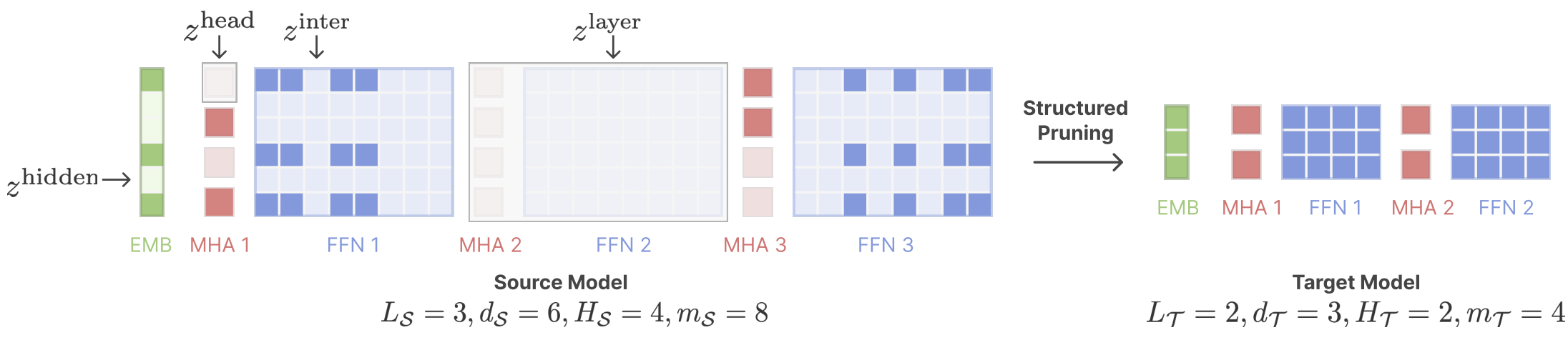

| Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning <br> Mengzhou Xia, Tianyu Gao, Zhiyuan Zeng, Danqi Chen | | Github Paper |

| Efficient LLM Inference using Dynamic Input Pruning and Cache-Aware Masking <br> Marco Federici, Davide Belli, Mart van Baalen, Amir Jalalirad, Andrii Skliar, Bence Major, Markus Nagel, Paul Whatmough | | Paper |

| Puzzle: Distillation-Based NAS for Inference-Optimized LLMs <br> Akhiad Bercovich, Tomer Ronen, Talor Abramovich, Nir Ailon, Nave Assaf, Mohammad Dabbah et al. | | Paper |

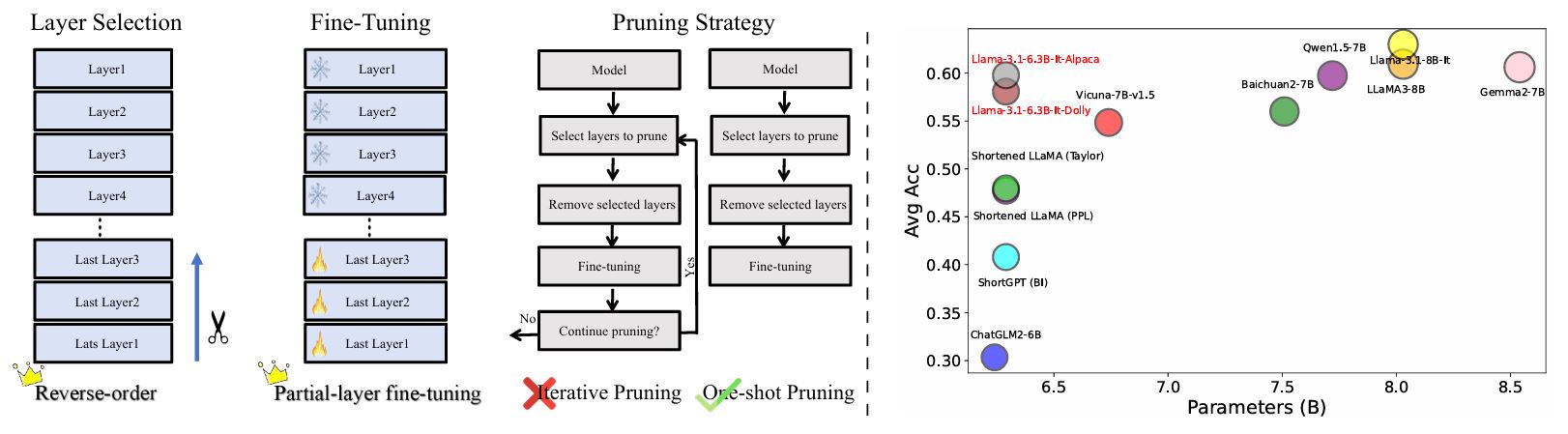

| Reassessing Layer Pruning in LLMs: New Insights and Methods <br> Yao Lu, Hao Cheng, Yujie Fang, Zeyu Wang, Jiaheng Wei, Dongwei Xu, Qi Xuan, Xiaoniu Yang, Zhaowei Zhu | | Github Paper |

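| Layer Importance and Hallucination Analysis in Large Language Models via Enhanced Activation Variance-Sparsity <br> Zichen Song, Sitan Huang, Yuxin Wu, Zhongfeng Kang | | Paper |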

| AmoebaLLM: Constructing Any-Shape Large Language Models for Efficient and Instant Deployment <br> Yonggan Fu, Zhongzhi Yu, Junwei Li, Jiayi Qian, Yongan Zhang, Xiangchi Yuan, Dachuan Shi, Roman Yakunin, Yingyan Celine Lin | | Github Paper |

| Scaling Law for Post-training after Model Pruning <br> Xiaodong Chen, Yuxuan Hu, Jing Zhang, Xiaokang Zhang, Cuiping Li, Hong Chen | | Paper |

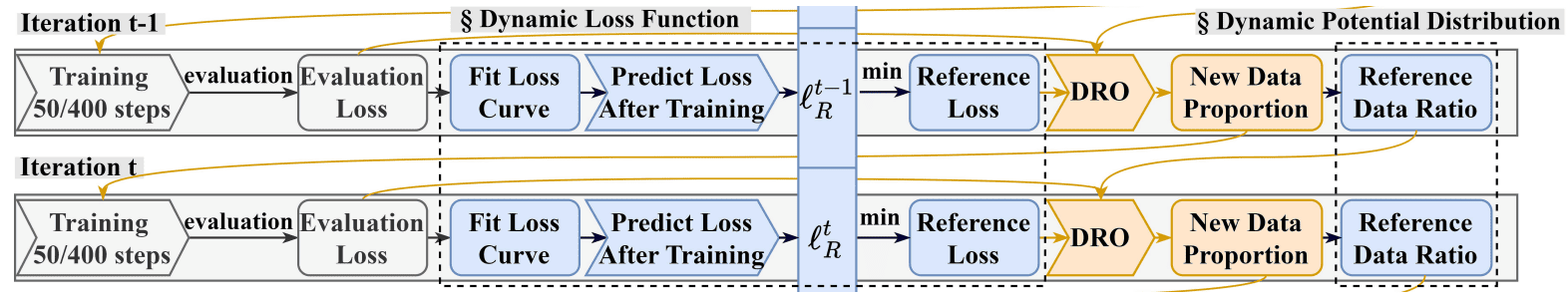

| DRPruning: Efficient Large Language Model Pruning through Distributionally Robust Optimization <br> Hexuan Deng, Wenxiang Jiao, Xuebo Liu, Min Zhang, Zhaopeng Tu | | Github Paper |

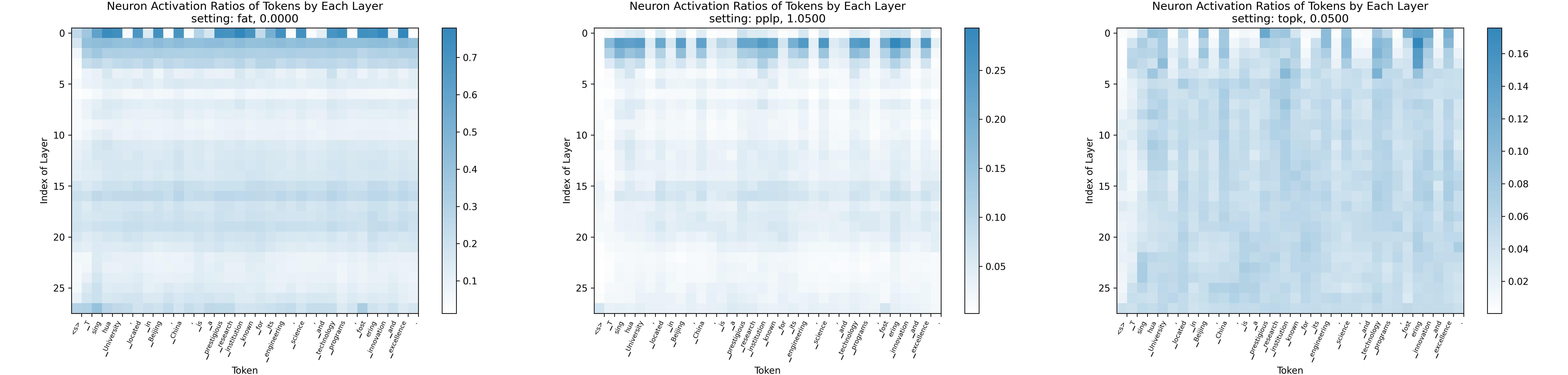

| Sparsing Law: Towards Large Language Models with Greater Activation Sparsity <br> Yuqi Luo, Chenyang Song, Xu Han, Yingfa Chen, Chaojun Xiao, Zhiyuan Liu, Maosong Sun | | Github Paper |

| AVSS: Layer Importance Evaluation in Large Language Models via Activation Variance-Sparsity Analysis <br> Zichen Song, Yuxin Wu, Sitan Huang, Zhongfeng Kang | | Paper |

| Tailored-LLaMA: Optimizing Few-Shot Learning in Pruned LLaMA Models with Task-Specific Prompts <br> Danyal Aftab, Steven Davy | | Paper |

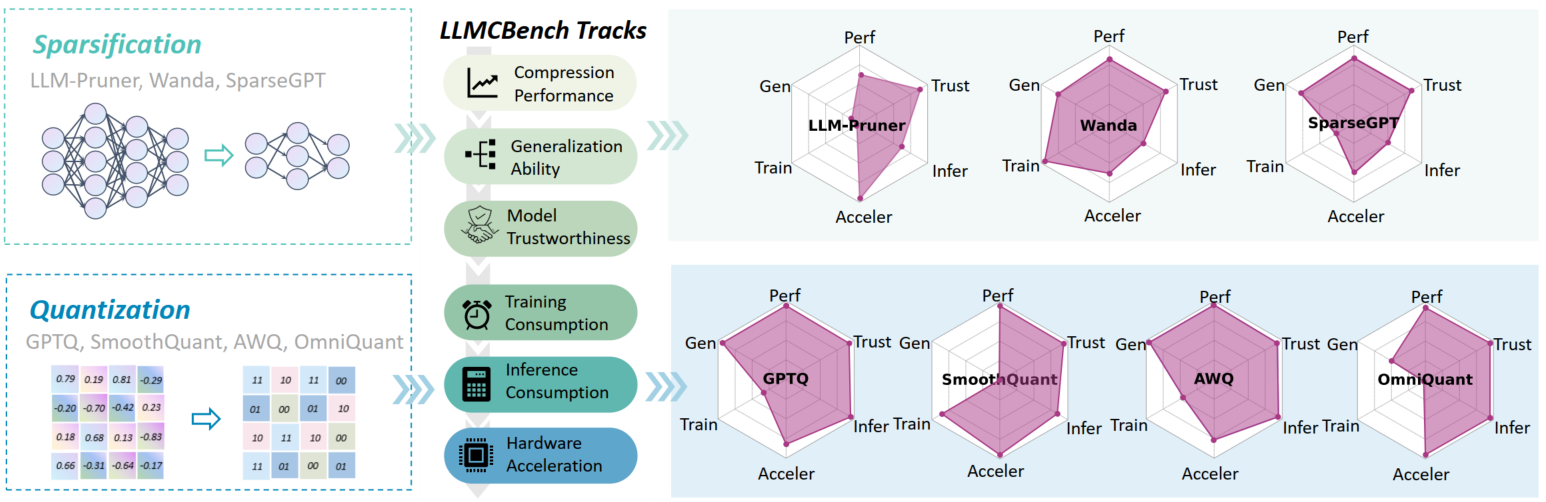

| LLMCBench: Benchmarking Large Language Model Compression for Efficient Deployment <br> Ge Yang, Changyi He, Jinyang Guo, Jianyu Wu, Yifu Ding, Aishan Liu, Haotong Qin, Pengliang Ji, Xianglong Liu | | Github Paper |

| Beyond 2:4: Exploring V:N:M Sparsity for Efficient Transformer Inference on GPUs <br> Kang Zhao, Tao Yuan, Han Bao, Zhenfeng Su, Chang Gao, Zhaofeng Sun, Zichen Liang, Liping Jing, Jianfei Chen | | Paper |

| EvoPress: Towards Optimal Dynamic Model Compression via Evolutionary Search <br> Oliver Sieberling, Denis Kuznedelev, Eldar Kurtic, Dan Alistarh | | Github Paper |

| FedSpaLLM: Federated Pruning of Large Language Models <br> Guangji Bai, Yijiang Li, Zilinghan Li, Liang Zhao, Kibaek Kim | | Paper |

| Pruning Foundation Models for High Accuracy without Retraining <br> Pu Zhao, Fei Sun, Xuan Shen, Pinrui Yu, Zhenglun Kong, Yanzhi Wang, Xue Lin | | Github Paper |

| Self-calibration for Language Model Quantization and Pruning <br> Miles Williams | | Paper |

| Beware of Calibration Data for Pruning Large Language Models <br> Yixin Ji, Yang Xiang, Juntao Li, Qingrong Xia, Ping Li, Xinyu Duan, Zhefeng Wang, Min Zhang | | Paper |

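| AlphaPruning: Using Heavy-Tailed Self Regularization Theory for Improved Layer-wise Pruning of Large Language Models <br> Haiquan Lu, Yefan Zhou, Shiwei Liu, Zhangyang Wang, Michael W. Mahoney, Yaoqing Yang | | Github Paper |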

| Beyond Linear Approximations: A Novel Pruning Approach for Attention Matrix <br> Yingyu Liang, Jiangxuan Long, Zhenmei Shi, Zhao Song, Yufa Zhou | | Paper |

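| DISP-LLM: Dimension-Independent Structural Pruning for Large Language Models <br> Shangqian Gao, Chi-Heng Lin, Ting Hua, Tang Zheng, Yilin Shen, Hongxia Jin, Yen-Chang Hsu | | Paper |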

| Self-Data Distillation for Recovering Quality in Pruned Large Language Models <br> Vithursan Thangarasa, Ganesh Venkatesh, Nish Sinnadurai, Sean Lie | | Paper |

| LLM-Rank: A Graph Theoretical Approach to Pruning Large Language Models <br> David Hoffmann, Kailash Budhathoki, Matthaeus Kleindessner | | Paper |

| Is C4 Dataset Optimal for Pruning? An Investigation of Calibration Data for LLM Pruning <br> Abhinav Bandari, Lu Yin, Cheng-Yu Hsieh, Ajay Kumar Jaiswal, Tianlong Chen, Li Shen, Ranjay Krishna, Shiwei Liu | | Github Paper |

| Mitigating Copy Bias in In-Context Learning through Neuron Pruning <br> Ameen Ali, Lior Wolf, Ivan Titov | | Paper |

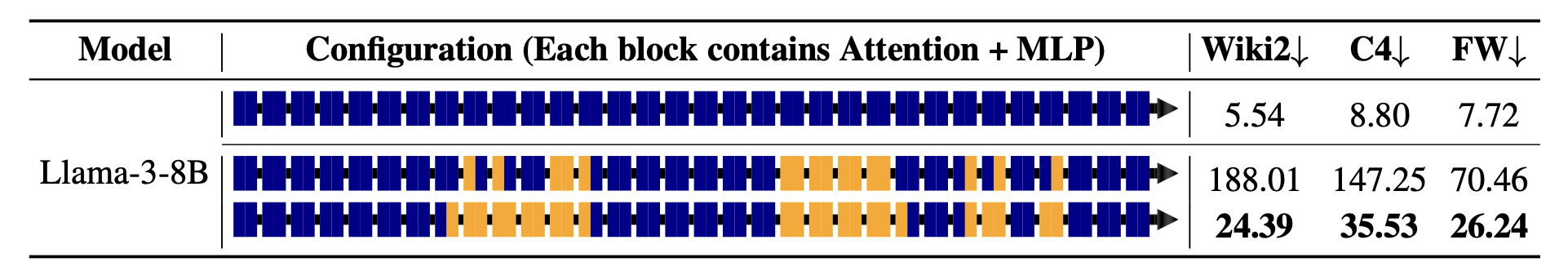

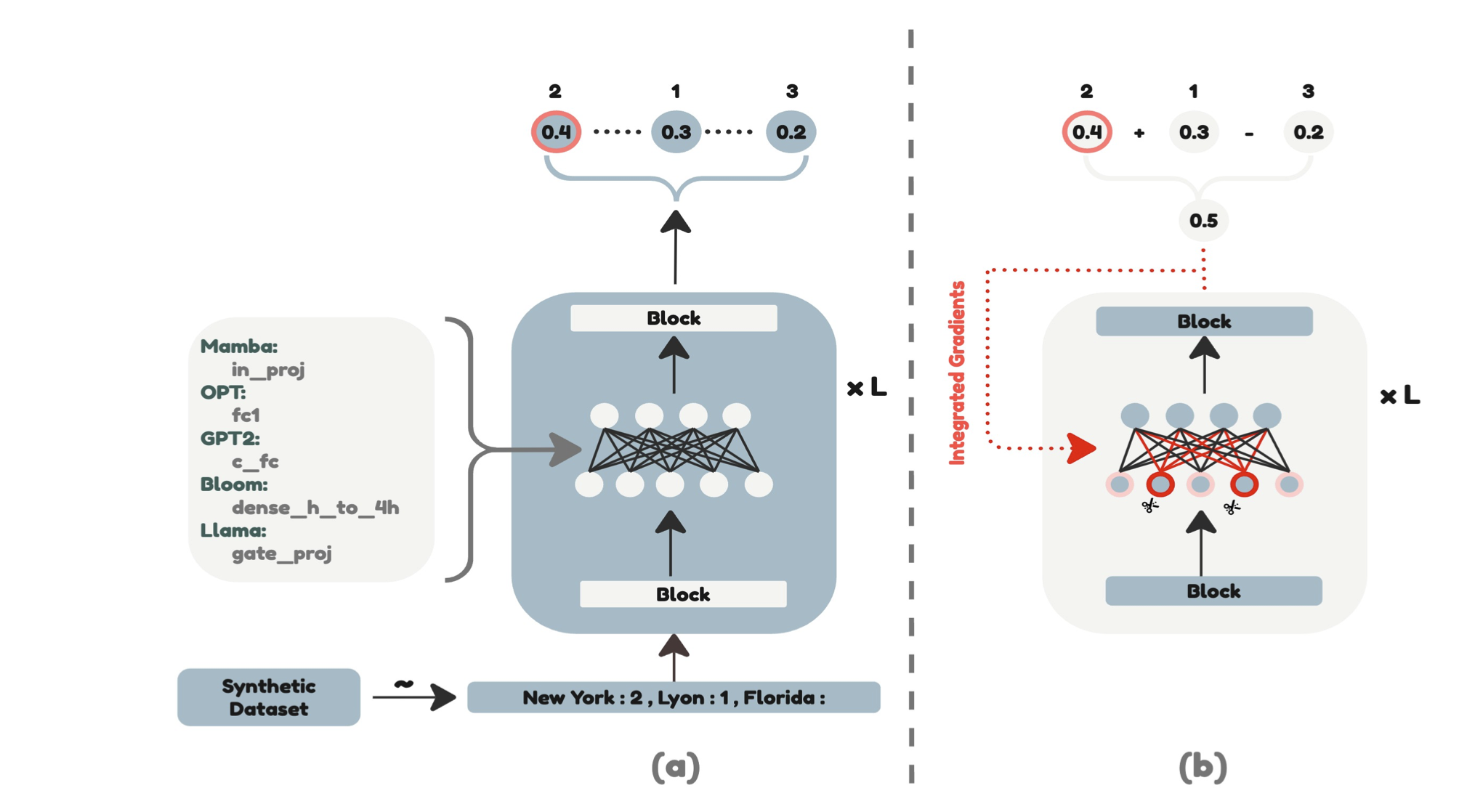

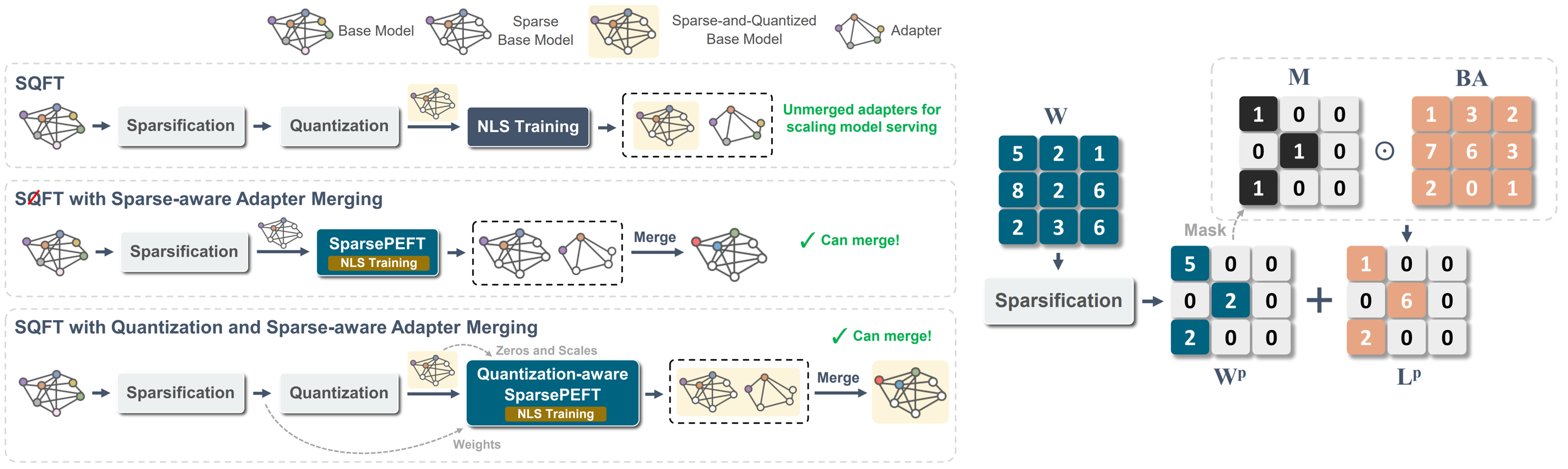

| SQFT: Low-cost Model Adaptation in Low-precision Sparse Foundation Models <br> Juan Pablo Munoz, Jinjie Yuan, Nilesh Jain | | Github Paper |

| MaskLLM: Learnable Semi-Structured Sparsity for Large Language Models <br> Gongfan Fang, Hongxu Yin, Saurav Muralidharan, Greg Heinrich, Jeff Pool, Jan Kautz, Pavlo Molchanov, Xinchao Wang | | Github Paper |

| Search for Efficient Large Language Models <br> Xuan Shen, Pu Zhao, Yifan Gong, Zhenglun Kong, Zheng Zhan, Yushu Wu, Ming Lin, Chao Wu, Xue Lin, Yanzhi Wang | | Paper |

| CFSP: An Efficient Structured Pruning Framework for LLMs with Coarse-to-Fine Activation Information <br> Yuxin Wang, Minghua Ma, Zekun Wang, Jingchang Chen, Huiming Fan, Liping Shan, Qing Yang, Dongliang Xu, Ming Liu, Bing Qin | | Github Paper |

| OATS: Outlier-Aware Pruning Through Sparse and Low Rank Decomposition <br> Stephen Zhang, Vardan Papyan | | Paper |

| KVPruner: Structural Pruning for Faster and Memory-Efficient Large Language Models <br> Bo Lv, Quan Zhou, Xuanang Ding, Yan Wang, Zeming Ma | | Paper |

| Evaluating the Impact of Compression Techniques on Task-Specific Performance of Large Language Models <br> Bishwash Khanal, Jeffery M. Capone | | Paper |

| STUN: Structured-Then-Unstructured Pruning for Scalable MoE Pruning <br> Jaeseong Lee, Seung-won Hwang, Aurick Qiao, Daniel F Campos, Zhewei Yao, Yuxiong He | | Paper |

| PAT: Pruning-Aware Tuning for Large Language Models <br> Yijiang Liu, Huanrui Yang, Youxin Chen, Rongyu Zhang, Miao Wang, Yuan Du, Li Du | | Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Knowledge Distillation of Large Language Models <br> Yuxian Gu, Li Dong, Furu Wei, Minlie Huang | | Github Paper |

| Improving Mathematical Reasoning Capabilities of Small Language Models via Feedback-Driven Distillation <br> Xunyu Zhu, Jian Li, Can Ma, Weiping Wang | | Paper |

| Generative Context Distillation <br> Haebin Shin, Lei Ji, Yeyun Gong, Sungdong Kim, Eunbi Choi, Minjoon Seo | | Github Paper |

| SWITCH: Studying with Teacher for Knowledge Distillation of Large Language Models <br> Jahyun Koo, Yerin Hwang, Yongil Kim, Taegwan Kang, Hyunkyung Bae, Kyomin Jung | | Paper |

| Beyond Autoregression: Fast LLMs via Self-Distillation Through Time <br> Justin Deschenaux, Caglar Gulcehre | | Github Paper |

| Pre-training Distillation for Large Language Models: A Design Space Exploration <br> Hao Peng, Xin Lv, Yushi Bai, Zijun Yao, Jiajie Zhang, Lei Hou, Juanzi Li | | Paper |

| MiniPLM: Knowledge Distillation for Pre-Training Language Models <br> Yuxian Gu, Hao Zhou, Fandong Meng, Jie Zhou, Minlie Huang | | Github Paper |

| Speculative Knowledge Distillation: Bridging the Teacher-Student Gap Through Interleaved Sampling <br> Wenda Xu, Rujun Han, Zifeng Wang, Long T. Le, Dhruv Madeka, Lei Li, William Yang Wang, Rishabh Agarwal, Chen-Yu Lee, Tomas Pfister | | Paper |

| Evolutionary Contrastive Distillation for Language Model Alignment <br> Julian Katz-Samuels, Zheng Li, Hyokun Yun, Priyanka Nigam, Yi Xu, Vaclav Petricek, Bing Yin, Trishul Chilimbi | | Paper |

| BabyLlama-2: Ensemble-Distilled Models Consistently Outperform Teachers With Limited Data <br> Jean-Loup Tastet, Inar Timiryasov | | Paper |

| EchoAtt: Attend, Copy, then Adjust for More Efficient Large Language Models <br> Hossein Rajabzadeh, Aref Jafari, Aman Sharma, Benyamin Jami, Hyock Ju Kwon, Ali Ghodsi, Boxing Chen, Mehdi Rezagholizadeh | | Paper |

| SKIntern: Internalizing Symbolic Knowledge for Distilling Better CoT Capabilities into Small Language Models <br> Huanxuan Liao, Shizhu He, Yupu Hao, Xiang Li, Yuanzhe Zhang, Kang Liu, Jun Zhao | | Github Paper |

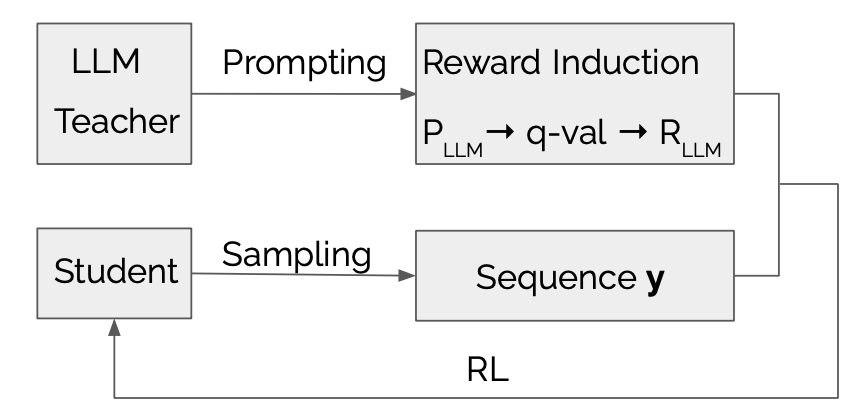

| LLMR: Knowledge Distillation with a Large Language Model-Induced Reward <br> Dongheng Li, Yongchang Hao, Lili Mou | | Github Paper |

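| Exploring and Enhancing the Transfer of Distribution in Knowledge Distillation for Autoregressive Language Models <br> Jun Rao, Xuebo Liu, Zepeng Lin, Liang Ding, Jing Li, Dacheng Tao | | Paper |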

| Efficient Knowledge Distillation: Empowering Small Language Models with Teacher Model Insights <br> Mohamad Ballout, Ulf Krumnack, Gunther Heidemann, Kai-Uwe Kühnberger | | Paper |

| The Mamba in the Llama: Distilling and Accelerating Hybrid Models <br> Junxiong Wang, Daniele Paliotta, Avner May, Alexander M. Rush, Tri Dao | | Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

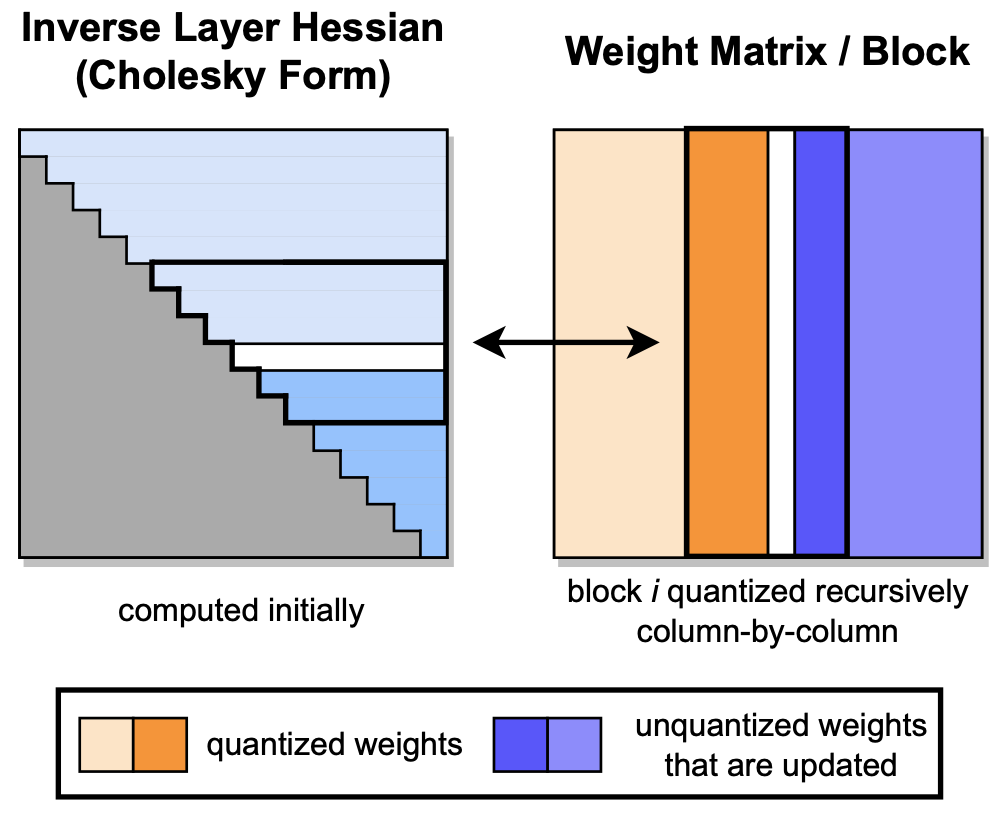

| GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers <br> Elias Frantar, Saleh Ashkboos, Torsten Hoefler, Dan Alistarh | | Github Paper |

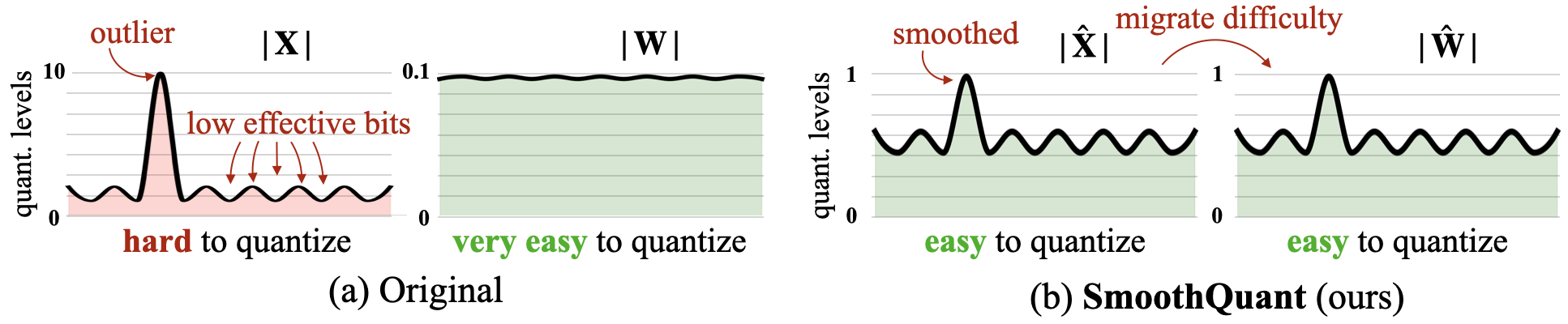

| SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models <br> Guangxuan Xiao, Ji Lin, Mickael Seznec, Hao Wu, Julien Demouth, Song Han | | Github Paper |

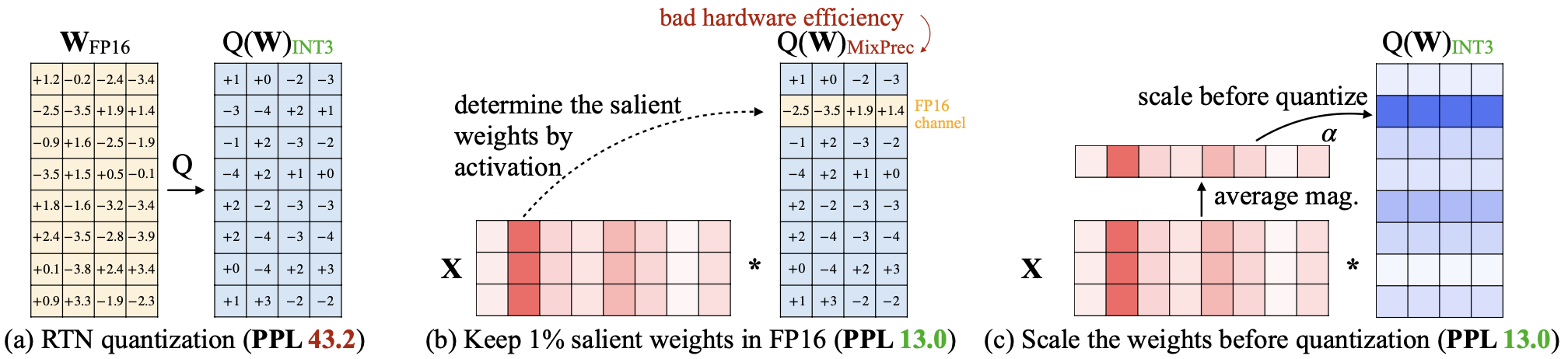

| AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration <br> Ji Lin, Jiaming Tang, Haotian Tang, Shang Yang, Xingyu Dang, Song Han | | Github Paper |

| OmniQuant: Omnidirectionally Calibrated Quantization for Large Language Models <br> Wenqi Shao, Mengzhao Chen, Zhaoyang Zhang, Peng Xu, Lirui Zhao, Zhiqian Li, Kaipeng Zhang, Peng Gao, Yu Qiao, Ping Luo | | Github Paper |

| SKIM: Any-bit Quantization Pushing The Limits of Post-Training Quantization <br> Runsheng Bai, Qiang Liu, Bo Liu | | Paper |

| CPTQuant - A Novel Mixed Precision Post-Training Quantization Techniques for Large Language Models <br> Amitash Nanda, Sree Bhargavi Balija, Debashis Sahoo | | Paper |

| Anda: Unlocking Efficient LLM Inference with a Variable-Length Grouped Activation Data Format <br> Chao Fang, Man Shi, Robin Geens, Arne Symons, Zhongfeng Wang, Marian Verhelst | | Paper |

| MixPE: Quantization and Hardware Co-design for Efficient LLM Inference <br> Yu Zhang, Mingzi Wang, Lancheng Zou, Wulong Liu, Hui-Ling Zhen, Mingxuan Yuan, Bei Yu | | Paper |

| BitMoD: Bit-serial Mixture-of-Datatype LLM Acceleration <br> Yuzong Chen, Ahmed F. AbouElhamayed, Xilai Dai, Yang Wang, Marta Andronic, George A. Constantinides, Mohamed S. Abdelfattah | | Github Paper |

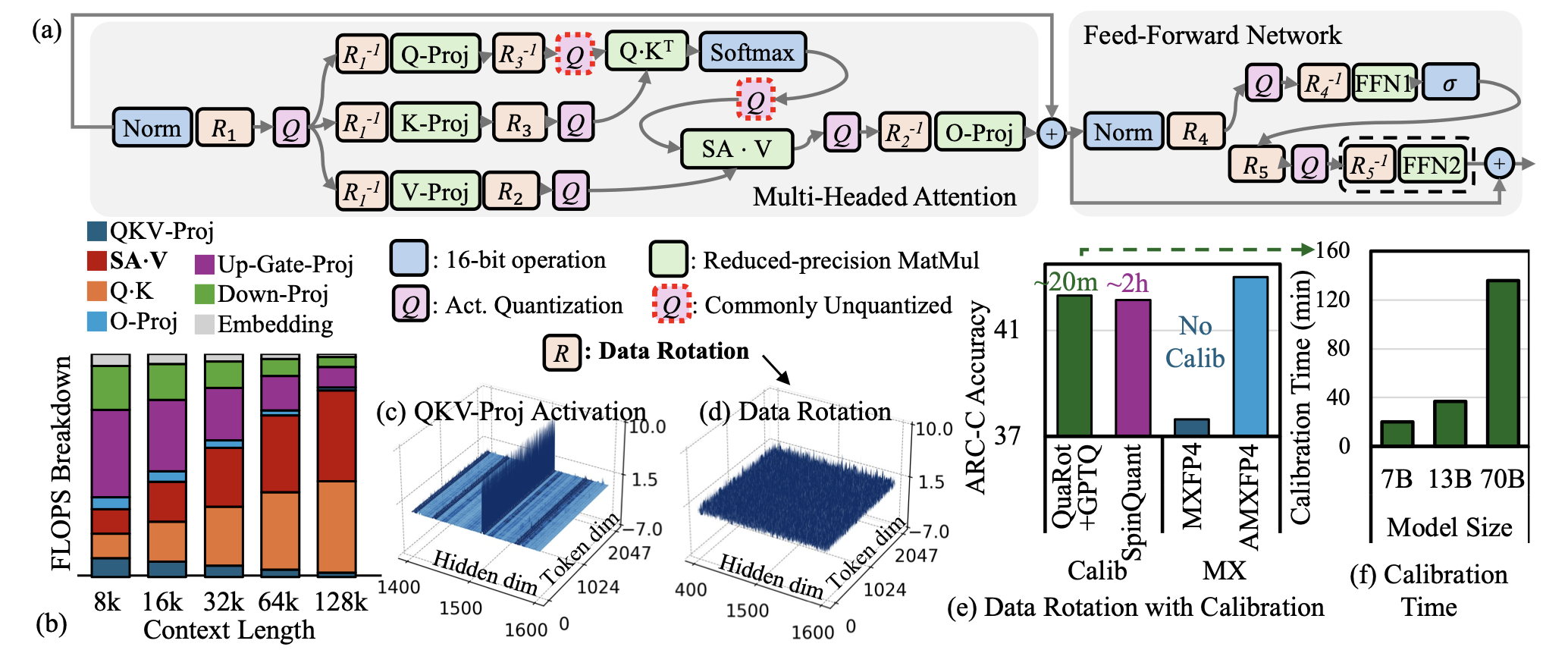

| AMXFP4: Taming Activation Outliers with Asymmetric Microscaling Floating-Point for 4-bit LLM Inference <br> Janghwan Lee, Jiwoong Park, Jinseok Kim, Yongjik Kim, Jungju Oh, Jinwook Oh, Jungwook Choi | | Paper |

| Bi-Mamba: Towards Accurate 1-Bit State Space Models <br> Shengkun Tang, Liqun Ma, Haonan Li, Mingjie Sun, Zhiqiang Shen | | Paper |

| "Give Me BF16 or Give Me Death"? Accuracy-Performance Trade-Offs in LLM Quantization <br> Eldar Kurtic, Alexandre Marques, Shubhra Pandit, Mark Kurtz, Dan Alistarh | | Paper |

| GWQ: Gradient-Aware Weight Quantization for Large Language Models <br> Yihua Shao, Siyu Liang, Xiaolin Lin, Zijian Ling, Zixian Zhu et al. | | Paper |

| A Comprehensive Study on Quantization Techniques for Large Language Models <br> Jiedong Lang, Zhehao Guo, Shuyu Huang | | Paper |

| BitNet a4.8: 4-bit Activations for 1-bit LLMs <br> Hongyu Wang, Shuming Ma, Furu Wei | | Paper |

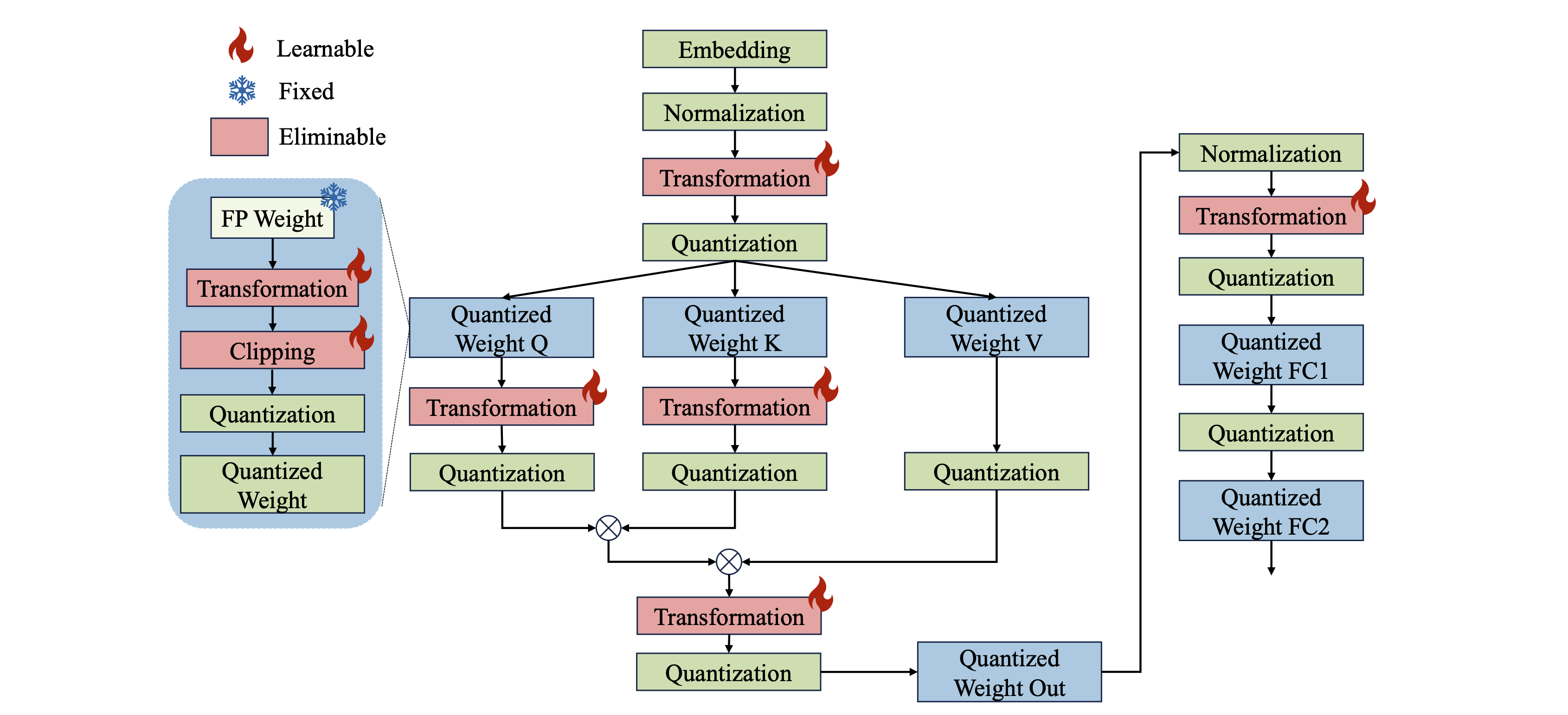

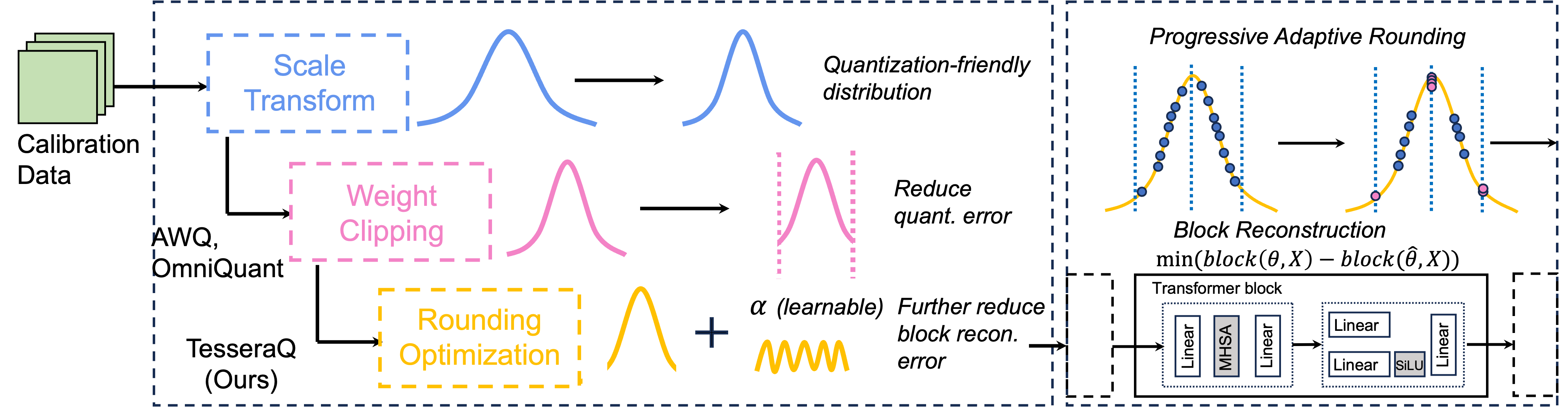

| TesseraQ: Ultra Low-Bit LLM Post-Training Quantization with Block Reconstruction <br> Yuhang Li, Priyadarshini Panda | | Github Paper |

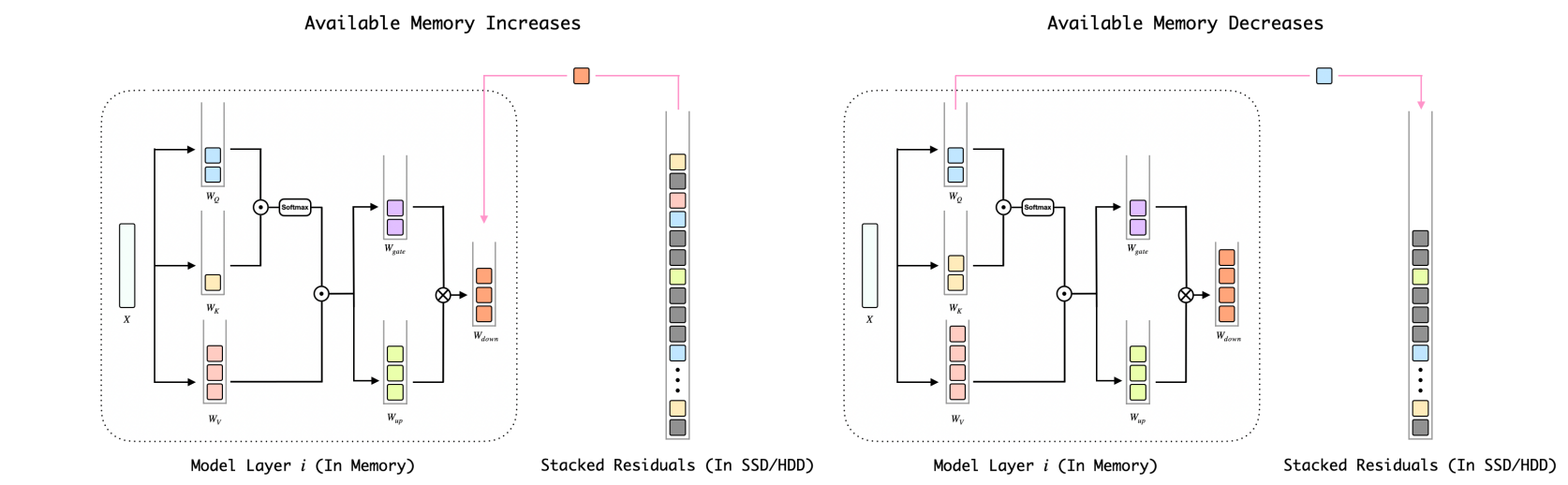

| BitStack: Fine-Grained Size Control for Compressed Large Language Models in Variable Memory Environments <br> Xinghao Wang, Pengyu Wang, Bo Wang, Dong Zhang, Yunhua Zhou, Xipeng Qiu | | Github Paper |

| The Impact of Inference Acceleration Strategies on Bias of LLMs <br> Elisabeth Kirsten, Ivan Habernal, Vedant Nanda, Muhammad Bilal Zafar | | Paper |

| Understanding the Difficulty of Low-Precision Post-Training Quantization of Large Language Models <br> Zifei Xu, Sayeh Sharify, Wanzin Yazar, Tristan Webb, Xin Wang | | Paper |

| 1-bit AI Infra: Part 1.1, Fast and Lossless BitNet b1.58 Inference on CPUs <br> Jinheng Wang, Hansong Zhou, Ting Song, Shaoguang Mao, Shuming Ma, Hongyu Wang, Yan Xia, Furu Wei | | Github Paper |

| QuAILoRA: Quantization-Aware Initialization for LoRA <br> Neal Lawton, Aishwarya Padmakumar, Judith Gaspers, Jack FitzGerald, Anoop Kumar, Greg Ver Steeg, Aram Galstyan | | Paper |

| Evaluating Quantized Large Language Models for Code Generation on Low-Resource Language Benchmarks <br> Enkhbold Nyamsuren | | Paper |

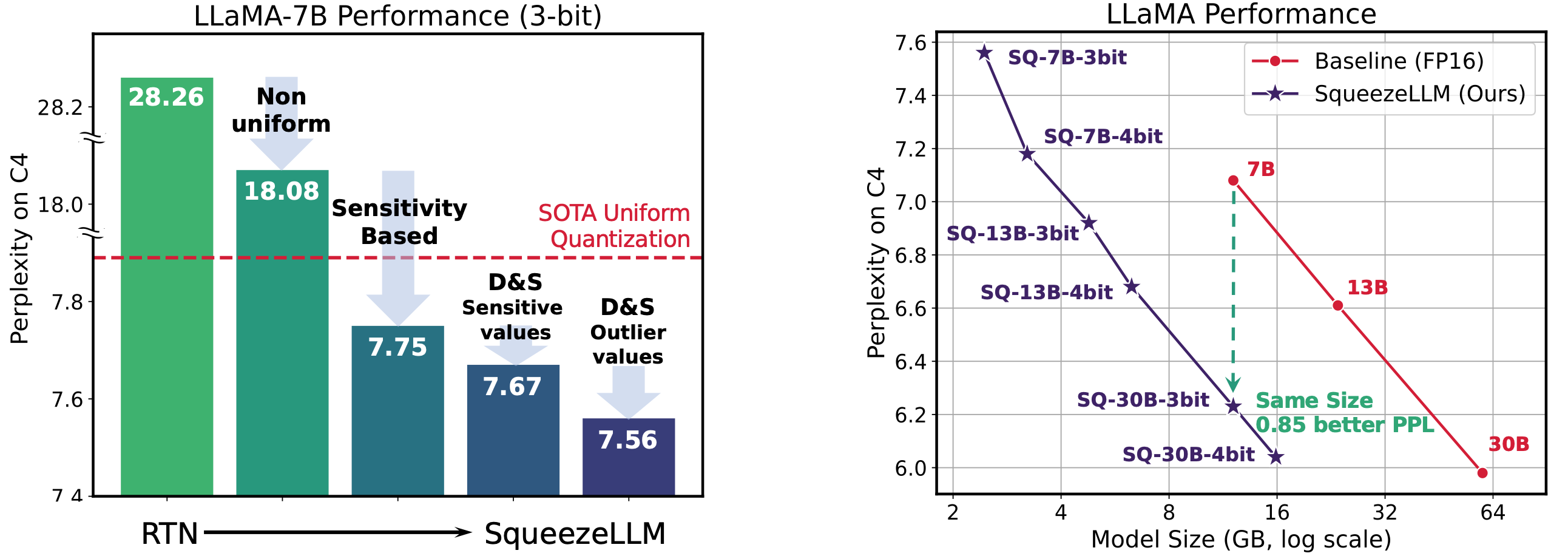

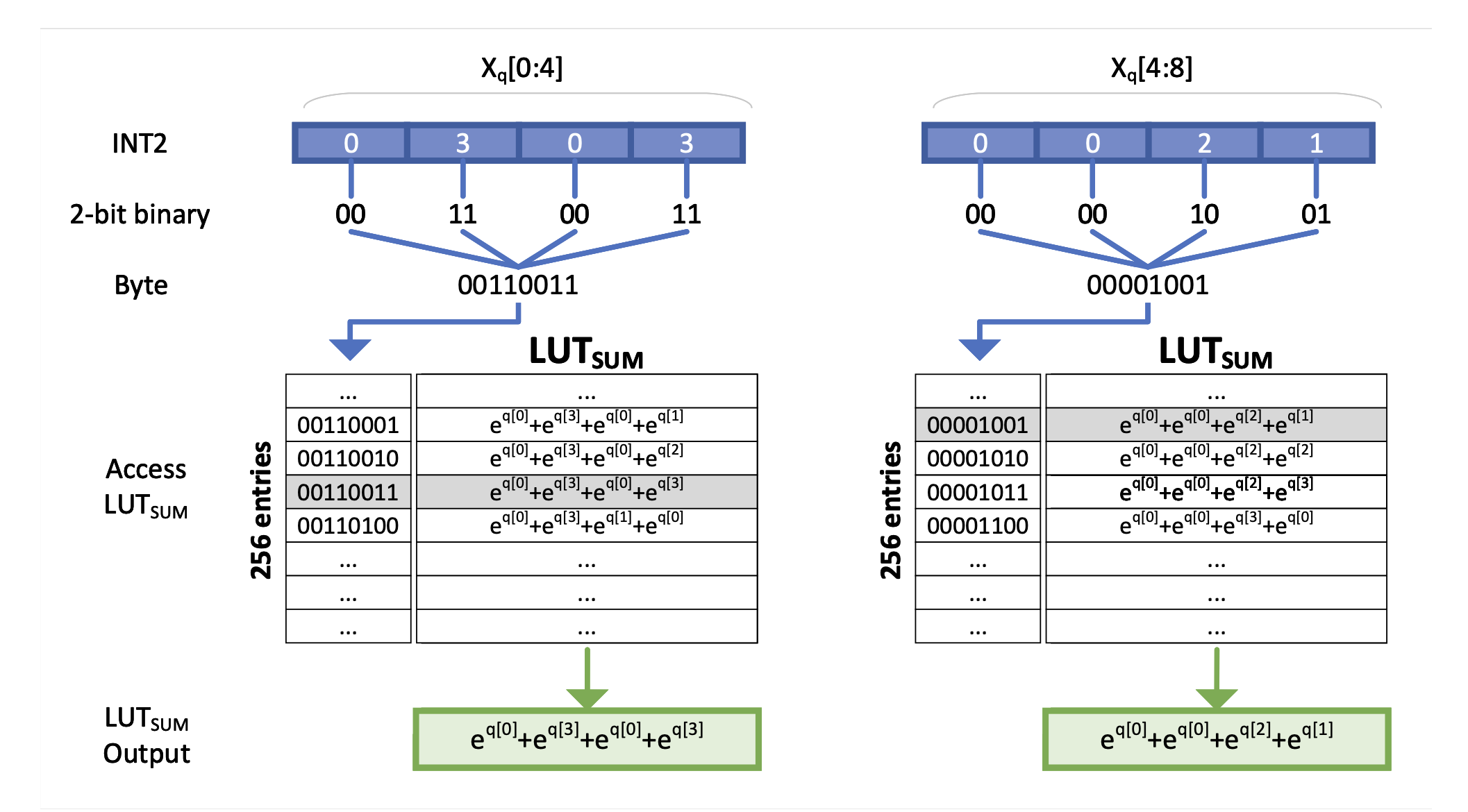

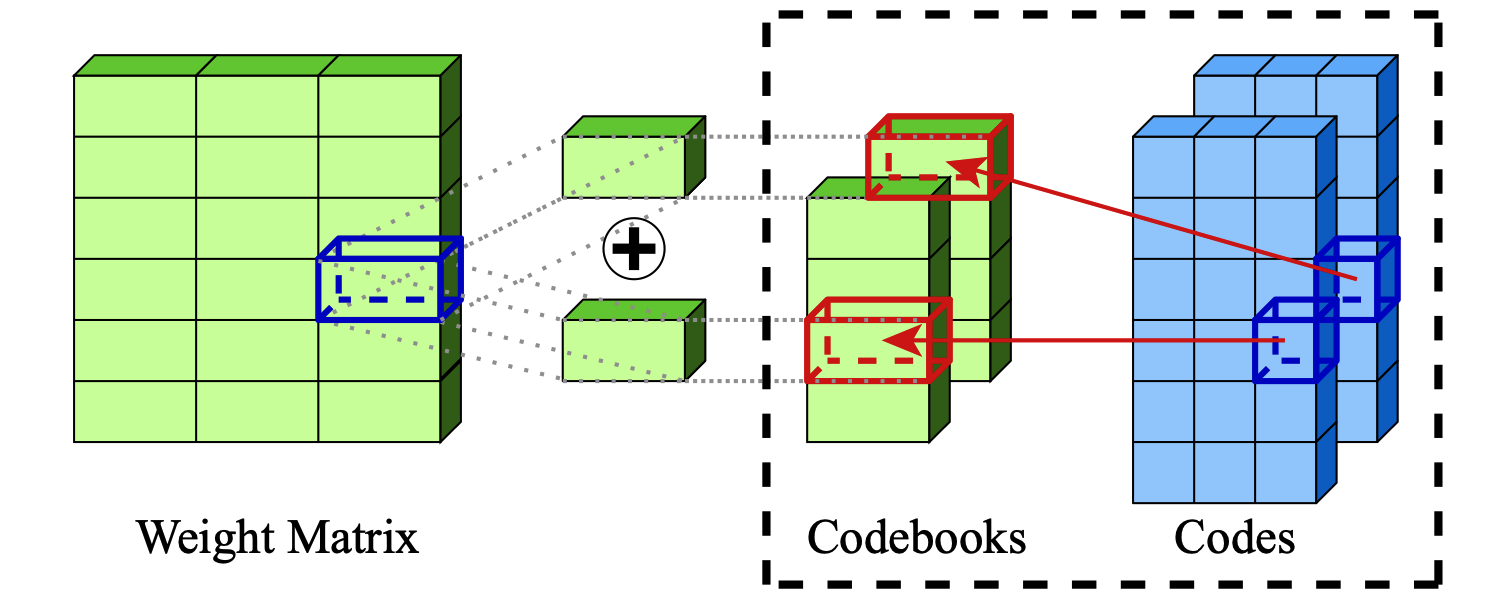

| SqueezeLLM: Dense-and-Sparse Quantization <br> Sehoon Kim, Coleman Hooper, Amir Gholami, Zhen Dong, Xiuyu Li, Sheng Shen, Michael W. Mahoney, Kurt Keutzer | | Github Paper |

| Pyramid Vector Quantization for LLMs <br> Tycho F. A. van der Ouderaa, Maximilian L. Croci, Agrin Hilmkil, James Hensman | | Paper |

| SeedLM: Compressing LLM Weights into Seeds of Pseudo-Random Generators <br> Rasoul Shafipour, David Harrison, Maxwell Horton, Jeffrey Marker, Houman Bedayat, Sachin Mehta, Mohammad Rastegari, Mahyar Najibi, Saman Naderiparizi | | Paper |

| FlatQuant: Flatness Matters for LLM Quantization <br> Yuxuan Sun, Ruikang Liu, Haoli Bai, Han Bao, Kang Zhao, Yuening Li, Jiaxin Hu, Xianzhi Yu, Lu Hou, Chun Yuan, Xin Jiang, Wulong Liu, Jun Yao | | Github Paper |

| SLiM: One-shot Quantized Sparse Plus Low-rank Approximation of LLMs <br> Mohammad Mozaffari, Maryam Mehri Dehnavi | | Github Paper |

| Scaling Laws for Post-Training Quantized Large Language Models <br> Zifei Xu, Alexander Lan, Wanzin Yazar, Tristan Webb, Sayeh Sharify, Xin Wang | | Paper |

| Continuous Approximations for Improving Quantization Aware Training of LLMs <br> He Li, Jianhang Hong, Yuanzhuo Wu, Snehal Adbol, Zonglin Li | | Paper |

| DAQ: Density-Aware Post-Training Weight-Only Quantization For LLMs <br> Yingsong Luo, Ling Chen | | Github Paper |

| Quamba: A Post-Training Quantization Recipe for Selective State Space Models <br> Hung-Yueh Chiang, Chi-Chih Chang, Natalia Frumkin, Kai-Chiang Wu, Diana Marculescu | | Github Paper |

| AsymKV: Enabling 1-Bit Quantization of KV Cache with Layer-Wise Asymmetric Quantization Configurations <br> Qian Tao, Wenyuan Yu, Jingren Zhou | | Paper |

| Channel-Wise Mixed-Precision Quantization for Large Language Models <br> Zihan Chen, Bike Xie, Jundong Li, Cong Shen | | Paper |

| Progressive Mixed-Precision Decoding for Efficient LLM Inference <br> Hao Mark Chen, Fuwen Tan, Alexandros Kouris, Royson Lee, Hongxiang Fan, Stylianos I. Venieris | | Paper |

| EXAQ: Exponent Aware Quantization For LLMs Acceleration <br> Moran Shkolnik, Maxim Fishman, Brian Chmiel, Hilla Ben-Yaacov, Ron Banner, Kfir Yehuda Levy | | Github Paper |

| PrefixQuant: Static Quantization Beats Dynamic through Prefixed Outliers in LLMs <br> Mengzhao Chen, Yi Liu, Jiahao Wang, Yi Bin, Wenqi Shao, Ping Luo | | Github Paper |

| Extreme Compression of Large Language Models via Additive Quantization <br> Vage Egiazarian, Andrei Panferov, Denis Kuznedelev, Elias Frantar, Artem Babenko, Dan Alistarh | | Github Paper |

| Scaling Laws for Mixed Quantization in Large Language Models <br> Zeyu Cao, Cheng Zhang, Pedro Gimenes, Jianqiao Lu, Jianyi Cheng, Yiren Zhao | | Paper |

| PalmBench: A Comprehensive Benchmark of Compressed Large Language Models on Mobile Platforms <br> Yilong Li, Jingyu Liu, Hao Zhang, M Badri Narayanan, Utkarsh Sharma, Shuai Zhang, Pan Hu, Yijing Zeng, Jayaram Raghuram, Suman Banerjee | | Paper |

| CrossQuant: A Post-Training Quantization Method with Smaller Quantization Kernel for Precise Large Language Model Compression <br> Wenyuan Liu, Xindian Ma, Peng Zhang, Yan Wang | | Paper |

| SageAttention: Accurate 8-Bit Attention for Plug-and-play Inference Acceleration <br> Jintao Zhang, Jia Wei, Pengle Zhang, Jun Zhu, Jianfei Chen | | Paper |

| Addition is All You Need for Energy-efficient Language Models <br> Hongyin Luo, Wei Sun | | Paper |

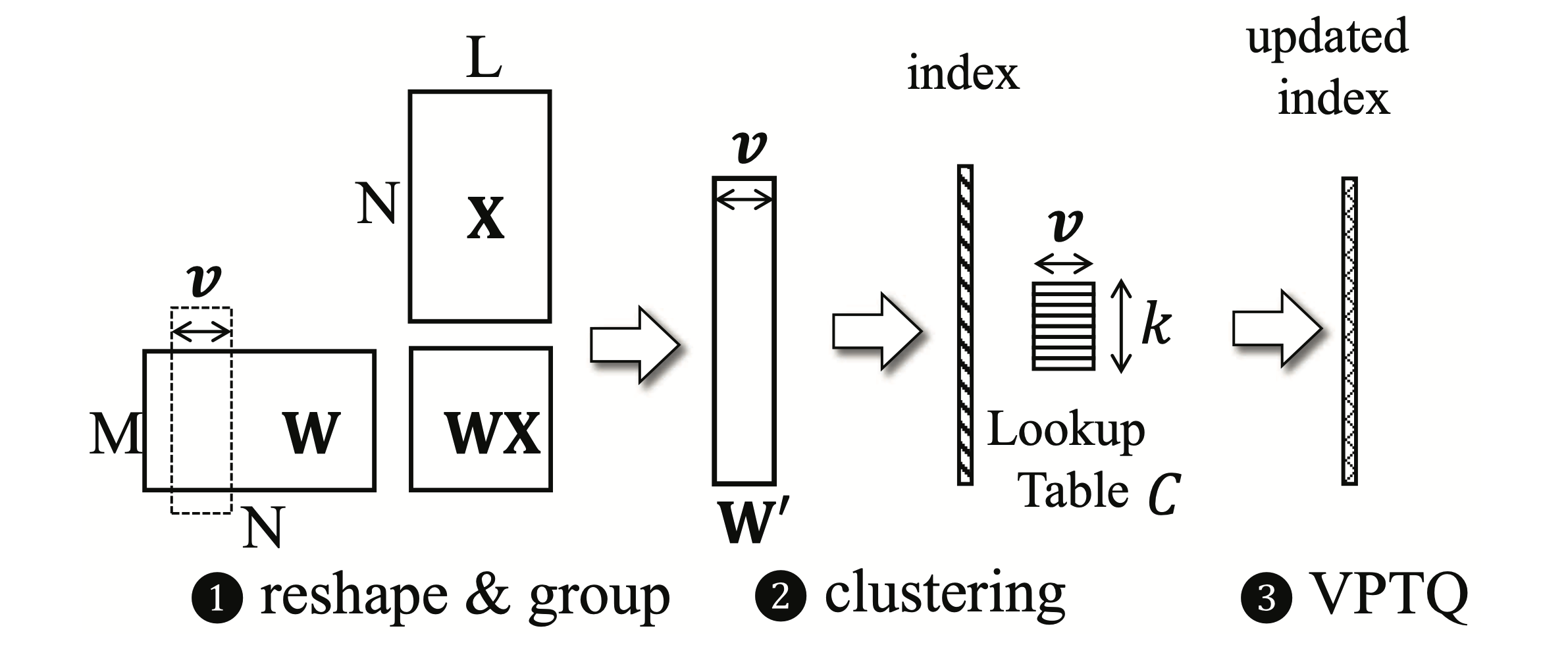

| VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models <br> Yifei Liu, Jicheng Wen, Yang Wang, Shengyu Ye, Li Lyna Zhang, Ting Cao, Cheng Li, Mao Yang | | Github Paper |

| INT-FlashAttention: Enabling Flash Attention for INT8 Quantization <br> Shimao Chen, Zirui Liu, Zhiying Wu, Ce Zheng, Peizhuang Cong, Zihan Jiang, Yuhan Wu, Lei Su, Tong Yang | | Github Paper |

| Accumulator-Aware Post-Training Quantization <br> Ian Colbert, Fabian Grob, Giuseppe Franco, Jinjie Zhang, Rayan Saab | | Paper |

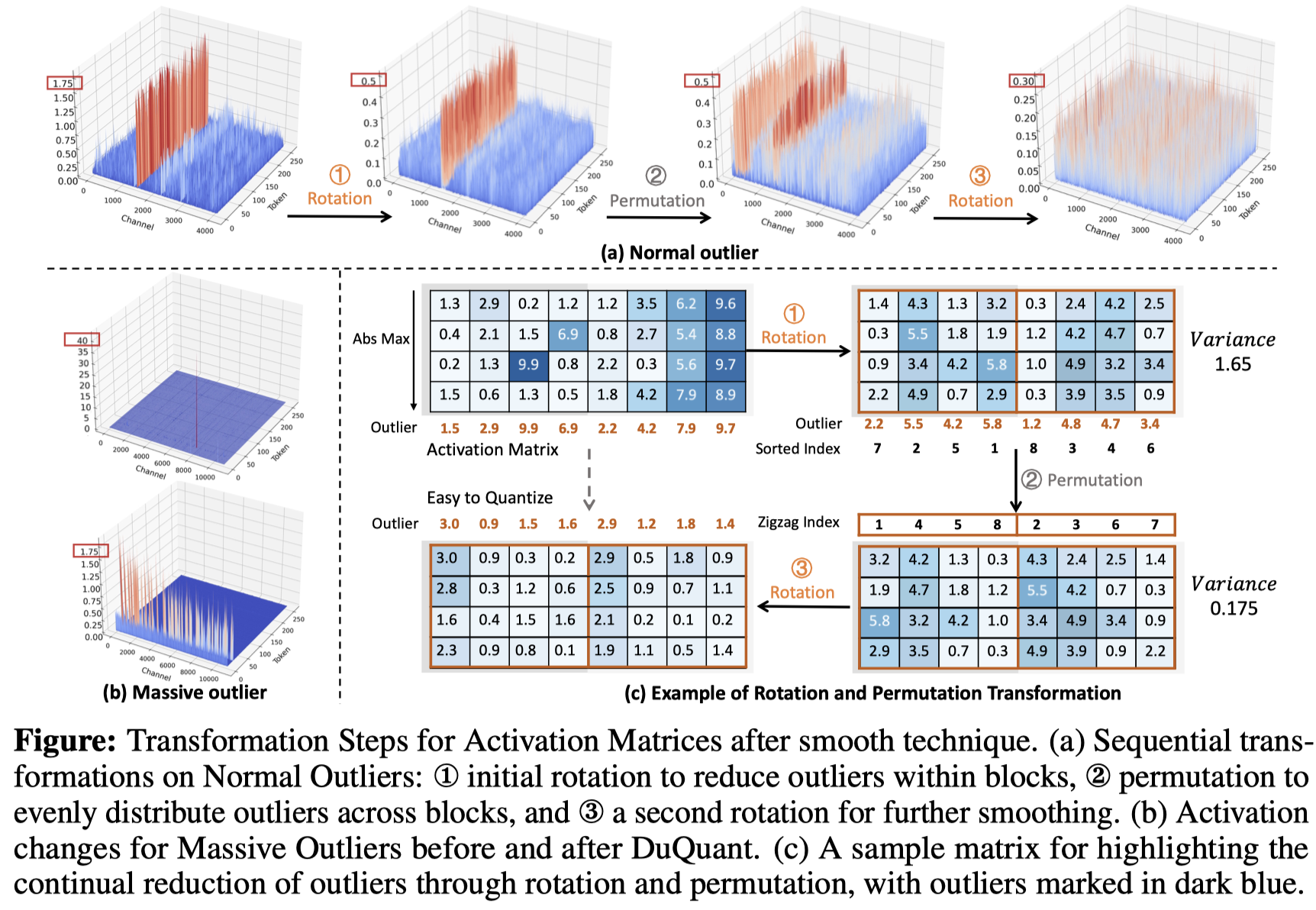

| DuQuant: Distributing Outliers via Dual Transformation Makes Stronger Quantized LLMs <br> Haokun Lin, Haobo Xu, Yichen Wu, Jingzhi Cui, Yingtao Zhang, Linzhan Mou, Linqi Song, Zhenan Sun, Ying Wei | | Github Paper |

| A Comprehensive Evaluation of Quantized Instruction-Tuned Large Language Models: An Experimental Analysis up to 405B <br> Jemin Lee, Sihyeong Park, Jinse Kwon, Jihun Oh, Yongin Kwon | | Paper |

| The Uniqueness of LLaMA3-70B with Per-Channel Quantization: An Empirical Study <br> Minghai Qin | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

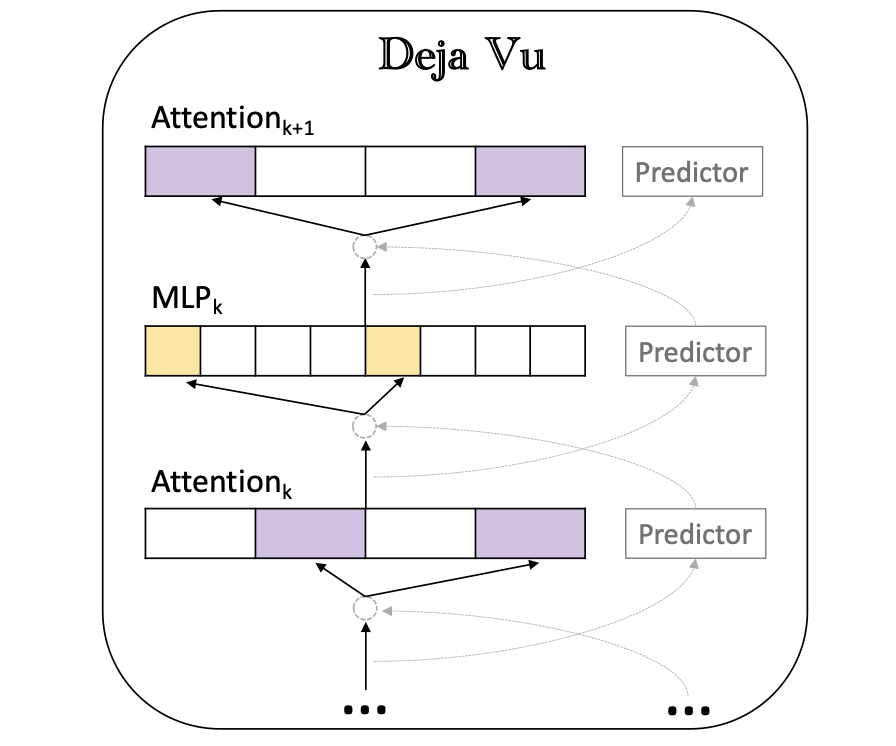

| Deja Vu: Contextual Sparsity for Efficient LLMs at Inference Time <br> Zichang Liu, Jue Wang, Tri Dao, Tianyi Zhou, Binhang Yuan, Zhao Song, Anshumali Shrivastava, Ce Zhang, Yuandong Tian, Christopher Re, Beidi Chen | | Github Paper |

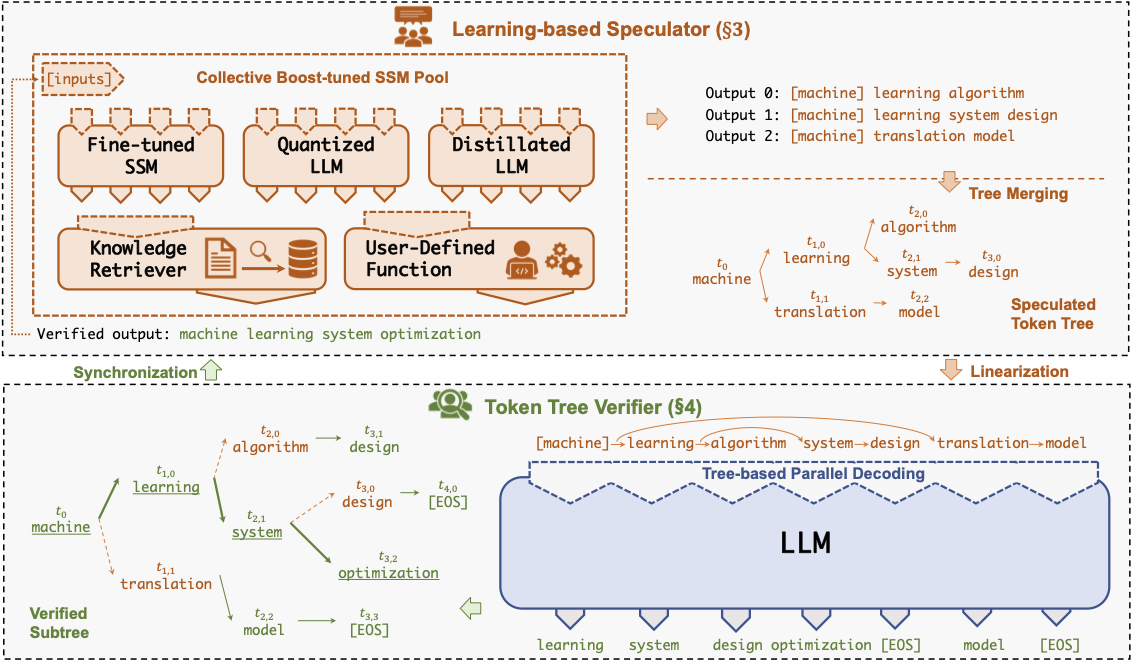

| SpecInfer: Accelerating Generative LLM Serving with Speculative Inference and Token Tree Verification <br> Xupeng Miao, Gabriele Oliaro, Zhihao Zhang, Xinhao Cheng, Zeyu Wang, Rae Ying Yee Wong, Zhuoming Chen, Daiyaan Arfeen, Reyna Abhyankar, Zhihao Jia | | Github Paper |

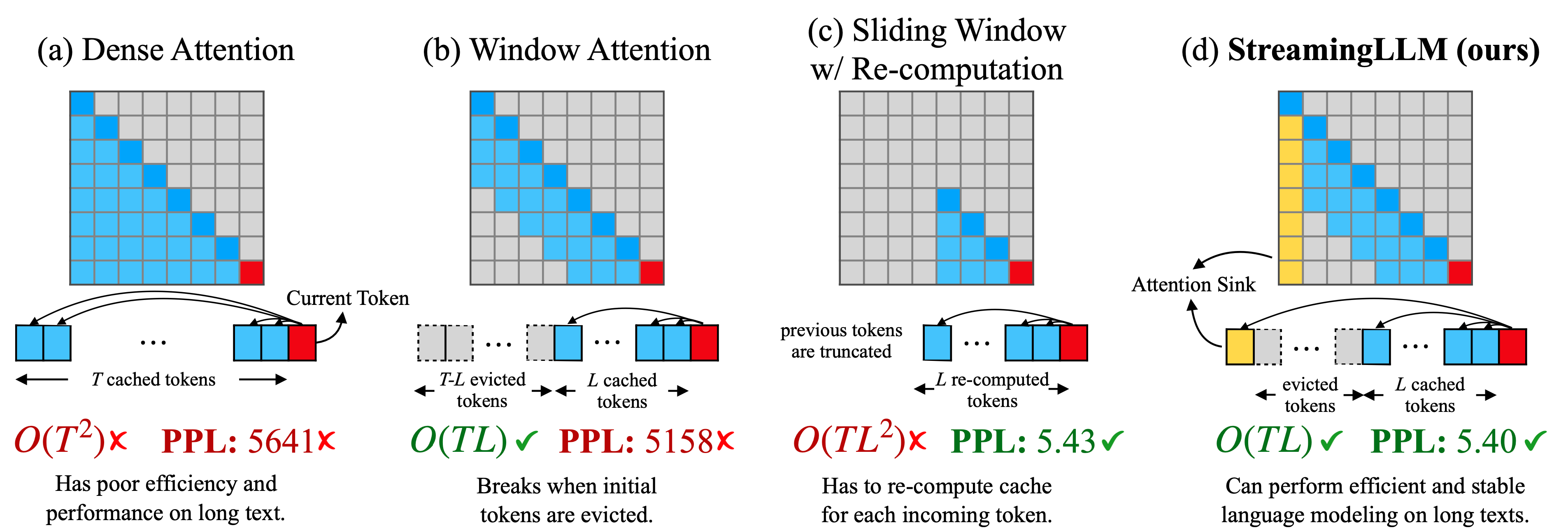

| Efficient Streaming Language Models with Attention Sinks <br> Guangxuan Xiao, Yuandong Tian, Beidi Chen, Song Han, Mike Lewis | | Github Paper |

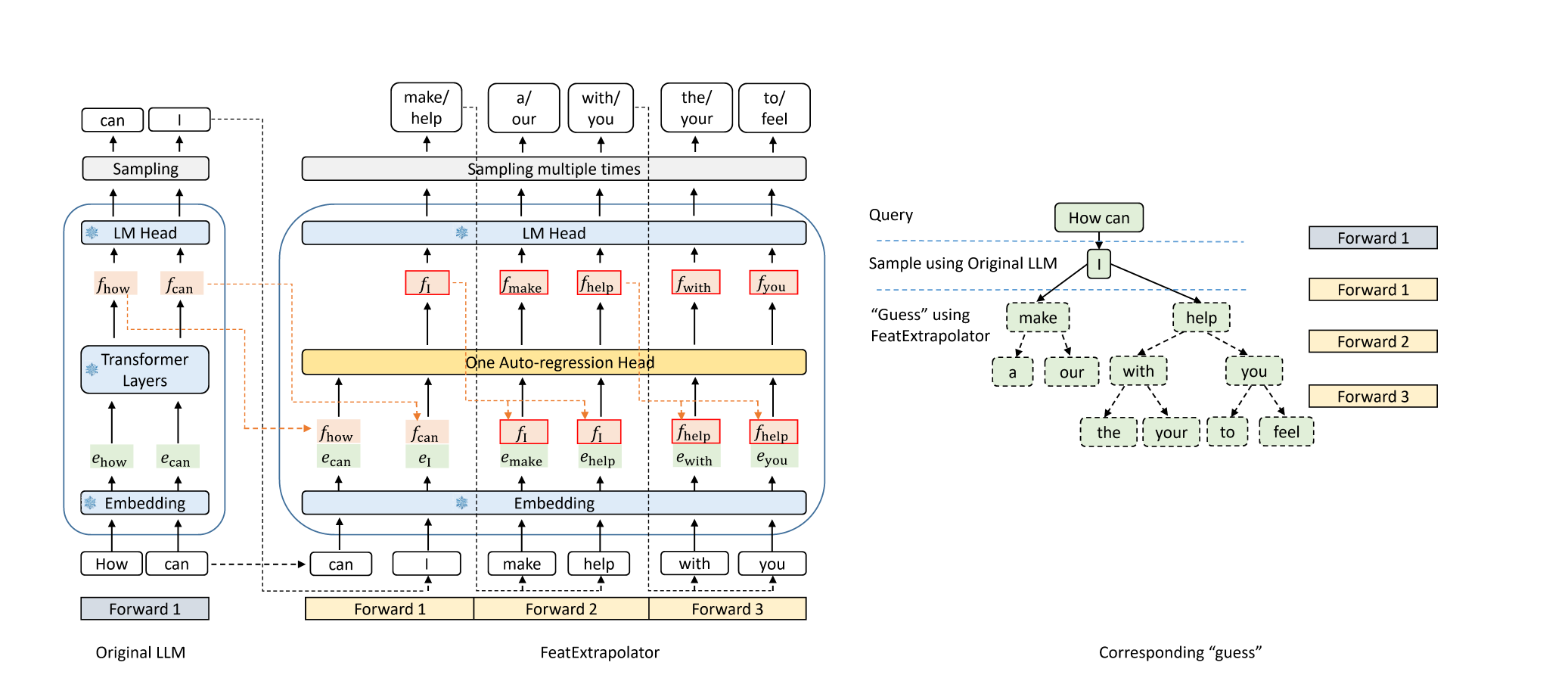

| EAGLE: Lossless Acceleration of LLM Decoding by Feature Extrapolation <br> Yuhui Li, Chao Zhang, Hongyang Zhang | | Github Blog |

| Medusa: Simple LLM Inference Acceleration Framework with Multiple Decoding Heads <br> Tianle Cai, Yuhong Li, Zhengyang Geng, Hongwu Peng, Jason D. Lee, Deming Chen, Tri Dao | | Github Paper |

| Speculative Decoding with CTC-based Draft Model for LLM Inference Acceleration <br> Zhuofan Wen, Shangtong Gui, Yang Feng | | Paper |

| PLD+: Accelerating LLM Inference by Leveraging Language Model Artifacts <br> Shwetha Somasundaram, Anirudh Phukan, Apoorv Saxena | | Paper |

| FastDraft: How to Train Your Draft <br> Ofir Zafrir, Igor Margulis, Dorin Shteyman, Guy Boudoukh | | Paper |

| SMoA: Improving Multi-agent Large Language Models with Sparse Mixture-of-Agents <br> Dawei Li, Zhen Tan, Peijia Qian, Yifan Li, Kumar Satvik Chaudhary, Lijie Hu, Jiayi Shen | | Github Paper |

| The N-Grammys: Accelerating Autoregressive Inference with Learning-Free Batched Speculation <br> Lawrence Stewart, Matthew Trager, Sujan Kumar Gonugondla | | Paper |

| Accelerated AI Inference via Dynamic Execution Methods <br> Haim Barad, Jascha Achterberg, Tien Pei Chou, Jean Yu | | Paper |

| SuffixDecoding: A Model-Free Approach to Speeding Up Large Language Model Inference <br> Gabriele Oliaro, Zhihao Jia, Daniel Campos, Aurick Qiao | | Paper |

| Dynamic Strategy Planning for Efficient Question Answering with Large Language Models <br> Tanmay Parekh, Pradyot Prakash, Alexander Radovic, Akshay Shekher, Denis Savenkov | | Paper |

| MagicPIG: LSH Sampling for Efficient LLM Generation <br> Zhuoming Chen, Ranajoy Sadhukhan, Zihao Ye, Yang Zhou, Jianyu Zhang, Niklas Nolte, Yuandong Tian, Matthijs Douze, Leon Bottou, Zhihao Jia, Beidi Chen | | Github Paper |

| Faster Language Models with Better Multi-Token Prediction Using Tensor Decomposition <br> Artem Basharin, Andrei Chertkov, Ivan Oseledets | | Paper |

| Efficient Inference for Augmented Large Language Models <br> Rana Shahout, Cong Liang, Shiji Xin, Qianru Lao, Yong Cui, Minlan Yu, Michael Mitzenmacher | | Paper |

| Dynamic Vocabulary Pruning in Early-Exit LLMs <br> Jort Vincenti, Karim Abdel Sadek, Joan Velja, Matteo Nulli, Metod Jazbec | | Github Paper |

| CoreInfer: Accelerating Large Language Model Inference with Semantics-Inspired Adaptive Sparse Activation <br> Qinsi Wang, Saeed Vahidian, Hancheng Ye, Jianyang Gu, Jianyi Zhang, Yiran Chen | | Github Paper |

| DuoAttention: Efficient Long-Context LLM Inference with Retrieval and Streaming Heads <br> Guangxuan Xiao, Jiaming Tang, Jingwei Zuo, Junxian Guo, Shang Yang, Haotian Tang, Yao Fu, Song Han | | Github Paper |

| DySpec: Faster Speculative Decoding with Dynamic Token Tree Structure <br> Yunfan Xiong, Ruoyu Zhang, Yanzeng Li, Tianhao Wu, Lei Zou | | Paper |

| QSpec: Speculative Decoding with Complementary Quantization Schemes <br> Juntao Zhao, Wenhao Lu, Sheng Wang, Lingpeng Kong, Chuan Wu | | Paper |

| TidalDecode: Fast and Accurate LLM Decoding with Position Persistent Sparse Attention <br> Lijie Yang, Zhihao Zhang, Zhuofu Chen, Zikun Li, Zhihao Jia | | Paper |

| ParallelSpec: Parallel Drafter for Efficient Speculative Decoding <br> Zilin Xiao, Hongming Zhang, Tao Ge, Siru Ouyang, Vicente Ordonez, Dong Yu | | Paper |

| SWIFT: On-the-Fly Self-Speculative Decoding for LLM Inference Acceleration <br> Heming Xia, Yongqi Li, Jun Zhang, Cunxiao Du, Wenjie Li | | Github Paper |

| TurboRAG: Accelerating Retrieval-Augmented Generation with Precomputed KV Caches for Chunked Text <br> Songshuo Lu, Hua Wang, Yutian Rong, Zhi Chen, Yaohua Tang | | Github Paper |

| A Little Goes a Long Way: Efficient Long Context Training and Inference with Partial Contexts <br> Suyu Ge, Xihui Lin, Yunan Zhang, Jiawei Han, Hao Peng | | Paper |

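| Mnemosyne: Parallelization Strategies for Efficiently Serving Multi-Million Context Length LLM Inference Requests Without Approximations <br> Amey Agrawal, Junda Chen, Íñigo Goiri, Ramachandran Ramjee, Chaojie Zhang, Alexey Tumanov, Esha Choukse | | Paper |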

| Discovering the Gems in Early Layers: Accelerating Long-Context LLMs with 1000x Input Token Reduction <br> Zhenmei Shi, Yifei Ming, Xuan-Phi Nguyen, Yingyu Liang, Shafiq Joty | | Github Paper |

| Dynamic-Width Speculative Beam Decoding for Efficient LLM Inference <br> Zongyue Qin, Zifan He, Neha Prakriya, Jason Cong, Yizhou Sun | | Paper |

| CritiPrefill: A Segment-wise Criticality-based Approach for Prefilling Acceleration in LLMs <br> Junlin Lv, Yuan Feng, Xike Xie, Xin Jia, Qirong Peng, Guiming Xie | | Github Paper |

| RetrievalAttention: Accelerating Long-Context LLM Inference via Vector Retrieval <br> Di Liu, Meng Chen, Baotong Lu, Huiqiang Jiang, Zhenhua Han, Qianxi Zhang, Qi Chen, Chengruidong Zhang, Bailu Ding, Kai Zhang, Chen Chen, Fan Yang, Yuqing Yang, Lili Qiu | | Paper |

| Sirius: Contextual Sparsity with Correction for Efficient LLMs <br> Yang Zhou, Zhuoming Chen, Zhaozhuo Xu, Victoria Lin, Beidi Chen | | Github Paper |

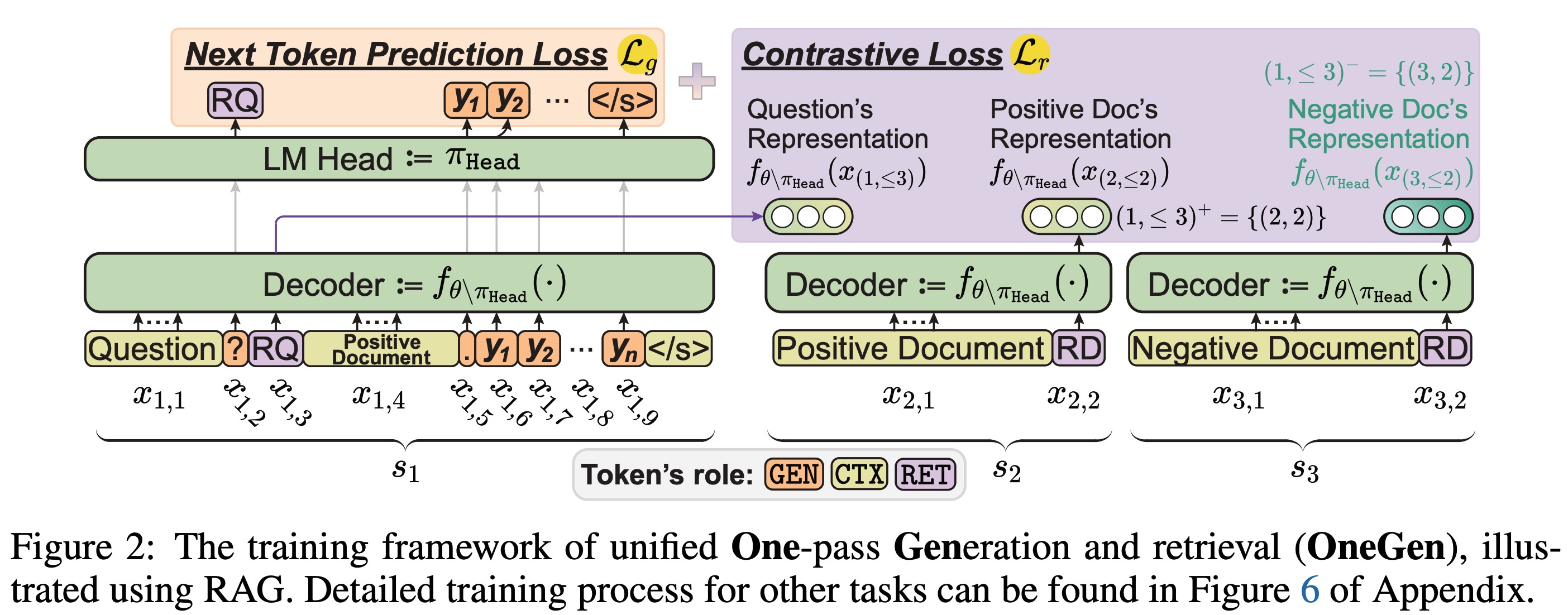

| OneGen: Efficient One-Pass Unified Generation and Retrieval for LLMs <br> Jintian Zhang, Cheng Peng, Mengshu Sun, Xiang Chen, Lei Liang, Zhiqiang Zhang, Jun Zhou, Huajun Chen, Ningyu Zhang | | Github Paper |

| Path-Consistency: Prefix Enhancement for Efficient Inference in LLM <br> Jiace Zhu, Yingtao Shen, Jie Zhao, An Zou | | Paper |

| Boosting Lossless Speculative Decoding via Feature Sampling and Partial Alignment Distillation <br> Lujun Gui, Bin Xiao, Lei Su, Weipeng Chen | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Fast Inference of Mixture-of-Experts Language Models with Offloading <br> Artyom Eliseev, Denis Mazur | | Github Paper |

| Condense, Don't Just Prune: Enhancing Efficiency and Performance in MoE Layer Pruning <br> Mingyu Cao, Gen Li, Jie Ji, Jiaqi Zhang, Xiaolong Ma, Shiwei Liu, Lu Yin | | Github Paper |

| Mixture of Cache-Conditional Experts for Efficient Mobile Device Inference <br> Andrii Skliar, Ties van Rozendaal, Romain Lepert, Todor Boinovski, Mart van Baalen, Markus Nagel, Paul Whatmough, Babak Ehteshami Bejnordi | | Paper |

| MoNTA: Accelerating Mixture-of-Experts Training with Network-Traffic-Aware Parallel Optimization <br> Jingming Guo, Yan Liu, Yu Meng, Zhiwei Tao, Banglan Liu, Gang Chen, Xiang Li | | Github Paper |

| MoE-I2: Compressing Mixture of Experts Models through Inter-Expert Pruning and Intra-Expert Low-Rank Decomposition <br> Cheng Yang, Yang Sui, Jinqi Xiao, Lingyi Huang, Yu Gong, Yuanlin Duan, Wenqi Jia, Miao Yin, Yu Cheng, Bo Yuan | | Github Paper |

| HOBBIT: A Mixed Precision Expert Offloading System for Fast MoE Inference <br> Peng Tang, Jiacheng Liu, Xiaofeng Hou, Yifei Pu, Jing Wang, Pheng-Ann Heng, Chao Li, Minyi Guo | | Paper |

| ProMoE: Fast MoE-based LLM Serving using Proactive Caching <br> Xiaoniu Song, Zihang Zhong, Rong Chen | | Paper |

| ExpertFlow: Optimized Expert Activation and Token Allocation for Efficient Mixture-of-Experts Inference <br> Xin He, Shunkang Zhang, Yuxin Wang, Haiyan Yin, Zihao Zeng, Shaohuai Shi, Zhenheng Tang, Xiaowen Chu, Ivor Tsang, Ong Yew Soon | | Paper |

| EPS-MoE: Expert Pipeline Scheduler for Cost-Efficient MoE Inference <br> Yulei Qian, Fancun Li, Xiangyang Ji, Xiaoyu Zhao, Jianchao Tan, Kefeng Zhang, Xunliang Cai | | Paper |

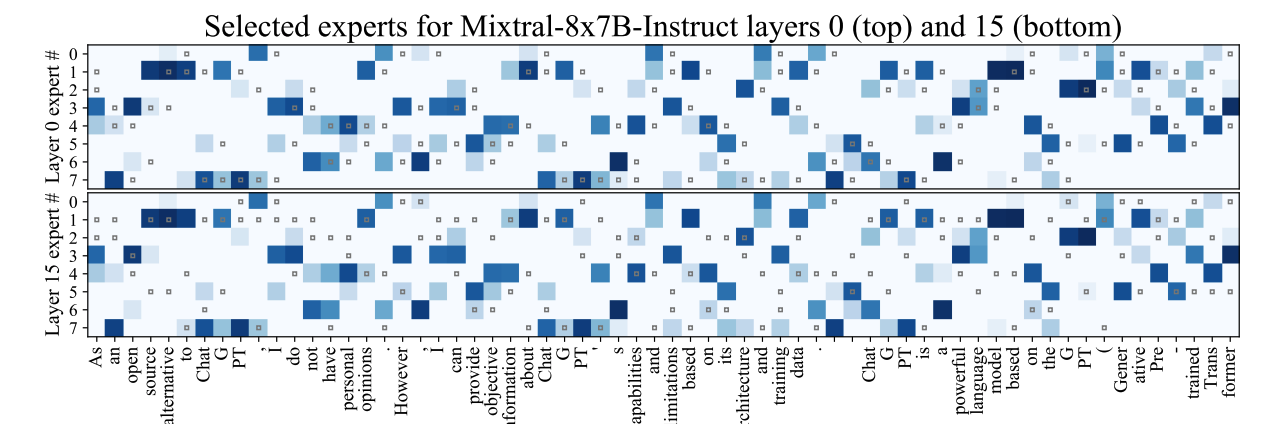

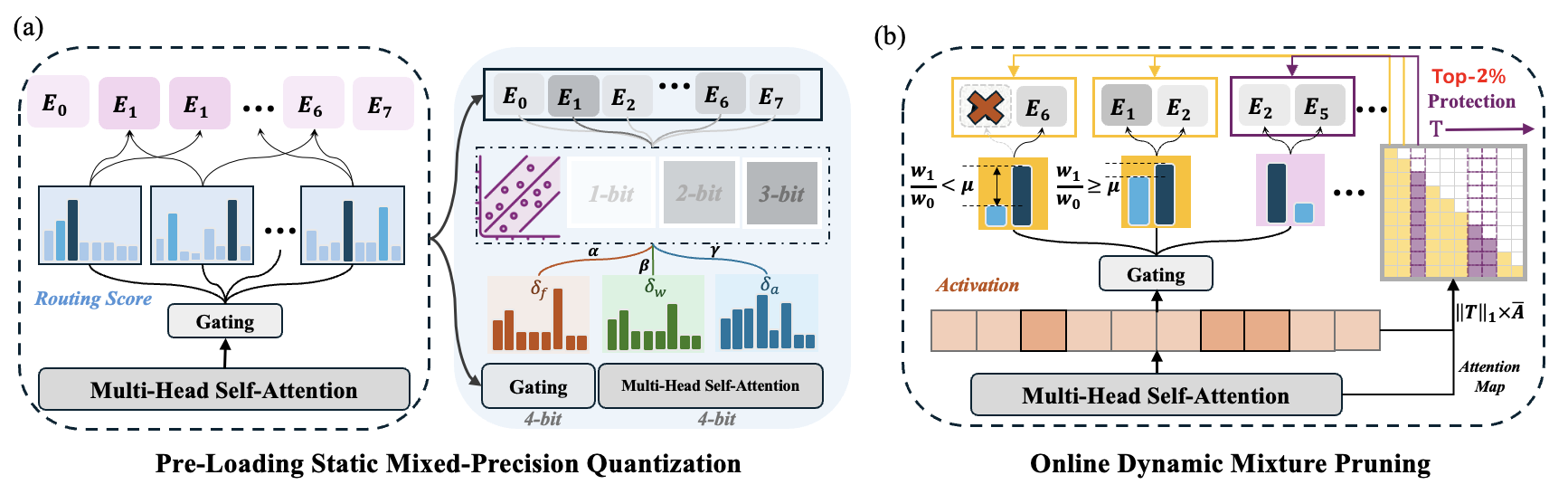

| MC-MoE: Mixture Compressor for Mixture-of-Experts LLMs Gains More <br> Wei Huang, Yue Liao, Jianhui Liu, Ruifei He, Haoru Tan, Shiming Zhang, Hongsheng Li, Si Liu, Xiaojuan Qi | | Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| MobiLlama: Towards Accurate and Lightweight Fully Transparent GPT <br> Omkar Thawakar, Ashmal Vayani, Salman Khan, Hisham Cholakal, Rao M. Anwer, Michael Felsberg, Tim Baldwin, Eric P. Xing, Fahad Shahbaz Khan | | Github Paper Model |

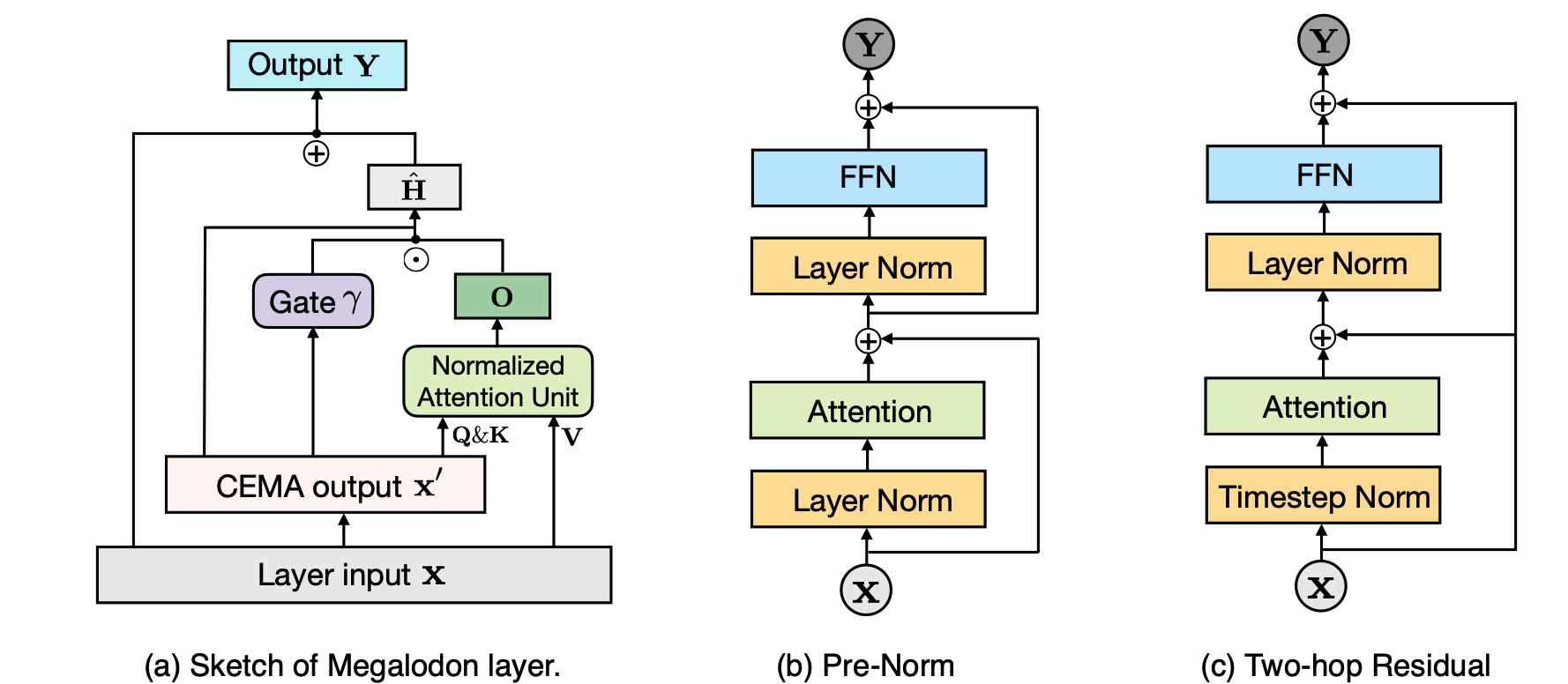

| Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length <br> Xuezhe Ma, Xiaomeng Yang, Wenhan Xiong, Beidi Chen, Lili Yu, Hao Zhang, Jonathan May, Luke Zettlemoyer, Omer Levy, Chunting Zhou | | Github Paper |

| Taipan: Efficient and Expressive State Space Language Models with Selective Attention <br> Chien Van Nguyen, Huy Huu Nguyen, Thang M. Pham, Ruiyi Zhang, Hanieh Deilamsalehy, Puneet Mathur, Ryan A. Rossi, Trung Bui, Viet Dac Lai, Franck Dernoncourt, Thien Huu Nguyen | | Paper |

| SeerAttention: Learning Intrinsic Sparse Attention in Your LLMs <br> Yizhao Gao, Zhichen Zeng, Dayou Du, Shijie Cao, Hayden Kwok-Hay So, Ting Cao, Fan Yang, Mao Yang | | Github Paper |

| Basis Sharing: Cross-Layer Parameter Sharing for Large Language Model Compression <br> Jingcun Wang, Yu-Guang Chen, Ing-Chao Lin, Bing Li, Grace Li Zhang | | Github Paper |

| Rodimus*: Breaking the Accuracy-Efficiency Trade-Off with Efficient Attentions <br> Zhihao He, Hang Yu, Zi Gong, Shizhan Liu, Jianguo Li, Weiyao Lin | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Model Tells You What to Discard: Adaptive KV Cache Compression for LLMs <br> Suyu Ge, Yunan Zhang, Liyuan Liu, Minjia Zhang, Jiawei Han, Jianfeng Gao | | Paper |

| ClusterKV: Manipulating LLM KV Cache in Semantic Space for Recallable Compression <br> Guangda Liu, Chengwei Li, Jieru Zhao, Chenqi Zhang, Minyi Guo | | Paper |

| Unifying KV Cache Compression for Large Language Models with LeanKV <br> Yanqi Zhang, Yuwei Hu, Runyuan Zhao, John C.S. Lui, Haibo Chen | | Paper |

| Compressing KV Cache for Long-Context LLM Inference with Inter-Layer Attention Similarity <br> Da Ma, Lu Chen, Situo Zhang, Yuxun Miao, Su Zhu, Zhi Chen, Hongshen Xu, Hanqi Li, Shuai Fan, Lei Pan, Kai Yu | | Paper |

| MiniKV: Pushing the Limits of LLM Inference via 2-Bit Layer-Discriminative KV Cache <br> Akshat Sharma, Hangliang Ding, Jianping Li, Neel Dani, Minjia Zhang | | Paper |

| TokenSelect: Efficient Long-Context Inference and Length Extrapolation for LLMs via Dynamic Token-Level KV Cache Selection <br> Wei Wu, Zhuoshi Pan, Chao Wang, Liyi Chen, Yunchu Bai, Kun Fu, Zheng Wang, Hui Xiong | | Paper |

| Not All Heads Matter: A Head-Level KV Cache Compression Method with Integrated Retrieval and Reasoning <br> Yu Fu, Zefan Cai, Abedelkadir Asi, Wayne Xiong, Yue Dong, Wen Xiao | | Github Paper |

| BUZZ: Beehive-structured Sparse KV Cache with Segmented Heavy Hitters for Efficient LLM Inference <br> Junqi Zhao, Zhijin Fang, Shu Li, Shaohui Yang, Shichao He | | Github Paper |

| A Systematic Study of Cross-Layer KV Sharing for Efficient LLM Inference <br> You Wu, Haoyi Wu, Kewei Tu | | Github Paper |

| Lossless KV Cache Compression to 2% <br> Zhen Yang, J.N. Han, Kan Wu, Ruobing Xie, An Wang, Xingwu Sun, Zhanhui Kang | | Paper |

| MatryoshkaKV: Adaptive KV Compression via Trainable Orthogonal Projection <br> Bokai Lin, Zihao Zeng, Zipeng Xiao, Siqi Kou, Tianqi Hou, Xiaofeng Gao, Hao Zhang, Zhijie Deng | | Paper |

| Residual Vector Quantization for KV Cache Compression in Large Language Model <br> Ankur Kumar | | Github Paper |

| KVSharer: Efficient Inference via Layer-Wise Dissimilar KV Cache Sharing <br> Yifei Yang, Zouying Cao, Qiguang Chen, Libo Qin, Dongjie Yang, Hai Zhao, Zhi Chen | | Github Paper |

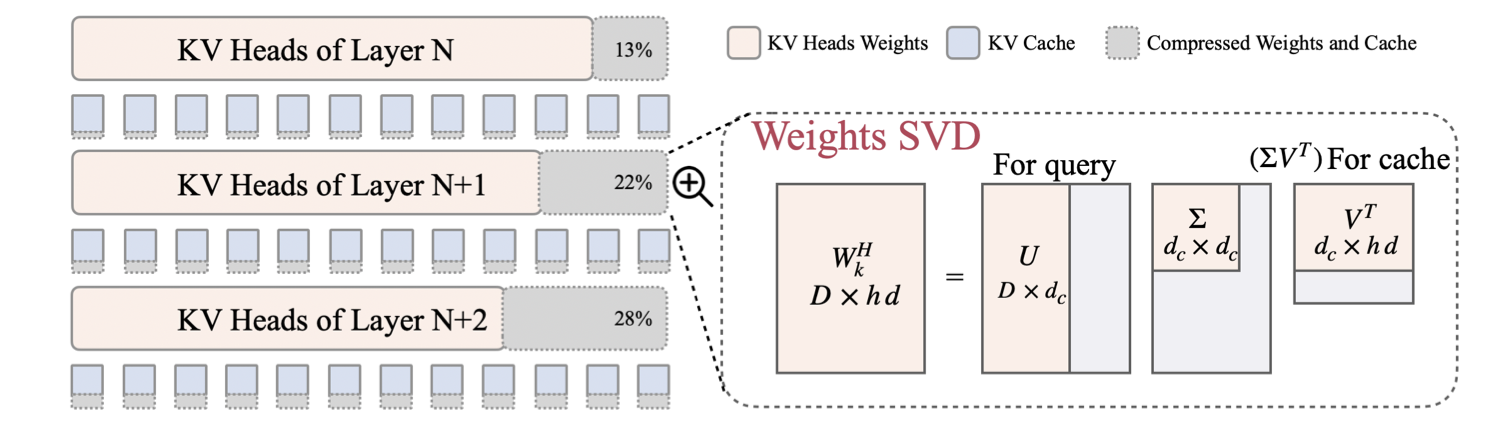

| LoRC: Low-Rank Compression for LLMs KV Cache with a Progressive Compression Strategy <br> Rongzhi Zhang, Kuang Wang, Liyuan Liu, Shuohang Wang, Hao Cheng, Chao Zhang, Yelong Shen | | Paper |

| SwiftKV: Fast Prefill-Optimized Inference with Knowledge-Preserving Model Transformation <br> Aurick Qiao, Zhewei Yao, Samyam Rajbhandari, Yuxiong He | | Paper |

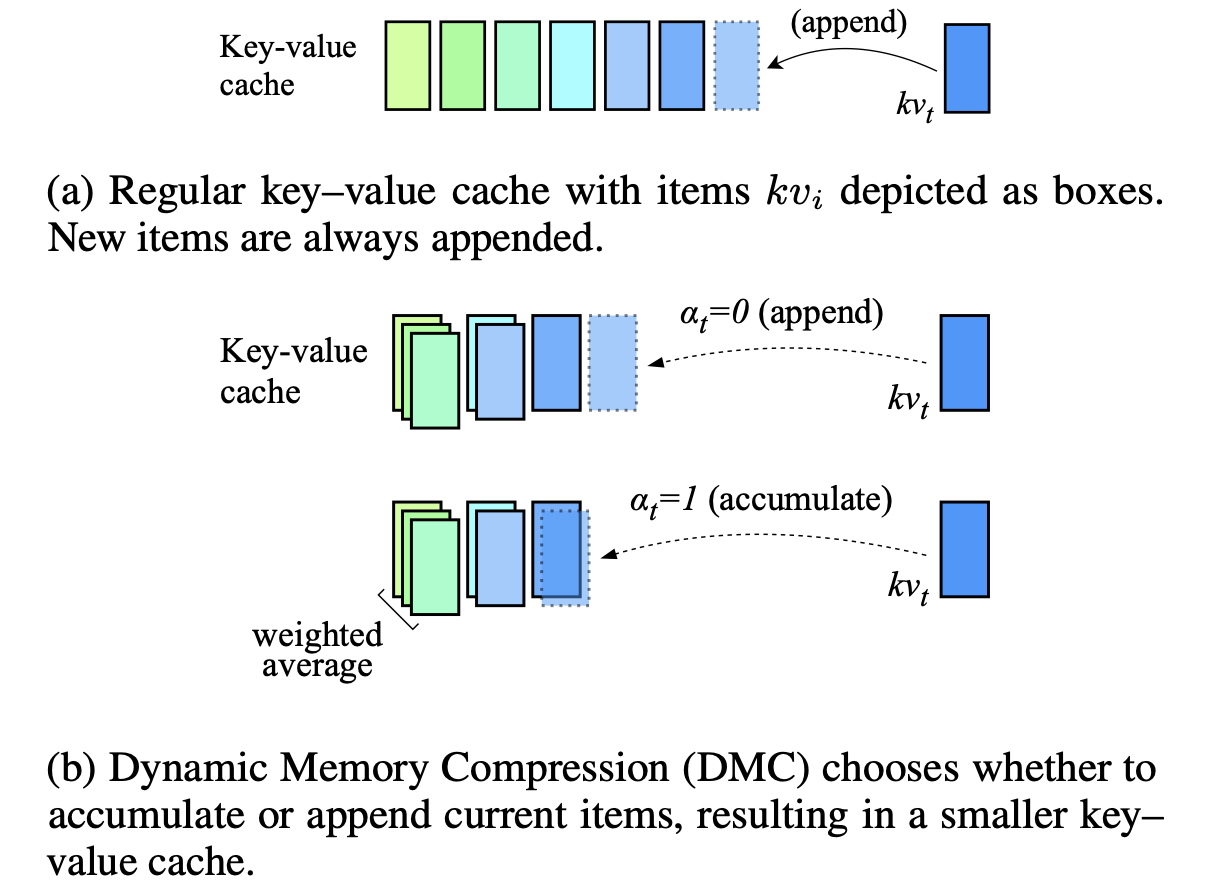

| Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference <br> Piotr Nawrot, Adrian Łańcucki, Marcin Chochowski, David Tarjan, Edoardo M. Ponti | | Paper |

| KV-Compress: Paged KV-Cache Compression with Variable Compression Rates per Attention Head <br> Isaac Rehg | | Paper |

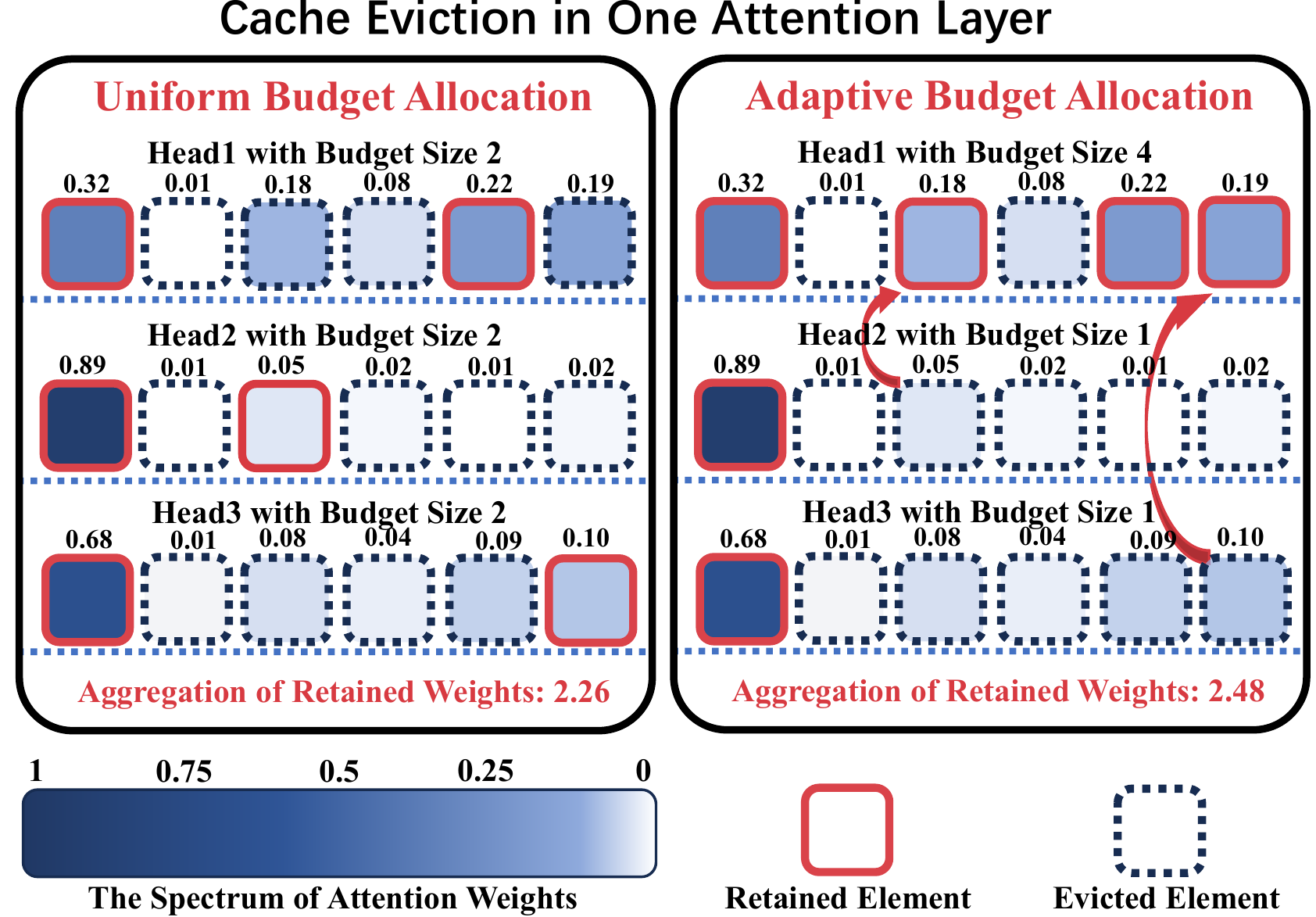

| Ada-KV: Optimizing KV Cache Eviction by Adaptive Budget Allocation for Efficient LLM Inference <br> Yuan Feng, Junlin Lv, Yukun Cao, Xike Xie, S. Kevin Zhou | | Github Paper |

| AlignedKV: Reducing Memory Access of KV-Cache with Precision-Aligned Quantization <br> Yifan Tan, Haoze Wang, Chao Yan, Yangdong Deng | | Github Paper |

| CSKV: Training-Efficient Channel Shrinking for KV Cache in Long-Context Scenarios <br> Luning Wang, Shiyao Li, Xuefei Ning, Zhihang Yuan, Shengen Yan, Guohao Dai, Yu Wang | | Paper |

| A First Look At Efficient And Secure On-Device LLM Inference Against KV Leakage <br> Huan Yang, Deyu Zhang, Yudong Zhao, Yuanchun Li, Yunxin Liu | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

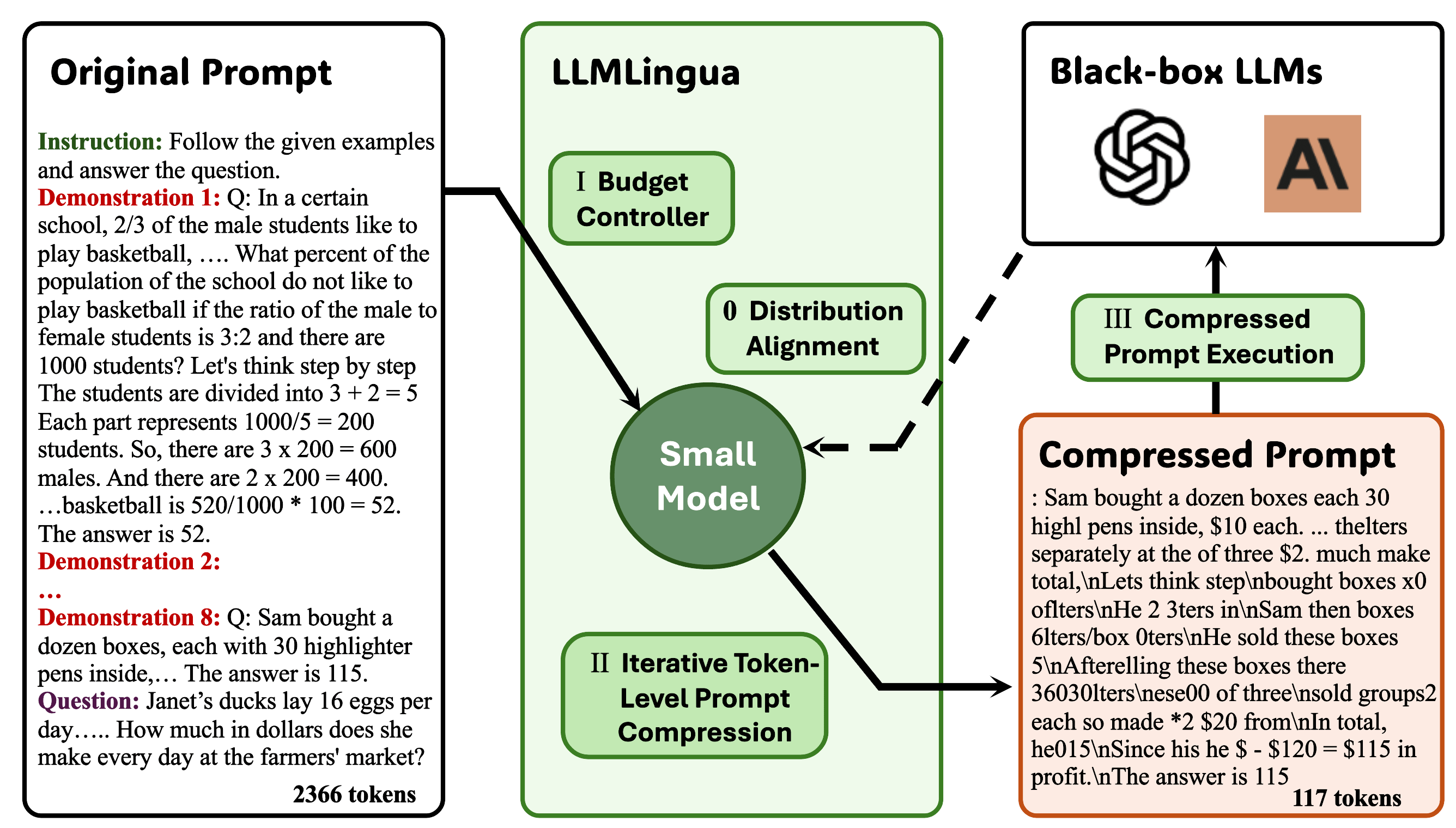

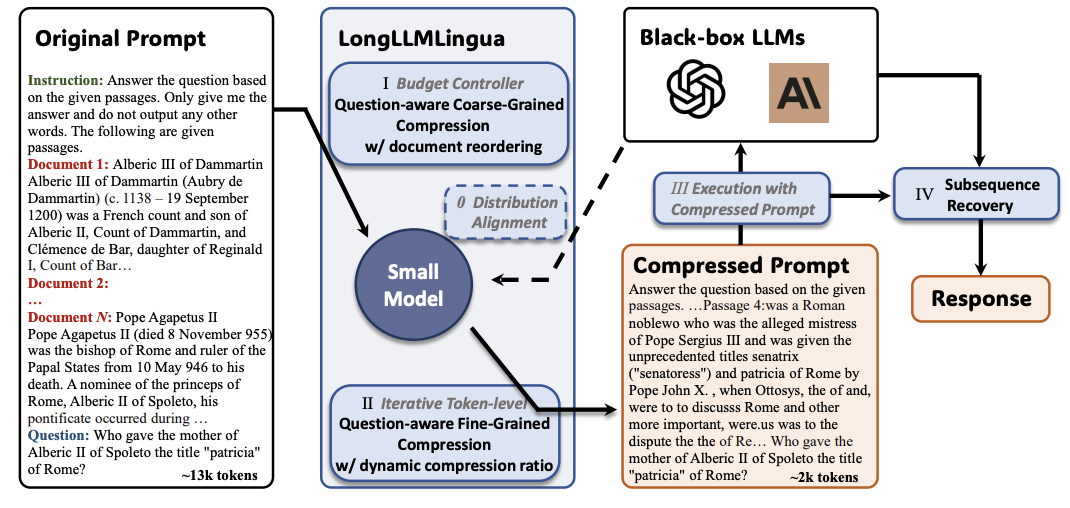

| LLMLingua: Compressing Prompts for Accelerated Inference of Large Language Models <br> Huiqiang Jiang, Qianhui Wu, Chin-Yew Lin, Yuqing Yang, Lili Qiu | | Github Paper |

| LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression <br> Huiqiang Jiang, Qianhui Wu, Xufang Luo, Dongsheng Li, Chin-Yew Lin, Yuqing Yang, Lili Qiu | | Github Paper |

| JPPO: Joint Power and Prompt Optimization for Accelerated Large Language Model Services <br> Feiran You, Hongyang Du, Kaibin Huang, Abbas Jamalipour | | Paper |

| Generative Context Distillation <br> Haebin Shin, Lei Ji, Yeyun Gong, Sungdong Kim, Eunbi Choi, Minjoon Seo | | Github Paper |

| MultiTok: Variable-Length Tokenization for Efficient LLMs Adapted from LZW Compression <br> Noel Elias, Homa Esfahanizadeh, Kaan Kale, Sriram Vishwanath, Muriel Medard | | Github Paper |

| Selection-p: Self-Supervised Task-Agnostic Prompt Compression for Faithfulness and Transferability <br> Tsz Ting Chung, Leyang Cui, Lemao Liu, Xinting Huang, Shuming Shi, Dit-Yan Yeung | | Paper |

| From Reading to Compressing: Exploring the Multi-document Reader for Prompt Compression <br> Eunseong Choi, Sunkyung Lee, Minjin Choi, June Park, Jongwuk Lee | | Paper |

| Perception Compressor: A Training-Free Prompt Compression Method in Long Context Scenarios <br> Jiwei Tang, Jin Xu, Tingwei Lu, Hai Lin, Yiming Zhao, Hai-Tao Zheng | | Paper |

| FineZip: Pushing the Limits of Large Language Models for Practical Lossless Text Compression <br> Fazal Mittu, Yihuan Bu, Akshat Gupta, Ashok Devireddy, Alp Eren Ozdarendeli, Anant Singh, Gopala Anumanchipalli | | Github Paper |

| Parse Trees Guided LLM Prompt Compression <br> Wenhao Mao, Chengbin Hou, Tianyu Zhang, Xinyu Lin, Ke Tang, Hairong Lv | | Github Paper |

| AlphaZip: Neural Network-Enhanced Lossless Text Compression <br> Swathi Shree Narashiman, Nitin Chandrachoodan | | Github Paper |

| TACO-RL: Task Aware Prompt Compression Optimization with Reinforcement Learning <br> Shivam Shandilya, Menglin Xia, Supriyo Ghosh, Huiqiang Jiang, Jue Zhang, Qianhui Wu, Victor Rühle | | Paper |

| Efficient LLM Context Distillation <br> Rajesh Upadhayaya, Zachary Smith, Chritopher Kottmyer, Manish Raj Osti | | Paper |

| Enhancing and Accelerating Large Language Models via Instruction-Aware Contextual Compression <br> Haowen Hou, Fei Ma, Binwen Bai, Xinxin Zhu, Fei Yu | | Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Natural GaLore: Accelerating GaLore for Memory-Efficient LLM Training and Fine-tuning <br> Arijit Das | | Github Paper |

| CompAct: Compressed Activations for Memory-Efficient LLM Training <br> Yara Shamshoum, Nitzan Hodos, Yuval Sieradzki, Assaf Schuster | | Paper |

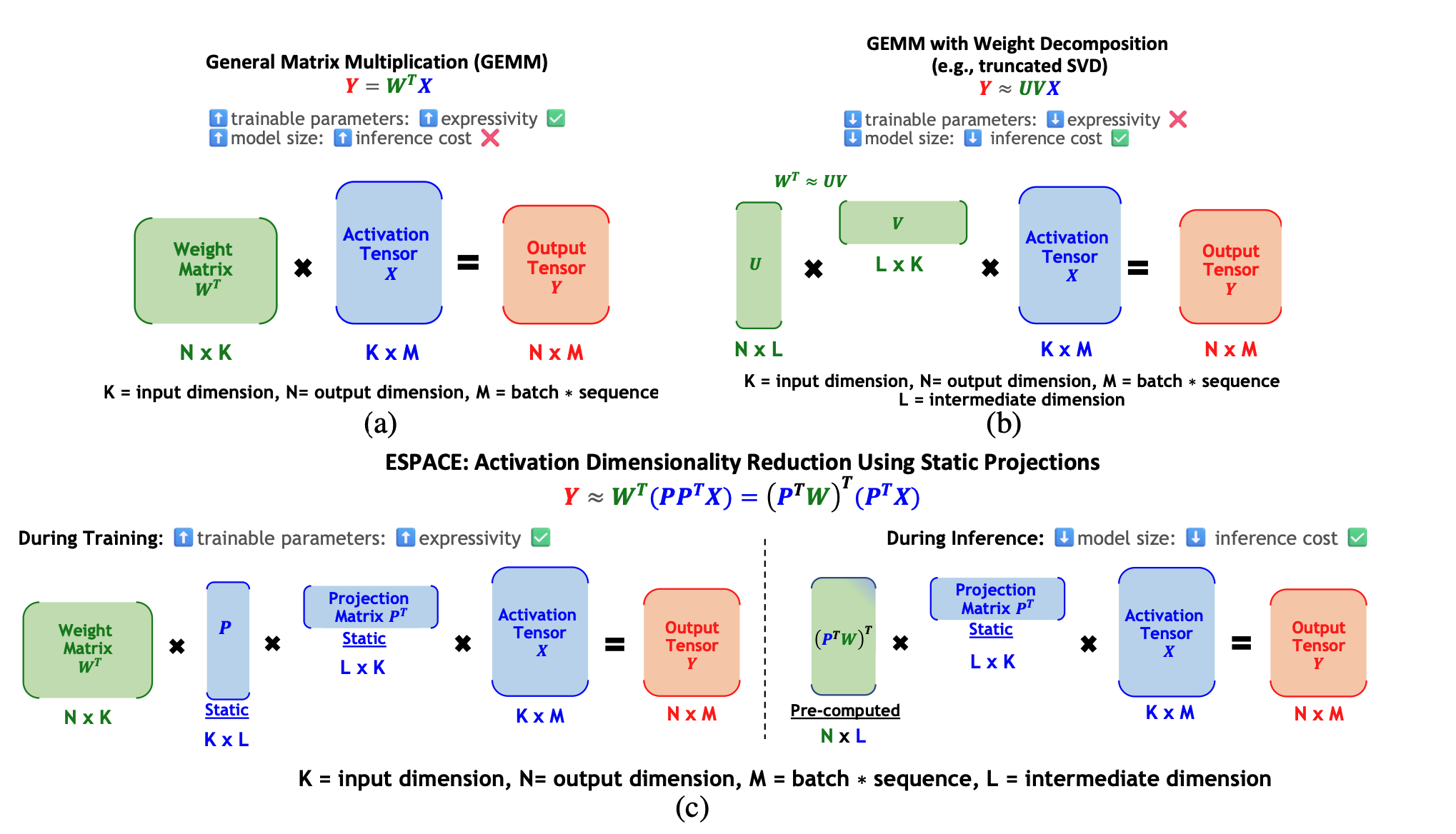

| ESPACE: Dimensionality Reduction of Activations for Model Compression <br> Charbel Sakr, Brucek Khailany | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| FastSwitch: Optimizing Context Switching Efficiency in Fairness-aware Large Language Model Serving <br> Ao Shen, Zhiyao Li, Mingyu Gao | | Paper |

| CE-CoLLM: Efficient and Adaptive Large Language Models Through Cloud-Edge Collaboration <br> Hongpeng Jin, Yanzhao Wu | | Paper |

| Ripple: Accelerating LLM Inference on Smartphones with Correlation-Aware Neuron Management <br> Tuowei Wang, Ruwen Fan, Minxing Huang, Zixu Hao, Kun Li, Ting Cao, Youyou Lu, Yaoxue Zhang, Ju Ren | | Paper |

| ALISE: Accelerating Large Language Model Serving with Speculative Scheduling <br> Youpeng Zhao, Jun Wang | | Paper |

| EPIC: Efficient Position-Independent Context Caching for Serving Large Language Models <br> Junhao Hu, Wenrui Huang, Haoyi Wang, Weidong Wang, Tiancheng Hu, Qin Zhang, Hao Feng, Xusheng Chen, Yizhou Shan, Tao Xie | | Paper |

| SDP4Bit: Toward 4-bit Communication Quantization in Sharded Data Parallelism for LLM Training <br> Jinda Jia, Cong Xie, Hanlin Lu, Daoce Wang, Hao Feng, Chengming Zhang, Baixi Sun, Haibin Lin, Zhi Zhang, Xin Liu, Dingwen Tao | | Paper |

| FastAttention: Extend FlashAttention2 to NPUs and Low-resource GPUs <br> Haoran Lin, Xianzhi Yu, Kang Zhao, Lu Hou, Zongyuan Zhan et al. | | Paper |

| POD-Attention: Unlocking Full Prefill-Decode Overlap for Faster LLM Inference <br> Aditya K Kamath, Ramya Prabhu, Jayashree Mohan, Simon Peter, Ramachandran Ramjee, Ashish Panwar | | Paper |

| TPI-LLM: Serving 70B-scale LLMs Efficiently on Low-resource Edge Devices <br> Zonghang Li, Wenjiao Feng, Mohsen Guizani, Hongfang Yu | | Github Paper |

| Efficient Arbitrary Precision Acceleration for Large Language Models on GPU Tensor Cores <br> Shaobo Ma, Chao Fang, Haikuo Shao, Zhongfeng Wang | | Paper |

| OPAL: Outlier-Preserved Microscaling Quantization Accelerator for Generative Large Language Models <br> Jahyun Koo, Dahoon Park, Sangwoo Jung, Jaeha Kung | | Paper |

| Accelerating Large Language Model Training with Hybrid GPU-based Compression <br> Lang Xu, Quentin Anthony, Qinghua Zhou, Nawras Alnaasan, Radha R. Gulhane, Aamir Shafi, Hari Subramoni, Dhabaleswar K. Panda | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| HELENE: Hessian Layer-wise Clipping and Gradient Annealing for Accelerating Fine-tuning LLM with Zeroth-order Optimization <br> Huaqin Zhao, Jiaxi Li, Yi Pan, Shizhe Liang, Xiaofeng Yang, Wei Liu, Xiang Li, Fei Dou, Tianming Liu, Jin Lu | | Paper |

| Robust and Efficient Fine-tuning of LLMs with Bayesian Reparameterization of Low-Rank Adaptation <br> Ayan Sengupta, Vaibhav Seth, Arinjay Pathak, Natraj Raman, Sriram Gopalakrishnan, Tanmoy Chakraborty | | Github Paper |

| MiLoRA: Efficient Mixture of Low-Rank Adaptation for Large Language Models Fine-tuning <br> Jingfan Zhang, Yi Zhao, Dan Chen, Xing Tian, Huanran Zheng, Wei Zhu | | Paper |

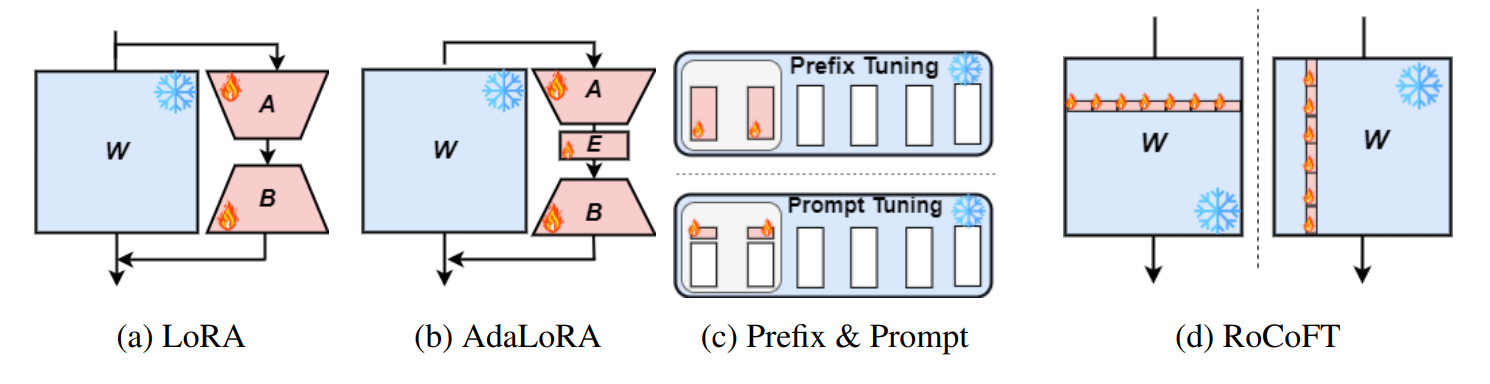

| RoCoFT: Efficient Finetuning of Large Language Models with Row-Column Updates <br> Md Kowsher, Tara Esmaeilbeig, Chun-Nam Yu, Mojtaba Soltanalian, Niloofar Yousefi | | Github Paper |

| Layer-wise Importance Matters: Less Memory for Better Performance in Parameter-efficient Fine-tuning of Large Language Models <br> Kai Yao, Penlei Gao, Lichun Li, Yuan Zhao, Xiaofeng Wang, Wei Wang, Jianke Zhu | | Github Paper |

| Parameter-Efficient Fine-Tuning of Large Language Models using Semantic Knowledge Tuning <br> Nusrat Jahan Prottasha, Asif Mahmud, Md. Shohanur Islam Sobuj, Prakash Bhat, Md Kowsher, Niloofar Yousefi, Ozlem Ozmen Garibay | | Paper |

| QEFT: Quantization for Efficient Fine-Tuning of LLMs <br> Changhun Lee, Jun-gyu Jin, Younghyun Cho, Eunhyeok Park | | Github Paper |

| BIPEFT: Budget-Guided Iterative Search for Parameter Efficient Fine-Tuning of Large Pretrained Language Models <br> Aofei Chang, Jiaqi Wang, Han Liu, Parminder Bhatia, Cao Xiao, Ting Wang, Fenglong Ma | | Github Paper |

| SparseGrad: A Selective Method for Efficient Fine-tuning of MLP Layers <br> Viktoriia Chekalina, Anna Rudenko, Gleb Mezentsev, Alexander Mikhalev, Alexander Panchenko, Ivan Oseledets | | Github Paper |

| SpaLLM: Unified Compressive Adaptation of Large Language Models with Sketching <br> Tianyi Zhang, Junda Su, Oscar Wu, Zhaozhuo Xu, Anshumali Shrivastava | | Paper |

| Bone: Block Affine Transformation as Parameter Efficient Fine-tuning Methods for Large Language Models <br> Jiale Kang | | Github Paper |

| Enabling Resource-Efficient On-Device Fine-Tuning of LLMs Using Only Inference Engines <br> Lei Gao, Amir Ziashahabi, Yue Niu, Salman Avestimehr, Murali Annavaram | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| AutoMixQ: Self-Adjusting Quantization for High Performance Memory-Efficient Fine-Tuning <br> Changhai Zhou, Shiyang Zhang, Yuhua Zhou, Zekai Liu, Shichao Weng | | Paper |

| Scalable Efficient Training of Large Language Models with Low-dimensional Projected Attention <br> Xingtai Lv, Ning Ding, Kaiyan Zhang, Ermo Hua, Ganqu Cui, Bowen Zhou | | Github Paper |

| Less is More: Extreme Gradient Boost Rank-1 Adaption for Efficient Finetuning of LLMs <br> Yifei Zhang, Hao Zhu, Aiwei Liu, Han Yu, Piotr Koniusz, Irwin King | | Paper |

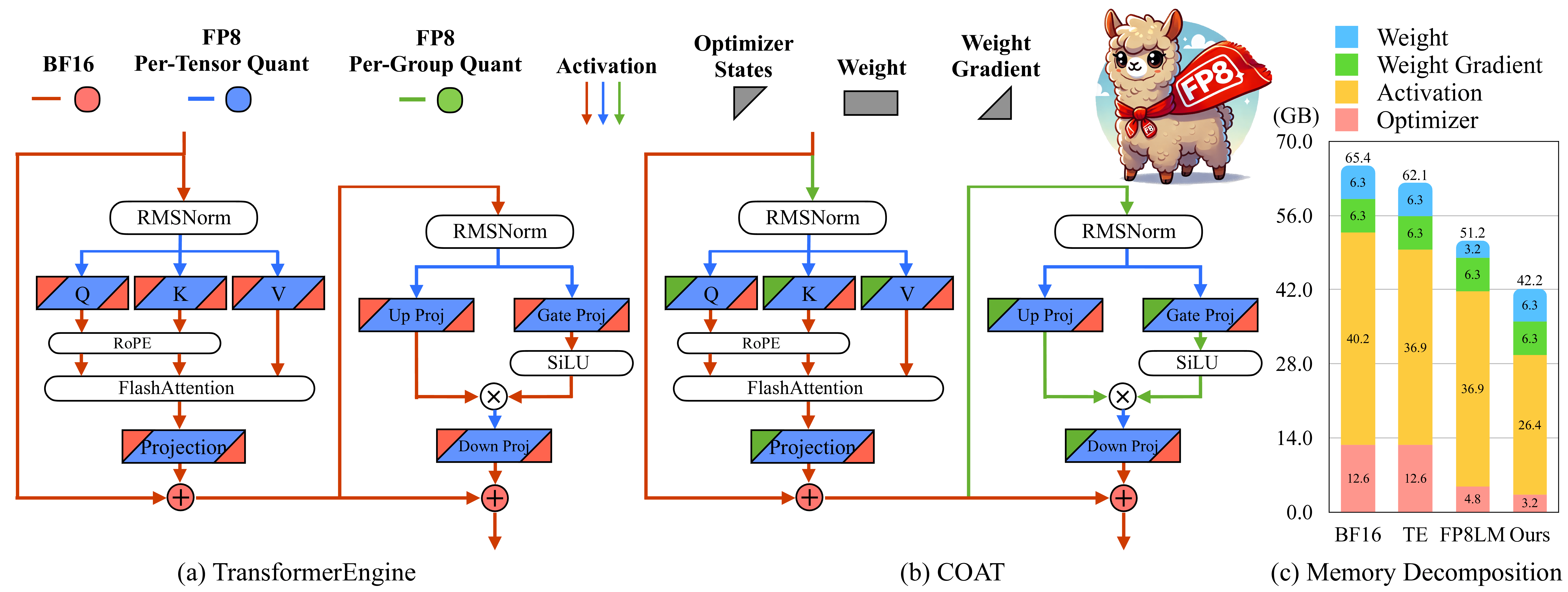

| COAT: Compressing Optimizer states and Activation for Memory-Efficient FP8 Training <br> Haocheng Xi, Han Cai, Ligeng Zhu, Yao Lu, Kurt Keutzer, Jianfei Chen, Song Han | | Github Paper |

| BitPipe: Bidirectional Interleaved Pipeline Parallelism for Accelerating Large Models Training <br> Houming Wu, Ling Chen, Wenjie Yu | | Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Closer Look at Efficient Inference Methods: A Survey of Speculative Decoding <br> Hyun Ryu, Eric Kim | | Paper |

| LLM-Inference-Bench: Inference Benchmarking of Large Language Models on AI Accelerators <br> Krishna Teja Chitty-Venkata, Siddhisanket Raskar, Bharat Kale, Farah Ferdaus et al. | | Github Paper |

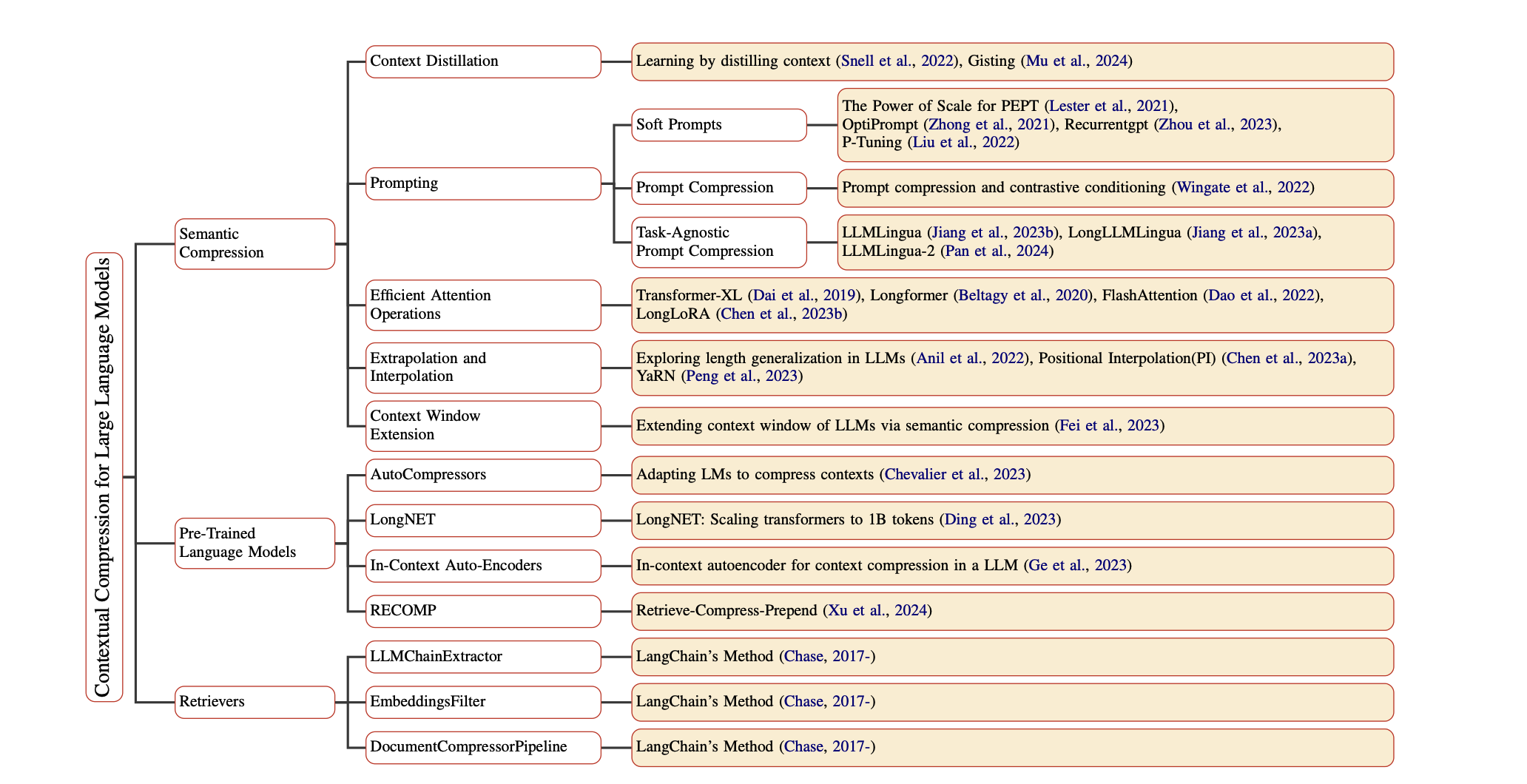

| Prompt Compression for Large Language Models: A Survey <br> Zongqian Li, Yinhong Liu, Yixuan Su, Nigel Collier | | Github Paper |

| Large Language Model Inference Acceleration: A Comprehensive Hardware Perspective <br> Jinhao Li, Jiaming Xu, Shan Huang, Yonghua Chen, Wen Li, Jun Liu, Yaoxiu Lian, Jiayi Pan, Li Ding, Hao Zhou, Guohao Dai | | Paper |

| A Survey of Low-bit Large Language Models: Basics, Systems, and Algorithms <br> Ruihao Gong, Yifu Ding, Zining Wang, Chengtao Lv, Xingyu Zheng, Jinyang Du, Haotong Qin, Jinyang Guo, Michele Magno, Xianglong Liu | | Paper |

| Contextual Compression in Retrieval-Augmented Generation for Large Language Models: A Survey <br> Sourav Verma | | Github Paper |

| Art and Science of Quantizing Large-Scale Models: A Comprehensive Overview <br> Yanshu Wang, Tong Yang, Xiyan Liang, Guoan Wang, Hanning Lu, Xu Zhe, Yaoming Li, Li Weitao | | Paper |

| Hardware Acceleration of LLMs: A Comprehensive Survey and Comparison <br> Nikoletta Koilia, Christoforos Kachris | | Paper |

| A Survey on Symbolic Knowledge Distillation of Large Language Models <br> Kamal Acharya, Alvaro Velasquez, Houbing Herbert Song | | Paper |