Awesome Efficient LLM

1.0.0

A curated list for Efficient Large Language Models

If you'd like to include your paper, or need to update any details such as conference information or code URLs, please feel free to submit a pull request. You can generate the required markdown format for each paper by filling in the information in `generate_item.py` and running `python generate_item.py`. We warmly appreciate your contributions to this list. Alternatively, you can email me the links to your paper and code, and I will add your paper to the list at my earliest convenience.
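The contents of `generate_item.py` are not reproduced in this list, so the following is only a rough, hypothetical sketch of the kind of table row an entry ends up as. It assumes the three-column layout used by the tables (title and authors, an optional introduction image, links) and uses placeholder URLs; `format_row` is an illustrative name, not a function from the repository.

```python
from typing import Dict, List


# Hypothetical helper for illustration only; this is NOT the repository's
# generate_item.py. It mimics the three-column row layout used in this list.
def format_row(title: str, authors: List[str], links: Dict[str, str],
               image: str = "") -> str:
    """Build one markdown row: Title & Authors | Introduction | Links."""
    # Column 1: title, an HTML line break, then comma-separated authors.
    title_cell = f"{title} <br> {', '.join(authors)}"
    # Column 2: optional figure; the cell stays empty when no image is given.
    intro_cell = f"![image]({image})" if image else ""
    # Column 3: each link rendered as [label](url), in insertion order.
    links_cell = " ".join(f"[{label}]({url})" for label, url in links.items())
    return f"| {title_cell} | {intro_cell} | {links_cell} |"


if __name__ == "__main__":
    # Placeholder URLs; replace them with the real paper and code links.
    print(format_row(
        "SparseGPT: Massive Language Models Can Be Accurately Pruned in One-Shot",
        ["Elias Frantar", "Dan Alistarh"],
        {"Github": "https://example.com/code", "Paper": "https://example.com/paper"},
    ))
```

An entry without a figure simply leaves the Introduction cell empty, which matches the many "Paper only" rows in the tables.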

For each topic, we have curated a list of recommended papers that have garnered a lot of GitHub stars or citations.

| Title & Authors | Introduction | Links |

|---|---|---|

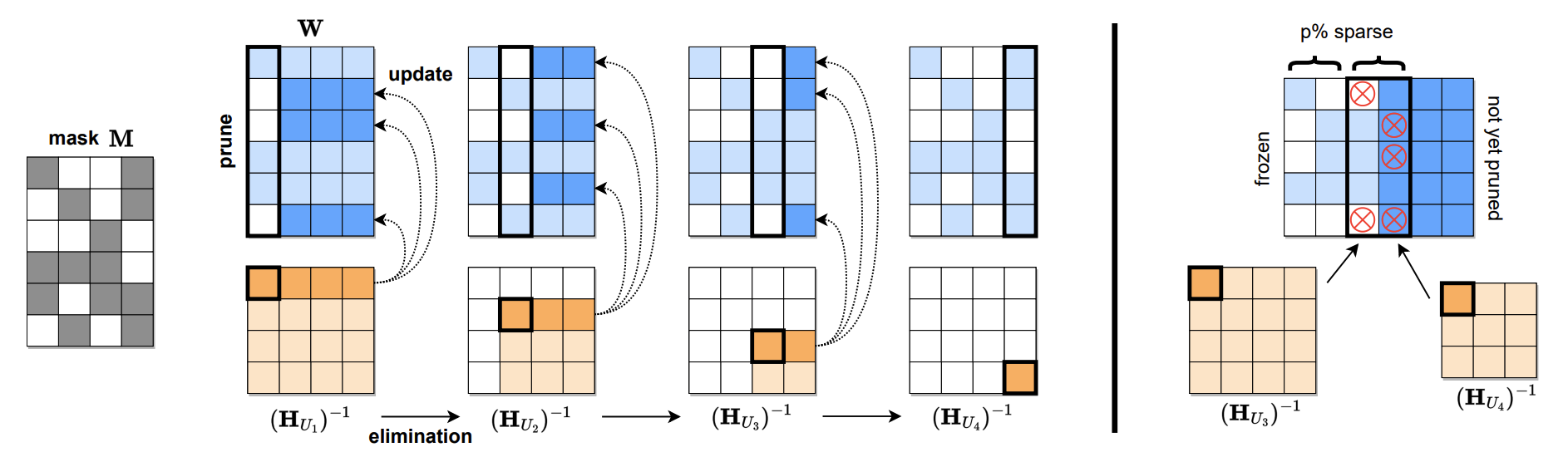

| SparseGPT: Massive Language Models Can Be Accurately Pruned in One-Shot Elias Frantar, Dan Alistarh |

|

Github paper |

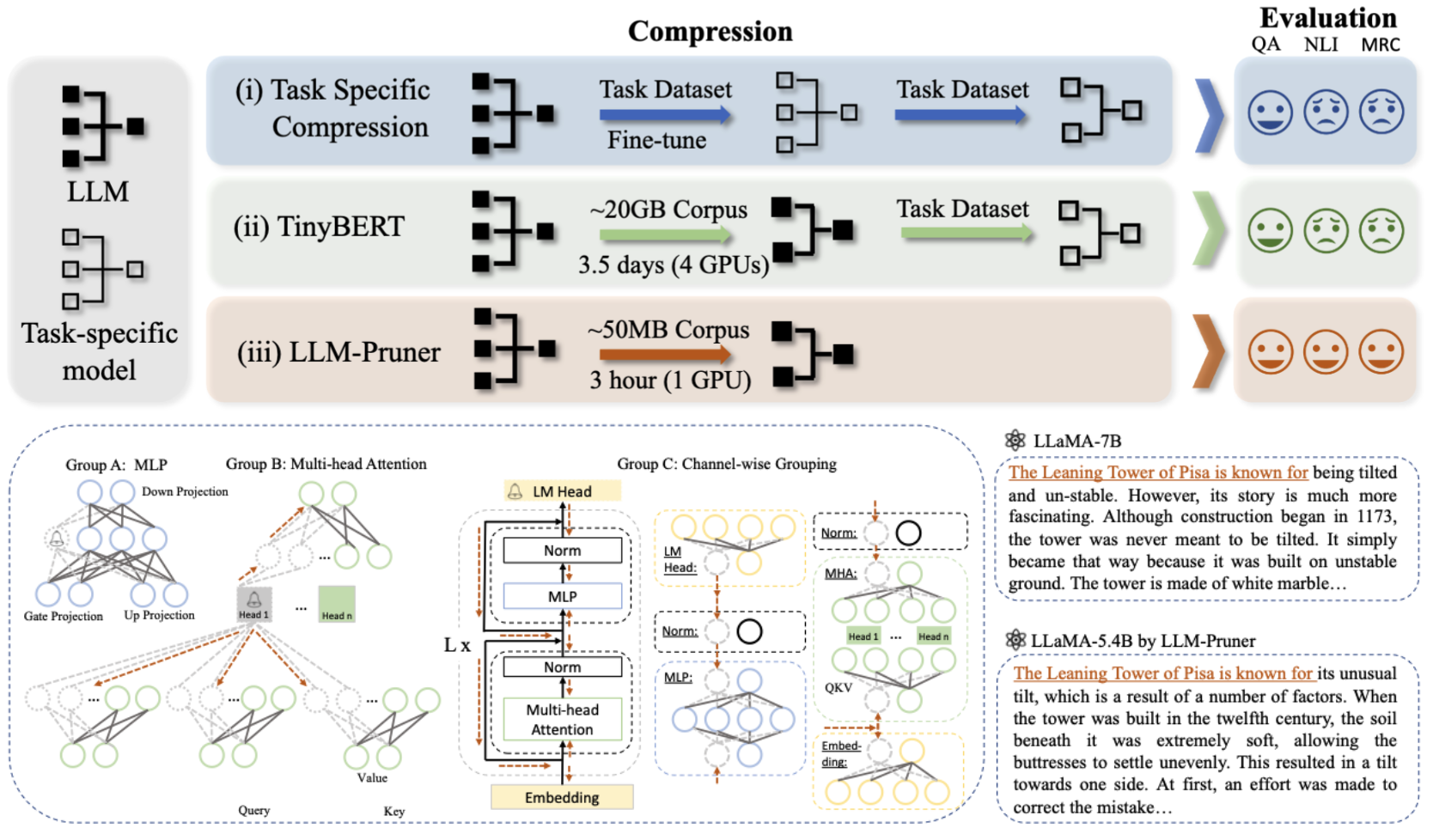

| LLM-Pruner: On the Structural Pruning of Large Language Models Xinyin Ma, Gongfan Fang, Xinchao Wang |

|

Github paper |

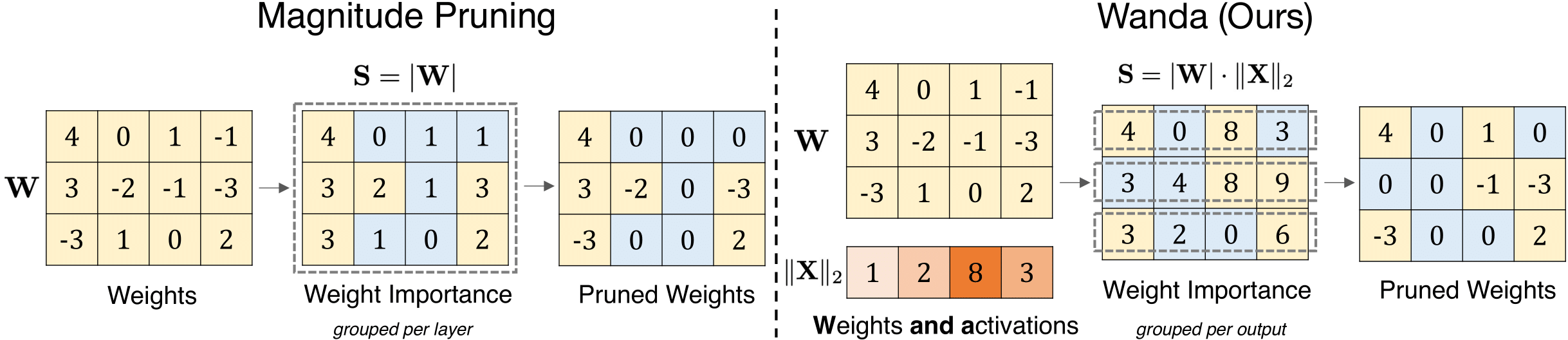

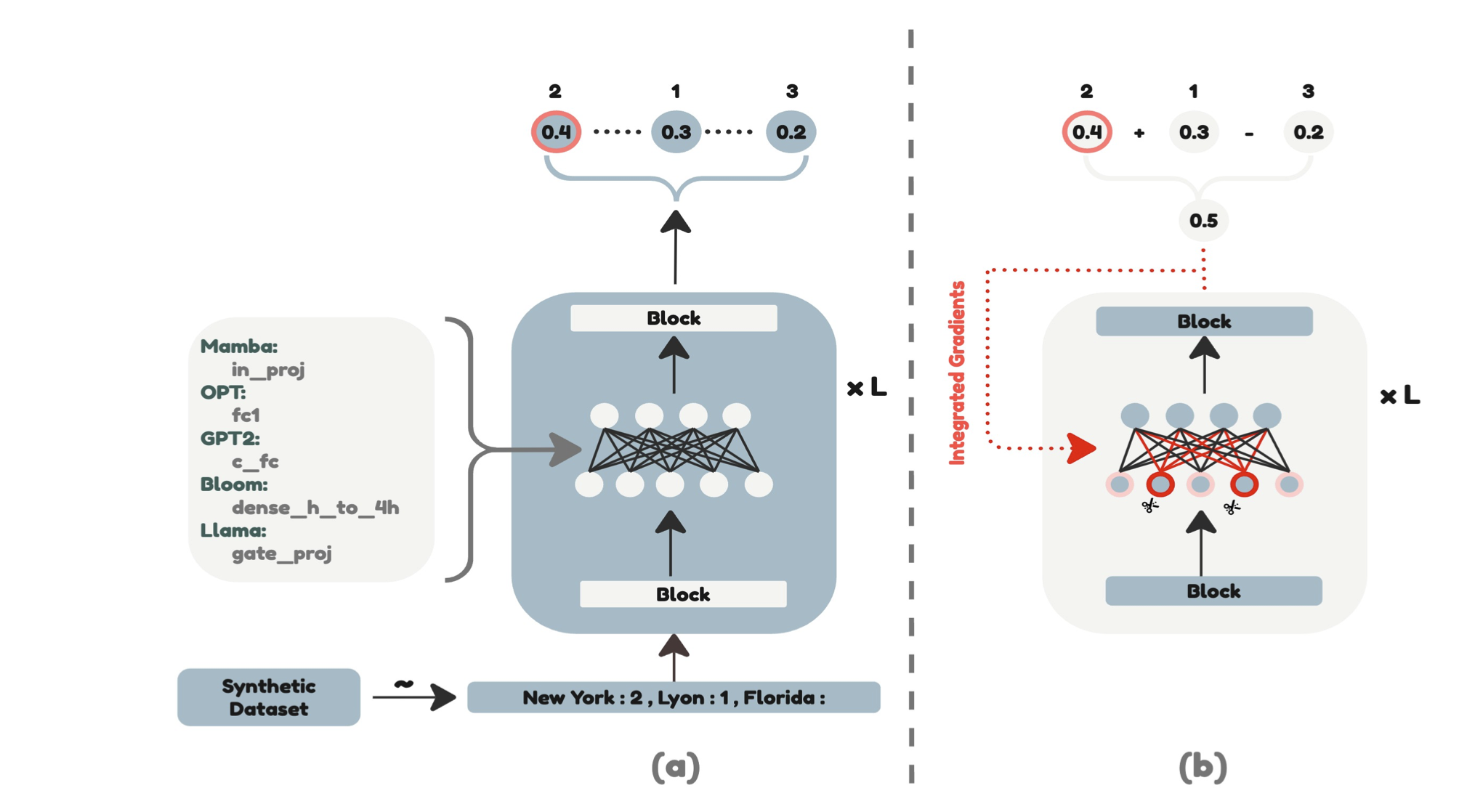

| A Simple and Effective Pruning Approach for Large Language Models Mingjie Sun, Zhuang Liu, Anna Bair, J. Zico Kolter |

|

Github Paper |

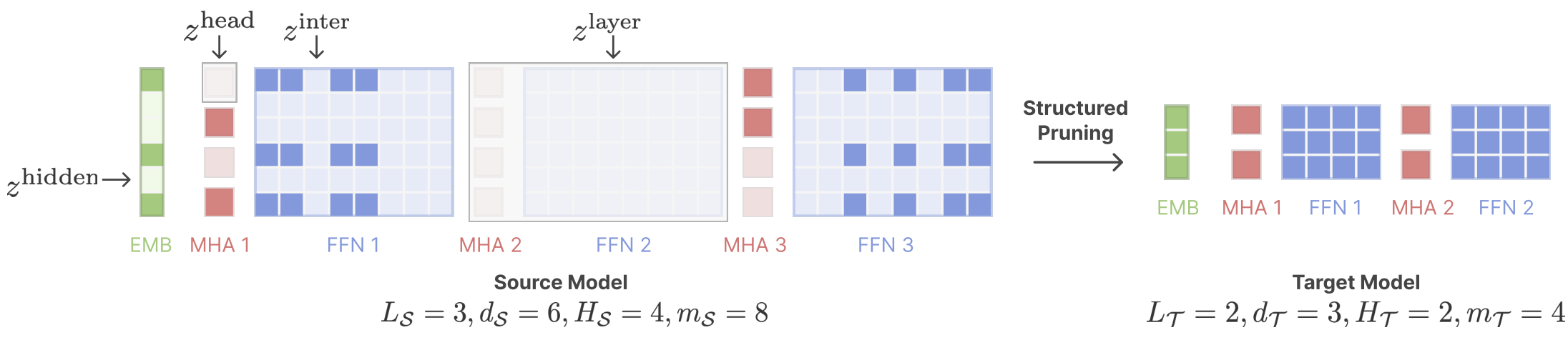

| Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning Mengzhou Xia, Tianyu Gao, Zhiyuan Zeng, Danqi Chen |

|

Github Paper |

| Efficient LLM Inference using Dynamic Input Pruning and Cache-Aware Masking Marco Federici, Davide Belli, Mart van Baalen, Amir Jalalirad, Andrii Skliar, Bence Major, Markus Nagel, Paul Whatmough |

Paper | |

| Puzzle: Distillation-Based NAS for Inference-Optimized LLMs Akhiad Bercovich, Tomer Ronen, Talor Abramovich, Nir Ailon, Nave Assaf, Mohammad Dabbah et al |

Paper | |

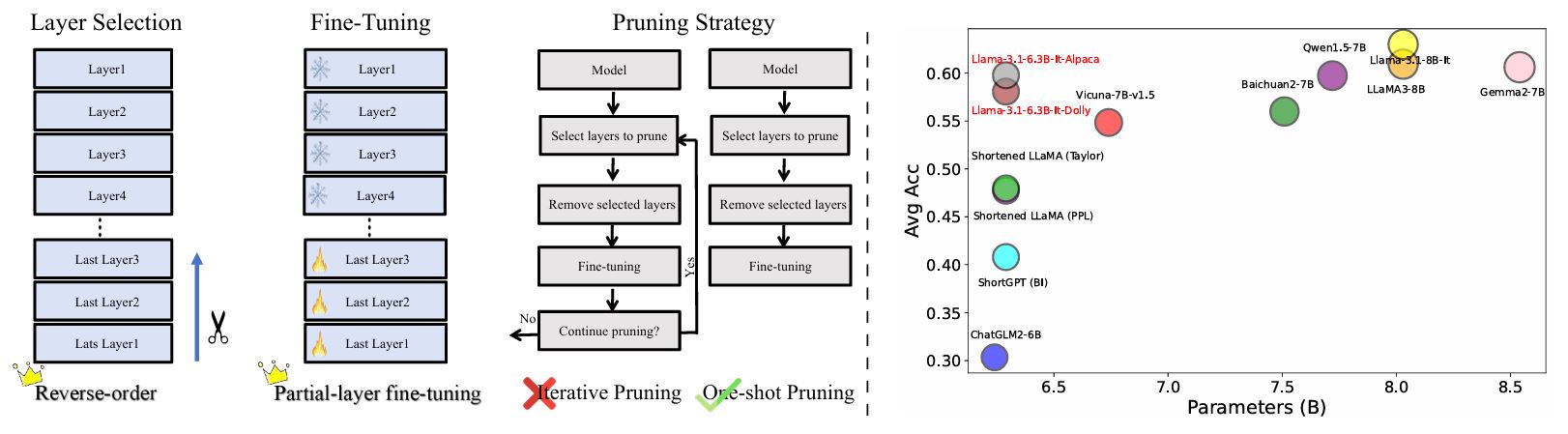

Reassessing Layer Pruning in LLMs: New Insights and Methods Yao Lu, Hao Cheng, Yujie Fang, Zeyu Wang, Jiaheng Wei, Dongwei Xu, Qi Xuan, Xiaoniu Yang, Zhaowei Zhu |

|

Github Paper |

| Layer Importance and Hallucination Analysis in Large Language Models via Enhanced Activation Variance-Sparsity Zichen Song, Sitan Huang, Yuxin Wu, Zhongfeng Kang |

Paper | |

AmoebaLLM: Constructing Any-Shape Large Language Models for Efficient and Instant Deployment Yonggan Fu, Zhongzhi Yu, Junwei Li, Jiayi Qian, Yongan Zhang, Xiangchi Yuan, Dachuan Shi, Roman Yakunin, Yingyan Celine Lin |

Github Paper |

|

| Scaling Law for Post-training after Model Pruning Xiaodong Chen, Yuxuan Hu, Jing Zhang, Xiaokang Zhang, Cuiping Li, Hong Chen |

Paper | |

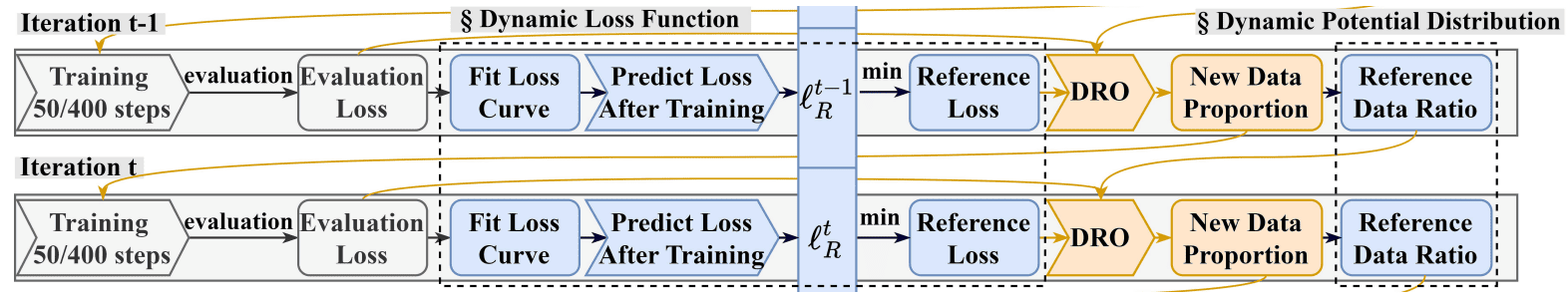

DRPruning: Efficient Large Language Model Pruning through Distributionally Robust Optimization Hexuan Deng, Wenxiang Jiao, Xuebo Liu, Min Zhang, Zhaopeng Tu |

|

Github Paper |

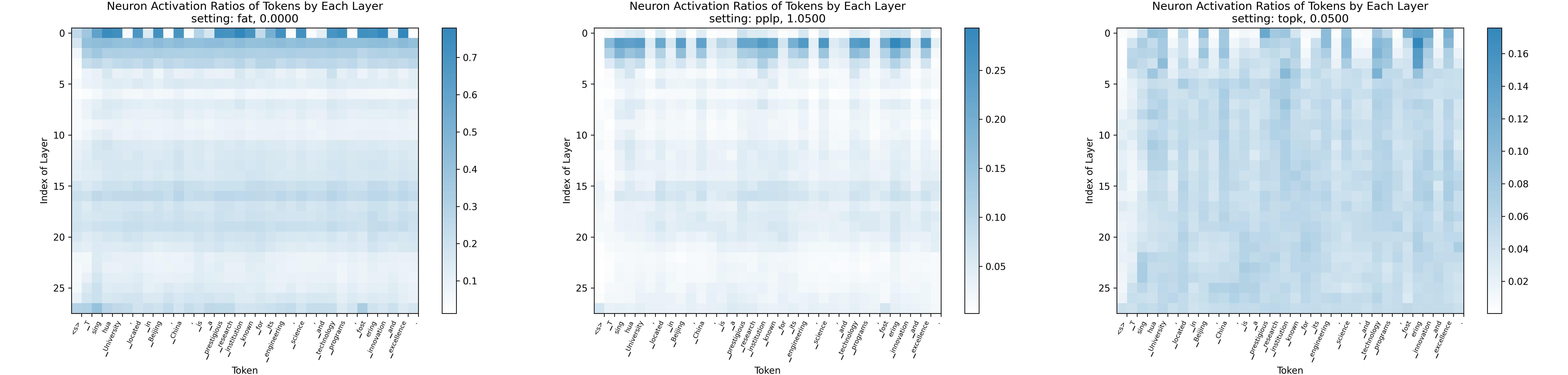

Sparsing Law: Towards Large Language Models with Greater Activation Sparsity Yuqi Luo, Chenyang Song, Xu Han, Yingfa Chen, Chaojun Xiao, Zhiyuan Liu, Maosong Sun |

|

Github Paper |

| AVSS: Layer Importance Evaluation in Large Language Models via Activation Variance-Sparsity Analysis Zichen Song, Yuxin Wu, Sitan Huang, Zhongfeng Kang |

Paper | |

| Tailored-LLaMA: Optimizing Few-Shot Learning in Pruned LLaMA Models with Task-Specific Prompts Danyal Aftab, Steven Davy |

Paper | |

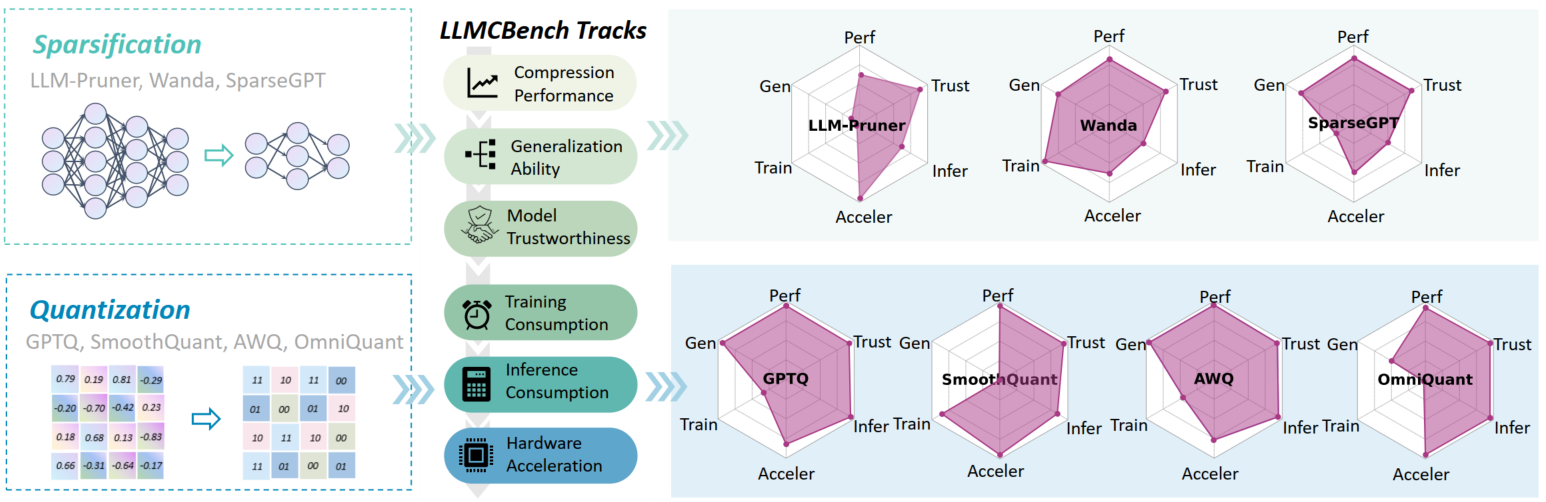

LLMCBench: Benchmarking Large Language Model Compression for Efficient Deployment Ge Yang, Changyi He, Jinyang Guo, Jianyu Wu, Yifu Ding, Aishan Liu, Haotong Qin, Pengliang Ji, Xianglong Liu |

|

Github Paper |

| Beyond 2:4: exploring V:N:M sparsity for efficient transformer inference on GPUs Kang Zhao, Tao Yuan, Han Bao, Zhenfeng Su, Chang Gao, Zhaofeng Sun, Zichen Liang, Liping Jing, Jianfei Chen |

Paper | |

EvoPress: Towards Optimal Dynamic Model Compression via Evolutionary Search Oliver Sieberling, Denis Kuznedelev, Eldar Kurtic, Dan Alistarh |

|

Github Paper |

| FedSpaLLM: Federated Pruning of Large Language Models Guangji Bai, Yijiang Li, Zilinghan Li, Liang Zhao, Kibaek Kim |

Paper | |

Pruning Foundation Models for High Accuracy without Retraining Pu Zhao, Fei Sun, Xuan Shen, Pinrui Yu, Zhenglun Kong, Yanzhi Wang, Xue Lin |

Github Paper |

|

| Self-calibration for Language Model Quantization and Pruning Miles Williams, George Chrysostomou, Nikolaos Aletras |

Paper | |

| Beware of Calibration Data for Pruning Large Language Models Yixin Ji, Yang Xiang, Juntao Li, Qingrong Xia, Ping Li, Xinyu Duan, Zhefeng Wang, Min Zhang |

Paper | |

AlphaPruning: Using Heavy-Tailed Self Regularization Theory for Improved Layer-wise Pruning of Large Language Models Haiquan Lu, Yefan Zhou, Shiwei Liu, Zhangyang Wang, Michael W. Mahoney, Yaoqing Yang |

Github Paper |

|

| Beyond Linear Approximations: A Novel Pruning Approach for Attention Matrix Yingyu Liang, Jiangxuan Long, Zhenmei Shi, Zhao Song, Yufa Zhou |

Paper | |

DISP-LLM: Dimension-Independent Structural Pruning for Large Language Models Shangqian Gao, Chi-Heng Lin, Ting Hua, Tang Zheng, Yilin Shen, Hongxia Jin, Yen-Chang Hsu |

Paper | |

Self-Data Distillation for Recovering Quality in Pruned Large Language Models Vithursan Thangarasa, Ganesh Venkatesh, Nish Sinnadurai, Sean Lie |

Paper | |

| LLM-Rank: A Graph Theoretical Approach to Pruning Large Language Models David Hoffmann, Kailash Budhathoki, Matthaeus Kleindessner |

Paper | |

Is C4 Dataset Optimal for Pruning? An Investigation of Calibration Data for LLM Pruning Abhinav Bandari, Lu Yin, Cheng-Yu Hsieh, Ajay Kumar Jaiswal, Tianlong Chen, Li Shen, Ranjay Krishna, Shiwei Liu |

Github Paper |

|

| Mitigating Copy Bias in In-Context Learning through Neuron Pruning Ameen Ali, Lior Wolf, Ivan Titov |

|

Paper |

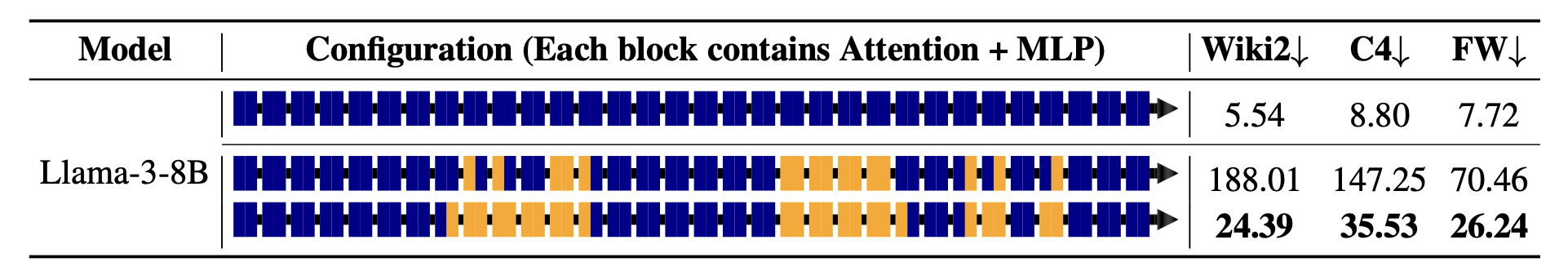

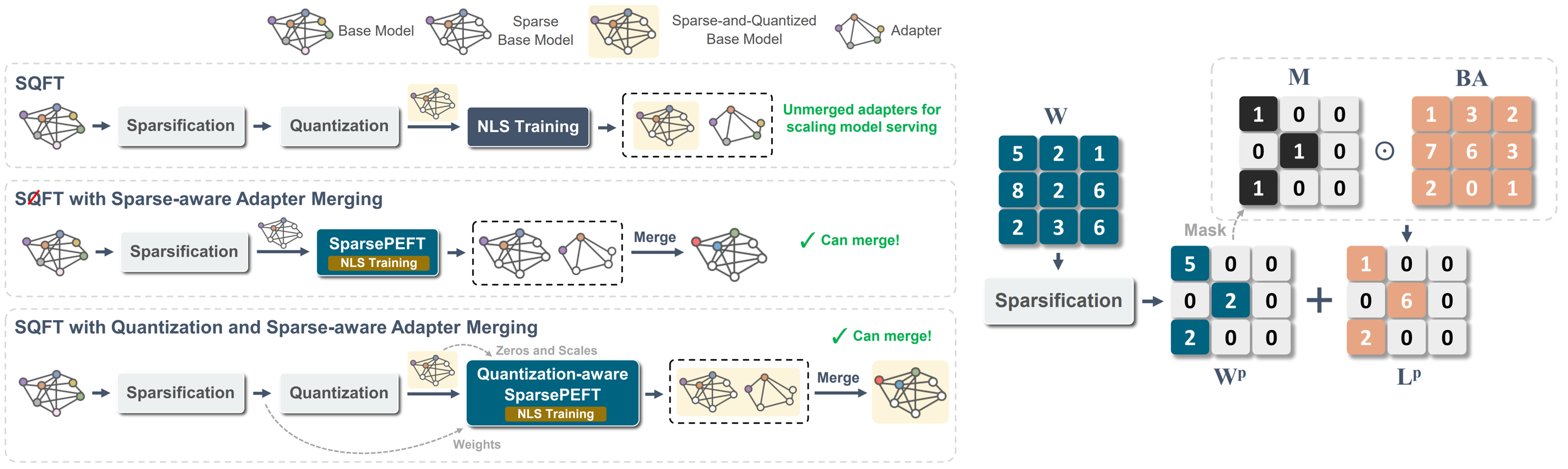

| SQFT: Low-cost Model Adaptation in Low-precision Sparse Foundation Models Juan Pablo Munoz, Jinjie Yuan, Nilesh Jain |

|

Github Paper |

| MaskLLM: Learnable Semi-Structured Sparsity for Large Language Models Gongfan Fang, Hongxu Yin, Saurav Muralidharan, Greg Heinrich, Jeff Pool, Jan Kautz, Pavlo Molchanov, Xinchao Wang |

|

Github Paper |

Search for Efficient Large Language Models Xuan Shen, Pu Zhao, Yifan Gong, Zhenglun Kong, Zheng Zhan, Yushu Wu, Ming Lin, Chao Wu, Xue Lin, Yanzhi Wang |

Paper | |

CFSP: An Efficient Structured Pruning Framework for LLMs with Coarse-to-Fine Activation Information Yuxin Wang, Minghua Ma, Zekun Wang, Jingchang Chen, Huiming Fan, Liping Shan, Qing Yang, Dongliang Xu, Ming Liu, Bing Qin |

Github Paper |

|

| OATS: Outlier-Aware Pruning Through Sparse and Low Rank Decomposition Stephen Zhang, Vardan Papyan |

Paper | |

| KVPruner: Structural Pruning for Faster and Memory-Efficient Large Language Models Bo Lv, Quan Zhou, Xuanang Ding, Yan Wang, Zeming Ma |

Paper | |

| Evaluating the Impact of Compression Techniques on Task-Specific Performance of Large Language Models Bishwash Khanal, Jeffery M. Capone |

Paper | |

| STUN: Structured-Then-Unstructured Pruning for Scalable MoE Pruning Jaeseong Lee, seung-won hwang, Aurick Qiao, Daniel F Campos, Zhewei Yao, Yuxiong He |

Paper | |

PAT: Pruning-Aware Tuning for Large Language Models Yijiang Liu, Huanrui Yang, Youxin Chen, Rongyu Zhang, Miao Wang, Yuan Du, Li Du |

|

Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Knowledge Distillation of Large Language Models Yuxian Gu, Li Dong, Furu Wei, Minlie Huang |

|

Github Paper |

| Improving Mathematical Reasoning Capabilities of Small Language Models via Feedback-Driven Distillation Xunyu Zhu, Jian Li, Can Ma, Weiping Wang |

Paper | |

Generative Context Distillation Haebin Shin, Lei Ji, Yeyun Gong, Sungdong Kim, Eunbi Choi, Minjoon Seo |

|

Github Paper |

| SWITCH: Studying with Teacher for Knowledge Distillation of Large Language Models Jahyun Koo, Yerin Hwang, Yongil Kim, Taegwan Kang, Hyunkyung Bae, Kyomin Jung |

|

Paper |

Beyond Autoregression: Fast LLMs via Self-Distillation Through Time Justin Deschenaux, Caglar Gulcehre |

Github Paper |

|

| Pre-training Distillation for Large Language Models: A Design Space Exploration Hao Peng, Xin Lv, Yushi Bai, Zijun Yao, Jiajie Zhang, Lei Hou, Juanzi Li |

Paper | |

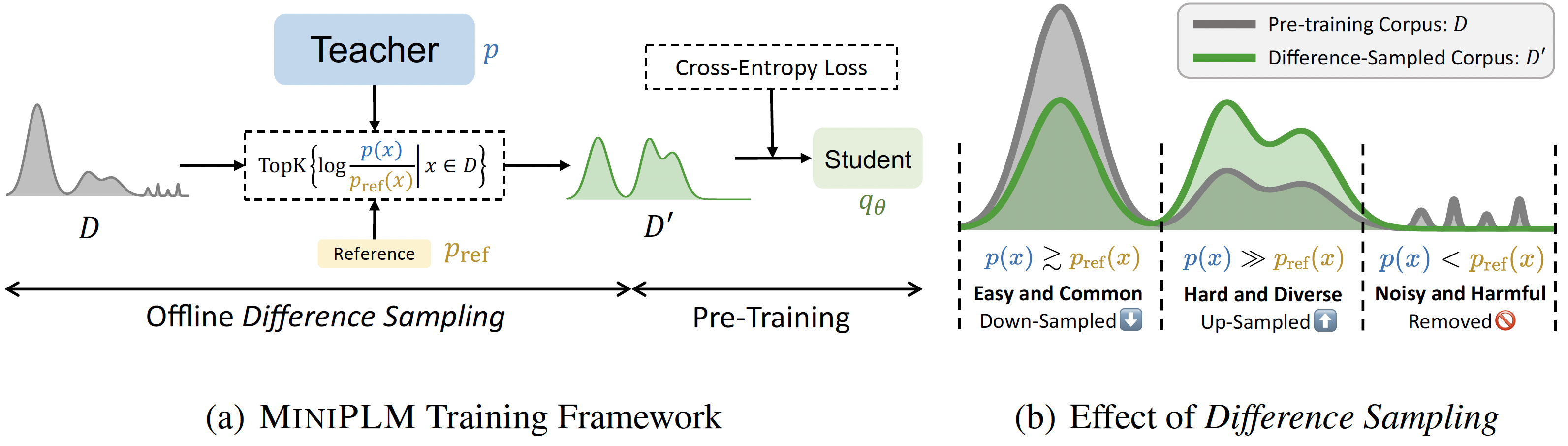

MiniPLM: Knowledge Distillation for Pre-Training Language Models Yuxian Gu, Hao Zhou, Fandong Meng, Jie Zhou, Minlie Huang |

|

Github Paper |

| Speculative Knowledge Distillation: Bridging the Teacher-Student Gap Through Interleaved Sampling Wenda Xu, Rujun Han, Zifeng Wang, Long T. Le, Dhruv Madeka, Lei Li, William Yang Wang, Rishabh Agarwal, Chen-Yu Lee, Tomas Pfister |

Paper | |

| Evolutionary Contrastive Distillation for Language Model Alignment Julian Katz-Samuels, Zheng Li, Hyokun Yun, Priyanka Nigam, Yi Xu, Vaclav Petricek, Bing Yin, Trishul Chilimbi |

Paper | |

| BabyLlama-2: Ensemble-Distilled Models Consistently Outperform Teachers With Limited Data Jean-Loup Tastet, Inar Timiryasov |

Paper | |

| EchoAtt: Attend, Copy, then Adjust for More Efficient Large Language Models Hossein Rajabzadeh, Aref Jafari, Aman Sharma, Benyamin Jami, Hyock Ju Kwon, Ali Ghodsi, Boxing Chen, Mehdi Rezagholizadeh |

Paper | |

SKIntern: Internalizing Symbolic Knowledge for Distilling Better CoT Capabilities into Small Language Models Huanxuan Liao, Shizhu He, Yupu Hao, Xiang Li, Yuanzhe Zhang, Kang Liu, Jun Zhao |

Github Paper |

|

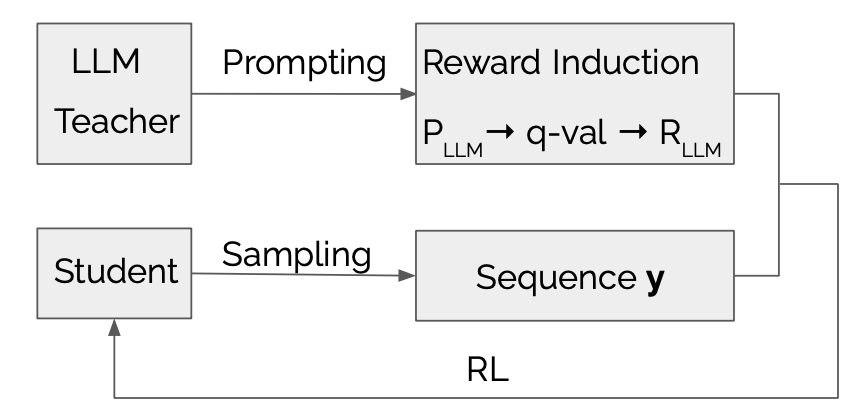

LLMR: Knowledge Distillation with a Large Language Model-Induced Reward Dongheng Li, Yongchang Hao, Lili Mou |

|

Github Paper |

| Exploring and Enhancing the Transfer of Distribution in Knowledge Distillation for Autoregressive Language Models Jun Rao, Xuebo Liu, Zepeng Lin, Liang Ding, Jing Li, Dacheng Tao |

Paper | |

| Efficient Knowledge Distillation: Empowering Small Language Models with Teacher Model Insights Mohamad Ballout, Ulf Krumnack, Gunther Heidemann, Kai-Uwe Kühnberger |

Paper | |

The Mamba in the Llama: Distilling and Accelerating Hybrid Models Junxiong Wang, Daniele Paliotta, Avner May, Alexander M. Rush, Tri Dao |

Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

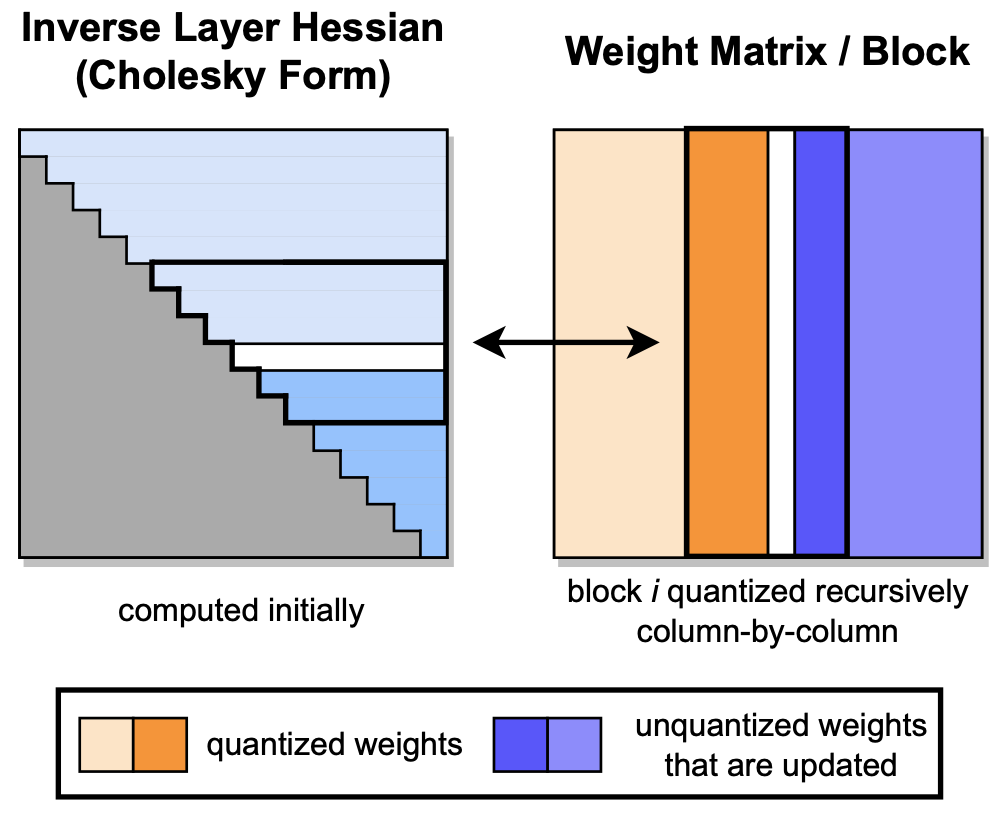

GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers Elias Frantar, Saleh Ashkboos, Torsten Hoefler, Dan Alistarh |

|

Github Paper |

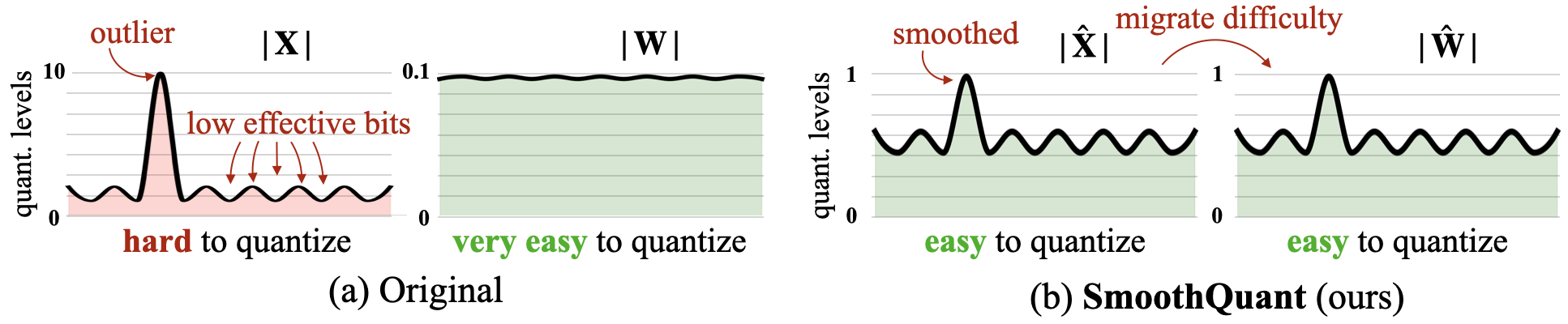

| SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models Guangxuan Xiao, Ji Lin, Mickael Seznec, Hao Wu, Julien Demouth, Song Han |

|

Github Paper |

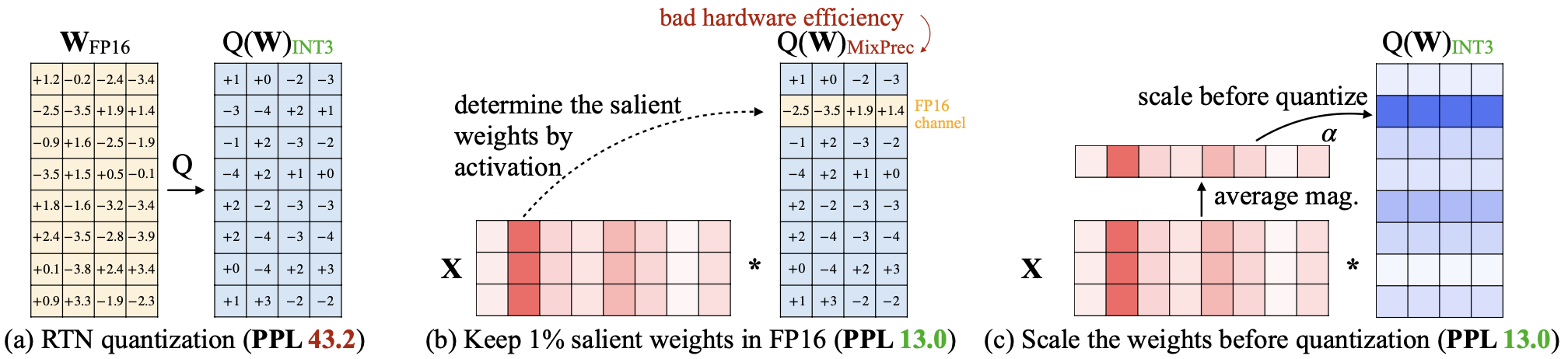

| AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration Ji Lin, Jiaming Tang, Haotian Tang, Shang Yang, Xingyu Dang, Song Han |

|

Github Paper |

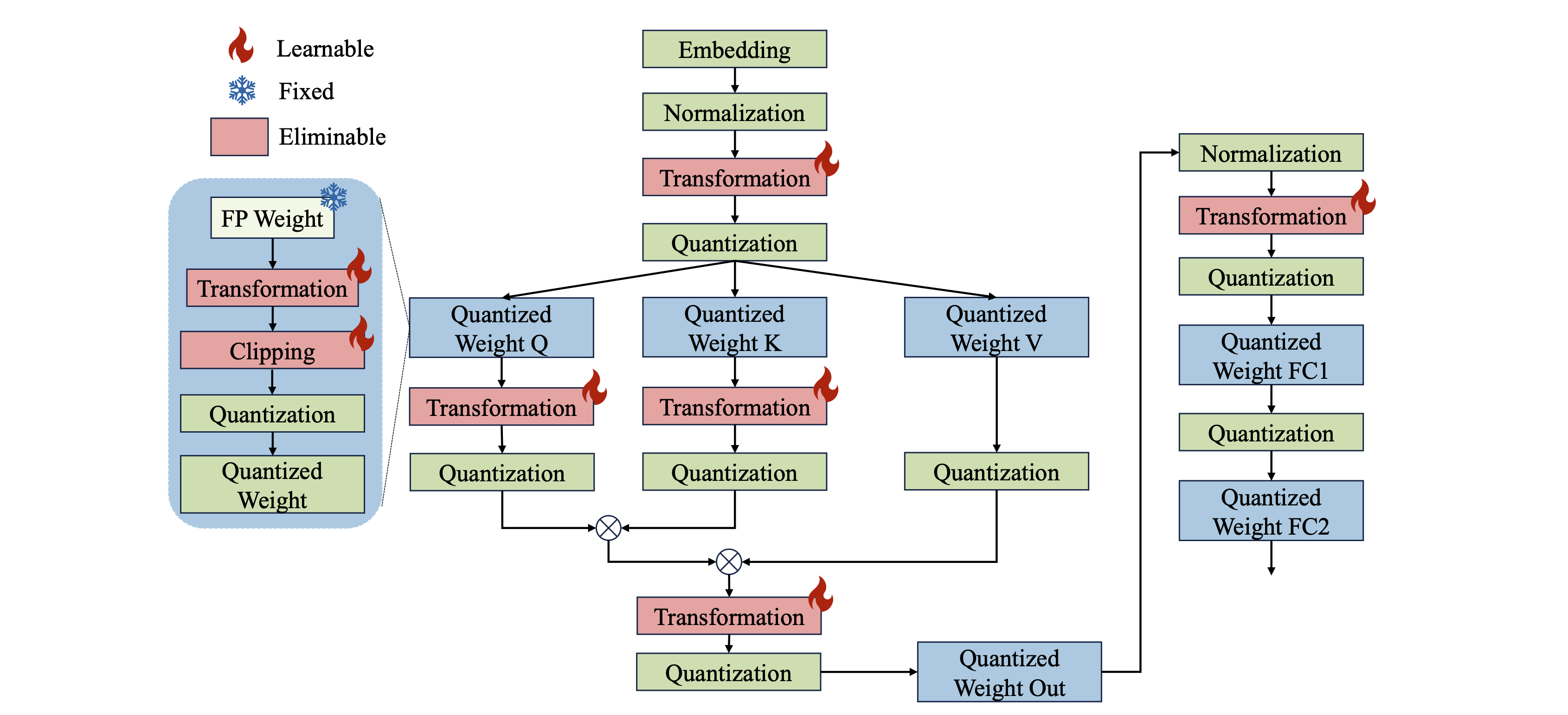

OmniQuant: Omnidirectionally Calibrated Quantization for Large Language Models Wenqi Shao, Mengzhao Chen, Zhaoyang Zhang, Peng Xu, Lirui Zhao, Zhiqian Li, Kaipeng Zhang, Peng Gao, Yu Qiao, Ping Luo |

|

Github Paper |

| SKIM: Any-bit Quantization Pushing The Limits of Post-Training Quantization Runsheng Bai, Qiang Liu, Bo Liu |

Paper | |

| CPTQuant -- A Novel Mixed Precision Post-Training Quantization Techniques for Large Language Models Amitash Nanda, Sree Bhargavi Balija, Debashis Sahoo |

Paper | |

Anda: Unlocking Efficient LLM Inference with a Variable-Length Grouped Activation Data Format Chao Fang, Man Shi, Robin Geens, Arne Symons, Zhongfeng Wang, Marian Verhelst |

Paper | |

| MixPE: Quantization and Hardware Co-design for Efficient LLM Inference Yu Zhang, Mingzi Wang, Lancheng Zou, Wulong Liu, Hui-Ling Zhen, Mingxuan Yuan, Bei Yu |

Paper | |

BitMoD: Bit-serial Mixture-of-Datatype LLM Acceleration Yuzong Chen, Ahmed F. AbouElhamayed, Xilai Dai, Yang Wang, Marta Andronic, George A. Constantinides, Mohamed S. Abdelfattah |

Github Paper |

|

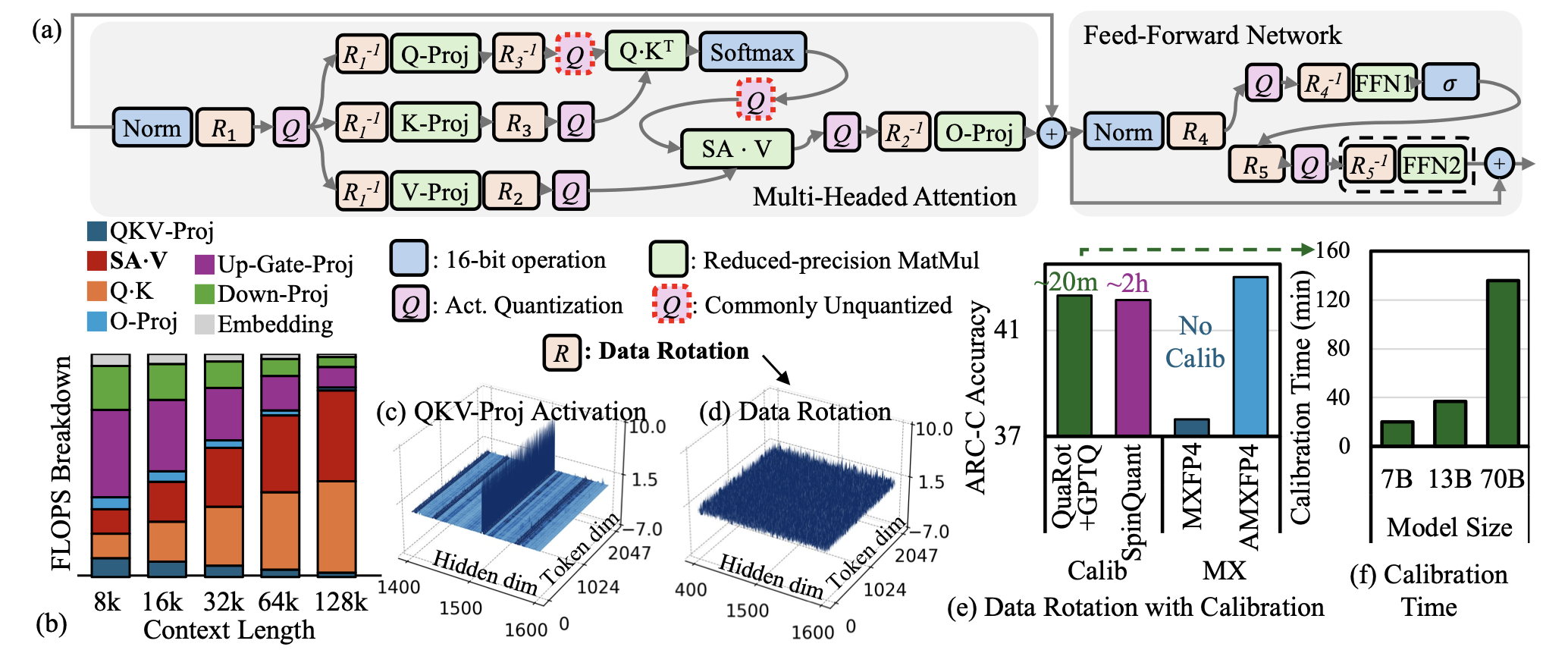

| AMXFP4: Taming Activation Outliers with Asymmetric Microscaling Floating-Point for 4-bit LLM Inference Janghwan Lee, Jiwoong Park, Jinseok Kim, Yongjik Kim, Jungju Oh, Jinwook Oh, Jungwook Choi |

|

Paper |

| Bi-Mamba: Towards Accurate 1-Bit State Space Models Shengkun Tang, Liqun Ma, Haonan Li, Mingjie Sun, Zhiqiang Shen |

Paper | |

| "Give Me BF16 or Give Me Death"? Accuracy-Performance Trade-Offs in LLM Quantization Eldar Kurtic, Alexandre Marques, Shubhra Pandit, Mark Kurtz, Dan Alistarh |

Paper | |

| GWQ: Gradient-Aware Weight Quantization for Large Language Models Yihua Shao, Siyu Liang, Xiaolin Lin, Zijian Ling, Zixian Zhu et al |

Paper | |

| A Comprehensive Study on Quantization Techniques for Large Language Models Jiedong Lang, Zhehao Guo, Shuyu Huang |

Paper | |

| BitNet a4.8: 4-bit Activations for 1-bit LLMs Hongyu Wang, Shuming Ma, Furu Wei |

Paper | |

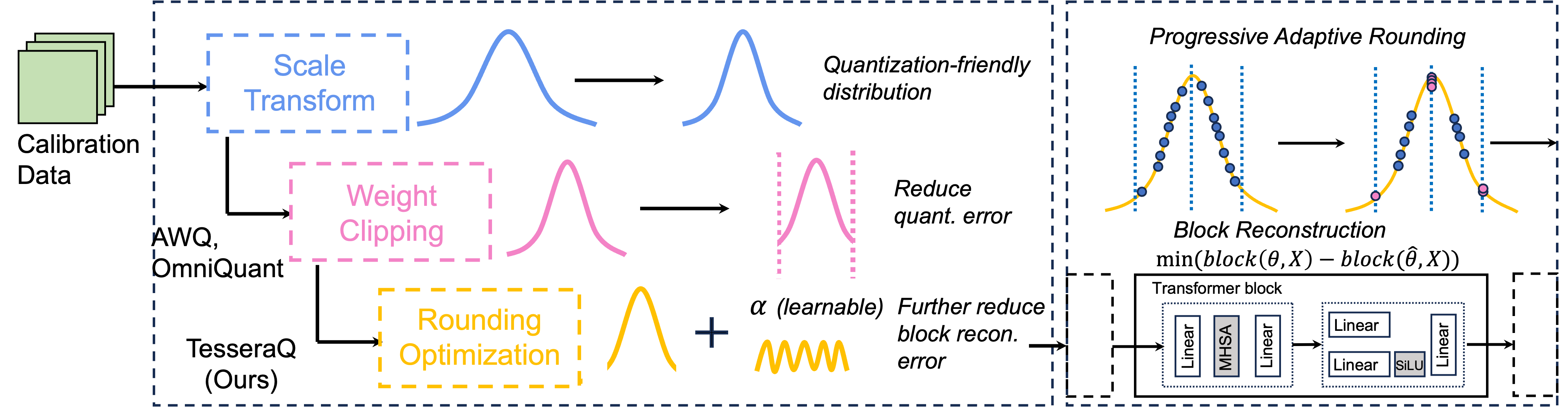

TesseraQ: Ultra Low-Bit LLM Post-Training Quantization with Block Reconstruction Yuhang Li, Priyadarshini Panda |

|

Github Paper |

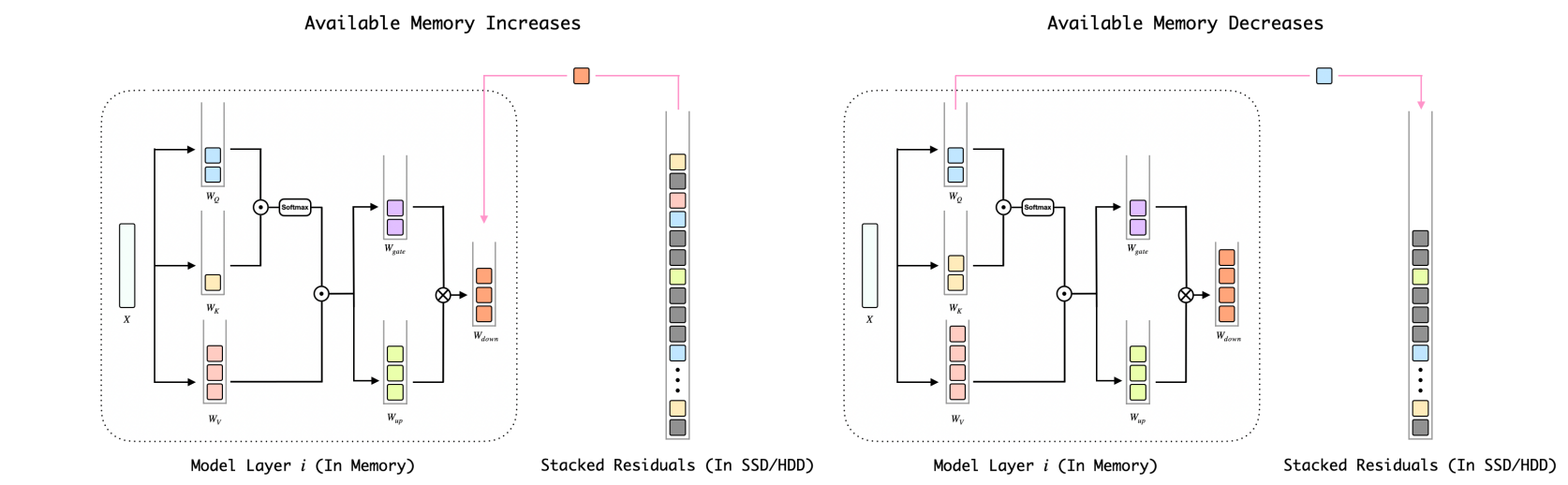

BitStack: Fine-Grained Size Control for Compressed Large Language Models in Variable Memory Environments Xinghao Wang, Pengyu Wang, Bo Wang, Dong Zhang, Yunhua Zhou, Xipeng Qiu |

|

Github Paper |

| The Impact of Inference Acceleration Strategies on Bias of LLMs Elisabeth Kirsten, Ivan Habernal, Vedant Nanda, Muhammad Bilal Zafar |

Paper | |

| Understanding the difficulty of low-precision post-training quantization of large language models Zifei Xu, Sayeh Sharify, Wanzin Yazar, Tristan Webb, Xin Wang |

Paper | |

1-bit AI Infra: Part 1.1, Fast and Lossless BitNet b1.58 Inference on CPUs Jinheng Wang, Hansong Zhou, Ting Song, Shaoguang Mao, Shuming Ma, Hongyu Wang, Yan Xia, Furu Wei |

Github Paper |

|

| QuAILoRA: Quantization-Aware Initialization for LoRA Neal Lawton, Aishwarya Padmakumar, Judith Gaspers, Jack FitzGerald, Anoop Kumar, Greg Ver Steeg, Aram Galstyan |

Paper | |

| Evaluating Quantized Large Language Models for Code Generation on Low-Resource Language Benchmarks Enkhbold Nyamsuren |

Paper | |

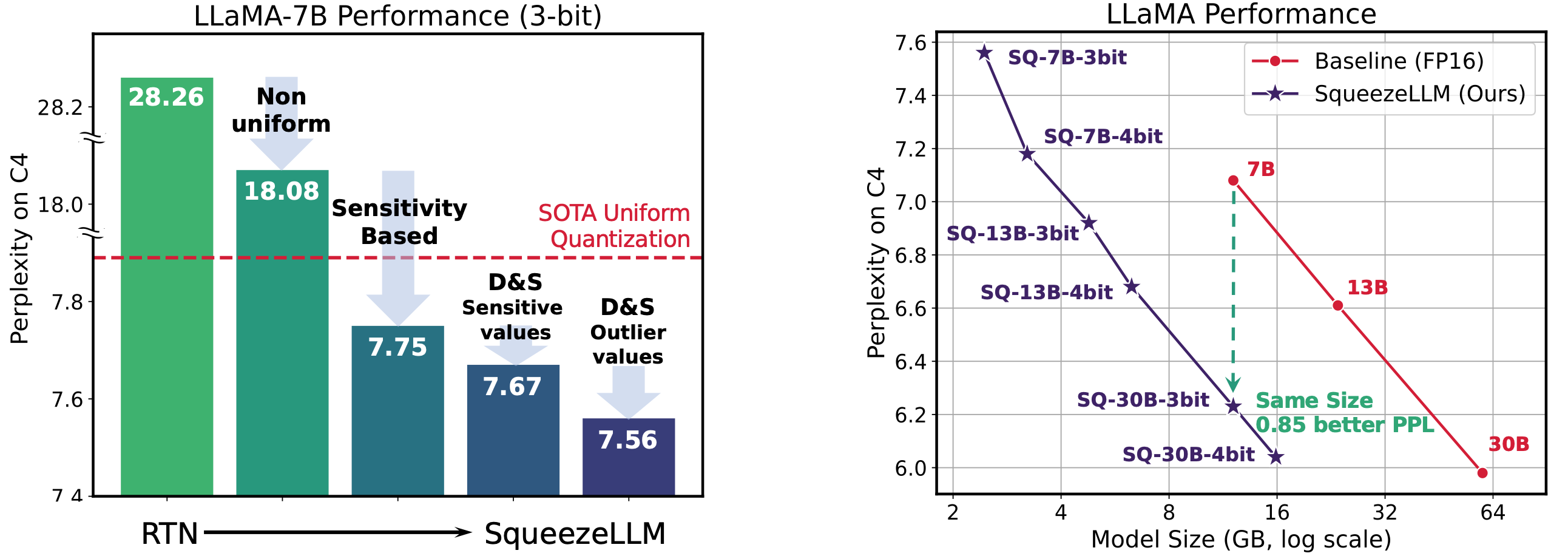

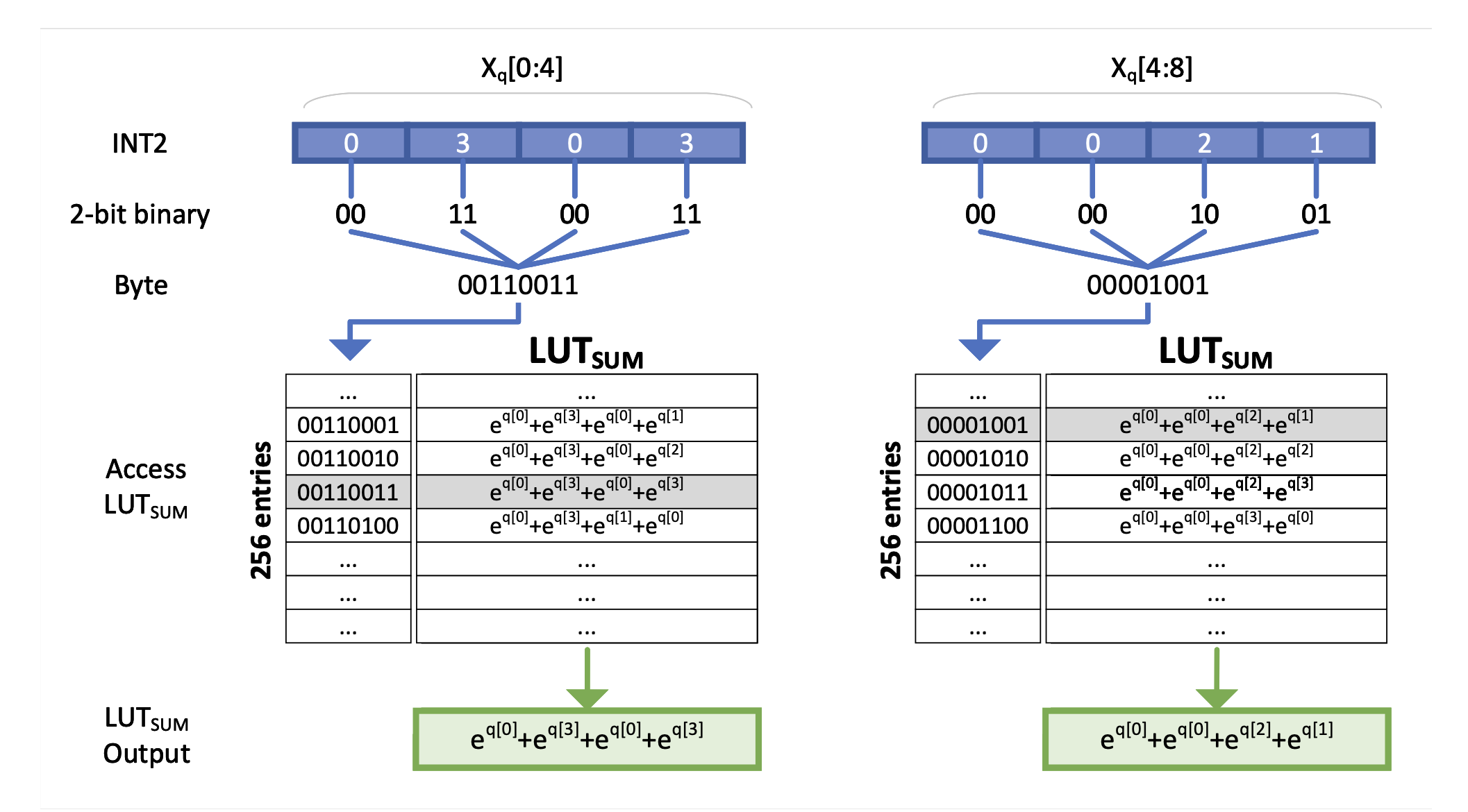

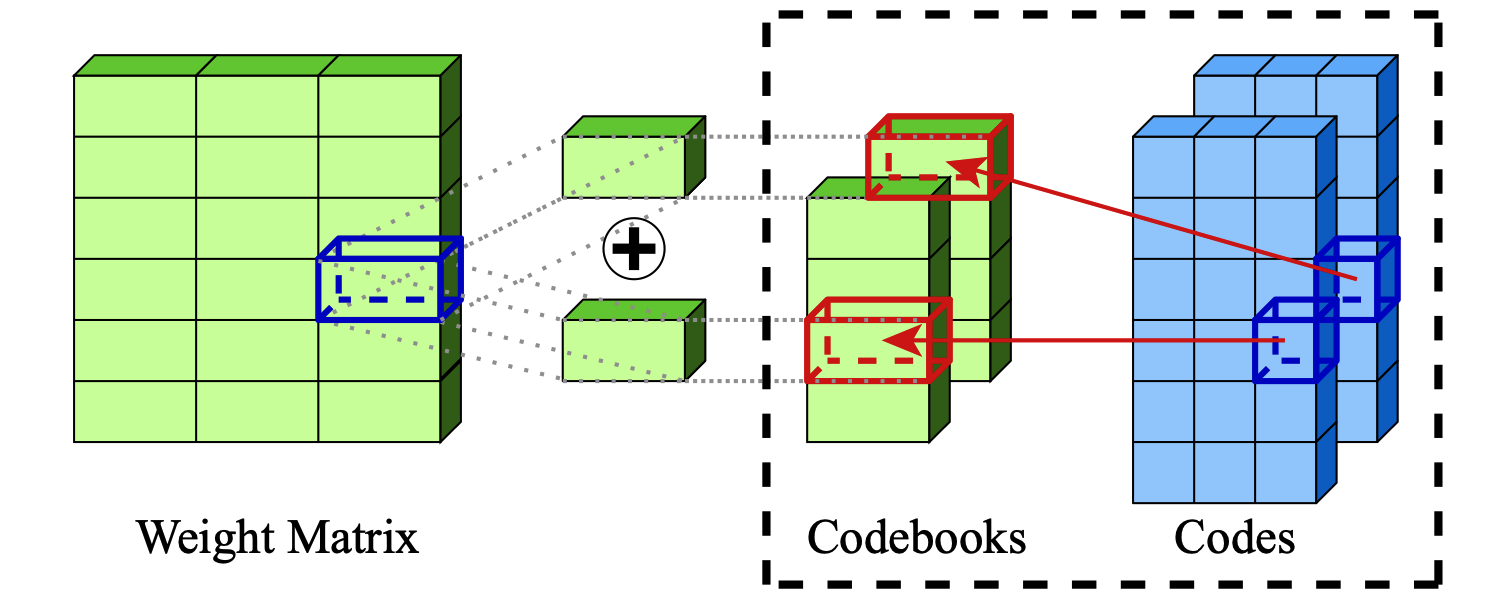

| SqueezeLLM: Dense-and-Sparse Quantization Sehoon Kim, Coleman Hooper, Amir Gholami, Zhen Dong, Xiuyu Li, Sheng Shen, Michael W. Mahoney, Kurt Keutzer |

|

Github Paper |

| Pyramid Vector Quantization for LLMs Tycho F. A. van der Ouderaa, Maximilian L. Croci, Agrin Hilmkil, James Hensman |

Paper | |

| SeedLM: Compressing LLM Weights into Seeds of Pseudo-Random Generators Rasoul Shafipour, David Harrison, Maxwell Horton, Jeffrey Marker, Houman Bedayat, Sachin Mehta, Mohammad Rastegari, Mahyar Najibi, Saman Naderiparizi |

Paper | |

FlatQuant: Flatness Matters for LLM Quantization Yuxuan Sun, Ruikang Liu, Haoli Bai, Han Bao, Kang Zhao, Yuening Li, Jiaxin Hu, Xianzhi Yu, Lu Hou, Chun Yuan, Xin Jiang, Wulong Liu, Jun Yao |

Github Paper |

|

SLiM: One-shot Quantized Sparse Plus Low-rank Approximation of LLMs Mohammad Mozaffari, Maryam Mehri Dehnavi |

Github Paper |

|

| Scaling laws for post-training quantized large language models Zifei Xu, Alexander Lan, Wanzin Yazar, Tristan Webb, Sayeh Sharify, Xin Wang |

Paper | |

| Continuous Approximations for Improving Quantization Aware Training of LLMs He Li, Jianhang Hong, Yuanzhuo Wu, Snehal Adbol, Zonglin Li |

Paper | |

DAQ: Density-Aware Post-Training Weight-Only Quantization For LLMs Yingsong Luo, Ling Chen |

Github Paper |

|

Quamba: A Post-Training Quantization Recipe for Selective State Space Models Hung-Yueh Chiang, Chi-Chih Chang, Natalia Frumkin, Kai-Chiang Wu, Diana Marculescu |

Github Paper |

|

| AsymKV: Enabling 1-Bit Quantization of KV Cache with Layer-Wise Asymmetric Quantization Configurations Qian Tao, Wenyuan Yu, Jingren Zhou |

Paper | |

| Channel-Wise Mixed-Precision Quantization for Large Language Models Zihan Chen, Bike Xie, Jundong Li, Cong Shen |

Paper | |

| Progressive Mixed-Precision Decoding for Efficient LLM Inference Hao Mark Chen, Fuwen Tan, Alexandros Kouris, Royson Lee, Hongxiang Fan, Stylianos I. Venieris |

Paper | |

EXAQ: Exponent Aware Quantization For LLMs Acceleration Moran Shkolnik, Maxim Fishman, Brian Chmiel, Hilla Ben-Yaacov, Ron Banner, Kfir Yehuda Levy |

|

Github Paper |

PrefixQuant: Static Quantization Beats Dynamic through Prefixed Outliers in LLMs Mengzhao Chen, Yi Liu, Jiahao Wang, Yi Bin, Wenqi Shao, Ping Luo |

Github Paper |

|

Extreme Compression of Large Language Models via Additive Quantization Vage Egiazarian, Andrei Panferov, Denis Kuznedelev, Elias Frantar, Artem Babenko, Dan Alistarh |

|

Github Paper |

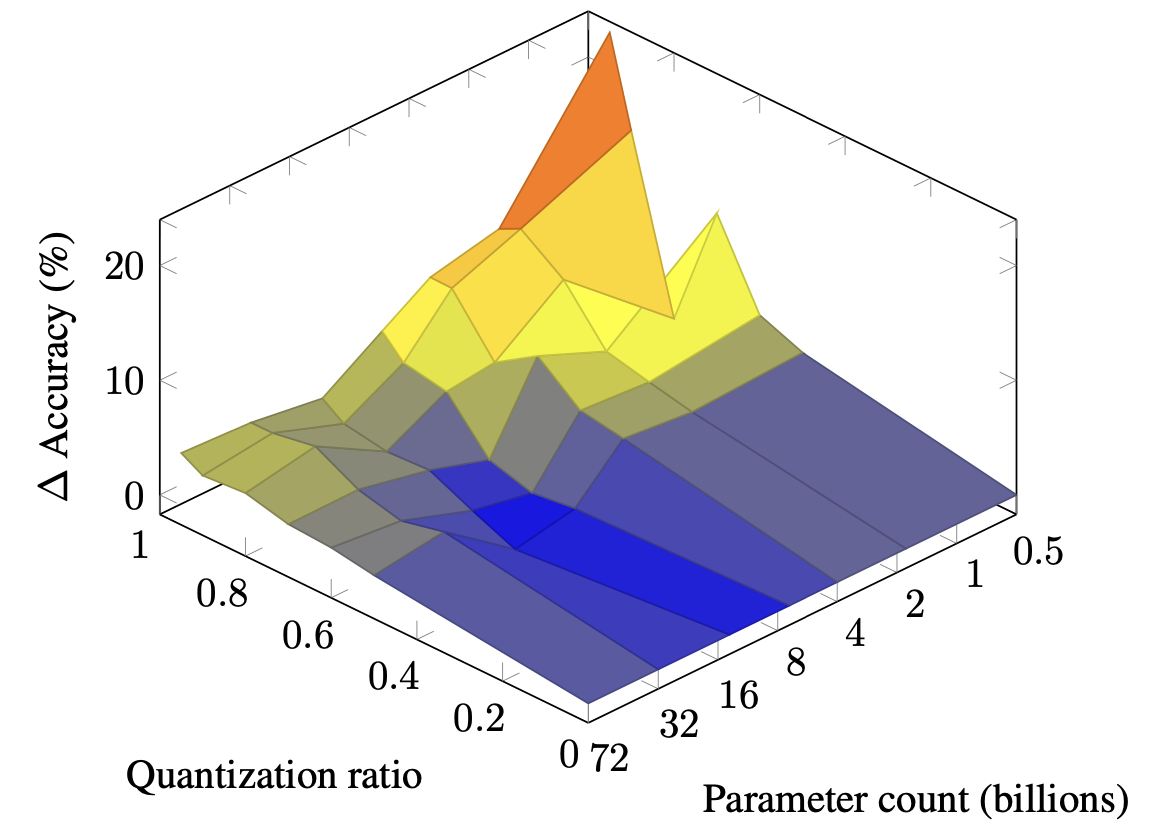

| Scaling Laws for Mixed quantization in Large Language Models Zeyu Cao, Cheng Zhang, Pedro Gimenes, Jianqiao Lu, Jianyi Cheng, Yiren Zhao |

|

Paper |

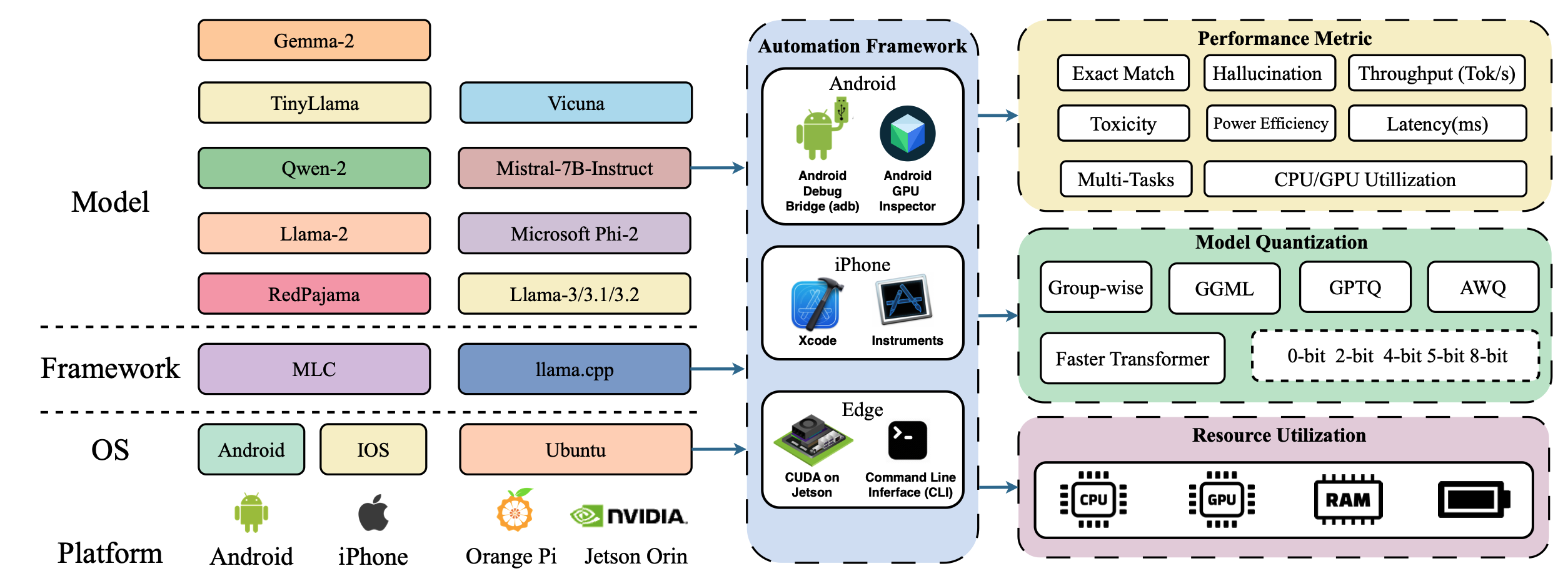

| PalmBench: A Comprehensive Benchmark of Compressed Large Language Models on Mobile Platforms Yilong Li, Jingyu Liu, Hao Zhang, M Badri Narayanan, Utkarsh Sharma, Shuai Zhang, Pan Hu, Yijing Zeng, Jayaram Raghuram, Suman Banerjee |

|

Paper |

| CrossQuant: A Post-Training Quantization Method with Smaller Quantization Kernel for Precise Large Language Model Compression Wenyuan Liu, Xindian Ma, Peng Zhang, Yan Wang |

Paper | |

| SageAttention: Accurate 8-Bit Attention for Plug-and-play Inference Acceleration Jintao Zhang, Jia wei, Pengle Zhang, Jun Zhu, Jianfei Chen |

Paper | |

| Addition is All You Need for Energy-efficient Language Models Hongyin Luo, Wei Sun |

Paper | |

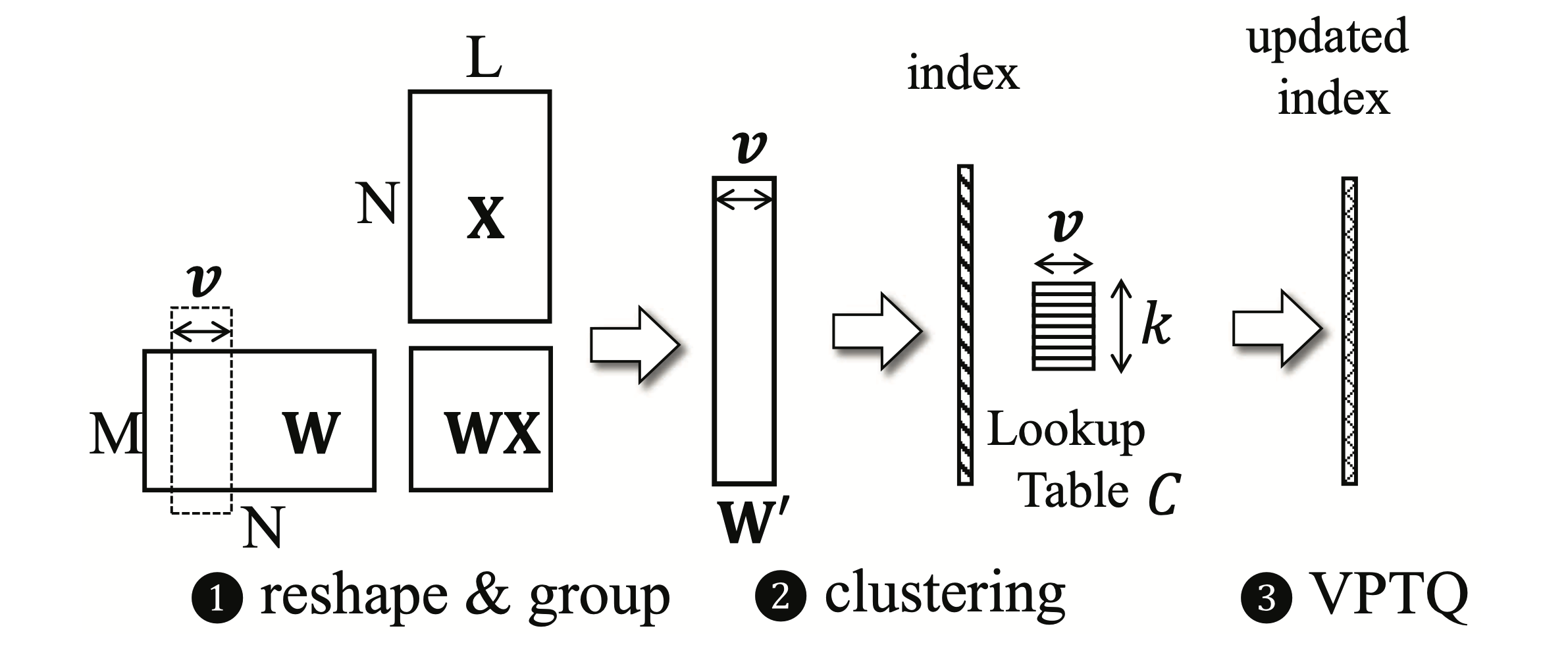

VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models Yifei Liu, Jicheng Wen, Yang Wang, Shengyu Ye, Li Lyna Zhang, Ting Cao, Cheng Li, Mao Yang |

|

Github Paper |

INT-FlashAttention: Enabling Flash Attention for INT8 Quantization Shimao Chen, Zirui Liu, Zhiying Wu, Ce Zheng, Peizhuang Cong, Zihan Jiang, Yuhan Wu, Lei Su, Tong Yang |

Github Paper |

|

| Accumulator-Aware Post-Training Quantization Ian Colbert, Fabian Grob, Giuseppe Franco, Jinjie Zhang, Rayan Saab |

Paper | |

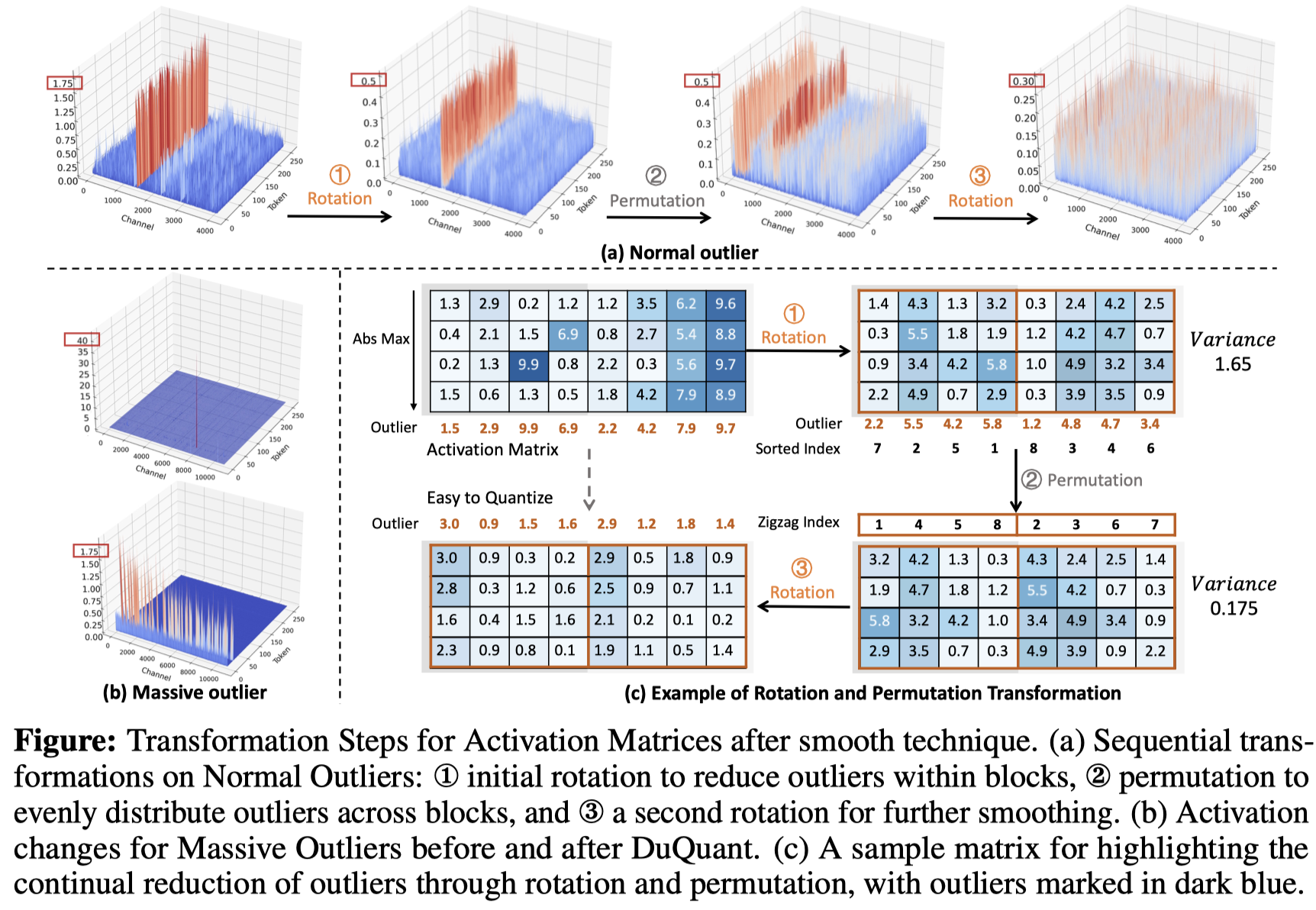

DuQuant: Distributing Outliers via Dual Transformation Makes Stronger Quantized LLMs Haokun Lin, Haobo Xu, Yichen Wu, Jingzhi Cui, Yingtao Zhang, Linzhan Mou, Linqi Song, Zhenan Sun, Ying Wei |

|

Github Paper |

| A Comprehensive Evaluation of Quantized Instruction-Tuned Large Language Models: An Experimental Analysis up to 405B Jemin Lee, Sihyeong Park, Jinse Kwon, Jihun Oh, Yongin Kwon |

Paper | |

| The Uniqueness of LLaMA3-70B with Per-Channel Quantization: An Empirical Study Minghai Qin |

Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

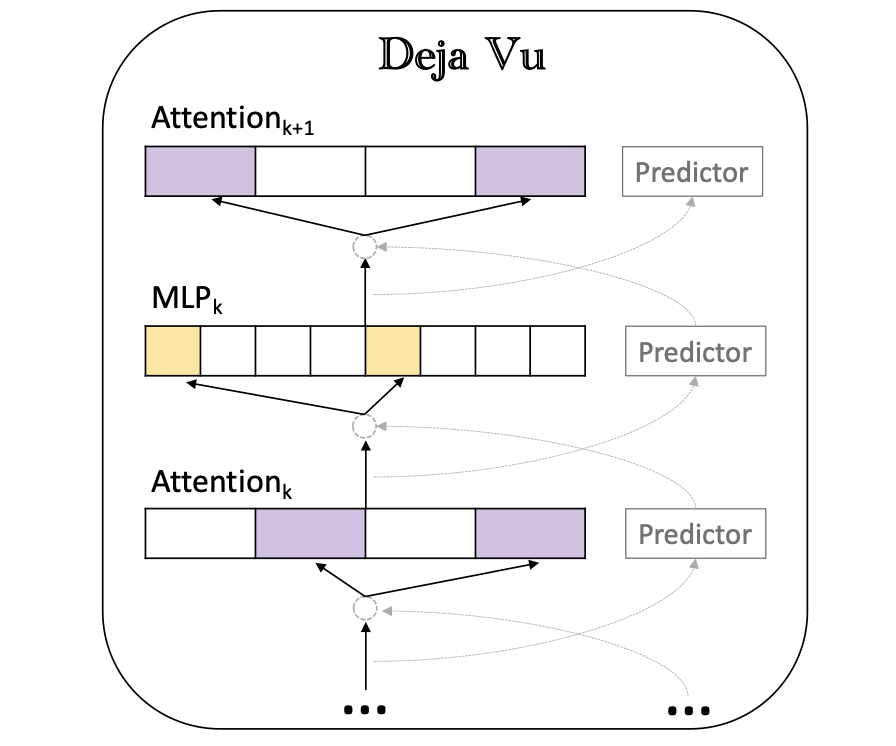

Deja Vu: Contextual Sparsity for Efficient LLMs at Inference Time Zichang Liu, Jue WANG, Tri Dao, Tianyi Zhou, Binhang Yuan, Zhao Song, Anshumali Shrivastava, Ce Zhang, Yuandong Tian, Christopher Re, Beidi Chen |

|

Github Paper |

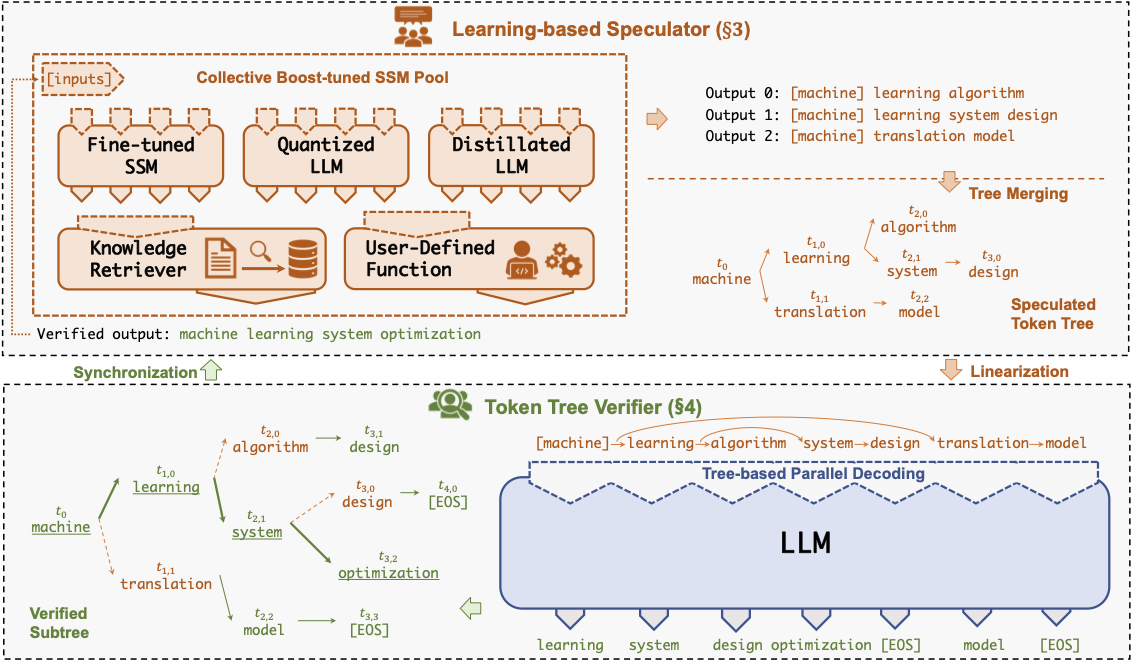

| SpecInfer: Accelerating Generative LLM Serving with Speculative Inference and Token Tree Verification Xupeng Miao, Gabriele Oliaro, Zhihao Zhang, Xinhao Cheng, Zeyu Wang, Rae Ying Yee Wong, Zhuoming Chen, Daiyaan Arfeen, Reyna Abhyankar, Zhihao Jia |

|

Github paper |

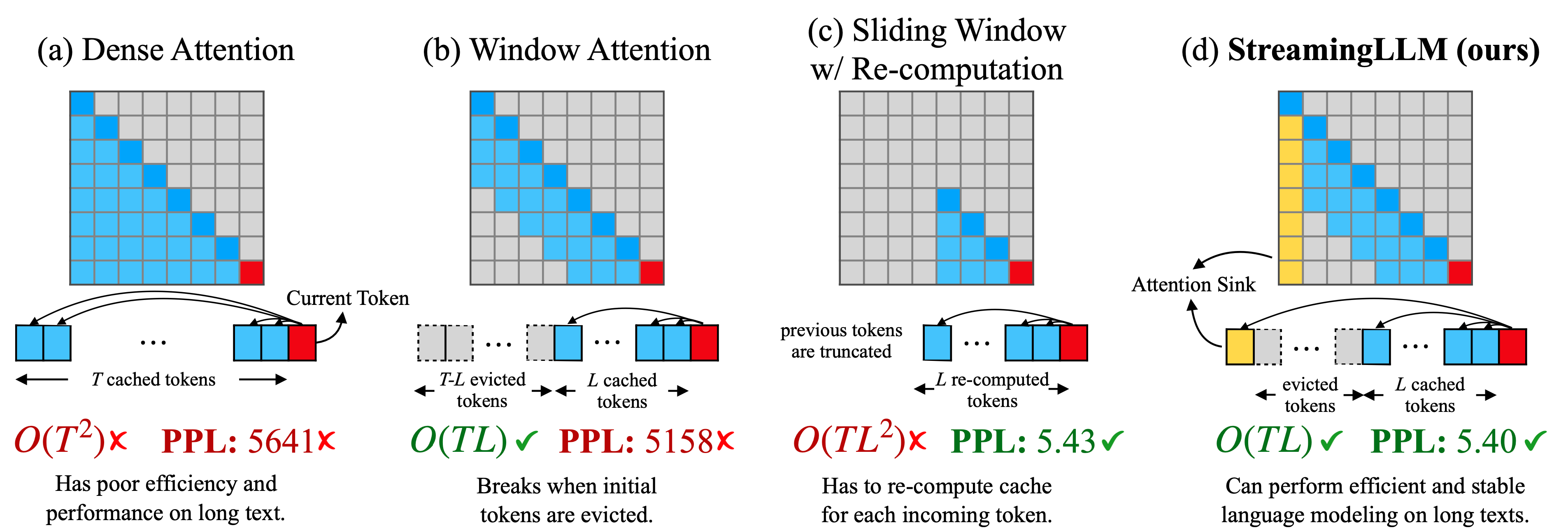

Efficient Streaming Language Models with Attention Sinks Guangxuan Xiao, Yuandong Tian, Beidi Chen, Song Han, Mike Lewis |

|

Github Paper |

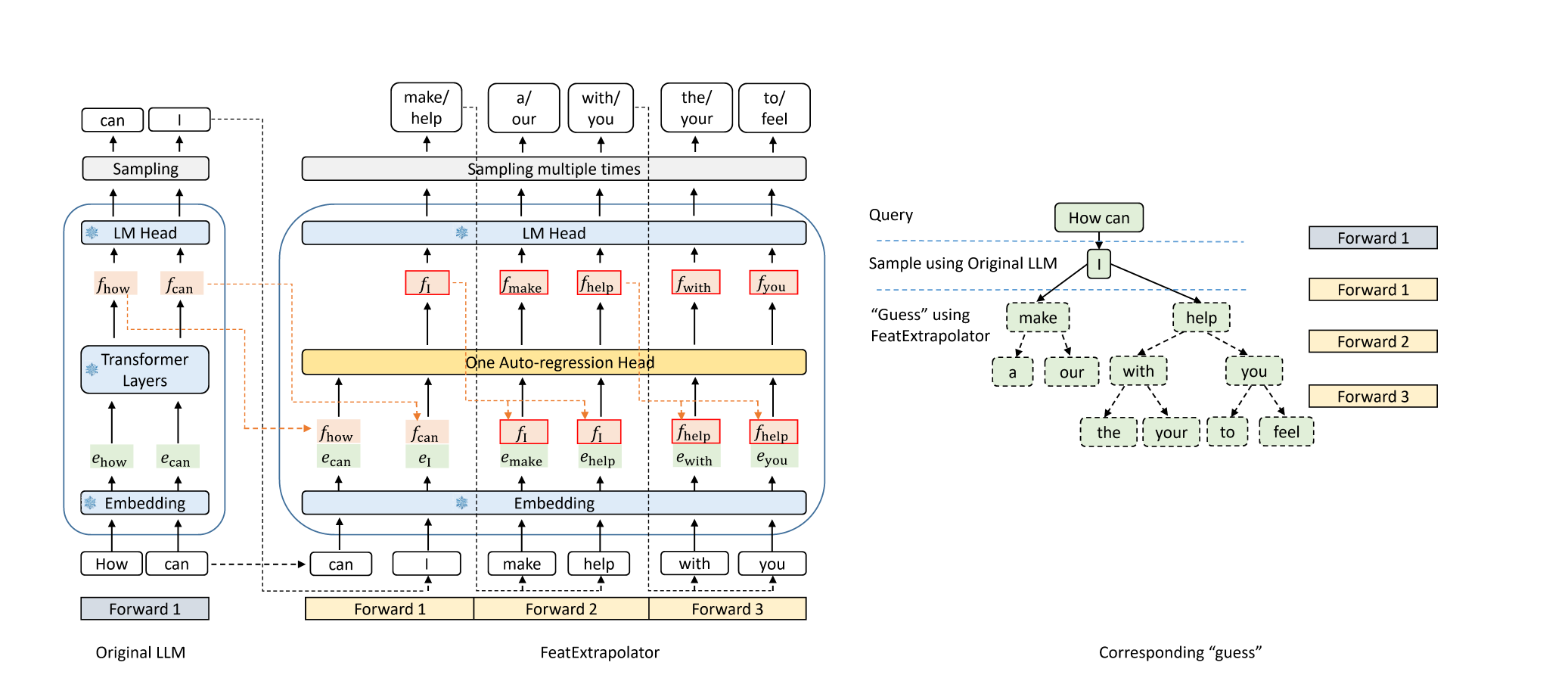

EAGLE: Lossless Acceleration of LLM Decoding by Feature Extrapolation Yuhui Li, Chao Zhang, and Hongyang Zhang |

|

Github Blog |

Medusa: Simple LLM Inference Acceleration Framework with Multiple Decoding Heads Tianle Cai, Yuhong Li, Zhengyang Geng, Hongwu Peng, Jason D. Lee, Deming Chen, Tri Dao |

Github Paper |

|

| Speculative Decoding with CTC-based Draft Model for LLM Inference Acceleration Zhuofan Wen, Shangtong Gui, Yang Feng |

Paper | |

| PLD+: Accelerating LLM inference by leveraging Language Model Artifacts Shwetha Somasundaram, Anirudh Phukan, Apoorv Saxena |

Paper | |

FastDraft: How to Train Your Draft Ofir Zafrir, Igor Margulis, Dorin Shteyman, Guy Boudoukh |

Paper | |

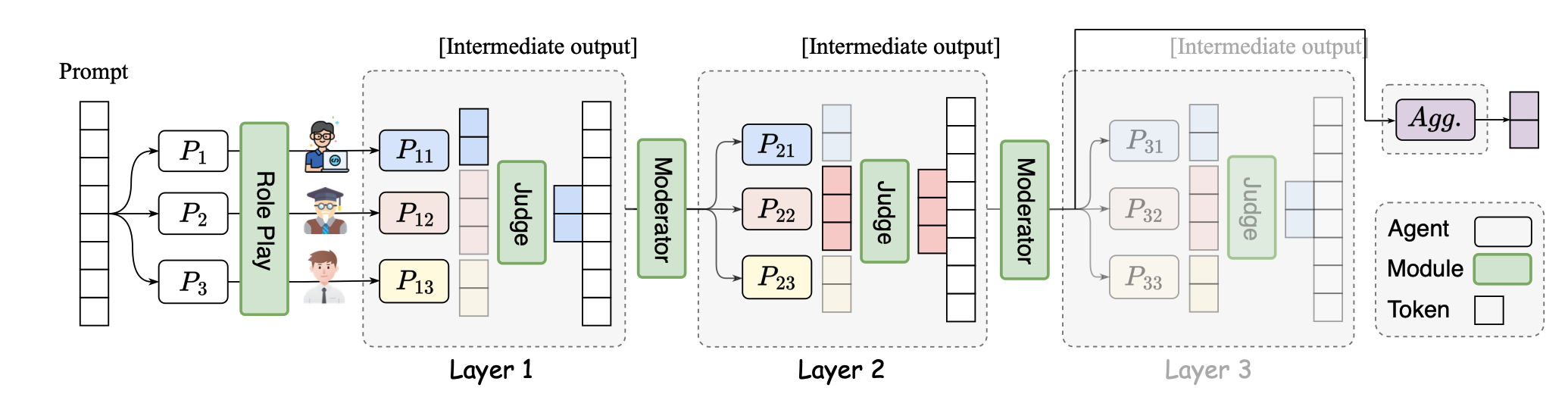

SMoA: Improving Multi-agent Large Language Models with Sparse Mixture-of-Agents Dawei Li, Zhen Tan, Peijia Qian, Yifan Li, Kumar Satvik Chaudhary, Lijie Hu, Jiayi Shen |

|

Github Paper |

| The N-Grammys: Accelerating Autoregressive Inference with Learning-Free Batched Speculation Lawrence Stewart, Matthew Trager, Sujan Kumar Gonugondla, Stefano Soatto |

Paper | |

| Accelerated AI Inference via Dynamic Execution Methods Haim Barad, Jascha Achterberg, Tien Pei Chou, Jean Yu |

Paper | |

| SuffixDecoding: A Model-Free Approach to Speeding Up Large Language Model Inference Gabriele Oliaro, Zhihao Jia, Daniel Campos, Aurick Qiao |

Paper | |

| Dynamic Strategy Planning for Efficient Question Answering with Large Language Models Tanmay Parekh, Pradyot Prakash, Alexander Radovic, Akshay Shekher, Denis Savenkov |

Paper | |

MagicPIG: LSH Sampling for Efficient LLM Generation Zhuoming Chen, Ranajoy Sadhukhan, Zihao Ye, Yang Zhou, Jianyu Zhang, Niklas Nolte, Yuandong Tian, Matthijs Douze, Leon Bottou, Zhihao Jia, Beidi Chen |

Github Paper |

|

| Faster Language Models with Better Multi-Token Prediction Using Tensor Decomposition Artem Basharin, Andrei Chertkov, Ivan Oseledets |

|

Paper |

| Efficient Inference for Augmented Large Language Models Rana Shahout, Cong Liang, Shiji Xin, Qianru Lao, Yong Cui, Minlan Yu, Michael Mitzenmacher |

Paper | |

Dynamic Vocabulary Pruning in Early-Exit LLMs Jort Vincenti, Karim Abdel Sadek, Joan Velja, Matteo Nulli, Metod Jazbec |

|

Github Paper |

CoreInfer: Accelerating Large Language Model Inference with Semantics-Inspired Adaptive Sparse Activation Qinsi Wang, Saeed Vahidian, Hancheng Ye, Jianyang Gu, Jianyi Zhang, Yiran Chen |

Github Paper |

|

DuoAttention: Efficient Long-Context LLM Inference with Retrieval and Streaming Heads Guangxuan Xiao, Jiaming Tang, Jingwei Zuo, Junxian Guo, Shang Yang, Haotian Tang, Yao Fu, Song Han |

|

Github Paper |

| DySpec: Faster Speculative Decoding with Dynamic Token Tree Structure Yunfan Xiong, Ruoyu Zhang, Yanzeng Li, Tianhao Wu, Lei Zou |

Paper | |

| QSpec: Speculative Decoding with Complementary Quantization Schemes Juntao Zhao, Wenhao Lu, Sheng Wang, Lingpeng Kong, Chuan Wu |

Paper | |

| TidalDecode: Fast and Accurate LLM Decoding with Position Persistent Sparse Attention Lijie Yang, Zhihao Zhang, Zhuofu Chen, Zikun Li, Zhihao Jia |

Paper | |

| ParallelSpec: Parallel Drafter for Efficient Speculative Decoding Zilin Xiao, Hongming Zhang, Tao Ge, Siru Ouyang, Vicente Ordonez, Dong Yu |

Paper | |

SWIFT: On-the-Fly Self-Speculative Decoding for LLM Inference Acceleration Heming Xia, Yongqi Li, Jun Zhang, Cunxiao Du, Wenjie Li |

|

Github Paper |

TurboRAG: Accelerating Retrieval-Augmented Generation with Precomputed KV Caches for Chunked Text Songshuo Lu, Hua Wang, Yutian Rong, Zhi Chen, Yaohua Tang |

|

Github Paper |

| A Little Goes a Long Way: Efficient Long Context Training and Inference with Partial Contexts Suyu Ge, Xihui Lin, Yunan Zhang, Jiawei Han, Hao Peng |

Paper | |

| Mnemosyne: Parallelization Strategies for Efficiently Serving Multi-Million Context Length LLM Inference Requests Without Approximations Amey Agrawal, Junda Chen, Íñigo Goiri, Ramachandran Ramjee, Chaojie Zhang, Alexey Tumanov, Esha Choukse |

Paper | |

Discovering the Gems in Early Layers: Accelerating Long-Context LLMs with 1000x Input Token Reduction Zhenmei Shi, Yifei Ming, Xuan-Phi Nguyen, Yingyu Liang, Shafiq Joty |

Github Paper |

|

| Dynamic-Width Speculative Beam Decoding for Efficient LLM Inference Zongyue Qin, Zifan He, Neha Prakriya, Jason Cong, Yizhou Sun |

Paper | |

CritiPrefill: A Segment-wise Criticality-based Approach for Prefilling Acceleration in LLMs Junlin Lv, Yuan Feng, Xike Xie, Xin Jia, Qirong Peng, Guiming Xie |

Github Paper |

|

| RetrievalAttention: Accelerating Long-Context LLM Inference via Vector Retrieval Di Liu, Meng Chen, Baotong Lu, Huiqiang Jiang, Zhenhua Han, Qianxi Zhang, Qi Chen, Chengruidong Zhang, Bailu Ding, Kai Zhang, Chen Chen, Fan Yang, Yuqing Yang, Lili Qiu |

Paper | |

Sirius: Contextual Sparsity with Correction for Efficient LLMs Yang Zhou, Zhuoming Chen, Zhaozhuo Xu, Victoria Lin, Beidi Chen |

Github Paper |

|

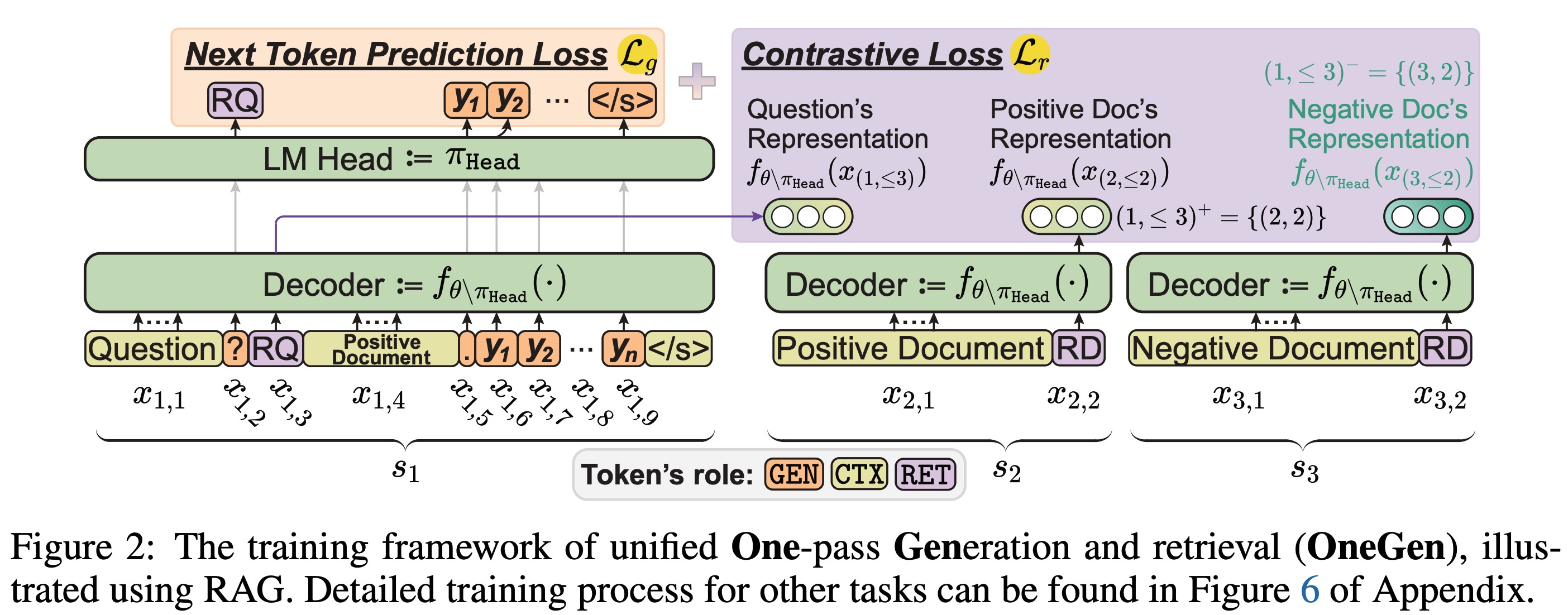

OneGen: Efficient One-Pass Unified Generation and Retrieval for LLMs Jintian Zhang, Cheng Peng, Mengshu Sun, Xiang Chen, Lei Liang, Zhiqiang Zhang, Jun Zhou, Huajun Chen, Ningyu Zhang |

|

Github Paper |

| Path-Consistency: Prefix Enhancement for Efficient Inference in LLM Jiace Zhu, Yingtao Shen, Jie Zhao, An Zou |

Paper | |

| Boosting Lossless Speculative Decoding via Feature Sampling and Partial Alignment Distillation Lujun Gui, Bin Xiao, Lei Su, Weipeng Chen |

Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

Fast Inference of Mixture-of-Experts Language Models with Offloading Artyom Eliseev, Denis Mazur |

|

Github Paper |

Condense, Don't Just Prune: Enhancing Efficiency and Performance in MoE Layer Pruning Mingyu Cao, Gen Li, Jie Ji, Jiaqi Zhang, Xiaolong Ma, Shiwei Liu, Lu Yin |

Github Paper |

|

| Mixture of Cache-Conditional Experts for Efficient Mobile Device Inference Andrii Skliar, Ties van Rozendaal, Romain Lepert, Todor Boinovski, Mart van Baalen, Markus Nagel, Paul Whatmough, Babak Ehteshami Bejnordi |

Paper | |

MoNTA: Accelerating Mixture-of-Experts Training with Network-Traffc-Aware Parallel Optimization Jingming Guo, Yan Liu, Yu Meng, Zhiwei Tao, Banglan Liu, Gang Chen, Xiang Li |

Github Paper |

|

MoE-I2: Compressing Mixture of Experts Models through Inter-Expert Pruning and Intra-Expert Low-Rank Decomposition Cheng Yang, Yang Sui, Jinqi Xiao, Lingyi Huang, Yu Gong, Yuanlin Duan, Wenqi Jia, Miao Yin, Yu Cheng, Bo Yuan |

Github Paper |

|

| HOBBIT: A Mixed Precision Expert Offloading System for Fast MoE Inference Peng Tang, Jiacheng Liu, Xiaofeng Hou, Yifei Pu, Jing Wang, Pheng-Ann Heng, Chao Li, Minyi Guo |

Paper | |

| ProMoE: Fast MoE-based LLM Serving using Proactive Caching Xiaoniu Song, Zihang Zhong, Rong Chen |

Paper | |

| ExpertFlow: Optimized Expert Activation and Token Allocation for Efficient Mixture-of-Experts Inference Xin He, Shunkang Zhang, Yuxin Wang, Haiyan Yin, Zihao Zeng, Shaohuai Shi, Zhenheng Tang, Xiaowen Chu, Ivor Tsang, Ong Yew Soon |

Paper | |

| EPS-MoE: Expert Pipeline Scheduler for Cost-Efficient MoE Inference Yulei Qian, Fengcun Li, Xiangyang Ji, Xiaoyu Zhao, Jianchao Tan, Kefeng Zhang, Xunliang Cai |

Paper | |

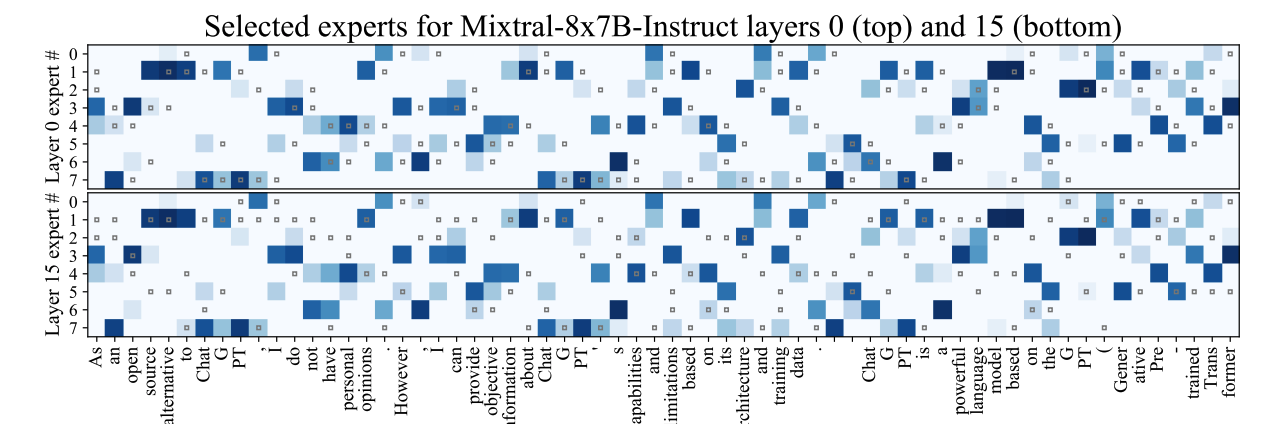

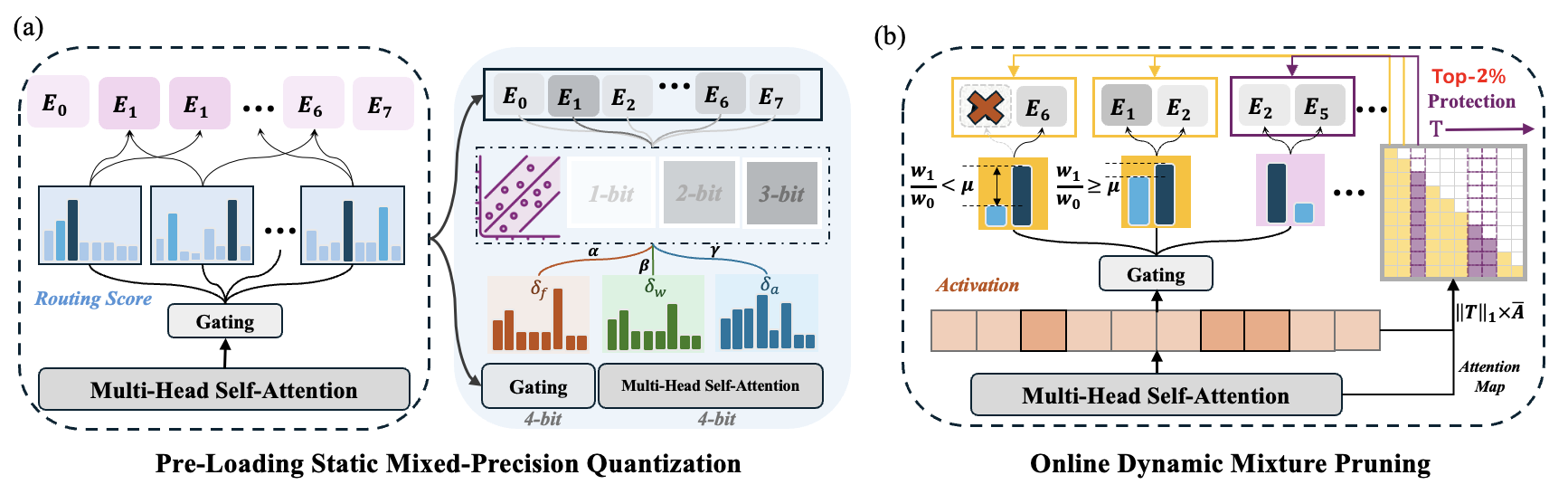

MC-MoE: Mixture Compressor for Mixture-of-Experts LLMs Gains More Wei Huang, Yue Liao, Jianhui Liu, Ruifei He, Haoru Tan, Shiming Zhang, Hongsheng Li, Si Liu, Xiaojuan Qi |

|

Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

MobiLlama: Towards Accurate and Lightweight Fully Transparent GPT Omkar Thawakar, Ashmal Vayani, Salman Khan, Hisham Cholakal, Rao M. Anwer, Michael Felsberg, Tim Baldwin, Eric P. Xing, Fahad Shahbaz Khan |

|

Github Paper Model |

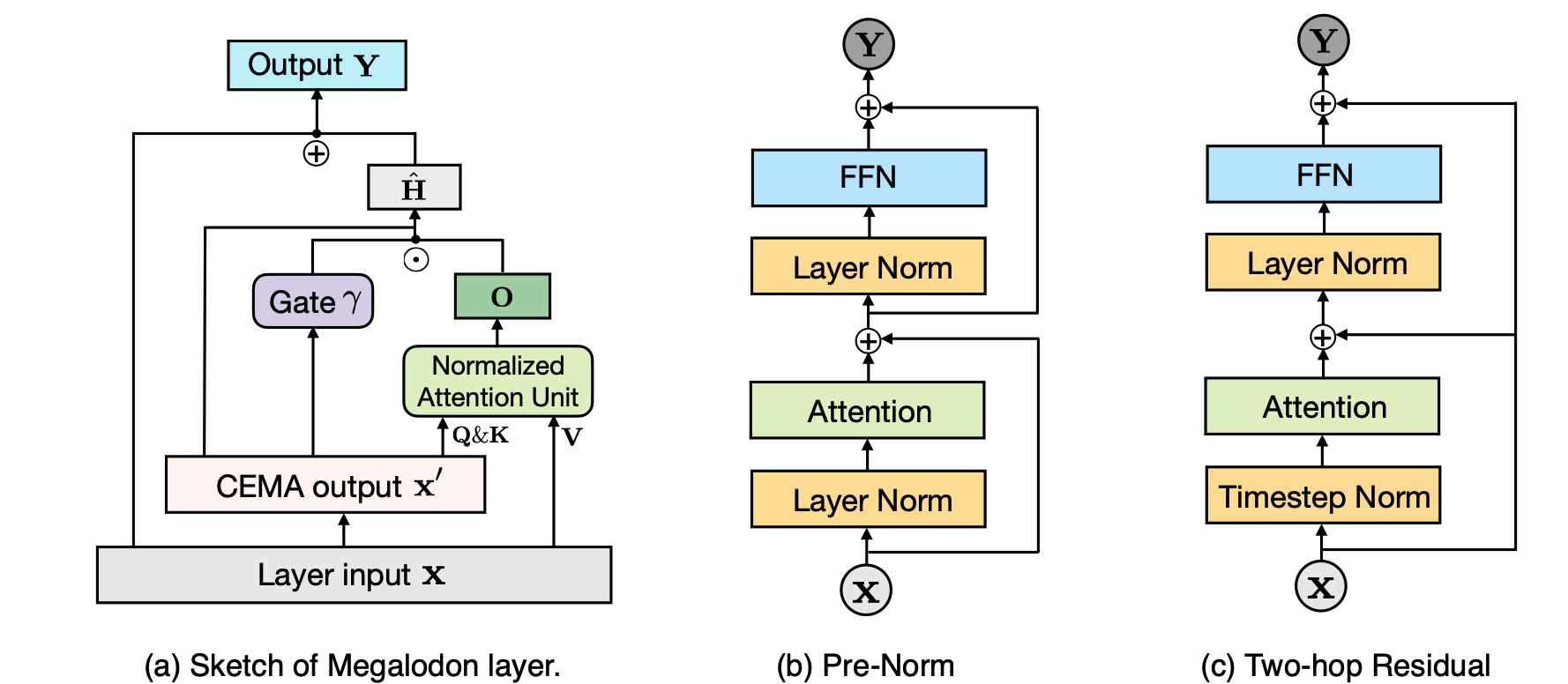

Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length Xuezhe Ma, Xiaomeng Yang, Wenhan Xiong, Beidi Chen, Lili Yu, Hao Zhang, Jonathan May, Luke Zettlemoyer, Omer Levy, Chunting Zhou |

|

Github Paper |

| Taipan: Efficient and Expressive State Space Language Models with Selective Attention Chien Van Nguyen, Huy Huu Nguyen, Thang M. Pham, Ruiyi Zhang, Hanieh Deilamsalehy, Puneet Mathur, Ryan A. Rossi, Trung Bui, Viet Dac Lai, Franck Dernoncourt, Thien Huu Nguyen |

Paper | |

SeerAttention: Learning Intrinsic Sparse Attention in Your LLMs Yizhao Gao, Zhichen Zeng, Dayou Du, Shijie Cao, Hayden Kwok-Hay So, Ting Cao, Fan Yang, Mao Yang |

Github Paper |

|

Basis Sharing: Cross-Layer Parameter Sharing for Large Language Model Compression Jingcun Wang, Yu-Guang Chen, Ing-Chao Lin, Bing Li, Grace Li Zhang |

Github Paper |

|

| Rodimus*: Breaking the Accuracy-Efficiency Trade-Off with Efficient Attentions Zhihao He, Hang Yu, Zi Gong, Shizhan Liu, Jianguo Li, Weiyao Lin |

Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Model Tells You What to Discard: Adaptive KV Cache Compression for LLMs Suyu Ge, Yunan Zhang, Liyuan Liu, Minjia Zhang, Jiawei Han, Jianfeng Gao |

|

Paper |

| ClusterKV: Manipulating LLM KV Cache in Semantic Space for Recallable Compression Guangda Liu, Chengwei Li, Jieru Zhao, Chenqi Zhang, Minyi Guo |

Paper | |

| Unifying KV Cache Compression for Large Language Models with LeanKV Yanqi Zhang, Yuwei Hu, Runyuan Zhao, John C.S. Lui, Haibo Chen |

Paper | |

| Compressing KV Cache for Long-Context LLM Inference with Inter-Layer Attention Similarity Da Ma, Lu Chen, Situo Zhang, Yuxun Miao, Su Zhu, Zhi Chen, Hongshen Xu, Hanqi Li, Shuai Fan, Lei Pan, Kai Yu |

Paper | |

| MiniKV: Pushing the Limits of LLM Inference via 2-Bit Layer-Discriminative KV Cache Akshat Sharma, Hangliang Ding, Jianping Li, Neel Dani, Minjia Zhang |

Paper | |

| TokenSelect: Efficient Long-Context Inference and Length Extrapolation for LLMs via Dynamic Token-Level KV Cache Selection Wei Wu, Zhuoshi Pan, Chao Wang, Liyi Chen, Yunchu Bai, Kun Fu, Zheng Wang, Hui Xiong |

Paper | |

Not All Heads Matter: A Head-Level KV Cache Compression Method with Integrated Retrieval and Reasoning Yu Fu, Zefan Cai, Abedelkadir Asi, Wayne Xiong, Yue Dong, Wen Xiao |

|

Github Paper |

BUZZ: Beehive-structured Sparse KV Cache with Segmented Heavy Hitters for Efficient LLM Inference Junqi Zhao, Zhijin Fang, Shu Li, Shaohui Yang, Shichao He |

Github Paper |

|

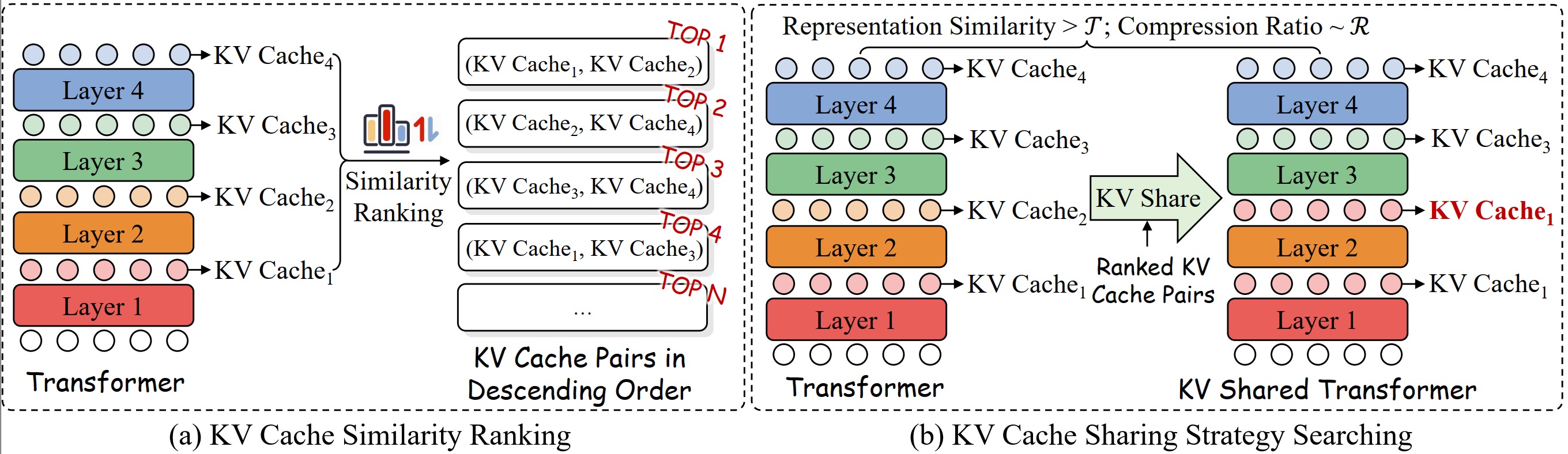

A Systematic Study of Cross-Layer KV Sharing for Efficient LLM Inference You Wu, Haoyi Wu, Kewei Tu |

|

Github Paper |

| Lossless KV Cache Compression to 2% Zhen Yang, J.N.Han, Kan Wu, Ruobing Xie, An Wang, Xingwu Sun, Zhanhui Kang |

Paper | |

| MatryoshkaKV: Adaptive KV Compression via Trainable Orthogonal Projection Bokai Lin, Zihao Zeng, Zipeng Xiao, Siqi Kou, Tianqi Hou, Xiaofeng Gao, Hao Zhang, Zhijie Deng |

Paper | |

Residual vector quantization for KV cache compression in large language model Ankur Kumar |

Github Paper |

|

KVSharer: Efficient Inference via Layer-Wise Dissimilar KV Cache Sharing Yifei Yang, Zouying Cao, Qiguang Chen, Libo Qin, Dongjie Yang, Hai Zhao, Zhi Chen |

|

Github Paper |

| LoRC: Low-Rank Compression for LLMs KV Cache with a Progressive Compression Strategy Rongzhi Zhang, Kuang Wang, Liyuan Liu, Shuohang Wang, Hao Cheng, Chao Zhang, Yelong Shen |

|

Paper |

| SwiftKV: Fast Prefill-Optimized Inference with Knowledge-Preserving Model Transformation Aurick Qiao, Zhewei Yao, Samyam Rajbhandari, Yuxiong He |

Paper | |

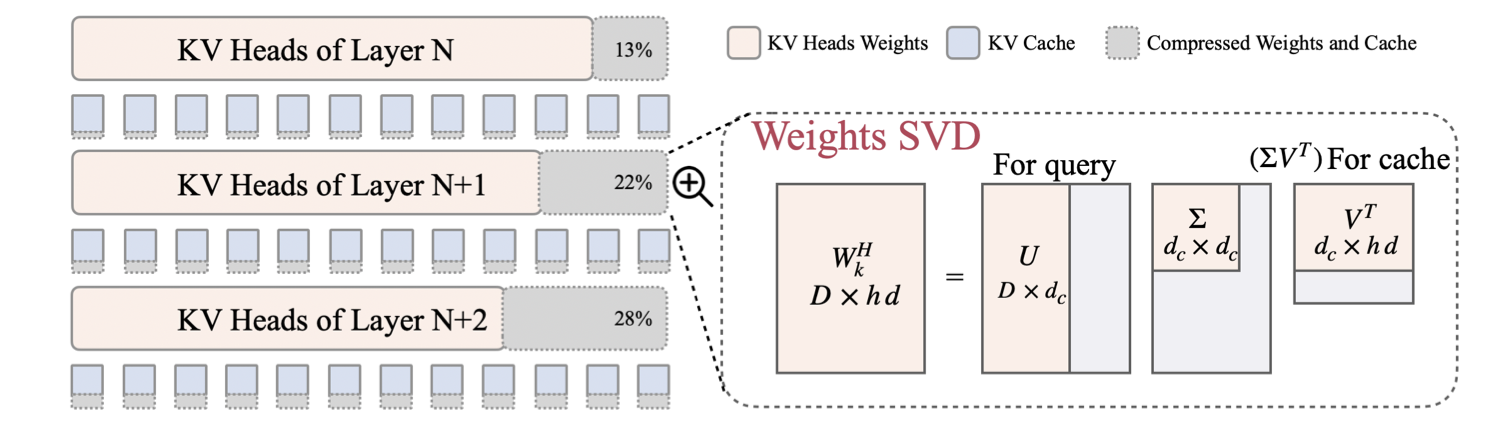

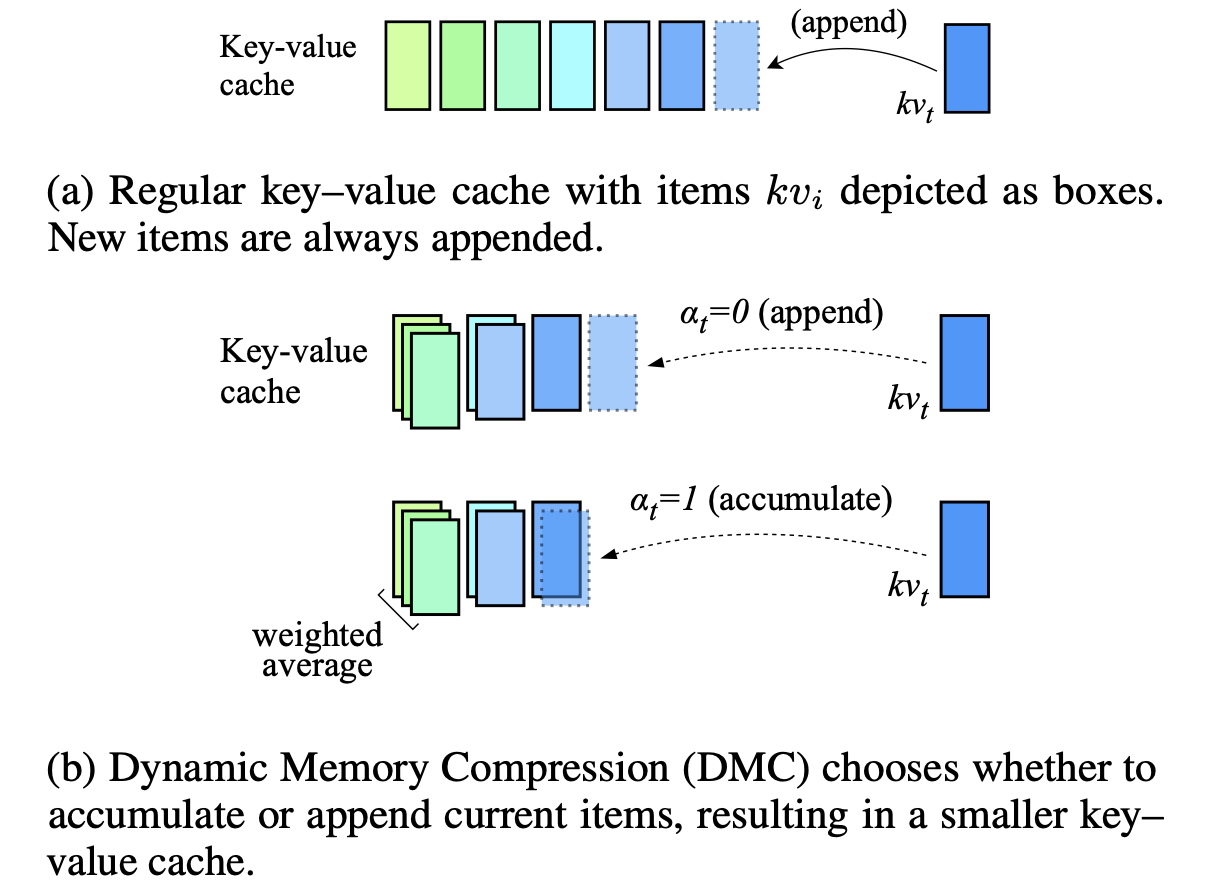

Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference Piotr Nawrot, Adrian Łańcucki, Marcin Chochowski, David Tarjan, Edoardo M. Ponti |

|

Paper |

| KV-Compress: Paged KV-Cache Compression with Variable Compression Rates per Attention Head Isaac Rehg |

Paper | |

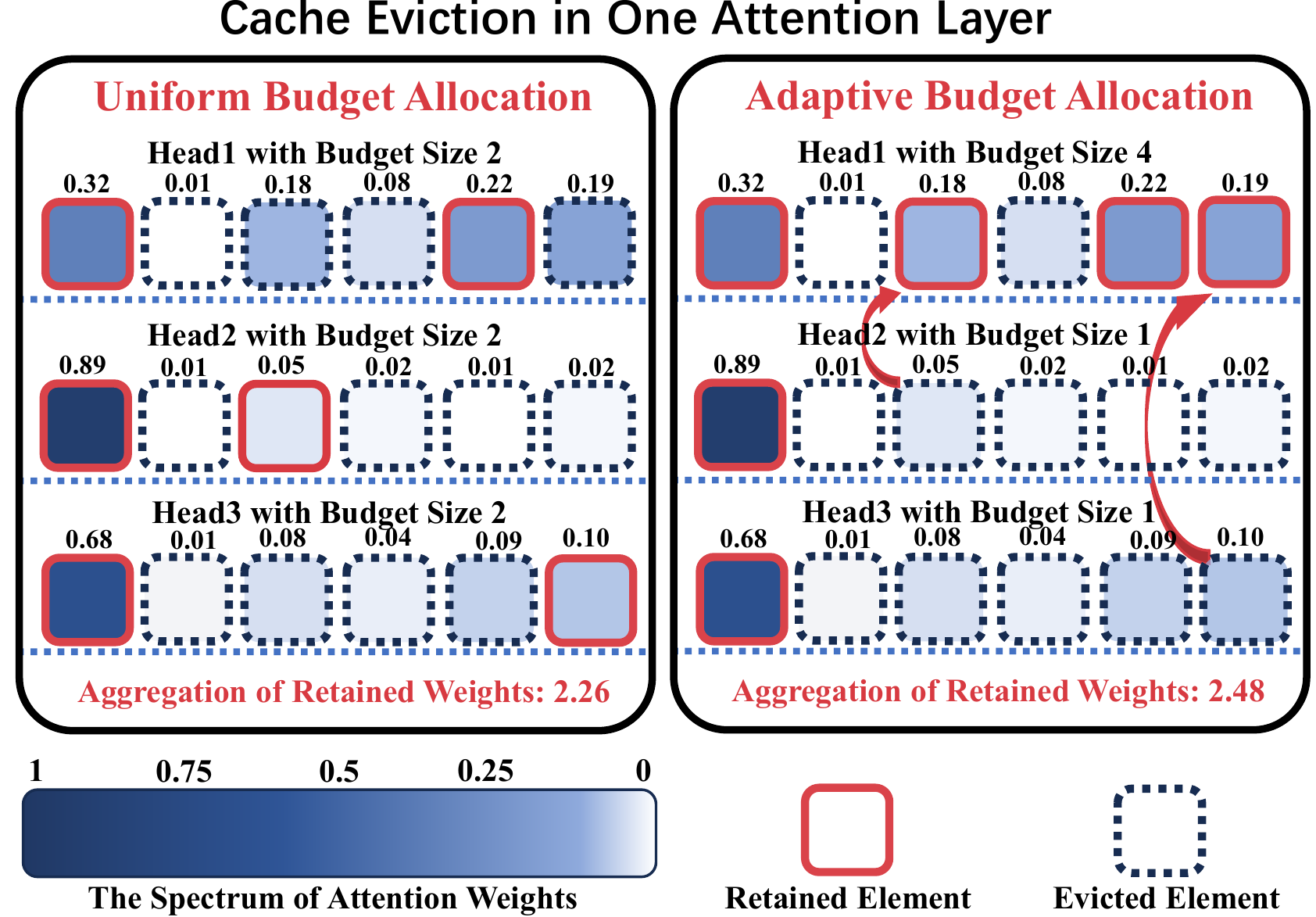

Ada-KV: Optimizing KV Cache Eviction by Adaptive Budget Allocation for Efficient LLM Inference Yuan Feng, Junlin Lv, Yukun Cao, Xike Xie, S. Kevin Zhou |

|

Github Paper |

AlignedKV: Reducing Memory Access of KV-Cache with Precision-Aligned Quantization Yifan Tan, Haoze Wang, Chao Yan, Yangdong Deng |

Github Paper |

|

| CSKV: Training-Efficient Channel Shrinking for KV Cache in Long-Context Scenarios Luning Wang, Shiyao Li, Xuefei Ning, Zhihang Yuan, Shengen Yan, Guohao Dai, Yu Wang |

Paper | |

| A First Look At Efficient And Secure On-Device LLM Inference Against KV Leakage Huan Yang, Deyu Zhang, Yudong Zhao, Yuanchun Li, Yunxin Liu |

Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

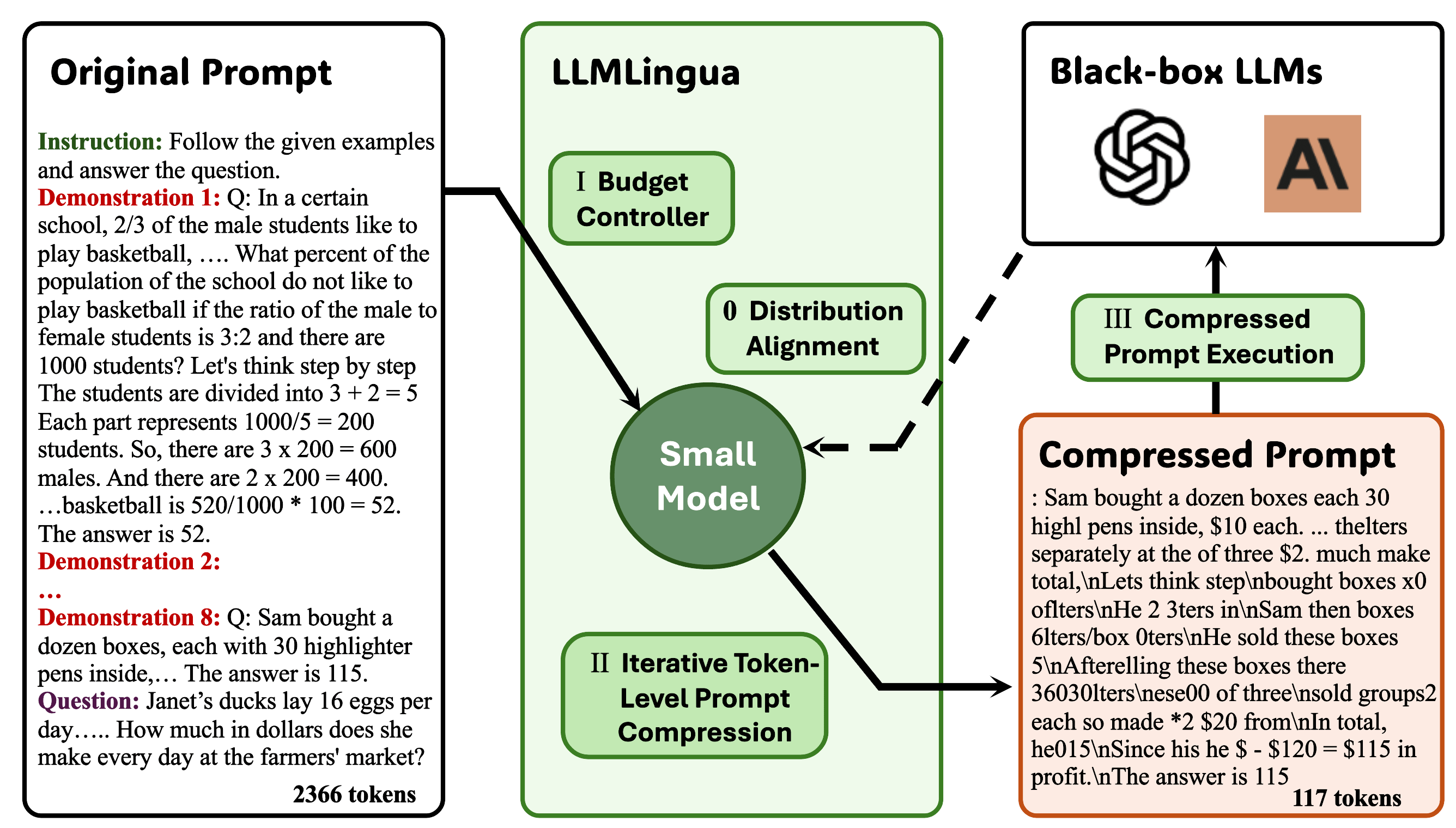

LLMLingua: Compressing Prompts for Accelerated Inference of Large Language Models Huiqiang Jiang, Qianhui Wu, Chin-Yew Lin, Yuqing Yang, Lili Qiu |

|

Github Paper |

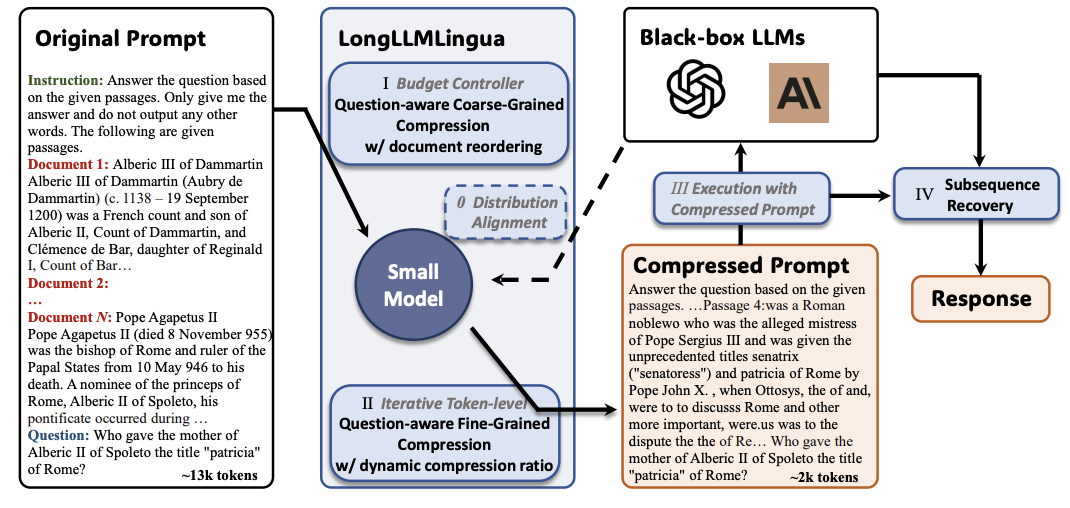

LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression Huiqiang Jiang, Qianhui Wu, Xufang Luo, Dongsheng Li, Chin-Yew Lin, Yuqing Yang, Lili Qiu |

|

Github Paper |

| JPPO: Joint Power and Prompt Optimization for Accelerated Large Language Model Services Feiran You, Hongyang Du, Kaibin Huang, Abbas Jamalipour |

Paper | |

Generative Context Distillation Haebin Shin, Lei Ji, Yeyun Gong, Sungdong Kim, Eunbi Choi, Minjoon Seo |

|

Github Paper |

MultiTok: Variable-Length Tokenization for Efficient LLMs Adapted from LZW Compression Noel Elias, Homa Esfahanizadeh, Kaan Kale, Sriram Vishwanath, Muriel Medard |

Github Paper |

|

Selection-p: Self-Supervised Task-Agnostic Prompt Compression for Faithfulness and Transferability Tsz Ting Chung, Leyang Cui, Lemao Liu, Xinting Huang, Shuming Shi, Dit-Yan Yeung |

Paper | |

From Reading to Compressing: Exploring the Multi-document Reader for Prompt Compression Eunseong Choi, Sunkyung Lee, Minjin Choi, June Park, Jongwuk Lee |

Paper | |

| Perception Compressor:A training-free prompt compression method in long context scenarios Jiwei Tang, Jin Xu, Tingwei Lu, Hai Lin, Yiming Zhao, Hai-Tao Zheng |

Paper | |

FineZip : Pushing the Limits of Large Language Models for Practical Lossless Text Compression Fazal Mittu, Yihuan Bu, Akshat Gupta, Ashok Devireddy, Alp Eren Ozdarendeli, Anant Singh, Gopala Anumanchipalli |

Github Paper |

|

Parse Trees Guided LLM Prompt Compression Wenhao Mao, Chengbin Hou, Tianyu Zhang, Xinyu Lin, Ke Tang, Hairong Lv |

Github Paper |

|

AlphaZip: Neural Network-Enhanced Lossless Text Compression Swathi Shree Narashiman, Nitin Chandrachoodan |

Github Paper |

|

| TACO-RL: Task Aware Prompt Compression Optimization with Reinforcement Learning Shivam Shandilya, Menglin Xia, Supriyo Ghosh, Huiqiang Jiang, Jue Zhang, Qianhui Wu, Victor Rühle |

Paper | |

| Efficient LLM Context Distillation Rajesh Upadhayayaya, Zachary Smith, Chritopher Kottmyer, Manish Raj Osti |

Paper | |

Enhancing and Accelerating Large Language Models via Instruction-Aware Contextual Compression Haowen Hou, Fei Ma, Binwen Bai, Xinxin Zhu, Fei Yu |

Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

Natural GaLore: Accelerating GaLore for memory-efficient LLM Training and Fine-tuning Arijit Das |

Github Paper |

|

| CompAct: Compressed Activations for Memory-Efficient LLM Training Yara Shamshoum, Nitzan Hodos, Yuval Sieradzki, Assaf Schuster |

Paper | |

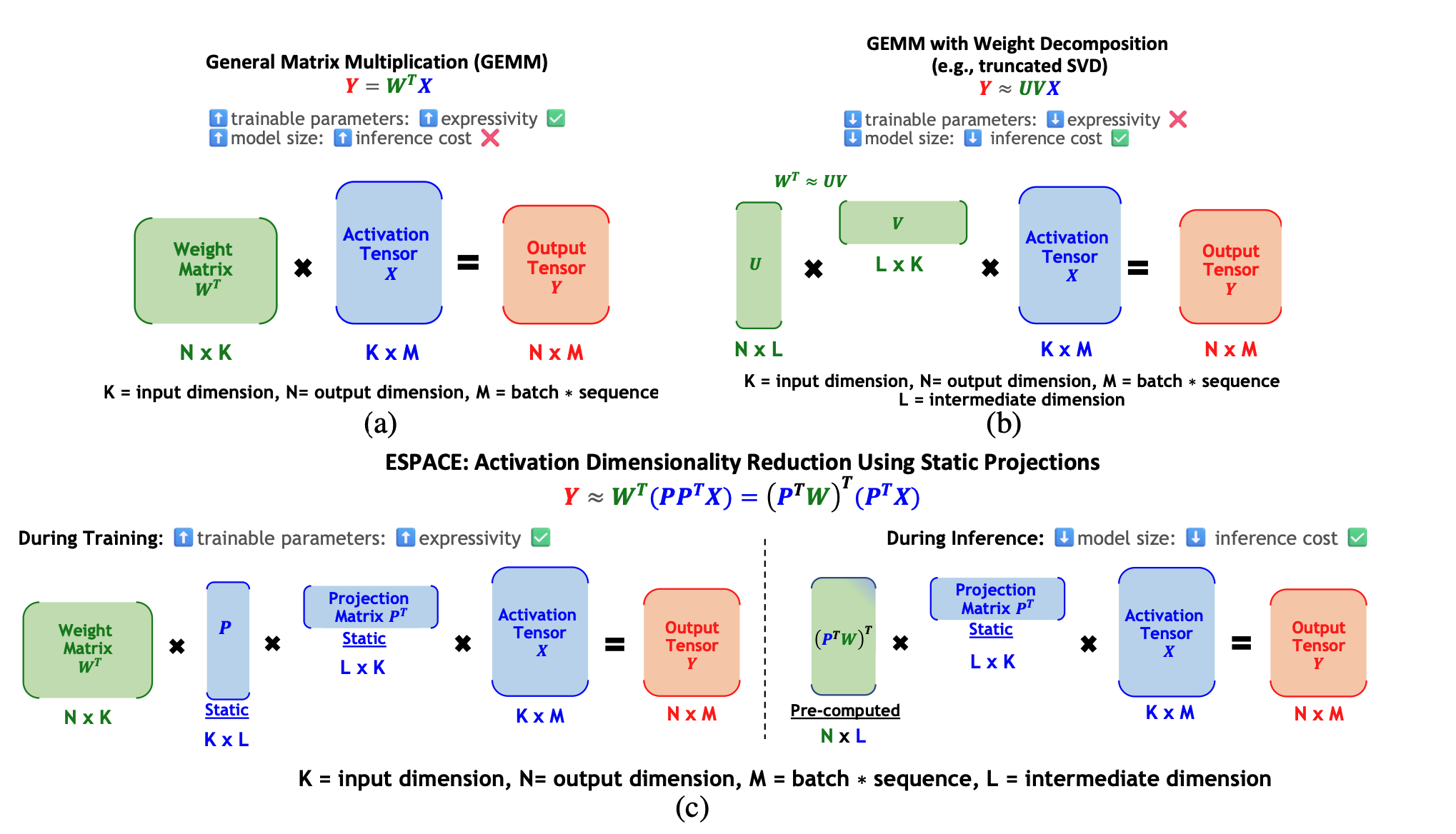

ESPACE: Dimensionality Reduction of Activations for Model Compression Charbel Sakr, Brucek Khailany |

|

Paper |

| Title & Authors | Introduction | Links |
|---|---|---|
| FastSwitch: Optimizing Context Switching Efficiency in Fairness-aware Large Language Model Serving <br> Ao Shen, Zhiyao Li, Mingyu Gao | | Paper |
| CE-CoLLM: Efficient and Adaptive Large Language Models Through Cloud-Edge Collaboration <br> Hongpeng Jin, Yanzhao Wu | | Paper |
| Ripple: Accelerating LLM Inference on Smartphones with Correlation-Aware Neuron Management <br> Tuowei Wang, Ruwen Fan, Minxing Huang, Zixu Hao, Kun Li, Ting Cao, Youyou Lu, Yaoxue Zhang, Ju Ren | | Paper |
| ALISE: Accelerating Large Language Model Serving with Speculative Scheduling <br> Youpeng Zhao, Jun Wang | | Paper |
| EPIC: Efficient Position-Independent Context Caching for Serving Large Language Models <br> Junhao Hu, Wenrui Huang, Haoyi Wang, Weidong Wang, Tiancheng Hu, Qin Zhang, Hao Feng, Xusheng Chen, Yizhou Shan, Tao Xie | | Paper |
| SDP4Bit: Toward 4-bit Communication Quantization in Sharded Data Parallelism for LLM Training <br> Jinda Jia, Cong Xie, Hanlin Lu, Daoce Wang, Hao Feng, Chengming Zhang, Baixi Sun, Haibin Lin, Zhi Zhang, Xin Liu, Dingwen Tao | | Paper |
| FastAttention: Extend FlashAttention2 to NPUs and Low-resource GPUs <br> Haoran Lin, Xianzhi Yu, Kang Zhao, Lu Hou, Zongyuan Zhan et al. | | Paper |
| POD-Attention: Unlocking Full Prefill-Decode Overlap for Faster LLM Inference <br> Aditya K Kamath, Ramya Prabhu, Jayashree Mohan, Simon Peter, Ramachandran Ramjee, Ashish Panwar | | Paper |
| TPI-LLM: Serving 70B-scale LLMs Efficiently on Low-resource Edge Devices <br> Zonghang Li, Wenjiao Feng, Mohsen Guizani, Hongfang Yu | | Github <br> Paper |
| Efficient Arbitrary Precision Acceleration for Large Language Models on GPU Tensor Cores <br> Shaobo Ma, Chao Fang, Haikuo Shao, Zhongfeng Wang | | Paper |
| OPAL: Outlier-Preserved Microscaling Quantization Accelerator for Generative Large Language Models <br> Jahyun Koo, Dahoon Park, Sangwoo Jung, Jaeha Kung | | Paper |
| Accelerating Large Language Model Training with Hybrid GPU-based Compression <br> Lang Xu, Quentin Anthony, Qinghua Zhou, Nawras Alnaasan, Radha R. Gulhane, Aamir Shafi, Hari Subramoni, Dhabaleswar K. Panda | | Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| HELENE: Hessian Layer-wise Clipping and Gradient Annealing for Accelerating Fine-tuning LLM with Zeroth-order Optimization Huaqin Zhao, Jiaxi Li, Yi Pan, Shizhe Liang, Xiaofeng Yang, Wei Liu, Xiang Li, Fei Dou, Tianming Liu, Jin Lu |

Paper | |

Robust and Efficient Fine-tuning of LLMs with Bayesian Reparameterization of Low-Rank Adaptation Ayan Sengupta, Vaibhav Seth, Arinjay Pathak, Natraj Raman, Sriram Gopalakrishnan, Tanmoy Chakraborty |

Github Paper |

|

MiLoRA: Efficient Mixture of Low-Rank Adaptation for Large Language Models Fine-tuning Jingfan Zhang, Yi Zhao, Dan Chen, Xing Tian, Huanran Zheng, Wei Zhu |

Paper | |

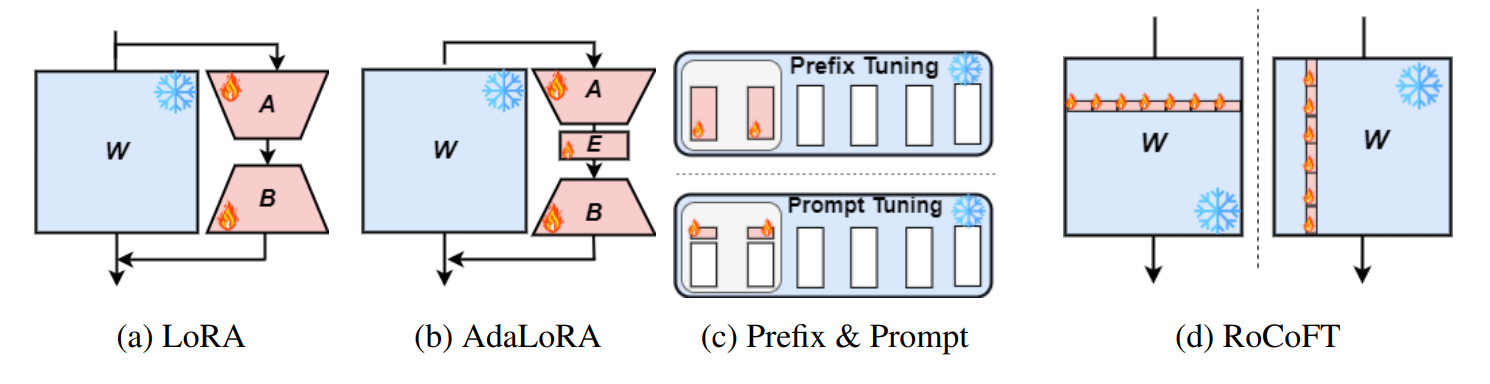

RoCoFT: Efficient Finetuning of Large Language Models with Row-Column Updates Md Kowsher, Tara Esmaeilbeig, Chun-Nam Yu, Mojtaba Soltanalian, Niloofar Yousefi |

|

Github Paper |

Layer-wise Importance Matters: Less Memory for Better Performance in Parameter-efficient Fine-tuning of Large Language Models Kai Yao, Penlei Gao, Lichun Li, Yuan Zhao, Xiaofeng Wang, Wei Wang, Jianke Zhu |

Github Paper |

|

Parameter-Efficient Fine-Tuning of Large Language Models using Semantic Knowledge Tuning Nusrat Jahan Prottasha, Asif Mahmud, Md. Shohanur Islam Sobuj, Prakash Bhat, Md Kowsher, Niloofar Yousefi, Ozlem Ozmen Garibay |

Paper | |

QEFT: Quantization for Efficient Fine-Tuning of LLMs Changhun Lee, Jun-gyu Jin, Younghyun Cho, Eunhyeok Park |

Github Paper |

|

BIPEFT: Budget-Guided Iterative Search for Parameter Efficient Fine-Tuning of Large Pretrained Language Models Aofei Chang, Jiaqi Wang, Han Liu, Parminder Bhatia, Cao Xiao, Ting Wang, Fenglong Ma |

Github Paper |

|

SparseGrad: A Selective Method for Efficient Fine-tuning of MLP Layers Viktoriia Chekalina, Anna Rudenko, Gleb Mezentsev, Alexander Mikhalev, Alexander Panchenko, Ivan Oseledets |

Github Paper |

|

| SpaLLM: Unified Compressive Adaptation of Large Language Models with Sketching Tianyi Zhang, Junda Su, Oscar Wu, Zhaozhuo Xu, Anshumali Shrivastava |

Paper | |

Bone: Block Affine Transformation as Parameter Efficient Fine-tuning Methods for Large Language Models Jiale Kang |

Github Paper |

|

| Enabling Resource-Efficient On-Device Fine-Tuning of LLMs Using Only Inference Engines Lei Gao, Amir Ziashahabi, Yue Niu, Salman Avestimehr, Murali Annavaram |

|

Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| AutoMixQ: Self-Adjusting Quantization for High Performance Memory-Efficient Fine-Tuning Changhai Zhou, Shiyang Zhang, Yuhua Zhou, Zekai Liu, Shichao Weng |

|

Paper |

Scalable Efficient Training of Large Language Models with Low-dimensional Projected Attention Xingtai Lv, Ning Ding, Kaiyan Zhang, Ermo Hua, Ganqu Cui, Bowen Zhou |

Github Paper |

|

| Less is More: Extreme Gradient Boost Rank-1 Adaption for Efficient Finetuning of LLMs Yifei Zhang, Hao Zhu, Aiwei Liu, Han Yu, Piotr Koniusz, Irwin King |

Paper | |

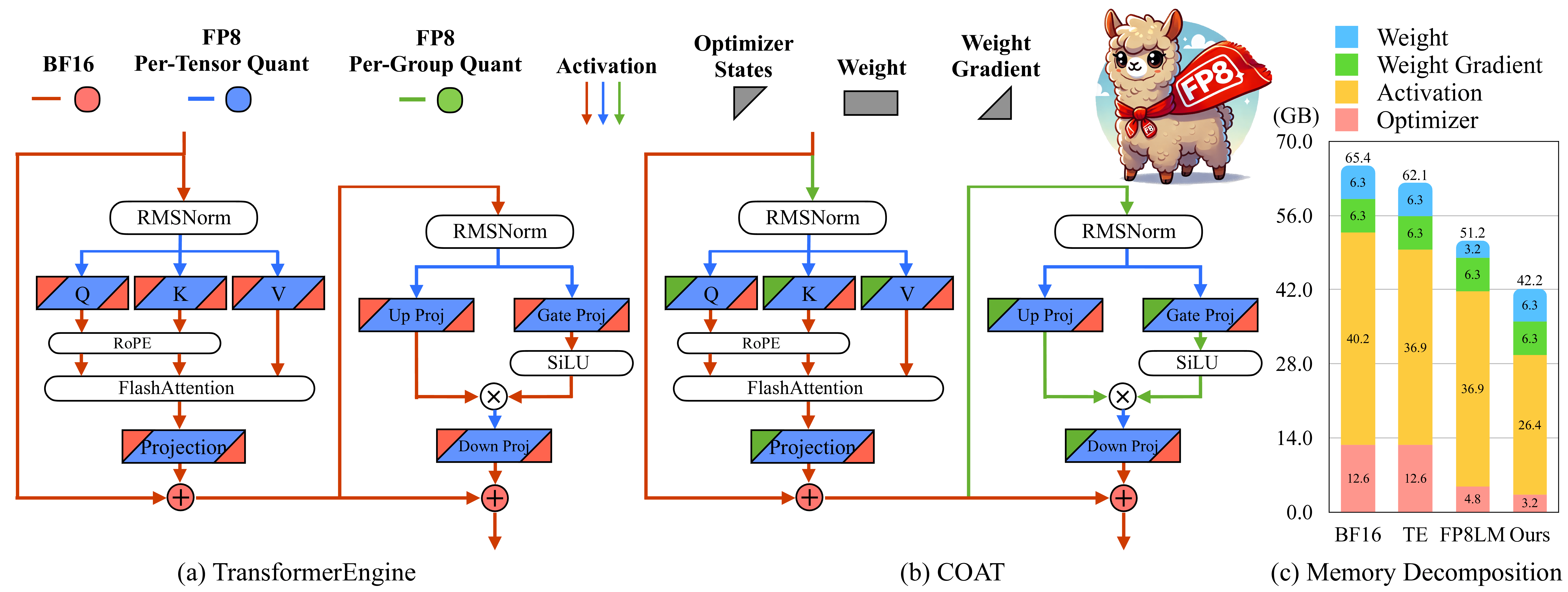

COAT: Compressing Optimizer states and Activation for Memory-Efficient FP8 Training Haocheng Xi, Han Cai, Ligeng Zhu, Yao Lu, Kurt Keutzer, Jianfei Chen, Song Han |

|

Github Paper |

BitPipe: Bidirectional Interleaved Pipeline Parallelism for Accelerating Large Models Training Houming Wu, Ling Chen, Wenjie Yu |

|

Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

| Closer Look at Efficient Inference Methods: A Survey of Speculative Decoding Hyun Ryu, Eric Kim |

Paper | |

LLM-Inference-Bench: Inference Benchmarking of Large Language Models on AI Accelerators Krishna Teja Chitty-Venkata, Siddhisanket Raskar, Bharat Kale, Farah Ferdaus et al |

Github Paper |

|

Prompt Compression for Large Language Models: A Survey Zongqian Li, Yinhong Liu, Yixuan Su, Nigel Collier |

Github Paper |

|

| Large Language Model Inference Acceleration: A Comprehensive Hardware Perspective Jinhao Li, Jiaming Xu, Shan Huang, Yonghua Chen, Wen Li, Jun Liu, Yaoxiu Lian, Jiayi Pan, Li Ding, Hao Zhou, Guohao Dai |

Paper | |

| A Survey of Low-bit Large Language Models: Basics, Systems, and Algorithms Ruihao Gong, Yifu Ding, Zining Wang, Chengtao Lv, Xingyu Zheng, Jinyang Du, Haotong Qin, Jinyang Guo, Michele Magno, Xianglong Liu |

Paper | |

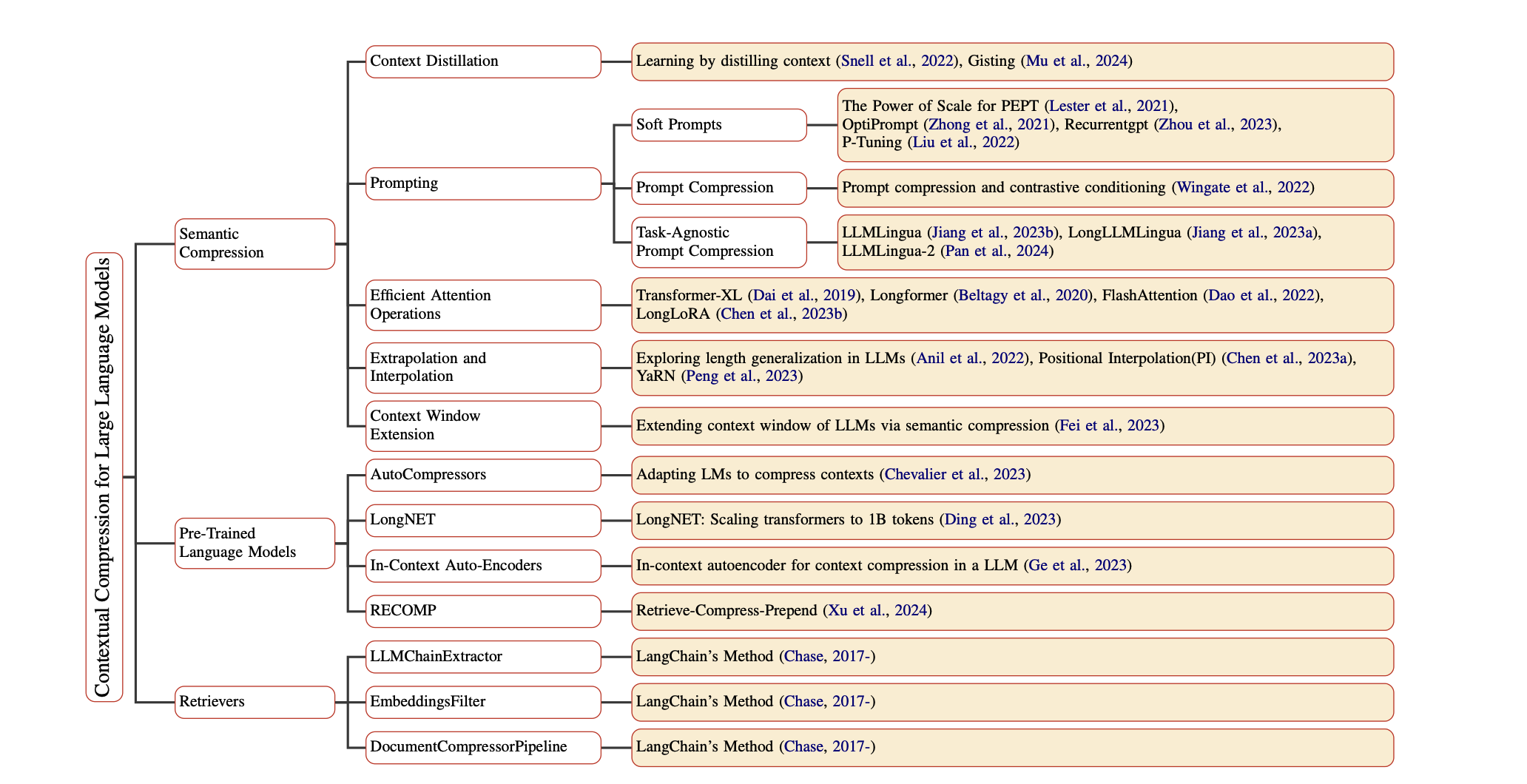

Contextual Compression in Retrieval-Augmented Generation for Large Language Models: A Survey Sourav Verma |

|

Github Paper |

| Art and Science of Quantizing Large-Scale Models: A Comprehensive Overview Yanshu Wang, Tong Yang, Xiyan Liang, Guoan Wang, Hanning Lu, Xu Zhe, Yaoming Li, Li Weitao |

Paper | |

| Hardware Acceleration of LLMs: A comprehensive survey and comparison Nikoletta Koilia, Christoforos Kachris |

Paper | |

| A Survey on Symbolic Knowledge Distillation of Large Language Models Kamal Acharya, Alvaro Velasquez, Houbing Herbert Song |

Paper |