At GTC 2025, Nvidia officially announced its next-generation artificial intelligence (AI) chip platform, "Vera Rubin". The name honors the American astronomer Vera Rubin and continues Nvidia's tradition of naming its architectures after scientists. The first product in the series, Vera Rubin NVL144, is expected to be released in the second half of 2026.

Nvidia CEO Jensen Huang said that Rubin's performance will reach 900 times that of the Hopper architecture. By comparison, the newer Blackwell architecture delivers a 68-fold improvement over Hopper, which implies that Rubin would be roughly 13 times faster than Blackwell by the same measure (900 / 68 ≈ 13.2).

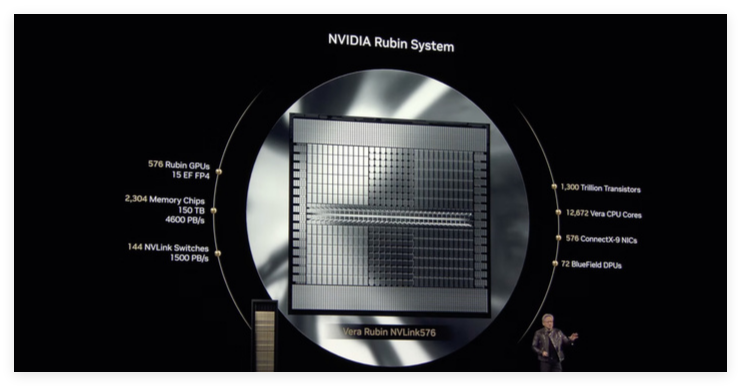

According to Nvidia's official figures, the Vera Rubin NVL144 delivers up to 3.6 exaFLOPS of inference compute at FP4 precision and 1.2 exaFLOPS of training compute at FP8 precision, 3.3 times the performance of the GB300 NVL72. Rubin will be equipped with HBM4 memory, with 13 TB/s of bandwidth and 75 TB of fast memory, 1.6 times that of the previous generation. For interconnect, Rubin supports NVLink 6 and CX9, with bandwidths of 260 TB/s and 28.8 TB/s respectively, each twice that of the previous generation.
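The multipliers quoted above can be turned around to estimate the previous-generation values they imply. The following is a minimal sketch of that arithmetic, using only the numbers in this article. It assumes the 3.3x figure refers to FP4 inference throughput, and the names `quoted` and `implied_baseline` are illustrative. The results are back-calculated estimates, not official GB300 NVL72 specifications.

```python
# Figures quoted in the article for Vera Rubin NVL144, each paired with the
# stated multiplier over the previous generation.
# Assumption: the 3.3x figure applies to FP4 inference throughput.
quoted = {
    "fp4_inference_exaflops": (3.6, 3.3),
    "nvlink6_tb_per_s": (260.0, 2.0),
    "cx9_tb_per_s": (28.8, 2.0),
}


def implied_baseline(value: float, multiplier: float) -> float:
    """Back out the previous-generation value from a quoted multiplier."""
    return value / multiplier


baselines = {name: implied_baseline(v, m) for name, (v, m) in quoted.items()}

for name, base in baselines.items():
    print(f"{name}: ~{base:.2f}")
```

Under these assumptions the implied previous-generation figures are about 1.09 exaFLOPS for FP4 inference, 130 TB/s for NVLink, and 14.4 TB/s for the network interface.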

The standard version of the Rubin chip will use HBM4 memory, and its overall performance is expected to far exceed that of the Hopper-generation H100.

The Rubin platform will also introduce a brand-new CPU called Vera as the successor to the Grace CPU. Vera contains 88 custom Arm cores supporting a total of 176 threads (two per core) and provides a high-bandwidth connection of up to 1.8 TB/s via NVLink-C2C. Nvidia says the custom Vera CPU will be twice as fast as the CPU used in last year's Grace Blackwell chips.

When paired with the Vera CPU, Rubin can deliver 50 petaflops in inference tasks, more than twice Blackwell's 20 petaflops (a factor of 2.5). In addition, Rubin will support up to 288 GB of HBM4 memory, which is crucial for developers working with large AI models.

Like Blackwell, Rubin actually consists of two GPUs that are joined through advanced packaging technology to operate as a single chip, further improving overall computing efficiency and performance. The Rubin announcement once again demonstrates Nvidia's capacity for innovation in AI chips and its insight into future demand for computing power.
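As a rough consistency check, the per-chip and rack-level inference figures quoted in this article can be reconciled with a few lines of arithmetic. The sketch below rests on two assumptions that the article does not state explicitly: that "144" in the NVL144 name counts individual GPU dies, and that the 50-petaflop per-chip figure is measured at the same FP4 precision as the rack-level number. The constant names are illustrative.

```python
# Inputs are figures quoted in the article, except where marked as assumed.
GPU_DIES_PER_RACK = 144        # assumed: "NVL144" counts individual GPU dies
DIES_PER_RUBIN_PACKAGE = 2     # "Rubin actually consists of two GPUs"
PETAFLOPS_PER_PACKAGE = 50     # quoted per-chip inference figure (assumed FP4)
QUOTED_RACK_EXAFLOPS = 3.6     # quoted FP4 inference figure for the full rack

# Number of dual-die Rubin packages in one rack.
packages = GPU_DIES_PER_RACK // DIES_PER_RUBIN_PACKAGE

# 1 exaflop = 1000 petaflops.
rack_exaflops = packages * PETAFLOPS_PER_PACKAGE / 1000

print(f"packages per rack: {packages}")
print(f"derived rack inference compute: {rack_exaflops:.1f} exaFLOPS")
print(f"quoted rack inference compute:  {QUOTED_RACK_EXAFLOPS:.1f} exaFLOPS")
```

Under these assumptions, 72 packages at 50 petaflops each give 3.6 exaFLOPS, which matches the quoted rack-level figure.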