Factorio, a complex computer game centered on construction and resource management, has recently become an important tool for researchers evaluating artificial intelligence capabilities. The game requires players to plan and build complex systems while managing multiple resources and production chains at the same time, which makes it an effective test of how language models perform in complex environments. Because it simulates resource allocation and production processes similar to those in the real world, Factorio offers a highly challenging platform for AI research.

To evaluate AI capabilities more systematically, the research team developed a system called the Factorio Learning Environment (FLE). It provides two test modes: "Experimental Mode" and "Open Mode". In Experimental Mode, AI agents must complete 24 structured challenges, each with a specific goal and limited resources, ranging from simple setups of two machines to complex factories of nearly a hundred machines. In Open Mode, AI agents freely explore procedurally generated maps with a single goal: build the largest factory possible. The two modes test AI performance in constrained and unconstrained environments, respectively.

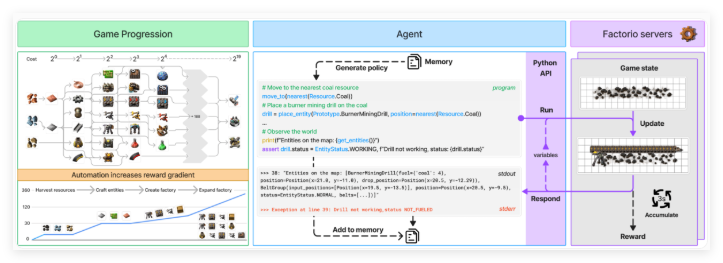

The AI agent interacts with Factorio through a Python API, generating code that performs actions and checks the game state. The API lets the agent place and connect components, manage resources, and monitor production progress. This setup allows the research team to test how well language models synthesize programs and handle complex systems. The API is designed so that agents go through decision-making processes in the game that resemble real-world ones, which gives researchers rich data.
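The interaction pattern described above, where the agent writes a program, the environment runs it, and the agent reads the result, can be sketched roughly as follows. This is a minimal illustration only: `MockGame`, `place_entity`, `inspect_inventory`, and `run_agent_program` are hypothetical names invented for this sketch and are not taken from the actual FLE API.

```python
# Hypothetical sketch: none of these names come from the real FLE API.
from dataclasses import dataclass, field


@dataclass
class MockGame:
    """Toy stand-in for a game server that tracks inventory and placed entities."""
    inventory: dict = field(
        default_factory=lambda: {"burner-mining-drill": 1, "stone-furnace": 1}
    )
    entities: list = field(default_factory=list)

    def place_entity(self, name: str, x: int, y: int) -> dict:
        # Placing an entity consumes one item of that type from the inventory.
        if self.inventory.get(name, 0) <= 0:
            raise ValueError(f"no {name} in inventory")
        self.inventory[name] -= 1
        entity = {"name": name, "position": (x, y)}
        self.entities.append(entity)
        return entity

    def inspect_inventory(self) -> dict:
        # Return a copy so agent code cannot mutate the game state directly.
        return dict(self.inventory)


def run_agent_program(game: MockGame, program: str) -> str:
    """Execute model-written code against the game and return feedback text."""
    namespace = {
        "place_entity": game.place_entity,
        "inspect_inventory": game.inspect_inventory,
    }
    try:
        exec(program, namespace)
        return "ok"
    except Exception as exc:
        # Errors are returned as text so the agent can attempt a correction.
        return f"error: {exc}"


game = MockGame()
program = """
drill = place_entity("burner-mining-drill", 0, 0)
furnace = place_entity("stone-furnace", 0, 2)
"""
first_feedback = run_agent_program(game, program)
# The only furnace is already placed, so this second program fails.
second_feedback = run_agent_program(game, 'place_entity("stone-furnace", 4, 4)')
print(first_feedback)
print(second_feedback)
```

Returning errors as plain text rather than raising them mirrors the feedback loop an agent needs in order to debug its own programs, which is the error-correction ability the benchmark probes.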

To evaluate the agents, the researchers used two key metrics: "production score" and "milestones". The production score measures the value of total output and grows exponentially as production chains become more complex; milestones track important achievements such as creating a new item or researching a technology. The game's economic simulation also accounts for factors such as resource scarcity, market price, and production efficiency, which makes the assessment more comprehensive and realistic.
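As a rough illustration of how these two metrics might be computed, the sketch below sums item counts weighted by per-item values and records which item types appear for the first time. The item values and the function names `production_score` and `new_milestones` are assumptions made for this example; they are not the values or code used by FLE.

```python
# Illustrative item values: assumptions for this sketch, not FLE's figures.
# More processed items are worth more, so deeper chains score higher.
ITEM_VALUES = {"iron-ore": 1.0, "iron-plate": 3.0, "iron-gear-wheel": 8.0}


def production_score(produced: dict) -> float:
    """Sum the value of everything produced (unknown items count as 0)."""
    return sum(ITEM_VALUES.get(item, 0.0) * count for item, count in produced.items())


def new_milestones(produced: dict, seen: set) -> set:
    """Return item types produced for the first time and record them in `seen`."""
    fresh = {item for item, count in produced.items() if count > 0 and item not in seen}
    seen |= fresh
    return fresh


seen = set()
step1 = {"iron-ore": 10}
step2 = {"iron-ore": 10, "iron-plate": 5}

score1 = production_score(step1)   # 10 * 1.0 = 10.0
score2 = production_score(step2)   # 10 * 1.0 + 5 * 3.0 = 25.0
m1 = new_milestones(step1, seen)   # first time iron-ore is produced
m2 = new_milestones(step2, seen)   # only iron-plate is new here
print(score1, score2, m1, m2)
```

In this toy version the score rewards moving up the production chain, while the milestone set only grows when a genuinely new item type appears.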

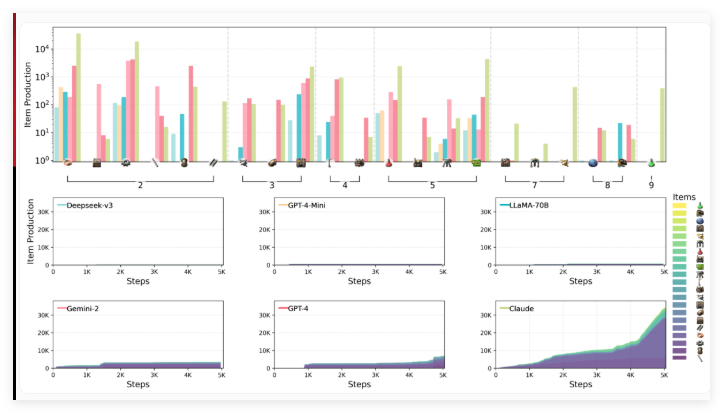

The research team, which includes scientists from Anthropic, evaluated six leading language models in FLE: Claude 3.5 Sonnet, GPT-4o, GPT-4o-mini, DeepSeek-V3, Gemini 2.0 Flash, and Llama-3.3-70B-Instruct. Large reasoning models (LRMs) were not included in this round of tests, although earlier benchmarks showed that models such as o1 perform well at planning while still having limitations.

The results show that the evaluated language models face significant challenges in spatial reasoning, long-term planning, and error correction. When building a factory, the agents struggle to arrange and connect machines efficiently, which leads to suboptimal layouts and production bottlenecks. Strategic thinking is also a weak point: the models generally prioritize short-term goals over long-term planning. And while they can handle basic troubleshooting, they tend to fall into inefficient debugging loops when facing more complex problems.

Among the models tested, Claude 3.5 Sonnet performed best, but it still did not master every challenge. In Experimental Mode, Claude completed 15 of the 24 tasks, while the other models completed at most 10. In the open test, Claude reached a production score of 2456 points, followed by GPT-4o with 1789 points. Claude engaged with Factorio's more complex gameplay, using a strategic approach to manufacturing and research to move quickly from basic products to complex production processes. In particular, its upgrade to electric mining drills significantly increased iron plate production speed.

The researchers believe that FLE's open-ended and scalable design makes it valuable for testing more powerful language models in the future. They suggest extending the environment to include multi-agent scenarios and human performance baselines to provide better context for evaluation. This work adds to the growing collection of game-based AI benchmarks, which also includes BALROG and the upcoming MCBench, which will test models using Minecraft.

Factorio Learning Environment: https://top.aibase.com/tool/factorio-learning-environment

Key points:

Factorio has become a new tool for evaluating AI, testing how well language models manage complex systems.

The Factorio Learning Environment (FLE) provides an Experimental Mode and an Open Mode, which challenge AI agents under different conditions.

Tests show that Claude 3.5 Sonnet performs best, but it still struggles with long-term planning and complex problems.