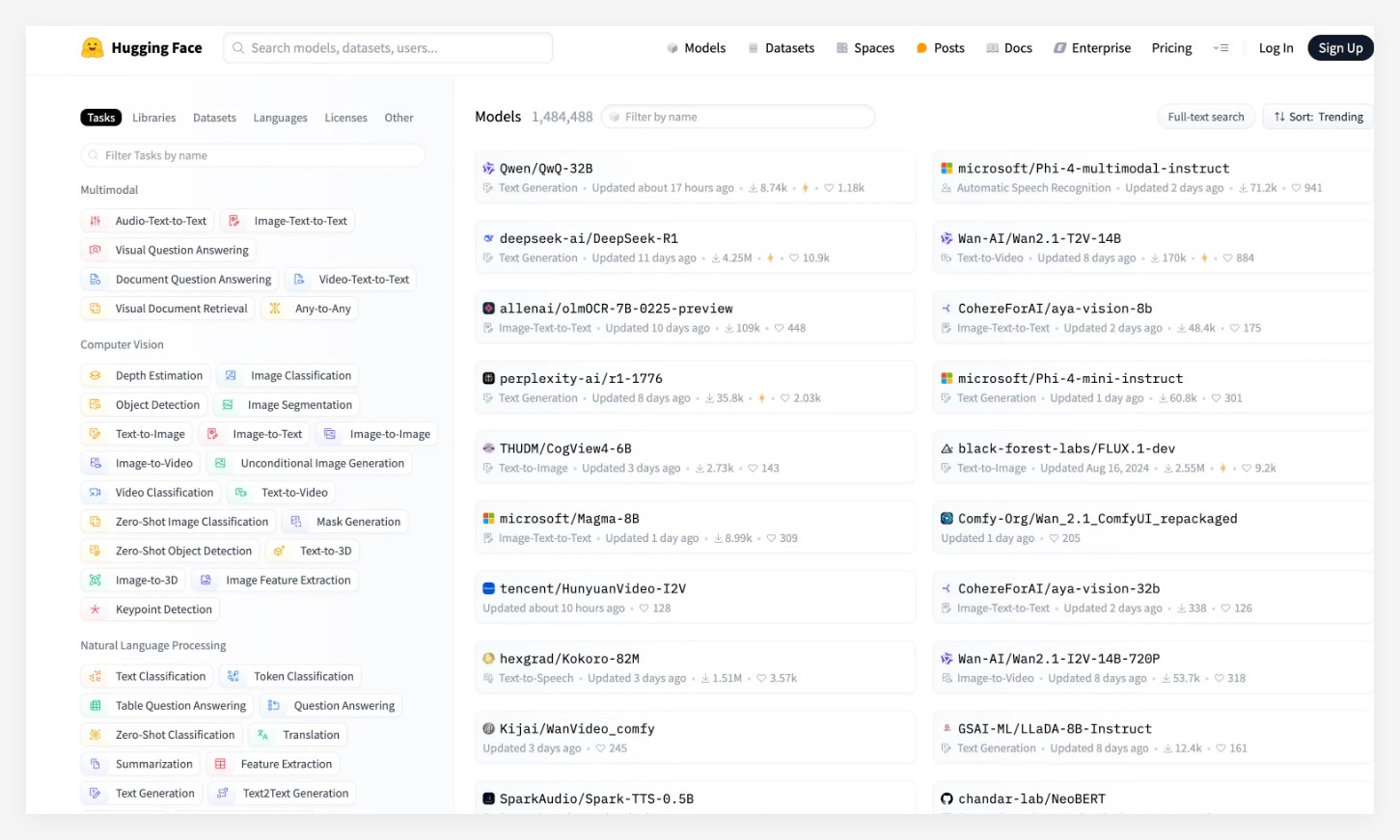

In the latest rankings on HuggingFace, the world's largest open-source AI community, Alibaba's recently released Tongyi Qianwen reasoning model QwQ-32B took first place on the large-model leaderboard. The model has drawn widespread attention since its release, surpassing well-known models such as Microsoft's Phi-4 and DeepSeek-R1.

QwQ-32B shows marked gains in mathematics, coding and general capabilities. Notably, despite its relatively small parameter count, its overall performance is comparable to that of DeepSeek-R1. The model is also designed so that users can deploy it locally on consumer-grade graphics cards, which greatly reduces the cost of running it and gives more users a convenient, economical option for AI applications.
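To give a rough sense of why parameter count matters for local deployment, the sketch below estimates the memory needed just to hold the model weights at different numeric precisions. It is a back-of-the-envelope calculation, not a figure from Alibaba: the parameter count of about 32 billion is an assumption taken from the model's name, the function name `weight_memory_gib` is made up for illustration, and the estimate ignores activations, the KV cache and framework overhead.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 2**30


# Assumption: roughly 32 billion parameters, as the model name suggests.
NUM_PARAMS = 32e9

if __name__ == "__main__":
    # Precisions chosen for illustration; the article does not say which one is used.
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label}: ~{weight_memory_gib(NUM_PARAMS, bits):.1f} GiB")
```

Under these assumptions the weights alone need about 60 GiB at 16-bit precision and about 15 GiB at 4-bit precision, so fitting the model on a single consumer card would most likely depend on a reduced-precision version of the weights.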

Across multiple authoritative benchmarks, QwQ-32B performed very well, outscoring OpenAI's o1-mini on nearly all of them and matching DeepSeek-R1. On the AIME24 evaluation set for mathematical ability and on LiveCodeBench in particular, QwQ-32B scores on par with DeepSeek-R1 and far ahead of both o1-mini and the R1-distilled model of the same size.

QwQ-32B has been open-sourced on ModelScope, HuggingFace and GitHub under the permissive Apache 2.0 license, so anyone can download it and deploy it locally for free. Users can also call the model directly as an API service through the Alibaba Cloud Bailian platform.

Key points: QwQ-32B ranks first on the HuggingFace leaderboard, surpassing several well-known models. The model delivers gains in both performance and deployment cost, and it can be deployed locally on consumer-grade graphics cards. It performs strongly on multiple benchmarks, on par with the top-performing DeepSeek-R1.