Benchmarking in artificial intelligence has recently become a focus of public attention. An OpenAI employee publicly accused xAI, the AI company founded by Elon Musk, of publishing misleading benchmark results for its Grok 3 model. xAI co-founder Igor Babuschkin rejected the accusation and insisted that the company's results were sound.

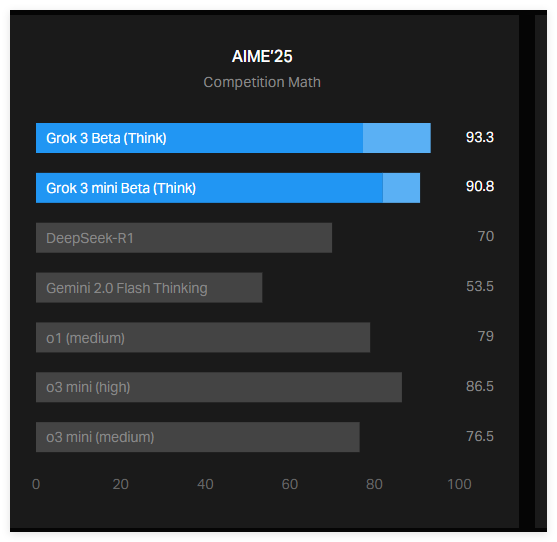

The controversy was triggered by a graph on xAI's official blog showing how Grok 3 performed on AIME 2025, a set of difficult problems drawn from an invitational mathematics exam. Although some experts question the validity of AIME as an AI benchmark, it is still widely used to evaluate the mathematical capabilities of AI models.

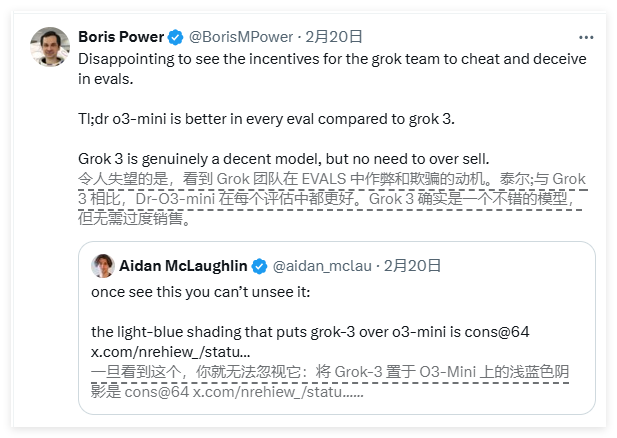

According to the chart released by xAI, two versions of Grok 3, Grok 3 Reasoning Beta and Grok 3 mini Reasoning, outperformed OpenAI's best available model, o3-mini-high, on AIME 2025. However, OpenAI employees quickly pointed out that xAI's chart omits o3-mini-high's AIME 2025 score under the "cons@64" method, an omission that may make the comparison misleading.

So what exactly is "cons@64"? It is short for "consensus@64": the model gets 64 attempts at each question, and the answer that appears most frequently is taken as its final answer. This scoring method tends to raise a model's benchmark score significantly. If it is left out of a chart, one model may therefore appear to outperform another when that is not actually the case.
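To make the difference between the two scoring methods concrete, here is a minimal Python sketch of majority-vote scoring as described above. The function names, the toy answers, and the use of 5 attempts instead of 64 are all illustrative assumptions; this is not the evaluation code used by xAI or OpenAI.

```python
from collections import Counter

def consensus_answer(samples):
    """Return the answer that appears most often among the sampled attempts."""
    return Counter(samples).most_common(1)[0][0]

def score_at_1(attempts, answer_key):
    """Fraction of questions answered correctly on the first attempt only."""
    correct = sum(a[0] == key for a, key in zip(attempts, answer_key))
    return correct / len(answer_key)

def score_cons_at_k(attempts, answer_key, k=64):
    """Fraction of questions where the majority answer over k attempts is correct."""
    correct = sum(
        consensus_answer(a[:k]) == key for a, key in zip(attempts, answer_key)
    )
    return correct / len(answer_key)

# Hypothetical data: 3 questions, 5 sampled answers each (k=5 for brevity).
toy_attempts = [
    ["12", "7", "7", "7", "3"],  # first try wrong, majority right
    ["5", "5", "9", "5", "5"],   # first try right, majority right
    ["1", "2", "2", "1", "1"],   # first try wrong, majority wrong
]
toy_key = ["7", "5", "2"]

at_1 = score_at_1(toy_attempts, toy_key)
cons = score_cons_at_k(toy_attempts, toy_key, k=5)
print(f"@1: {at_1:.2f}  cons@5: {cons:.2f}")
```

On this toy data the same model scores higher under consensus voting than on its first attempt, which is why comparing one model's "@1" score against another model's "cons@64" score, or omitting one of them, can distort a chart.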

In fact, the "@1" scores (that is, the scores from the models' first attempt) of Grok 3 Reasoning Beta and Grok 3 mini Reasoning on AIME 2025 are lower than o3-mini-high's score. Grok 3 Reasoning Beta also trails slightly behind OpenAI's o1 model. Despite this, xAI still promotes Grok 3 as "the smartest AI in the world", which has further fueled the dispute.

Babuschkin responded on social media that OpenAI has published similarly misleading benchmark charts in the past, although those charts compared the performance of its own models. Meanwhile, a more neutral party in the debate compiled each model's performance into a more “accurate” chart, which sparked wider discussion.

In addition, AI researcher Nathan Lambert pointed out that perhaps the most important metric remains unknown: the computational and financial cost each model required to achieve its best score. This gap suggests that existing AI benchmarks still do a poor job of communicating models' limitations and strengths.

In summary, the dispute between xAI and OpenAI over the Grok 3 benchmark results has attracted widespread attention. xAI's chart omits the "cons@64" score for OpenAI's model, a key data point, which may lead the public to misjudge relative model performance. Meanwhile, the computational and financial costs behind each model's results remain undisclosed, further highlighting the limitations of current AI benchmarks.