DeepSeek released a major project on the second day of Open Source Week: DeepEP, the first open-source expert-parallel (EP) communication library for Mixture-of-Experts (MoE) models. The library aims to optimize MoE communication across both training and inference, giving developers an efficient and flexible solution.

DeepEP is a high-performance communication library designed for Mixture-of-Experts (MoE) models and expert parallelism (EP). Its core goal is to make MoE dispatch and combine efficient by providing all-to-all GPU kernels with high throughput and low latency. Dispatch sends each token to the experts selected for it, and combine gathers the experts' outputs back into the token's original position. This design suits large-scale models, where these two exchanges often dominate communication cost.
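The two phases described above, usually called dispatch and combine, can be illustrated with a minimal single-process sketch. This is not DeepEP's API: the function names, the scalar "tokens", and the toy experts are all assumptions made for illustration, and no actual GPU or network communication takes place.

```python
from collections import defaultdict

def dispatch(tokens, routes):
    """Group token copies by destination expert.

    tokens: list of floats (stand-ins for hidden vectors)
    routes: for each token, a list of (expert_id, gate_weight) pairs
    """
    inbox = defaultdict(list)
    for tok_id, (value, picks) in enumerate(zip(tokens, routes)):
        for expert_id, weight in picks:
            inbox[expert_id].append((tok_id, value, weight))
    return inbox

def combine(inbox, experts, num_tokens):
    """Run each expert on its inbox and sum the weighted outputs per token."""
    out = [0.0] * num_tokens
    for expert_id, items in inbox.items():
        expert_fn = experts[expert_id]
        for tok_id, value, weight in items:
            out[tok_id] += weight * expert_fn(value)
    return out

# Hypothetical toy experts and routing decisions.
experts = {0: lambda x: x + 1.0, 1: lambda x: 2.0 * x, 2: lambda x: -x}
tokens = [1.0, 2.0, 3.0]
routes = [[(0, 0.5), (1, 0.5)], [(1, 1.0)], [(0, 0.25), (2, 0.75)]]

inbox = dispatch(tokens, routes)
result = combine(inbox, experts, len(tokens))
print(result)
```

In a real expert-parallel deployment the experts live on different GPUs, so both phases become all-to-all exchanges across devices, which is the part a communication library has to make fast.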

DeepEP supports low-precision operations such as FP8 and is aligned with the group-limited gating algorithm proposed in the DeepSeek-V3 paper. To match that algorithm, it provides kernels optimized for asymmetric-domain bandwidth forwarding, such as forwarding data from the NVLink domain to the RDMA domain. These high-throughput kernels are suited to training and inference prefilling tasks, and they allow the number of streaming multiprocessors (SMs) they use to be controlled, which leaves room to balance communication against computation.
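As a rough illustration of the group-limited gating mentioned above, the sketch below first restricts each token to a few expert groups and only then picks the top-k experts inside those groups. It is a simplified reading, not the DeepSeek-V3 implementation: the function name, the parameters, and in particular the rule of scoring a group by its best expert are assumptions of this example.

```python
def group_limited_topk(scores, num_groups, topk_groups, topk):
    """Select top-k experts, restricted to the best `topk_groups` groups.

    scores: one affinity score per expert; experts are split into
            `num_groups` contiguous, equally sized groups.
    """
    group_size = len(scores) // num_groups
    # Assumption of this sketch: a group's score is its best expert's score.
    group_scores = [
        max(scores[g * group_size:(g + 1) * group_size])
        for g in range(num_groups)
    ]
    kept = sorted(range(num_groups), key=lambda g: group_scores[g],
                  reverse=True)[:topk_groups]
    # Only experts inside the kept groups remain candidates.
    candidates = [e for g in kept
                  for e in range(g * group_size, (g + 1) * group_size)]
    chosen = sorted(candidates, key=lambda e: scores[e], reverse=True)[:topk]
    return sorted(kept), sorted(chosen)

scores = [0.1, 0.9, 0.2, 0.3, 0.8, 0.4, 0.05, 0.6]
kept, chosen = group_limited_topk(scores, num_groups=4, topk_groups=2, topk=3)
print(kept, chosen)
```

Here an unrestricted top-3 would pick experts 1, 4 and 7, but expert 7 sits in a group that was not kept, so expert 5 is chosen instead. If groups correspond to nodes, this caps how many nodes a token is sent to, which limits cross-node traffic.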

For latency-sensitive inference decoding, DeepEP provides a set of low-latency kernels that use pure RDMA to minimize delay. DeepEP also introduces a hook-based method for overlapping communication with computation that does not occupy any SM resources, further improving overall system performance.
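The hook-based overlap can be pictured with a small CPU-only sketch: starting a transfer returns immediately with a hook, the caller does unrelated work, and it calls the hook only when the received data is needed. Everything here is hypothetical, including the `start_transfer` name; a Python thread merely stands in for network hardware that moves data in the background, and this is not DeepEP's actual interface.

```python
import threading

def start_transfer(payload):
    """Hypothetical stand-in: begin a background 'transfer' and return a hook."""
    received = []

    def worker():
        # Pretend the network delivers the payload while the caller computes.
        received.extend(payload)

    thread = threading.Thread(target=worker)
    thread.start()

    def hook():
        # Block only at the point where the data is actually required.
        thread.join()
        return received

    return hook

hook = start_transfer([1, 2, 3])
# Independent computation that overlaps with the in-flight transfer.
local = sum(x * x for x in range(1000))
data = hook()
print(local, data)
```

The design point is that waiting is deferred to the hook call, so the time between starting the transfer and needing its result is free for computation.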

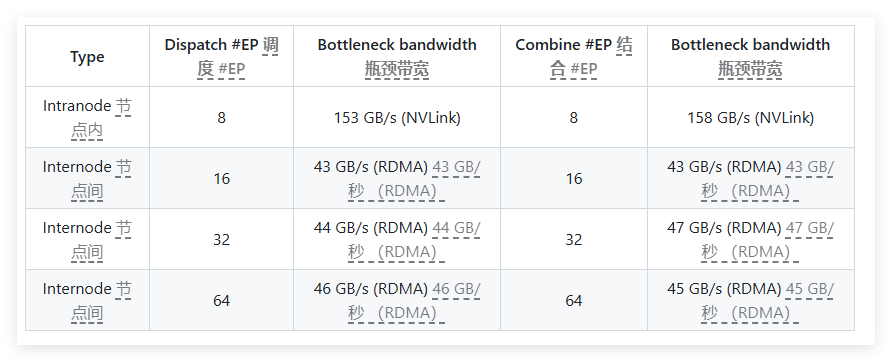

In performance testing, DeepEP was benchmarked on H800 GPUs with CX7 InfiniBand 400 Gb/s RDMA network cards. The results show that the normal kernels achieve high bandwidth both within a node and across nodes, while the low-latency kernels meet expectations on both latency and bandwidth. Specifically, with 8-way expert parallelism the low-latency kernels reach a latency of only 163 microseconds and a bandwidth of up to 46 GB/s.
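As a back-of-the-envelope check on the low-latency figures quoted above, multiplying latency by bandwidth gives the amount of data one such call would move. This assumes the 46 GB/s figure is an average over the whole 163-microsecond window and that GB means 10^9 bytes; neither assumption is stated in the article, so treat the result as an estimate only.

```python
def implied_payload_mb(latency_us, bandwidth_gb_per_s):
    """Data volume implied by a latency and an average bandwidth, in MB (10^6 bytes)."""
    seconds = latency_us * 1e-6
    bytes_moved = seconds * bandwidth_gb_per_s * 1e9
    return bytes_moved / 1e6

payload_mb = implied_payload_mb(163, 46)
print(round(payload_mb, 2))  # roughly 7.5 MB
```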

DeepEP has been tested mainly on InfiniBand networks, but it is also theoretically compatible with RDMA over Converged Ethernet (RoCE). To keep different traffic types from interfering with one another, it is recommended to isolate them in separate virtual lanes so that the normal and low-latency kernels operate independently.

As a communication library built for Mixture-of-Experts models, DeepEP stands out for its throughput optimization, low latency and flexible configuration. It addresses both large-scale model training and latency-sensitive inference.

Project link: https://x.com/deepseek_ai/status/1894211757604049133

Key points:

Designed for Mixture-of-Experts models, DeepEP provides high-throughput, low-latency communication.

It supports low-precision operations such as FP8 and optimizes bandwidth when forwarding data between domains, for example from NVLink to RDMA.

DeepEP has been tested on InfiniBand networks, and isolating different traffic types in separate virtual lanes is recommended.