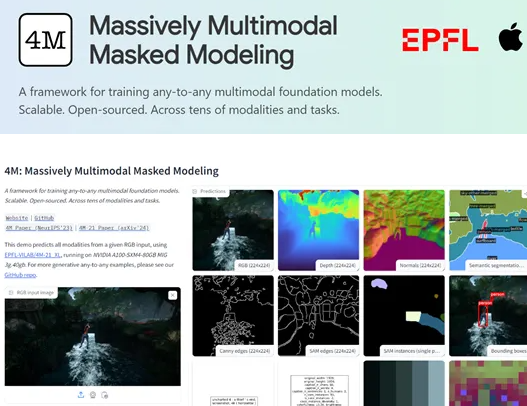

Apple and the Swiss Federal Institute of Technology in Lausanne (EPFL) have jointly released an open-source multimodal vision model called 4M-21. The model stands out in multimodal learning for its versatility and flexibility. Although it has only 3 billion parameters, far fewer than some mainstream large models, it performs well across dozens of tasks, including image classification, object detection, semantic segmentation, instance segmentation, depth estimation, and surface normal estimation.

The core innovation of the 4M-21 model lies in its "discrete tokens" conversion technique. It converts data from different modalities, such as images, neural network feature maps, vectors, structured data, and text, into token sequences that the model can process in a uniform way. This conversion simplifies training and gives the model a common foundation for fusing and processing multimodal data. As a result, 4M-21 can handle many data types efficiently within a single model.
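
To make the idea of a shared token sequence concrete, here is a minimal sketch. It is not the 4M-21 tokenizers: the codebook, vocabulary, ID offsets, and function names are all hypothetical, and nearest-neighbor vector quantization stands in for whatever learned tokenizers the model actually uses. It only shows how two modalities can end up as integer IDs in one sequence.

```python
# Illustrative sketch only: toy tokenizers, not the 4M-21 implementation.
# CODEBOOK, VOCAB, and the offsets below are made-up values for the example.
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]


def quantize_vector(vec: Vector, codebook: Sequence[Vector]) -> int:
    """Return the index of the codebook entry closest to vec (squared L2)."""
    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(codebook)), key=lambda i: sq_dist(vec, codebook[i]))


def tokenize_patches(patches: Sequence[Vector], codebook: Sequence[Vector],
                     offset: int) -> List[int]:
    """Map each continuous patch feature to a discrete ID in a shared ID space."""
    return [offset + quantize_vector(p, codebook) for p in patches]


def tokenize_text(text: str, vocab: Dict[str, int], offset: int) -> List[int]:
    """Map whitespace-separated words to IDs in the same shared ID space."""
    return [offset + vocab[word] for word in text.lower().split()]


# Hypothetical 4-entry codebook for 2-dimensional "patch features".
CODEBOOK: List[Vector] = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
# Hypothetical 3-word text vocabulary.
VOCAB: Dict[str, int] = {"a": 0, "red": 1, "car": 2}

IMAGE_OFFSET = 0              # image IDs occupy 0..3
TEXT_OFFSET = len(CODEBOOK)   # text IDs start right after the image IDs

patches: List[Vector] = [(0.1, 0.9), (0.8, 0.2), (0.9, 1.1)]
image_tokens = tokenize_patches(patches, CODEBOOK, IMAGE_OFFSET)
text_tokens = tokenize_text("a red car", VOCAB, TEXT_OFFSET)

# One flat sequence of integers, regardless of the source modality.
sequence = image_tokens + text_tokens
print(sequence)  # [1, 2, 3, 4, 5, 6]
```

Giving each modality its own ID range is one simple way to keep tokens from different sources distinguishable; it is a design choice made for this example, not a description of how 4M-21 lays out its vocabulary.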

4M-21 is trained with masked modeling. Part of the tokens in the input sequence are randomly hidden, and the model must predict the hidden tokens from the ones that remain, which forces it to learn the statistical structure of the data and the underlying relationships within it. Masked modeling improves the model's ability to generalize and also its accuracy on generation tasks. Because the masked and visible tokens can come from different modalities, the method lets 4M-21 capture the information shared between modalities and the way they interact.
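
The masking step itself can be sketched in a few lines. The following is a simplified illustration under stated assumptions, not the 4M-21 training code: `MASK_ID`, `mask_tokens`, and `masked_accuracy` are hypothetical names, the masking ratio is arbitrary, and no model is involved. It only shows how a token sequence is split into a corrupted input and a set of prediction targets.

```python
# Illustrative sketch only: random token masking, not the 4M-21 training code.
import random
from typing import Dict, List, Tuple

MASK_ID = -1  # hypothetical placeholder ID that marks a hidden position


def mask_tokens(tokens: List[int], mask_ratio: float,
                rng: random.Random) -> Tuple[List[int], Dict[int, int]]:
    """Hide a random subset of a non-empty token list.

    Returns the corrupted sequence and a {position: original_token} dict
    holding what a model would be asked to predict.
    """
    n_mask = min(len(tokens), max(1, round(len(tokens) * mask_ratio)))
    masked_positions = sorted(rng.sample(range(len(tokens)), n_mask))

    corrupted = list(tokens)
    targets: Dict[int, int] = {}
    for pos in masked_positions:
        targets[pos] = tokens[pos]
        corrupted[pos] = MASK_ID
    return corrupted, targets


def masked_accuracy(predictions: Dict[int, int],
                    targets: Dict[int, int]) -> float:
    """Fraction of hidden positions where the prediction matches the target."""
    correct = sum(1 for pos, tok in targets.items()
                  if predictions.get(pos) == tok)
    return correct / len(targets)


rng = random.Random(0)  # fixed seed so the example is repeatable
tokens = [1, 2, 3, 4, 5, 6, 7, 8]
corrupted, targets = mask_tokens(tokens, mask_ratio=0.5, rng=rng)

print(corrupted)  # half of the positions are replaced by MASK_ID
print(targets)    # the original tokens at those positions
```

During training, a model would receive `corrupted` and be scored only on the positions listed in `targets`, for example with a loss computed over those positions; `masked_accuracy` is a stand-in for that scoring step.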

The researchers evaluated 4M-21 extensively on tasks including image classification, object detection, semantic segmentation, instance segmentation, depth estimation, surface normal estimation, and 3D human pose estimation. The results show that 4M-21 performs comparably to current state-of-the-art models on these tasks and surpasses existing approaches on some of them.

Key points:

- Apple and the Swiss Federal Institute of Technology in Lausanne (EPFL) jointly open-sourced the 4M-21 model, a notable result in multimodal learning thanks to its versatility and flexibility.

- 4M-21 performs well on dozens of tasks, including image classification, object detection, semantic segmentation, instance segmentation, depth estimation, and surface normal estimation.

- The core technology of 4M-21 is "discrete tokens" conversion, which transforms data from multiple modalities into token sequences that the model can understand.