This article introduces the ShareGPT4Video series, a project aimed at improving the video understanding of large video-language models (LVLMs) and the video generation of text-to-video models (T2VMs). The series consists of three main parts: ShareGPT4Video, a dense caption dataset of 40,000 videos annotated by GPT4V; ShareCaptioner-Video, an efficient video captioning model that has been used to annotate 4,800,000 videos; and ShareGPT4Video-8B, an LVLM that achieves SOTA performance on three video benchmarks. Existing methods for generating video captions suffer from missing detail and temporal confusion; the research team addressed both problems and achieved high-quality, scalable video captioning through a carefully designed differential video captioning strategy.

In more detail, the three components are:

1) ShareGPT4Video, a collection of dense captions for 40,000 videos of varying lengths and sources, annotated by GPT4V and built with carefully designed data filtering and annotation strategies.

2) ShareCaptioner-Video, an efficient and capable video captioning model suitable for arbitrary videos, which has been used to annotate 4,800,000 high-quality aesthetic videos.

3) ShareGPT4Video-8B, a simple but strong LVLM that achieves SOTA performance on three advanced video benchmarks.

Human annotation is costly and does not scale. The study also found that asking GPT4V to caption videos with a simple multi-frame or frame-concatenation input strategy produced captions that lacked detail and were sometimes temporally confused. The research team believes that the challenge of designing a high-quality video captioning strategy lies in three aspects:

1) Understanding the precise temporal changes between frames.

2) Describing the detailed content within each frame.

3) Scaling the number of frames to videos of arbitrary length.

To this end, the researchers designed a differential video captioning strategy that is stable, scalable, and efficient, and that can generate captions for videos of arbitrary resolution, aspect ratio, and length. ShareGPT4Video was built on this strategy and contains 40,000 high-quality videos covering a wide range of categories. The generated captions contain rich world knowledge, object properties, camera movements, and detailed, precise temporal descriptions of key events.

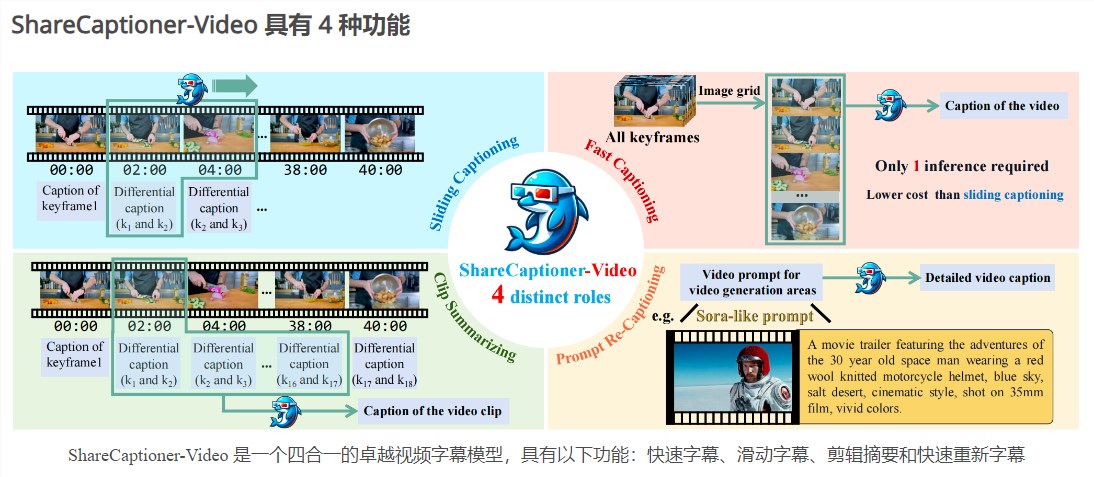
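The article does not spell out how the differential strategy works. The following is only a minimal sketch of one way such a strategy could be structured, assuming it describes the first keyframe in full, then describes only what changed between each pair of adjacent keyframes, and finally merges the notes into one caption. The function `differential_caption` and its three callables (`describe_frame`, `describe_change`, `summarize`) are hypothetical stand-ins for calls to a multimodal model; they are not the project's API.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def differential_caption(
    keyframes: Sequence[Frame],
    describe_frame: Callable[[Frame], str],
    describe_change: Callable[[Frame, Frame, str], str],
    summarize: Callable[[List[str]], str],
) -> str:
    """Caption a video by describing only what changes between keyframes.

    All three callables are hypothetical placeholders for model calls.
    """
    if not keyframes:
        raise ValueError("at least one keyframe is required")

    # The first keyframe gets a full, detailed description.
    notes = [describe_frame(keyframes[0])]

    # Each later step sees two adjacent frames plus the previous note,
    # so the input per call stays constant however long the video is.
    for prev, curr in zip(keyframes, keyframes[1:]):
        notes.append(describe_change(prev, curr, notes[-1]))

    # Merge the time-ordered notes into a single caption.
    return summarize(notes)
```

Under these assumptions, each step handles a fixed amount of input regardless of video length, which is one plausible way to meet the scalability requirement, while the pairwise comparison keeps the order of events explicit.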

Building on ShareGPT4Video, the team further developed ShareCaptioner-Video, a captioning model that can efficiently generate high-quality captions for any video. The team used it to annotate 4,800,000 aesthetically appealing videos and verified the effectiveness of these captions on a 10-second text-to-video generation task. ShareCaptioner-Video is a four-in-one video captioning model with the following features: Quick Caption, Sliding Caption, Clip Summary, and Quick Re-caption.

For video understanding, the research team also verified the effectiveness of ShareGPT4Video on several current LVLM architectures and introduced a new LVLM, ShareGPT4Video-8B.

Product page: https://top.aibase.com/tool/sharegpt4video

The ShareGPT4Video series marks significant progress in video understanding and generation, and its high-quality datasets and models are expected to promote the further development of related technologies. Visit the link above for more details.