llama index RAG

1.0.0

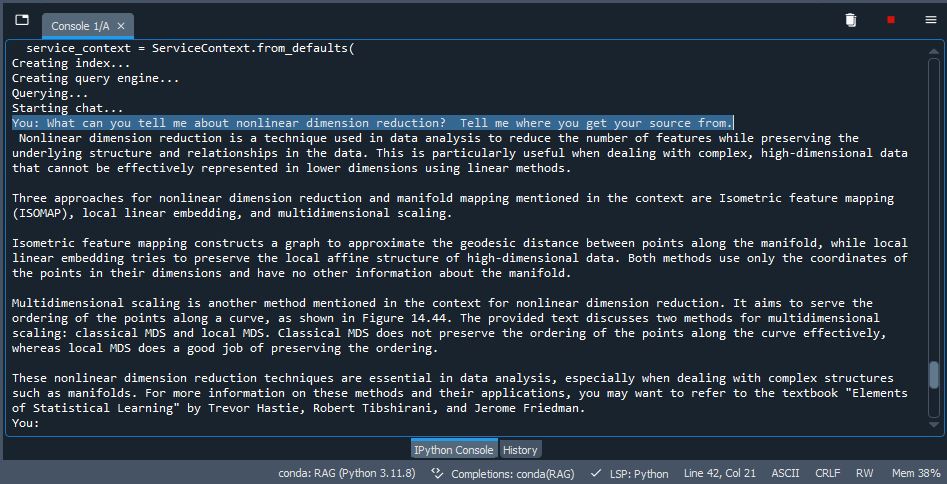

A RAG (retrieval-augmented generation) implementation on Llama Index, using Qdrant as the vector store. Take some PDFs (either use the test PDFs included in /data, or delete them and use your own docs), index/embed them in a vector database (VDB), and use an LLM to run inference and generate output. Pretty nifty.
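The pipeline described above (chunk the docs, embed the chunks, store the vectors, retrieve the closest ones, hand them to the LLM) can be sketched without any dependencies. This is a conceptual toy, not LlamaIndex's or Qdrant's API. `embed` is a hypothetical bag-of-words stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for Qdrant, and the actual LLM call is left out: the sketch stops at the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chunk(document: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = document.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class InMemoryVectorStore:
    """Hypothetical stand-in for Qdrant: keeps (vector, text) pairs in a list."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def query(self, question: str, top_k: int = 2) -> list[str]:
        """Return the `top_k` stored texts most similar to the question."""
        q = embed(question)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]


def build_prompt(question: str, contexts: list[str]) -> str:
    """Assemble the retrieved chunks and the question into one LLM prompt."""
    context_block = "\n---\n".join(contexts)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    store = InMemoryVectorStore()
    for doc in [
        "Qdrant stores embedding vectors for similarity search.",
        "Mistral is a language model served by Ollama.",
    ]:
        for piece in chunk(doc):
            store.add(piece)
    question = "Which database stores vectors?"
    # In the real project this prompt would go to the LLM (e.g. Mistral via Ollama).
    print(build_prompt(question, store.query(question, top_k=1)))
```

In the actual project, LlamaIndex handles the chunking, embedding, and prompt assembly, and Qdrant replaces the in-memory list; the toy only shows the shape of the data flow.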

1. Run `pip install -r /path/to/requirements.txt` in a command prompt.
2. Run `ollama run mistral` (or whichever model you pick). If you're using the new Ollama for Windows, this step isn't necessary since Ollama runs in the background (just make sure it's active).
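To check that Ollama is actually active before running the project, a quick probe of its local HTTP server works. This is a minimal sketch assuming Ollama's default address `http://localhost:11434` (adjust it if you changed the host or port); `is_ollama_running` is a hypothetical helper, not part of this repo or of Ollama.

```python
import urllib.request

# Assumed default Ollama address; change it if your install uses another host/port.
OLLAMA_URL = "http://localhost:11434"


def is_ollama_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if a server answers HTTP 200 at `base_url`, else False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError):
        # Connection refused, timeout, HTTP error status, or a malformed URL.
        return False


if __name__ == "__main__":
    print("Ollama is up" if is_ollama_running() else "Ollama is not reachable")
```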