This project deploys various open-source generative AI models, mainly LLMs, on the BM1684X chip. Each model is converted into a bmodel with the TPU-MLIR compiler and deployed to a PCIe or SoC environment using C++ code. To help readers understand the source code, I wrote an explanation on Zhihu that uses ChatGLM2-6B as an example: ChatGLM2 process analysis and TPU-MLIR deployment

The deployed models are as follows (arranged in alphabetical order):

| Model | INT4 | INT8 | FP16/BF16 | Huggingface Link |
|---|---|---|---|---|
| Baichuan2-7B | ✅ | | | LINK |
| ChatGLM3-6B | ✅ | ✅ | ✅ | LINK |
| ChatGLM4-9B | ✅ | ✅ | ✅ | LINK |
| CodeFuse-7B | ✅ | ✅ | | LINK |
| DeepSeek-6.7B | ✅ | ✅ | | LINK |
| Falcon-40B | ✅ | ✅ | | LINK |
| Phi-3-mini-4k | ✅ | ✅ | ✅ | LINK |
| Qwen-7B | ✅ | ✅ | ✅ | LINK |
| Qwen-14B | ✅ | ✅ | ✅ | LINK |
| Qwen-72B | ✅ | | | LINK |
| Qwen1.5-0.5B | ✅ | ✅ | ✅ | LINK |
| Qwen1.5-1.8B | ✅ | ✅ | ✅ | LINK |
| Qwen1.5-7B | ✅ | ✅ | ✅ | LINK |
| Qwen2-7B | ✅ | ✅ | ✅ | LINK |
| Qwen2.5-7B | ✅ | ✅ | ✅ | LINK |
| Llama2-7B | ✅ | ✅ | ✅ | LINK |
| Llama2-13B | ✅ | ✅ | ✅ | LINK |
| Llama3-8B | ✅ | ✅ | ✅ | LINK |
| Llama3.1-8B | ✅ | ✅ | ✅ | LINK |
| LWM-Text-Chat | ✅ | ✅ | ✅ | LINK |
| MiniCPM3-4B | ✅ | ✅ | | LINK |
| Mistral-7B-Instruct | ✅ | ✅ | | LINK |
| Stable Diffusion | ✅ | | | LINK |
| Stable Diffusion XL | ✅ | | | LINK |
| WizardCoder-15B | ✅ | | | LINK |
| Yi-6B-chat | ✅ | ✅ | | LINK |
| Yi-34B-chat | ✅ | ✅ | | LINK |
| Qwen-VL-Chat | ✅ | ✅ | | LINK |
| Qwen2-VL-Chat | ✅ | ✅ | | LINK |
| InternVL2-4B | ✅ | ✅ | | LINK |
| InternVL2-2B | ✅ | ✅ | | LINK |
| MiniCPM-V-2_6 | ✅ | ✅ | | LINK |
| Llama3.2-Vision-11B | ✅ | ✅ | ✅ | LINK |

For conversion details and source code, see the models subdirectory of this project, which documents the deployment of each model.

If you are interested in our chips, you can contact us through the official SOPHGO website.

Clone the LLM-TPU project and execute the run.sh script:

```shell
git clone https://github.com/sophgo/LLM-TPU.git
cd LLM-TPU
./run.sh --model llama2-7b
```

Please refer to Quick Start for details.

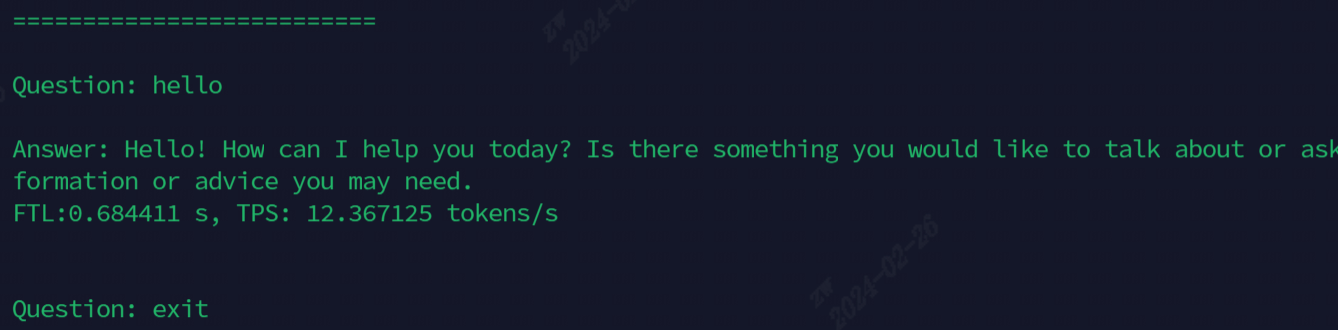

The effect after running is shown in the following figure

The commands for all models currently available for demonstration are listed in the following table:

| Model | SoC | PCIE |
|---|---|---|
| ChatGLM3-6B | ./run.sh --model chatglm3-6b --arch soc | ./run.sh --model chatglm3-6b --arch pcie |
| Llama2-7B | ./run.sh --model llama2-7b --arch soc | ./run.sh --model llama2-7b --arch pcie |
| Llama3-7B | ./run.sh --model llama3-7b --arch soc | ./run.sh --model llama3-7b --arch pcie |
| Qwen-7B | ./run.sh --model qwen-7b --arch soc | ./run.sh --model qwen-7b --arch pcie |
| Qwen1.5-1.8B | ./run.sh --model qwen1.5-1.8b --arch soc | ./run.sh --model qwen1.5-1.8b --arch pcie |
| Qwen2.5-7B | | ./run.sh --model qwen2.5-7b --arch pcie |
| LWM-Text-Chat | ./run.sh --model lwm-text-chat --arch soc | ./run.sh --model lwm-text-chat --arch pcie |
| WizardCoder-15B | ./run.sh --model wizardcoder-15b --arch soc | ./run.sh --model wizardcoder-15b --arch pcie |
| InternVL2-4B | ./run.sh --model internvl2-4b --arch soc | ./run.sh --model internvl2-4b --arch pcie |
| MiniCPM-V-2_6 | ./run.sh --model minicv2_6 --arch soc | ./run.sh --model minicmv2_6 --arch pcie |

Advanced features:

| Feature | Directory | Description |
|---|---|---|
| Multi-core | ChatGLM3/parallel_demo | Supports ChatGLM3 on 2 cores |
| | Llama2/demo_parallel | Supports Llama2 on 4/6/8 cores |
| | Qwen/demo_parallel | Supports Qwen on 4/6/8 cores |
| | Qwen1_5/demo_parallel | Supports Qwen1_5 on 4/6/8 cores |
| Speculative sampling | Qwen/jacobi_demo | LookaheadDecoding |
| | Qwen1_5/speculative_sample_demo | Speculative sampling |
| Prefill reuse | Qwen/prompt_cache_demo | Prefill reuse for a common prefix sequence |
| | Qwen/share_cache_demo | Prefill reuse for a common prefix sequence |
| | Qwen1_5/share_cache_demo | Prefill reuse for a common prefix sequence |
| Model encryption | Qwen/share_cache_demo | Model encryption |
| | Qwen1_5/share_cache_demo | Model encryption |
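
The prefill-reuse demos listed above avoid recomputing work for a prompt prefix that several requests share. As a rough illustration of the general idea only (this is not the code in Qwen/prompt_cache_demo or Qwen/share_cache_demo), the Python sketch below shows how a runtime might decide how many leading prompt tokens are already covered by a cached prefix, so that only the remaining tokens need a new prefill pass. The `PrefixCache` class, its methods, and the token IDs are hypothetical.

```python
from typing import List, Sequence, Tuple


class PrefixCache:
    """Hypothetical record of token IDs whose KV cache was already computed."""

    def __init__(self, cached_tokens: Sequence[int] = ()) -> None:
        self.cached_tokens: List[int] = list(cached_tokens)

    def reusable_length(self, prompt: Sequence[int]) -> int:
        """Return how many leading prompt tokens match the cached prefix."""
        n = 0
        limit = min(len(prompt), len(self.cached_tokens))
        while n < limit and prompt[n] == self.cached_tokens[n]:
            n += 1
        return n

    def split(self, prompt: Sequence[int]) -> Tuple[int, List[int]]:
        """Split a prompt into (reused prefix length, tokens still to prefill)."""
        n = self.reusable_length(prompt)
        return n, list(prompt[n:])


# A shared system prompt that was prefilled once (token IDs are made up).
cache = PrefixCache([101, 7, 42, 42, 9])
reused, new_tokens = cache.split([101, 7, 42, 42, 9, 300, 301])
print(f"reuse {reused} tokens, prefill {len(new_tokens)} new tokens")
```

In an actual deployment the KV-cache entries for the matched prefix would also have to stay resident on the device; this sketch models only the token-matching step.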

For common questions, please refer to the LLM-TPU FAQ.