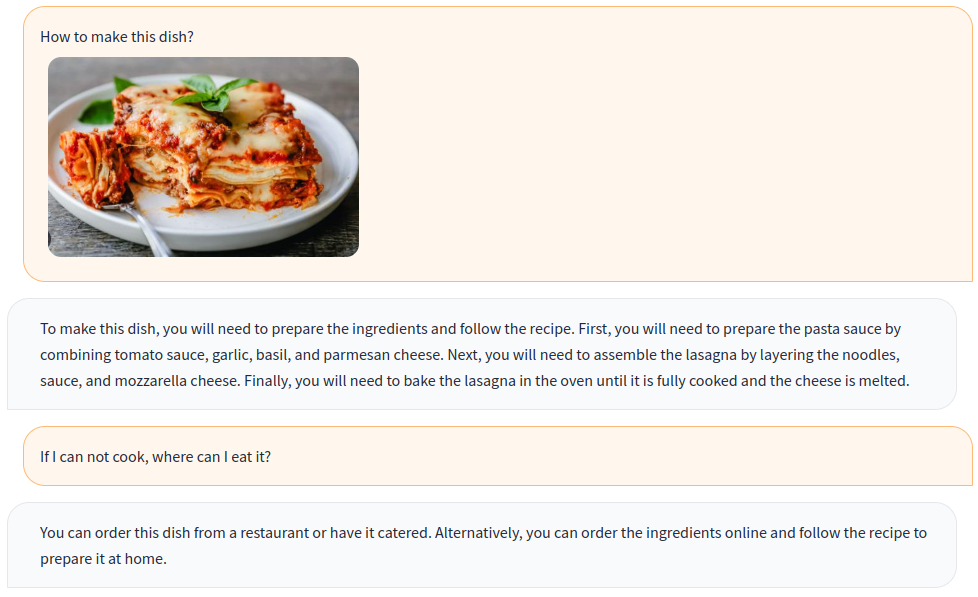

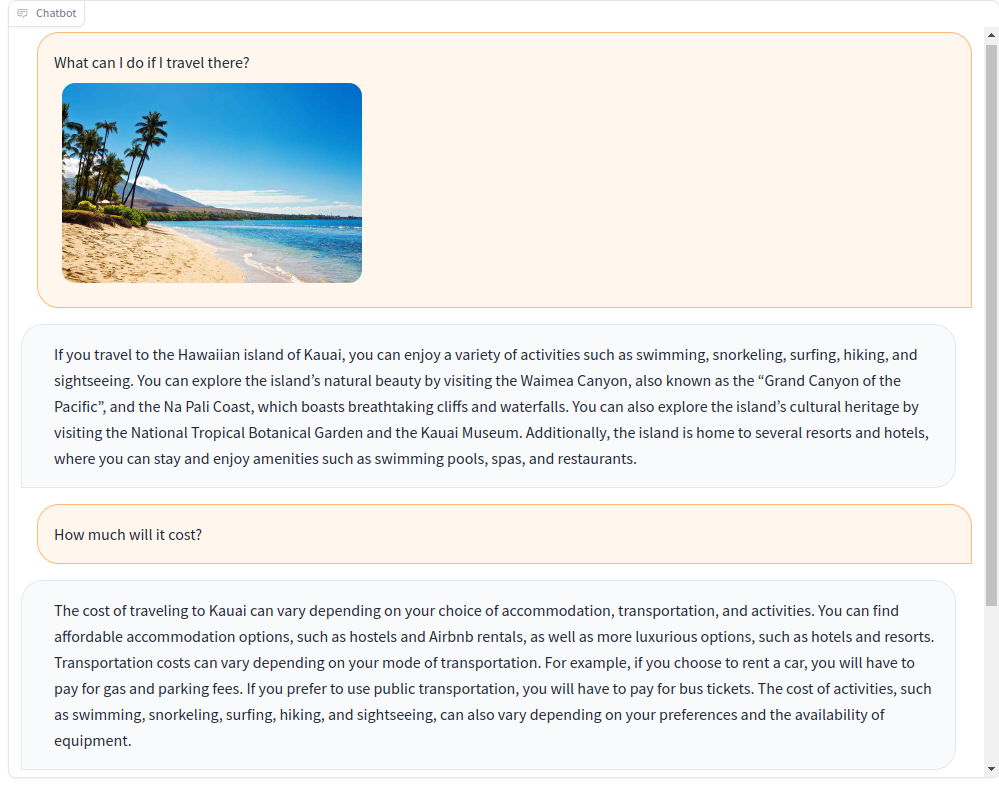

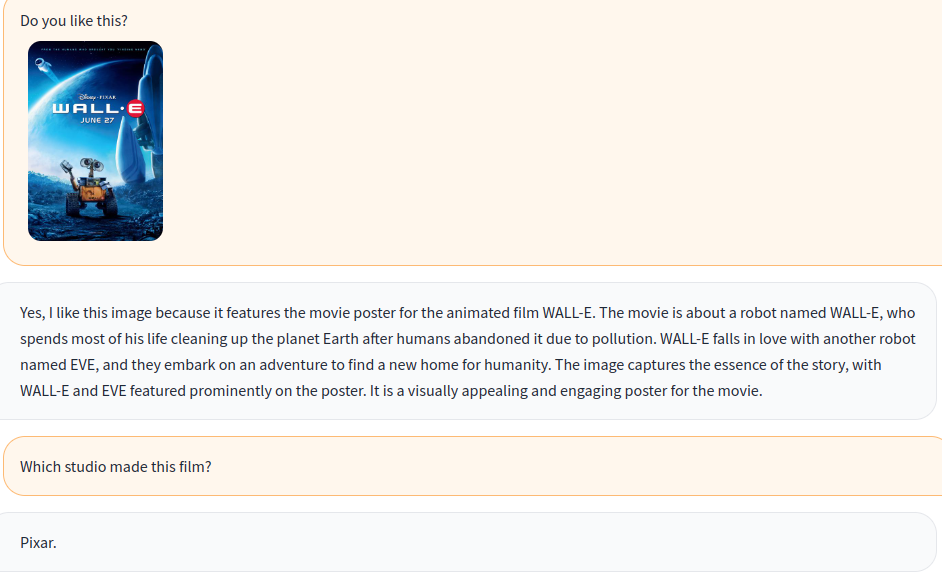

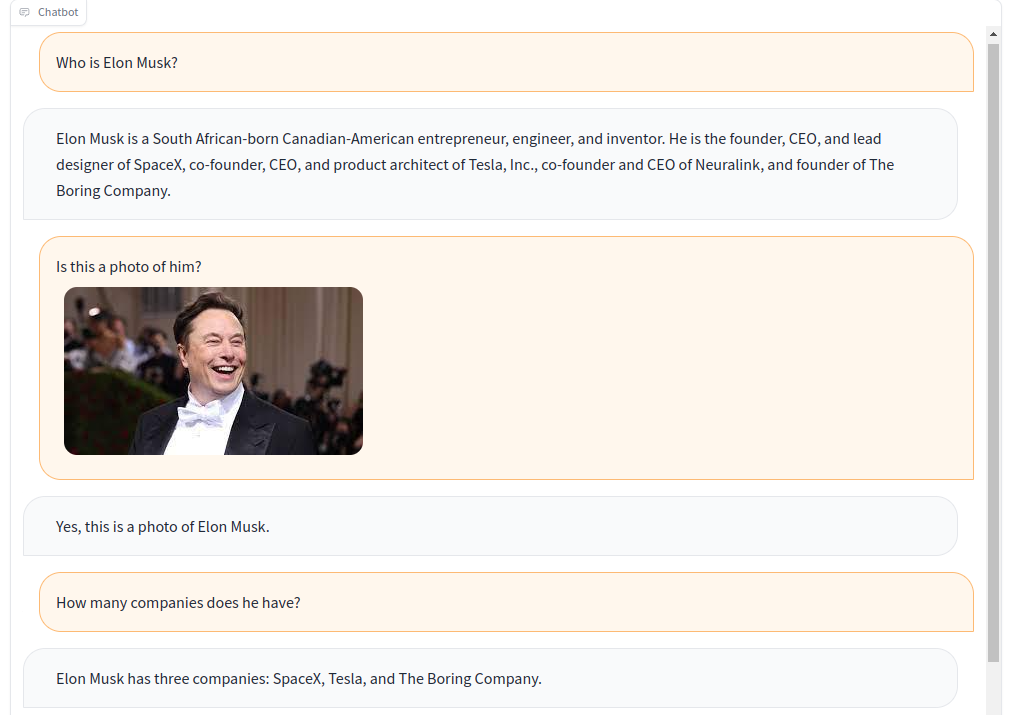

Train a multi-modal chatbot with visual and language instructions!

Based on the open-source multi-modal model OpenFlamingo, we create various visual instruction data with open datasets, including VQA, Image Captioning, Visual Reasoning, Text OCR, and Visual Dialogue. Additionally, we also train the language model component of OpenFlamingo using only language-only instruction data.

The joint training of visual and language instructions effectively improves the performance of the model! For more details please refer to our technical report.

Welcome to join us!

English | 简体中文

To install the package in an existing environment, run

git clone https://github.com/open-mmlab/Multimodal-GPT.git

cd Multimodal-GPT

pip install -r requirements.txt

pip install -v -e .or create a new conda environment

conda env create -f environment.ymlDownload the pre-trained weights.

Use this script for converting LLaMA weights to Hugging Face format.

Download the OpenFlamingo pre-trained model from openflamingo/OpenFlamingo-9B.

Download our LoRA Weight from here.

Then place these models in checkpoints folders like this:

checkpoints

├── llama-7b_hf

│ ├── config.json

│ ├── pytorch_model-00001-of-00002.bin

│ ├── ......

│ └── tokenizer.model

├── OpenFlamingo-9B

│ └──checkpoint.pt

├──mmgpt-lora-v0-release.pt

launch the gradio demo

python app.py

A-OKVQA

Download annotation from this link and unzip to data/aokvqa/annotations.

It also requires images from coco dataset which can be downloaded from here.

COCO Caption

Download from this link and unzip to data/coco.

It also requires images from coco dataset which can be downloaded from here.

OCR VQA

Download from this link and place in data/OCR_VQA/.

LlaVA

Download from liuhaotian/LLaVA-Instruct-150K and place in data/llava/.

It also requires images from coco dataset which can be downloaded from here.

Mini-GPT4

Download from Vision-CAIR/cc_sbu_align and place in data/cc_sbu_align/.

Dolly 15k

Download from databricks/databricks-dolly-15k and place it in data/dolly/databricks-dolly-15k.jsonl.

Alpaca GPT4

Download it from this link and place it in data/alpaca_gpt4/alpaca_gpt4_data.json.

You can also customize the data path in the configs/dataset_config.py.

Baize

Download it from this link and place it in data/baize/quora_chat_data.json.

torchrun --nproc_per_node=8 mmgpt/train/instruction_finetune.py

--lm_path checkpoints/llama-7b_hf

--tokenizer_path checkpoints/llama-7b_hf

--pretrained_path checkpoints/OpenFlamingo-9B/checkpoint.pt

--run_name train-my-gpt4

--learning_rate 1e-5

--lr_scheduler cosine

--batch_size 1

--tuning_config configs/lora_config.py

--dataset_config configs/dataset_config.py

--report_to_wandbIf you find our project useful for your research and applications, please cite using this BibTeX:

@misc{gong2023multimodalgpt,

title={MultiModal-GPT: A Vision and Language Model for Dialogue with Humans},

author={Tao Gong and Chengqi Lyu and Shilong Zhang and Yudong Wang and Miao Zheng and Qian Zhao and Kuikun Liu and Wenwei Zhang and Ping Luo and Kai Chen},

year={2023},

eprint={2305.04790},

archivePrefix={arXiv},

primaryClass={cs.CV}

}