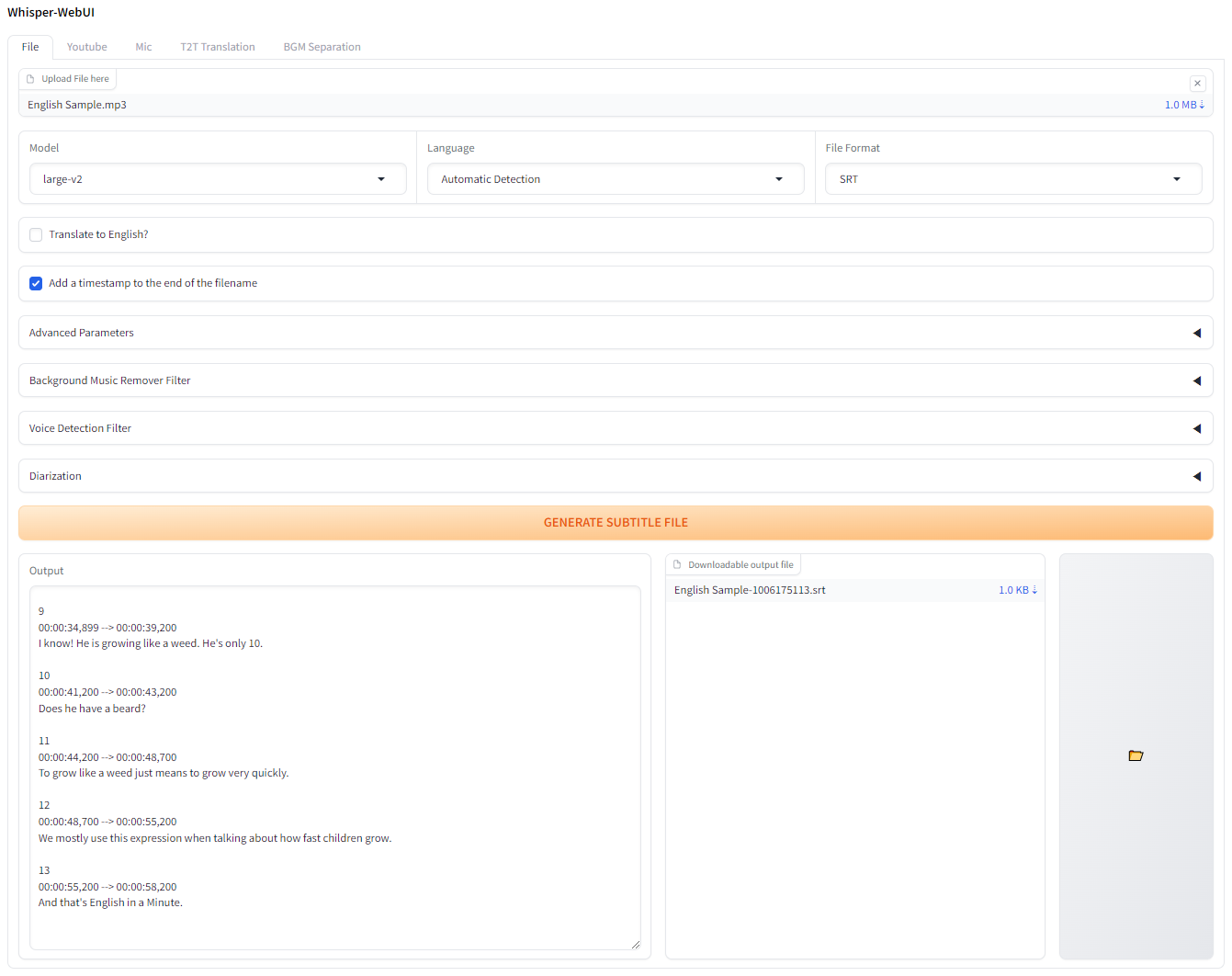

A Gradio-based browser interface for Whisper. You can use it as an Easy Subtitle Generator!

If you wish to try this on Colab, you can do it here!

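The app can also run with Pinokio. Once it is running, connect to http://localhost:7860.

To run it with Docker:

1. Install and launch Docker-Desktop.
2. Git clone the repository: `git clone https://github.com/jhj0517/Whisper-WebUI.git`
3. Build the image: `docker compose build`
4. Run the container: `docker compose up`
5. Connect to the WebUI with your browser at http://localhost:7860.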

If needed, update the docker-compose.yaml to match your environment.
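If you script the setup, you may want to wait until the server answers before opening the browser. The following is a minimal sketch using only the Python standard library. `wait_for_webui` is a hypothetical helper, not part of this project, and it assumes the default address http://localhost:7860 used above.

```python
import http.client
import time
import urllib.request


def wait_for_webui(url: str = "http://localhost:7860",
                   timeout_s: float = 60.0,
                   interval_s: float = 2.0) -> bool:
    """Poll `url` until it answers with HTTP 200 or `timeout_s` elapses.

    Hypothetical helper (not part of Whisper-WebUI).
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused/reset, timeout or non-2xx reply (URLError and
            # HTTPError are OSError subclasses): the server is not ready yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, calling `wait_for_webui()` after `docker compose up` returns `True` once the app responds, or `False` after 60 seconds.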

To run this WebUI directly (without Docker), you need git, Python (3.10 <= python <= 3.12), and FFmpeg.

If you're not using an NVIDIA GPU, or you're using a CUDA version other than 12.4, edit requirements.txt to match your environment.

Please follow the links below to install the necessary software:

Python 3.10 ~ 3.12 is recommended.

After installing FFmpeg, make sure to add the FFmpeg/bin folder to your system PATH!
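To check the prerequisites before installing, a quick sketch like the one below can help. It assumes the executables are named `git` and `ffmpeg` on your PATH; the helper names are illustrative and not part of the project.

```python
import shutil
import sys


def python_version_supported(version=None) -> bool:
    """True if (major, minor) is within the range this README requires: 3.10 <= python <= 3.12."""
    major_minor = tuple((version or sys.version_info)[:2])
    return (3, 10) <= major_minor <= (3, 12)


def missing_tools(tools=("git", "ffmpeg")) -> list:
    """Return the tools that cannot be found on PATH (shutil.which returns None for those)."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    status = "OK" if python_version_supported() else "unsupported (need 3.10 - 3.12)"
    print("Python", sys.version.split()[0], status)
    missing = missing_tools()
    print("Missing from PATH:", ", ".join(missing) if missing else "none")
```

If `ffmpeg` shows up as missing right after installing it, the `FFmpeg/bin` folder is most likely not on PATH yet.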

1. Clone the repository: `git clone https://github.com/jhj0517/Whisper-WebUI.git`
2. Run `install.bat` or `install.sh` to install dependencies. (It will create a `venv` directory and install dependencies there.)
3. Start the WebUI with `start-webui.bat` or `start-webui.sh`. (It will run `python app.py` after activating the venv.)

You can also run the project with command line arguments if you like; see the wiki for a guide to arguments.
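For reference, the install step described above roughly amounts to creating a virtual environment and installing `requirements.txt` into it. The sketch below does that with Python's `venv` module. It is an assumption based on the description here, not a copy of `install.bat`/`install.sh`, so prefer the scripts themselves; `venv_python` and `install` are hypothetical helpers.

```python
import os
import subprocess
import venv
from pathlib import Path


def venv_python(venv_dir: Path) -> Path:
    """Path of the interpreter inside a venv (the layout differs on Windows)."""
    if os.name == "nt":
        return venv_dir / "Scripts" / "python.exe"
    return venv_dir / "bin" / "python"


def install(venv_dir: str = "venv", requirements: str = "requirements.txt") -> None:
    """Create a venv and pip-install the requirements into it (run from the repo root)."""
    target = Path(venv_dir)
    venv.create(target, with_pip=True)  # with_pip bootstraps pip via ensurepip
    subprocess.run(
        [str(venv_python(target)), "-m", "pip", "install", "-r", requirements],
        check=True,  # raise CalledProcessError if pip fails
    )
```

Calling `install()` from the repository root would create `venv/` next to `app.py`, matching the layout the start scripts expect according to this README.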

This project is integrated with faster-whisper by default for better VRAM usage and transcription speed.

According to faster-whisper, the efficiency of the optimized whisper model is as follows:

| Implementation | Precision | Beam size | Time | Max. GPU memory | Max. CPU memory |
|---|---|---|---|---|---|
| openai/whisper | fp16 | 5 | 4m30s | 11325MB | 9439MB |
| faster-whisper | fp16 | 5 | 54s | 4755MB | 3244MB |
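To put the table in relative terms, here is the arithmetic using only the figures above.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    return minutes * 60 + seconds


# Figures from the benchmark table above (fp16, beam size 5)
openai_whisper = {"time_s": to_seconds(4, 30), "gpu_mb": 11325, "cpu_mb": 9439}
faster_whisper = {"time_s": to_seconds(0, 54), "gpu_mb": 4755, "cpu_mb": 3244}

speedup = openai_whisper["time_s"] / faster_whisper["time_s"]         # 270 s / 54 s
gpu_saving = 1 - faster_whisper["gpu_mb"] / openai_whisper["gpu_mb"]  # fraction of GPU memory saved
cpu_saving = 1 - faster_whisper["cpu_mb"] / openai_whisper["cpu_mb"]  # fraction of CPU memory saved

print(f"speedup: {speedup:.1f}x")
print(f"GPU memory saved: {gpu_saving:.0%}")
print(f"CPU memory saved: {cpu_saving:.0%}")
```

This prints a 5.0x speedup, 58% less peak GPU memory and 66% less peak CPU memory for faster-whisper in that benchmark.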

If you want to use an implementation other than faster-whisper, pass the `--whisper_type` argument with the repository name.

Read the wiki for more info about CLI args.
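As an illustration of passing that argument, the sketch below builds the launch command for `app.py`. `build_launch_command` and `launch` are hypothetical helpers, and `"<repository-name>"` is a placeholder: see the wiki for the accepted values.

```python
import subprocess
import sys
from typing import List, Optional, Sequence


def build_launch_command(whisper_type: Optional[str] = None,
                         extra_args: Sequence[str] = ()) -> List[str]:
    """Return the argv for `python app.py [--whisper_type NAME] [extra args...]`."""
    # sys.executable is the running interpreter, so call this from the activated venv
    cmd = [sys.executable, "app.py"]
    if whisper_type is not None:
        cmd += ["--whisper_type", whisper_type]
    cmd += list(extra_args)
    return cmd


def launch(whisper_type: Optional[str] = None) -> None:
    # Run from the repository root, where app.py lives
    subprocess.run(build_launch_command(whisper_type), check=True)
```

For example, `launch("<repository-name>")` after replacing the placeholder with a value from the wiki.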

This is Whisper's original VRAM usage table for models.

| Size | Parameters | English-only model | Multilingual model | Required VRAM | Relative speed |
|---|---|---|---|---|---|
| tiny | 39 M | tiny.en | tiny | ~1 GB | ~32x |
| base | 74 M | base.en | base | ~1 GB | ~16x |
| small | 244 M | small.en | small | ~2 GB | ~6x |
| medium | 769 M | medium.en | medium | ~5 GB | ~2x |
| large | 1550 M | N/A | large | ~10 GB | 1x |
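To pick a model size from this table, one simple rule is to take the largest model whose approximate VRAM requirement fits your GPU. The sketch below encodes the table's VRAM column that way. `pick_model` is a hypothetical helper, and since the figures are approximate (~), leave some headroom in practice.

```python
from typing import Optional

# (size, approx. required VRAM in GB), ordered smallest to largest, from the table above
MODELS = [
    ("tiny", 1),
    ("base", 1),
    ("small", 2),
    ("medium", 5),
    ("large", 10),
]
NO_ENGLISH_ONLY_VARIANT = {"large"}  # the table lists N/A for large's English-only model


def pick_model(vram_gb: float, english_only: bool = False) -> Optional[str]:
    """Largest model name whose approximate VRAM need fits in `vram_gb`, or None."""
    best = None
    for size, required_gb in MODELS:
        if required_gb > vram_gb:
            break  # later entries need even more VRAM
        if english_only:
            if size in NO_ENGLISH_ONLY_VARIANT:
                continue
            best = f"{size}.en"
        else:
            best = size
    return best
```

For example, `pick_model(4)` returns `"small"`, and `pick_model(12, english_only=True)` returns `"medium.en"` because there is no `large.en`.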

`.en` models are English-only. The cool thing is that you can use the Translate to English option with the "large" models!

Any PRs that add new language translations to translation.yaml would be greatly appreciated!