Read detrex Benchmark Paper | Project Page | Cite detrex | deepdataspace

Documentation | Installation | Model Zoo | Awesome DETR | News | Reporting Issues

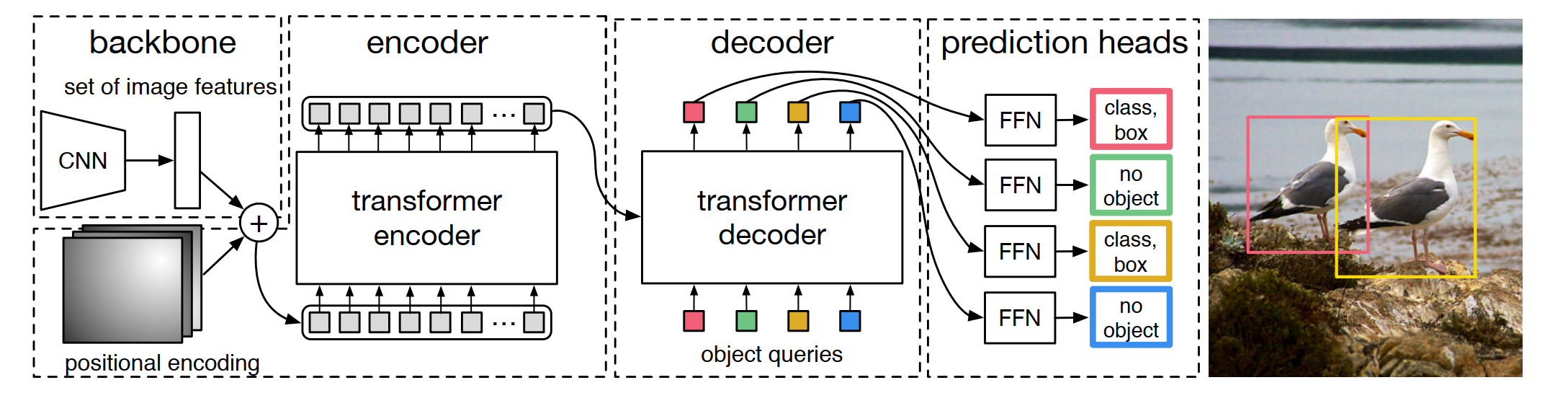

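detrex is an open-source toolbox that provides state-of-the-art Transformer-based detection algorithms. It is built on top of Detectron2, and its module design is partially borrowed from MMDetection and DETR. Many thanks for their well-organized code. The main branch works with PyTorch 1.10 or higher (we recommend PyTorch 1.12).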
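
The version requirement above can be checked programmatically. The following is a minimal sketch and is not part of detrex: the helper names `parse_version` and `check_pytorch_version` are hypothetical, and the code assumes the version string has the shape that `torch.__version__` reports (e.g. `1.12.1+cu113`).

```python
import re
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a PyTorch-style version string.

    Build or local suffixes such as "+cu113" or ".dev20230101" are ignored.
    """
    match = re.match(r"^(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def check_pytorch_version(version: str,
                          minimum: Tuple[int, int] = (1, 10),
                          recommended: Tuple[int, int] = (1, 12)) -> str:
    """Classify a version as 'unsupported', 'supported', or 'recommended'."""
    found = parse_version(version)
    if found < minimum:          # integer tuples compare element by element
        return "unsupported"
    if found == recommended:
        return "recommended"
    return "supported"


print(check_pytorch_version("1.9.0"))         # unsupported
print(check_pytorch_version("1.12.1+cu113"))  # recommended
print(check_pytorch_version("2.0.1"))         # supported
```

In practice you would call `check_pytorch_version(torch.__version__)`. Comparing `(major, minor)` integer tuples rather than raw strings matters here: as strings, `"1.9"` sorts after `"1.10"`.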

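Modular Design. detrex decomposes Transformer-based detection frameworks into various components, which helps users easily build their own customized models.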
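
To illustrate the idea of decomposing a detector into swappable components, here is a toy sketch in plain Python. The class `ModularDetector` and the stage names `backbone`, `neck`, `transformer`, and `head` are hypothetical and do not reproduce detrex's actual API; each stage is modeled as a function over a list of floats so that the example runs without PyTorch.

```python
from dataclasses import dataclass, replace
from typing import Callable, List

# A stage is any function from a feature list to a feature list
# (a toy stand-in for a tensor-processing module).
Stage = Callable[[List[float]], List[float]]


@dataclass(frozen=True)
class ModularDetector:
    """Hypothetical detector assembled from interchangeable stages."""

    backbone: Stage     # extracts features from the raw input
    neck: Stage         # refines or fuses the backbone features
    transformer: Stage  # turns features into per-query outputs
    head: Stage         # maps query outputs to final predictions

    def __call__(self, image: List[float]) -> List[float]:
        features = self.neck(self.backbone(image))
        return self.head(self.transformer(features))


def scale_features(xs: List[float]) -> List[float]:
    return [2 * x for x in xs]


def identity(xs: List[float]) -> List[float]:
    return list(xs)


def shift_features(xs: List[float]) -> List[float]:
    return [x + 1 for x in xs]


def pool_queries(xs: List[float]) -> List[float]:
    return [sum(xs)]


model_a = ModularDetector(scale_features, identity, pool_queries, identity)
# Swap only the neck; every other component is reused unchanged.
model_b = replace(model_a, neck=shift_features)

print(model_a([1.0, 2.0, 3.0]))  # [12.0]
print(model_b([1.0, 2.0, 3.0]))  # [15.0]
```

Because `dataclasses.replace` returns a new instance, `model_a` is left untouched; the point of the sketch is only that changing one component does not require editing the others.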

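Strong Baselines. detrex provides a series of strong baselines for Transformer-based detection models. By optimizing the hyper-parameters of most of the supported algorithms, we have further improved model performance by 0.2 AP to 1.1 AP.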

Easy to Use. detrex is designed to be lightweight and easy to use:

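Apart from detrex, we have also released a repo, Awesome Detection Transformer, which presents papers about Transformers for detection and segmentation.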

The repo name detrex has several interpretations:

detr-ex: We take our hats off to DETR and regard this repo as an extension of Transformer-based detection algorithms.

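det-rex: rex literally means "king" in Latin. We hope this repo can help advance the state of the art in object detection by providing the best Transformer-based detection algorithms from the research community.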

de-t.rex: de means "the" in Dutch. T.rex, also known as Tyrannosaurus rex, means "king of the tyrant lizards" and connects to our research work "DINO", which is short for dinosaur.

v0.5.0 was released on January 16, 2023:

Please refer to changelog.md for details and release history.

Please refer to the Installation Instructions for installation details.

Please refer to Getting Started with detrex for the basic usage of detrex. We also provide other tutorials:

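Although some of the tutorials currently cover relatively simple content, we will keep improving the documentation to provide a better user experience.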

Please refer to the documentation for the full API reference and tutorials.

Results and models are available in the Model Zoo.

Please refer to Projects for details about projects built on detrex.

This project is released under the Apache 2.0 license.

If you use this toolbox in your research or wish to refer to the baseline results published here, please use the following BibTeX entries:

@misc{ren2023detrex,
  title={detrex: Benchmarking Detection Transformers},
  author={Tianhe Ren and Shilong Liu and Feng Li and Hao Zhang and Ailing Zeng and Jie Yang and Xingyu Liao and Ding Jia and Hongyang Li and He Cao and Jianan Wang and Zhaoyang Zeng and Xianbiao Qi and Yuhui Yuan and Jianwei Yang and Lei Zhang},
  year={2023},
  eprint={2306.07265},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

@inproceedings{carion2020end,
  title={End-to-end object detection with transformers},
  author={Carion, Nicolas and Massa, Francisco and Synnaeve, Gabriel and Usunier, Nicolas and Kirillov, Alexander and Zagoruyko, Sergey},
  booktitle={European conference on computer vision},
  pages={213--229},
  year={2020},
  organization={Springer}
}

@inproceedings{zhu2021deformable,
  title={Deformable {DETR}: Deformable Transformers for End-to-End Object Detection},
  author={Xizhou Zhu and Weijie Su and Lewei Lu and Bin Li and Xiaogang Wang and Jifeng Dai},
  booktitle={International Conference on Learning Representations},
  year={2021},
  url={https://openreview.net/forum?id=gZ9hCDWe6ke}
}

@inproceedings{meng2021-CondDETR,
  title={Conditional DETR for Fast Training Convergence},
  author={Meng, Depu and Chen, Xiaokang and Fan, Zejia and Zeng, Gang and Li, Houqiang and Yuan, Yuhui and Sun, Lei and Wang, Jingdong},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision (ICCV)},
  year={2021}
}

@inproceedings{liu2022dabdetr,
  title={{DAB}-{DETR}: Dynamic Anchor Boxes are Better Queries for {DETR}},
  author={Shilong Liu and Feng Li and Hao Zhang and Xiao Yang and Xianbiao Qi and Hang Su and Jun Zhu and Lei Zhang},
  booktitle={International Conference on Learning Representations},
  year={2022},
  url={https://openreview.net/forum?id=oMI9PjOb9Jl}
}

@inproceedings{li2022dn,
  title={Dn-detr: Accelerate detr training by introducing query denoising},
  author={Li, Feng and Zhang, Hao and Liu, Shilong and Guo, Jian and Ni, Lionel M and Zhang, Lei},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={13619--13627},
  year={2022}
}

@inproceedings{zhang2023dino,
  title={{DINO}: {DETR} with Improved DeNoising Anchor Boxes for End-to-End Object Detection},
  author={Hao Zhang and Feng Li and Shilong Liu and Lei Zhang and Hang Su and Jun Zhu and Lionel Ni and Heung-Yeung Shum},
  booktitle={The Eleventh International Conference on Learning Representations},
  year={2023},
  url={https://openreview.net/forum?id=3mRwyG5one}
}

@inproceedings{Chen_2023_ICCV,
  author={Chen, Qiang and Chen, Xiaokang and Wang, Jian and Zhang, Shan and Yao, Kun and Feng, Haocheng and Han, Junyu and Ding, Errui and Zeng, Gang and Wang, Jingdong},
  title={Group DETR: Fast DETR Training with Group-Wise One-to-Many Assignment},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month={October},
  year={2023},
  pages={6633--6642}
}

@inproceedings{Jia_2023_CVPR,
  author={Jia, Ding and Yuan, Yuhui and He, Haodi and Wu, Xiaopei and Yu, Haojun and Lin, Weihong and Sun, Lei and Zhang, Chao and Hu, Han},
  title={DETRs With Hybrid Matching},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month={June},
  year={2023},
  pages={19702--19712}
}

@inproceedings{Li_2023_CVPR,
  author={Li, Feng and Zhang, Hao and Xu, Huaizhe and Liu, Shilong and Zhang, Lei and Ni, Lionel M. and Shum, Heung-Yeung},
  title={Mask DINO: Towards a Unified Transformer-Based Framework for Object Detection and Segmentation},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month={June},
  year={2023},
  pages={3041--3050}
}

@article{yan2023bridging,
  title={Bridging the Gap Between End-to-end and Non-End-to-end Multi-Object Tracking},
  author={Yan, Feng and Luo, Weixin and Zhong, Yujie and Gan, Yiyang and Ma, Lin},
  journal={arXiv preprint arXiv:2305.12724},
  year={2023}
}

@inproceedings{Chen_2023_CVPR,
  author={Chen, Fangyi and Zhang, Han and Hu, Kai and Huang, Yu-Kai and Zhu, Chenchen and Savvides, Marios},
  title={Enhanced Training of Query-Based Object Detection via Selective Query Recollection},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month={June},
  year={2023},
  pages={23756--23765}
}