Large language models (LLMs) are advancing rapidly. A research team from Carnegie Mellon University (CMU) and HuggingFace recently proposed an approach called "Meta Reinforcement Fine-Tuning" (MRT). The technique aims to make large language models use test-time compute more efficiently, especially on complex reasoning tasks.

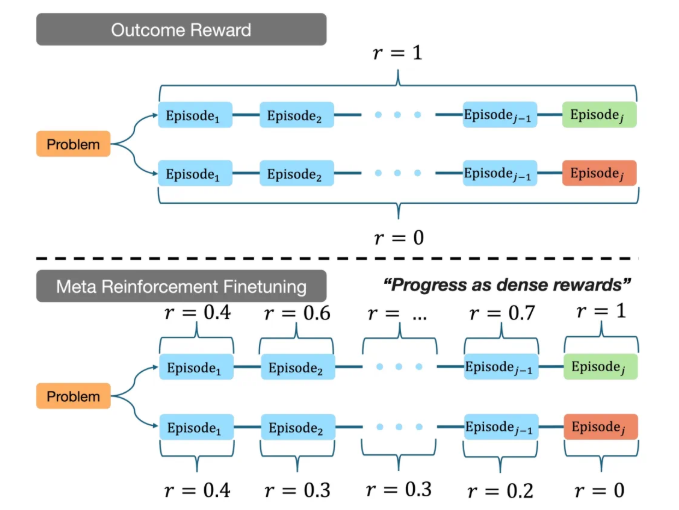

Research shows that existing large language models often consume a lot of computing resources during inference. MRT's goal is to help the model find answers more efficiently within a limited compute budget. The method divides the model's output into multiple segments and uses them to strike a balance between exploration and exploitation. Through training, MRT teaches the model to make full use of what it already knows when facing an unfamiliar problem, while still exploring new problem-solving strategies.
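To make the idea of splitting an output into pieces more concrete, here is a minimal Python sketch. It is not the authors' implementation. It assumes, purely for illustration, that each piece can be scored by how much it changes an estimate of the model's chance of eventually answering correctly, and that this bonus is added to the final outcome reward. The function names, the fixed piece length, and the `alpha` weight are all hypothetical.

```python
from typing import List


def split_into_segments(tokens: List[str], segment_len: int) -> List[List[str]]:
    """Split a token sequence into consecutive pieces of at most segment_len tokens."""
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    return [tokens[i:i + segment_len] for i in range(0, len(tokens), segment_len)]


def progress_bonuses(success_estimates: List[float]) -> List[float]:
    """Per-segment bonus (assumed): change in the estimated success probability.

    success_estimates[0] is the estimate before any segment is produced;
    success_estimates[k] is the estimate after segment k.
    """
    return [after - before
            for before, after in zip(success_estimates, success_estimates[1:])]


def total_reward(outcome: float, success_estimates: List[float],
                 alpha: float = 1.0) -> float:
    """Final outcome reward plus a weighted sum of per-segment bonuses (assumed form)."""
    return outcome + alpha * sum(progress_bonuses(success_estimates))


if __name__ == "__main__":
    segments = split_into_segments(list("abcdefg"), 3)
    print(segments)
    # Hypothetical estimates: the second segment makes no progress.
    print(progress_bonuses([0.25, 0.5, 0.5, 1.0]))
    print(total_reward(1.0, [0.25, 0.5, 0.5, 1.0], alpha=0.5))
```

Under these assumptions, a piece that spends tokens without improving the estimate earns no bonus, which is one way a training signal could discourage wasted compute.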

In the team's experiments, models fine-tuned with MRT performed well on multiple reasoning benchmarks. Compared with conventional outcome-reward reinforcement learning (GRPO), MRT delivered a 2 to 3 times improvement in accuracy and about 1.5 times better token efficiency. These results suggest that MRT can strengthen a model's reasoning ability while also reducing its consumption of computing resources, making it more competitive in practical applications.

The research team also proposed methods for evaluating how effectively existing reasoning models use their compute, providing a useful reference for future research. The work demonstrates the potential of MRT and points to a direction for developing large language models for more complex application scenarios.

With this work, the research team at CMU and HuggingFace has taken an important step forward in AI research, giving models stronger reasoning capabilities and laying a foundation for smarter applications in the future.

Project page: https://cohenqu.github.io/mrt.github.io/